US Delegation Heads to India AI Summit Intent on ‘Domination’

Merve Hickok, Marc Rotenberg / Feb 16, 2026

Merve Hickok and Marc Rotenberg are the president and founder, respectively, of the Center for AI and Digital Policy, a global network of AI policy and human rights experts. Hickok will speak at the India AI Impact Summit. Rotenberg helped draft the OECD AI Principles.

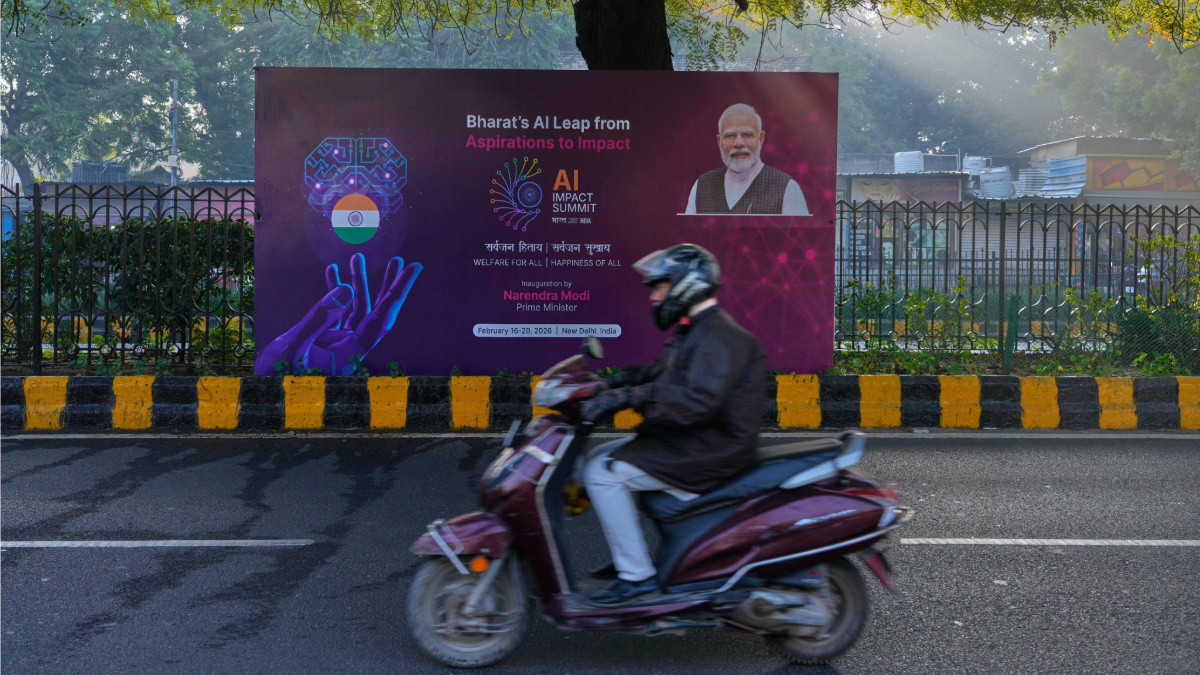

A scooterist drives past a banner announcing the India AI Summit on a road leading to the summit venue in New Delhi, India, Monday, Feb. 16, 2026. (AP Photo/Manish Swarup)

This week, world leaders, civil society organizations, academic experts, and business executives will gather in New Delhi to discuss the future of artificial intelligence. The 2026 India AI Impact Summit has adopted the theme "Shaping AI for Humanity, Inclusive Growth, and Sustainability," reflecting a growing global consensus that AI policy must be aligned with human rights, democratic governance, and long-term social welfare.

The United States has chosen a different approach. Guided by a new AI strategy for what the White House calls "The Great Divergence," the US delegation is expected to promote adoption of the "American stack": American-designed chips, American-controlled networks, and American-developed models and applications. Rather than emphasizing inclusive growth, shared governance frameworks, or international cooperation, the US strategy underscores global "dominance" and technological dependence.

For the last several years, we have studied national AI policies and practices to assess their alignment with democratic values, broadly understood. Our work, published annually as The CAIDP Index – AI and Democratic Values, examines whether countries have established meaningful opportunities for public participation, independent oversight, accountability mechanisms, and protections for fundamental rights. The goal is not to rank technological capacity, but to help policymakers understand the consequences of AI governance choices and to identify emerging global trends. We have also seen remarkable progress in countries as diverse as Brazil, Canada, Spain, and the United Arab Emirates.

In five years of publishing the index, we have not seen a sharper reversal in national AI strategy than the one the US has made over the past year. Several recent developments raise concern, not only for Americans but also for countries that have looked to the US as a partner in responsible AI governance.

One such development is the decision to withdraw from UNESCO, an organization that has played a central role in advancing a global framework for ethical AI. UNESCO’s 2021 Recommendation on the Ethics of Artificial Intelligence, adopted by nearly 200 countries, established baseline commitments on human rights, transparency, accountability, and capacity building. While non-binding, the Recommendation has influenced national legislation, procurement rules, and literacy and capacity strategies worldwide. By stepping away from UNESCO, the US has distanced itself from one of the most widely supported global efforts to shape AI governance.

At the domestic level, the White House has also moved to preempt state AI laws, seeking to prevent states from adopting rules that reflect public support in the US for algorithmic accountability, consumer protection, safety guardrails, and protections for children and creative artists. These developments stand in contrast to the earlier US approach to AI governance.

Our methodology is non-partisan and predates the current political climate. In earlier editions of the CAIDP Index, we reported favorably on US leadership during the first Trump administration. At that time, the US worked with allies at the Organization for Economic Co-operation and Development to establish the OECD AI Principles, the first international framework for AI governance grounded in democratic values. More than 50 OECD and G20 countries have endorsed those principles, which continue to shape AI strategies across Europe, Asia, Africa, and Latin America.

We also reported positively on President Donald Trump’s 2020 executive order emphasizing the responsible deployment of AI across federal agencies. That order highlighted the importance of public trust, risk assessment, and accountability in government use of AI. While imperfect, it reflected an understanding that AI governance is not solely about innovation and competitiveness, but also about public legitimacy and institutional responsibility.

Much has changed since then. With a venture capitalist now setting national AI policy, the current US strategy favors unilateral influence and transactional agreements. The Trump administration intervenes directly in companies' business decisions. Allies are treated as customers rather than partners. Dominance is the word of the day.

At the same time, key federal AI policies that emphasized civil rights protections, impact assessments, and public accountability have been weakened or ignored. The emphasis has shifted toward speed of deployment and market advantage, with less attention to long-term social consequences. Elon Musk’s controversial AI chatbot, Grok, is now deployed in multiple federal agencies.

A global technological network built on interoperability and shared standards, once championed by the US, is being replaced by efforts to promote the American stack. The administration frames its approach as an AI "race" against Chinese competitors. However, this framing has contributed to a growing backlash, under the banner of "digital sovereignty," in which countries seek greater control over data, infrastructure, and AI development within their borders. This is a predictable response to strategies that prioritize dependence over cooperation.

It is possible that the US will secure short-term gains in New Delhi through bilateral agreements for data centers, semiconductor supply chains, or cloud infrastructure. Some countries may see economic benefits in aligning with US firms. But the longer-term trajectory points elsewhere. Many countries are investing in domestic AI capacity, diversifying technology partnerships, and deepening cooperation through international bodies that the US has chosen to abandon.

The risk for the US is not simply lost influence, but diminished credibility. Leadership in AI governance has never rested solely on technological superiority. It has depended on a willingness to engage with others, to accept constraints in the public interest, and to recognize that the legitimacy of AI systems derives from the legal and democratic frameworks that govern their use.

As AI systems become more deeply embedded in public administration, employment, healthcare, and education, countries will seek governance models that promote growth, cooperation, and sustainability. The CAIDP Index, like the upcoming summit in India, reflects this shift in countries around the world.

A vision of the AI future limited to the economic success of one nation is unlikely to endure.
