The Internet Beyond Social Media Thought-Robber Barons

Richard Reisman / Apr 22, 2021

It is now apparent that social media is dangerous for democracy, but few have recognized a simple twist that can put us back on track, says Richard Reisman.

A surgical restructuring -- to an open market strategy that shifts control over our feeds to the users they serve -- is the only practical way to limit the harms and enable the full benefits of social media.

Introduction: A pro-democratic logic for reforming social media

Of the many converging crises that threaten humanity in the early 21st Century, the harms of social media have emerged as among the most insidious, urgent, and poorly understood. There is a growing realization that this technology has taken a wrong turn from early utopian visions of “global community.” Social media are increasingly regarded as an acute threat to democratic society -- and to our ability to solve any problems at all.

While a diversity of proposed remedies vie for attention, there is also deep disagreement on their potential effectiveness and desirability. There is little focus on just what we seek to achieve, with disagreements over “oversight boards” and knotty issues around free speech. These issues may seem intractable -- but if you look beyond the loudest arguments over issues like Section 230, a new consensus is emerging that may yet deliver on the early promise of social media to bring people together, help build consensus and serve as a positive force for democracy.

It is time to step back and decide what we want these powerful tools to do for us -- not to soften the edges, but to reform the core structure of social media platforms. We need social media to help us be more empowered, free, and democratic. We have a diversity of values and viewpoints and we need social media to help us understand one another, seek win-win solutions, and build consensus. Our long-term vision should be to empower society with increasingly powerful “bicycles for the mind” that work for each of us in democratic, self-actualizing ways -- and to foster cooperation.

The social media oligarchies, including Facebook, Twitter, and Google/YouTube, have seduced us – “giving” mechanisms they spent years and billions engineering to "engage" our attention. The problem is that we do not get to steer our attention ourselves. They insist on steering for us, because they get rich selling targeted advertising. Not only do they usurp our autonomy, but they are destroying our ability to arrive at a consensus view of reality and solve problems. Democracy and common sense require that we, the people, each guide our attention where we individually want, and toward the purposes and truths that we value. Some will steer foolishly, but that is how a free society flourishes.

While Western social media lack a drive for human purpose, understanding, and cooperation, democracies are confronted by a growing global competition with China. China seems to be succeeding at building a state-controlled social media ecosystem that promotes its undemocratic techno-authoritarian purpose and drives understanding and consensus to that end. We are beginning to realize that we have allowed private oligarchies to gain too much power over our social media, with no overarching purpose except to drive us to view ads. We have created new thought-robber barons and allowed them to manage our marketplace of ideas (and of values). Is that techno-oligarchism how a democracy should work?

We cannot save ourselves, or compete with China, without recentering on a democratic purpose. But who frames that purpose? As a democratic society, we cannot impose that purpose from the top-down -- we must allow a thousand purposes to bloom and let them compete for support and results. At the same time, society has learned that purely bottom-up processes are too unstable and often destructive to be ungoverned, especially when complexity and dynamics increase. Societies flourish through a dynamic and emergent balancing dialectic between hierarchical and distributed control.

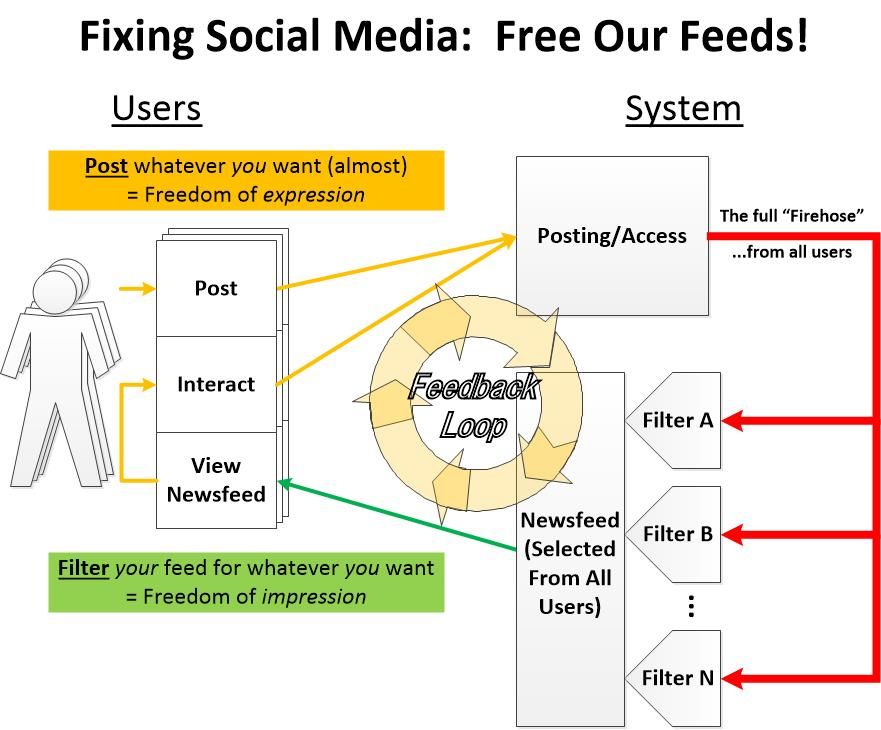

The path through the forest manifests when we look at a society's use of social media from a systems perspective. The essential nature of social media is to drive social feedback loops. We post news items, receive a newsfeed of items others have posted, and interact with them, closing the loop. Social media filters uprank and downrank those items, based partly on how other people interact with them, to select what goes into each of our feeds.

- Feedback loops can be reinforcing or damping -- and if not managed well, can cascade into harmful extremes and instabilities. Controlling feedback loops requires filtering to amplify constructive feedback and to damp out, limit, or negate destructive feedback.

- Now these social feedback loops have been given a quantum leap in speed and reach that greatly amplifies extremes in our interactions, and can lead to extremes in the real world. China manages its social media for its state purpose: to control its citizens. Our oligarchic social media manage for their own purpose: selling ads -- and, secondarily, to keep us happy enough to keep viewing ads and to avoid onerous regulation.
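The difference between a reinforcing and a damping loop can be made concrete with a toy model. The sketch below is only an illustration, not a description of how any platform works: the function name `simulate_loop` and the `gain` parameter (the average number of new viewers each current viewer brings in per round, after filtering) are assumptions introduced here.

```python
def simulate_loop(gain: float, initial_reach: float = 100.0, rounds: int = 10) -> list[float]:
    """Return an item's reach after each round of sharing.

    `gain` is a hypothetical stand-in for the combined effect of user
    resharing and filter upranking or downranking in one round.
    """
    reach = [initial_reach]
    for _ in range(rounds):
        # Each round, the current audience brings in `gain` times as many viewers.
        reach.append(reach[-1] * gain)
    return reach


# A gain above 1 models a reinforcing loop; a gain below 1 models a damped one.
reinforcing = simulate_loop(gain=1.5)
damped = simulate_loop(gain=0.7)

print("reinforcing:", [round(r) for r in reinforcing])
print("damped:     ", [round(r) for r in damped])
```

In this simplified picture, the filter's job is to push the effective gain above 1 for constructive items and below 1 for destructive ones. Who decides which is which is the question the rest of this article addresses.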

Huge network effects bring compound problems. At Internet scale and speed, managing feedback to a whole world of users with diverse interests becomes increasingly difficult and unstable. That is where the fulcrum for an elegant solution pops out. Those network effects apply to posting and accessing items on social media. But filtering what goes into our newsfeed has minimal network effects -- so we can each choose and manage our own filters independently. A breakup of dominant social media platforms not by size, but by function, can allow decentralized filtering, even from a “universal” raw feed source:

- If we divest control of the filters to an open market that users can select from, we break up the feedback loops.

- If users are free to select filtering services that filter in ways that seem valuable to them and serve their purpose, the services are motivated to deliver that user value in order to attract users.

- That enables a new level of virtuous feedback -- diverse services catering to diverse user and community needs to drive feedback to support the purposes of each user and community.

This article explores these proposals for how we can free our feeds -- and how that might work for us, as users, to get us back on the path to a better democracy. It is based on 50 years of my own thinking on what social technology can do, and insights that have emerged from like-minded experts in recent years.

A surgical restructuring can change the course

How can we, the people, take back the steering of our own engagement? Several very similar proposals to do this have emerged, with roots in earlier ideas from technologists. The most prominent and accessible have been published recently in Foreign Affairs and the Wall Street Journal. The following draws on all of these works and my own vision for how to not only protect, but systematically enhance democracy – and to augment human wisdom and social interaction more broadly.

These proposals share much the same diagnosis of a serious breakdown in the proper functioning of our marketplace of ideas and how we achieve consensus on truth:

- The problem of control: The dominant social media platforms selectively control an increasingly large proportion of what we each see of the world, when they should be enabling each of us to have meaningful control for ourselves (in spite of a history of denial, some of them have recently begun to admit to and adjust this to a limited extent). They unilaterally decide which of the many items of available content is amplified and recommended to appear in our individual social media feeds—and which people and groups to suggest we connect to -- with only very limited user input. Clearly society cannot allow a few private companies to have that level of control over our view of the world.

- The problem of incentives: Severely compounding this, these dominant platforms are motivated not to present what we might value seeing, but instead to addictively “engage” us so that we might be tempted to click as many ads as possible. The severity of this conflict of interest is now understood by nearly all who do not profit from not understanding it. A decade of algorithm design has twisted the objective of social media platforms from “connecting people” into targeting lucrative audiences for advertisers. Oligarchies profit obscenely from selling advertising in “free” services.

The core idea of these proposals is to restore the freedom of attention that has been extracted from each of us.

Giving users an open market in filtering services that work within the platforms is the best way to do that -- by enabling competition that stimulates diversity and innovation to meet diverse user desires and purposes. This function can be spun out from the social media services to work as a kind of “middleware,” to be controlled using protocols that interoperate with the core posting and access functions of the social media platforms.

This strategy draws from the differential impact of network effects:

- Because of network effects, these dominant platforms have arguably become essential common utilities to much of the world’s population. Every speaker rightly seeks to be heard by every listener willing to hear them (with very narrow limitations) and every listener wants access to every speaker who interests them. It is widely recognized that this drives toward universality of posting and access. Pernicious as the advertising model is – “if you are not the customer, you are the product” -- few see an alternative way to sustain these services with user revenue. (That is not to say it cannot be done – I have proposed new user-based revenue models designed to do just that, but they are not yet proven. I have also proposed simple new regulatory strategies to “ratchet” the platforms toward user-based revenue models, but that remains a steep regulatory climb.)

- But filtering is personal – we each should be fed what we individually desire, and not what we do not. Each of us needs overall control over how algorithms promote or demote items to or from our attention. That service is largely immune to network effects, and so could be spun out to be done by a multitude of services that manage what we see of the “firehose” of available content and people on these posting/access platforms. Note that some have suggested that algorithmic filtering be outlawed, and we just revert to the raw chronological firehose -- but it is called a firehose because the flow is far too overwhelming to drink from.

The platforms themselves could provide more user control features, and could self-regulate better -- but without a clear decoupling from the advertising model, the temptation to drive toward addictive engagement in order to sell more ads is overwhelming. Regulation could seek to limit those perverse effects, but it would always be swimming against this tide. For those not convinced of that, the authoritative and thorough analysis in The Hype Machine, by Sinan Aral (who leads MIT’s Social Analytics Lab) should erase any doubts. Top that off with recent reporting by Karen Hao in MIT Technology Review on the refusal of Facebook’s Mark Zuckerberg to address the fundamental issues in his business model, whether due to duplicity or just motivated reasoning.

Creation of entirely new social media services with better, more purpose-driven filtering is a promising alternative, but one that faces an uphill climb to achieve critical mass and network effects. And, if these new services do succeed in growing to large scale, they will have the same challenge of filtering in ways that serve their growing diversity of users well.

That leaves only one viable option: proposals for an open market in filtering services that users can choose from, to work in conjunction with their preferred posting/access service. Filtering services could be sustained by a share of the ad revenues of the platforms they work with, charge user fees directly, or simply by serving as brand extensions from existing publishers with known reputations and sensibilities; or indeed even from government (think PBS or BBC) or nonprofits.

This spinout of filtering services would give users control and decouple filtering decisions from advertising. We forget that traditional publishers long recognized the need to separate editorial from advertising. Now that the dominant social media platforms have more power than any traditional publisher and extract obscene profits from the sale of our attention and data, why is there no insistence on such a separation?

Skeptics question how this task of steering could be made simple enough for most users. Of course, managing the details of filtering is not practical for most users to do unaided. That is another reason the platforms have not bothered to offer more than the most basic choices to users. But even if they tried, how diverse could they be? Individual needs vary so widely that no single provider can serve that diversity well. Open, competitive markets excel at serving diverse needs – and untangling incentives. Breaking filtering middleware out as an independent service that interoperates with the platforms enables competition and innovation. A multitude of providers could manage those details in varied ways, to serve diverse interests and tastes, even for small niche markets -- giving users real choice.

New filtering services could range from highly tunable for the few who want that, to largely preset for those who just want to pick a service with a style, focus, or brand they like. Users should be able to change or combine filters whenever they wish. The code base now used for filtering within the platforms might be spun out into one or more new open-source entities to jump start the creation of new filtering services.
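As a rough illustration of what "change or combine filters" could mean in practice, here is a minimal Python sketch. It assumes, purely for illustration, that each filtering service can be modeled as a function that scores an item, and that a user's feed is the top of the firehose under a weighted blend of those scores. The `Item` fields and the names `reputation_scorer`, `topic_scorer`, `combine`, and `build_feed` are hypothetical, not part of any existing platform API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Item:
    item_id: str
    topic: str
    source_reputation: float  # hypothetical score between 0.0 and 1.0


# A filtering service is modeled as a function from an item to a score.
Scorer = Callable[[Item], float]


def reputation_scorer(item: Item) -> float:
    """A preset service that favors items from well-regarded sources."""
    return item.source_reputation


def topic_scorer(preferred: set[str]) -> Scorer:
    """A tunable service that favors topics the user has chosen."""
    def score(item: Item) -> float:
        return 1.0 if item.topic in preferred else 0.0
    return score


def combine(weighted: list[tuple[Scorer, float]]) -> Scorer:
    """Blend several services into one, using user-chosen weights."""
    def score(item: Item) -> float:
        return sum(weight * scorer(item) for scorer, weight in weighted)
    return score


def build_feed(firehose: list[Item], scorer: Scorer, limit: int) -> list[Item]:
    """Select the highest-scoring items from the raw firehose."""
    return sorted(firehose, key=scorer, reverse=True)[:limit]


firehose = [
    Item("a", "science", 0.9),
    Item("b", "celebrity", 0.4),
    Item("c", "science", 0.2),
    Item("d", "local-news", 0.8),
]

# One user blends two services; another simply picks a single preset.
blended = combine([(reputation_scorer, 0.5), (topic_scorer({"science"}), 0.5)])
blended_feed = build_feed(firehose, blended, limit=2)
preset_feed = build_feed(firehose, reputation_scorer, limit=2)

print([item.item_id for item in blended_feed])
print([item.item_id for item in preset_feed])
```

The same firehose yields different feeds for the two users, and either user can swap or reweight services without any change to the posting/access layer.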

There remains a fear that some users would just create their own echo-chamber filter bubbles, focused on QAnon or the like. But hasn’t that always been a problem? Our open marketplace of ideas has always faced this challenge. What has changed is the scale and speed of amplification and connection. By breaking up the filters we will have a diversity of smaller filter bubbles that will damp out the scale of the extremes we now face. And the decoupling from advertising incentives for engagement will no longer motivate against developing measures to reduce incitement and to make filter bubbles less hermetically sealed and polarizing, introducing new kinds of serendipity and collaboration (as explained below).

In many other ways, this kind of hybrid structure provides a more natural architecture for the marketplace of ideas, and for managing the economics of network effects. There is value in a more or less universal substrate for posting and access. That could be a monolithic utility service or an interoperable, distributed database of multiple posting/access services. But the task of filtering is personal and parochial, and so naturally is done best in a decentralized way, with flexible composition of filters from multiple services -- a marketplace of feeds. This can create benefits of open competition and innovation -- and co-opetition.

There are regulatory precedents for similar functional divestitures, and there are models for open, user-driven markets for filtering algorithms that can favor quality and value.

Emerging proposals for an open market in filtering services (...and Twitter buys in?)

All of the proposals described below are aligned in their objectives and basic approach, but details differ in ways that should provide a fertile field for development. And, all of them intend that any separation of filtering from posting/access platforms be mandated only for platforms that exceed some threshold scale, to avoid unnecessary restrictions that might impede innovation and new competitive entrants.

The first wide publication of this concept was late last year, by Francis Fukuyama, Barak Richman, and Ashish Goel in Foreign Affairs, How to Save Democracy From Technology (drawing on a report from a Stanford working group). They suggest these filtering services can work “inside” the platforms, as middleware, using APIs (Application Program Interfaces), and that they could be funded with a revenue share from the platforms. They frame the policy appeal:

…few recognize that the political harms posed by the platforms are more serious than the economic ones. Fewer still have considered a practical way forward: taking away the platforms’ role as gatekeepers of content...inviting a new group of competitive “middleware” companies to enable users to choose how information is presented to them. And it would likely be more effective than a quixotic effort to break these companies up.

A 2/12/21 Wall Street Journal essay by some of the same group, Barak Richman and Francis Fukuyama, How to Quiet the Megaphones of Facebook, Google and Twitter, gives an updated presentation (and cites Twitter’s similar proposals). They highlight:

The great advantage of the “middleware” solution ...is that it does not rely on an unlikely revolution in today’s digital landscape and could be done relatively quickly. …This solution would require the cooperation of the major platforms and new powers for federal regulators, but it is less intrusive than many of the other remedies currently receiving bipartisan support. ...Regulators would have to ensure that the platforms allow middleware to function smoothly and may need to establish revenue-sharing models to make the middle-layer firms viable. But such a shift would allow the platforms to maintain their scope and preserve their business models. ...to continue delivering their core services to users while shedding the duty of policing political speech.

They counter skeptics:

Could middleware further fragment the internet and reinforce the filter bubbles ...Perhaps, but the aim of public policy should not be to stamp out political extremism and conspiracy theories. Rather, it should reduce the outsize power of the large platforms and return to users the power to determine what they see and hear on them.

Middleware offers a structural fix and avoids collateral damage. It will not solve the problems of polarization and disinformation, but it will remove an essential megaphone that has fueled their spread. And it will enhance individual autonomy, leveling the playing field in what should be a democratic marketplace of ideas.

A revenue share need not reduce platform revenue, since better service could yield more activity and more users. The filtering services could best serve users by applying much the same “surveillance capitalism” data that the platforms now use – but subject to controls to restrict that to only the extent users opt-in to permit, and subject to regulatory constraints on privacy and data usage.

Stephen Wolfram proposed a similar solution in testimony to a US Senate subcommittee, with some variations in technical approach that get a bit deeper into the AI (6/29/19):

Why does every aspect of automated content selection have to be done by a single business? Why not open up the pipeline, and create a market in which users can make choices for themselves?

One of my ideas involved introducing what I call “final ranking providers”: third parties who take pre-digested feature vectors from the underlying content platform, then use these to do the final ranking of items in whatever way they want. My other ideas involved introducing “constraint providers”: third parties who provide constraints in the form of computational contracts that are inserted into the machine learning loop of the automated content selection system....fundamentally what they’d have to do is to define—or represent—brands that users would trust to decide what the final list of items in their news feed, or video recommendations, or search results, or whatever, might be.

He also pinpointed key policy advantages:

Social networks get their usefulness by being monolithic: by having “everyone” connected into them. But the point is that...there doesn’t need to be just one monolithic AI that selects content for all the users on the network. Instead, there can be a whole market of AIs...

...right now there’s no consistent market pressure on the final details of how content is selected for users, not least because users aren’t the final customers. ... But if the ecosystem changes, and there are third parties whose sole purpose is to serve users, and to deliver the final content they want, then there’ll start to be real market forces that drive innovation—and potentially add more value.
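Wolfram's “final ranking providers” idea can be sketched in a few lines of Python. This is a simplified reading of the passage quoted above, not his actual design: it assumes the platform exposes a per-item feature vector as a plain dictionary, and that each third-party provider ranks items by a weighted sum of those features. The feature names (`outrage`, `source_reliability`, `novelty`), the provider names, and `make_provider` are all invented for illustration.

```python
# Hypothetical "pre-digested" feature vectors that a platform might expose.
FeatureVector = dict[str, float]

platform_features: dict[str, FeatureVector] = {
    "post-1": {"outrage": 0.9, "source_reliability": 0.2, "novelty": 0.6},
    "post-2": {"outrage": 0.1, "source_reliability": 0.9, "novelty": 0.4},
    "post-3": {"outrage": 0.5, "source_reliability": 0.6, "novelty": 0.9},
}


def make_provider(weights: FeatureVector):
    """Build a ranking provider from the feature weights it cares about."""
    def rank(features: dict[str, FeatureVector]) -> list[str]:
        def score(item_id: str) -> float:
            # Features the provider does not weight contribute nothing.
            return sum(
                weights.get(name, 0.0) * value
                for name, value in features[item_id].items()
            )
        return sorted(features, key=score, reverse=True)
    return rank


# Two competing providers with different ideas of what users value.
calm_news = make_provider({"outrage": -1.0, "source_reliability": 1.0})
discovery = make_provider({"novelty": 1.0, "source_reliability": 0.5})

print("calm_news:", calm_news(platform_features))
print("discovery:", discovery(platform_features))
```

The platform keeps its monolithic network and computes the features once; only the final ordering is handed to whichever provider the user trusts.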

Mike Masnick offers a similar proposal, with a more radical technical/structural approach, in an 8/21/19 Knight Institute paper, aptly titled “Protocols, Not Platforms: A Technological Approach to Free Speech” (building on his work from 7/17/15). Masnick applies the idea of openness, interoperability, diversity, and user choice to all components of social media, using a model of open protocols much like those used with great success for Internet email and for the Web. He also reports such architectures were considered previously to some extent by Twitter and Reddit. Instead of relying on the code of any one company for social media, anyone should be able to develop code that does filtering and interoperates with other services, including the posting and user databases, and the user interfaces. He also nicely explains the broad attraction of this kind of open diversity in service infrastructure to support the open diversity we depend on for our marketplace(s) of ideas. And he notes that the choice between platforms and protocols is not either/or, but a spectrum.

Jack Dorsey, Twitter CEO, offers perhaps the greatest validation of these ideas, tweeting on 12/11/19 that “Twitter is funding a small independent team of up to five open source architects, engineers, and designers to develop an open and decentralized standard for social media. The goal is for Twitter to ultimately be a client of this standard.” He reports that early on Twitter was very open and had thoughts of becoming a protocol, and now he is returning to those ideas given the “entirely new challenges centralized solutions are struggling to meet. For instance, centralized enforcement of global policy to address abuse and misleading information is unlikely to scale over the long-term without placing far too much burden on people.” He cites Wolfram and Masnick for having “reminded us of a credible path forward.”

Dorsey expanded on this “Bluesky” project in his 2/9/21 earnings call, making arguments about the advantages of decentralization and diversity in filtering services to present distinct views of a centralized “corpus of conversation” to “suit different people’s needs” that are closely aligned with those presented here.

Of course, some observers reasonably question whether this is more than a public relations effort, but he makes a point that “this is something we believe in”:

… one of the things we brought up last year in our Senate testimonies … is giving more people choice around what relevance algorithms they're using, ranking algorithms they're using. You can imagine a more market-driven and marketplace approach to algorithms. And that is something that not only we can host, but we can participate in.

… the thing that gets me really excited about all of this is we will have access to a much larger conversation, have access to much more content, and we'll be able to put many more ranking algorithms that suit different people's needs on top of it. And you can imagine an app store-like view of ranking algorithms that give people optimal flexibility in terms of how they see it. And that will not only help our business, but drive more people into participating in social media in the first place. So this is something we believe in, not just from an open Internet standpoint, but also we believe it's important and it really helps our business thrive in a significantly new way, given how much bigger it could be.

It seems that Dorsey advocates, much like Masnick, that not just filtering, but rather the posting/access core of social media services should be open and interoperable -- and that the open filtering services might be usable across multiple posting/access services. Dorsey’s 3/25/21 House testimony on Bluesky seems to make that more explicit:

Bluesky will eventually allow Twitter and other companies to contribute to and access open recommendation algorithms that promote healthy conversation and ultimately provide individuals greater choice. These standards will support innovation, making it easier for startups to address issues like abuse and hate speech at a lower cost. Since these standards will be open and transparent, our hope is that they will contribute to greater trust on the part of the individuals who use our service. This effort is emergent, complex, and unprecedented, and therefore it will take time. However, we are excited by its potential and will continue to provide the necessary exploratory resources to push this project forward.

Contrasting these proposals, and tempering idealism with pragmatism, there are advantages of fuller independence in Masnick’s protocol argument, and some hope for that in Dorsey’s apparent support. But given how far down the platform road we have gone, the more code-based middleware/API variation suggested by the Stanford group and Wolfram might be more readily achievable in the near term. That leads to the possibility of a step-wise correction, first as code-based, and then gradually opened more fully with protocols.

Protocols, APIs, and middleware all relate to enabling functional modularity, a best practice in system design. The idea is to decompose systems into smaller functional components that can be designed, managed, and substituted without changing the other components they work with. To the extent social media systems are well designed, these techniques facilitate the proposed divestiture and flexible selection of alternative filtering components. As a semantic detail, some view the term “middleware” as being code-based, and thus not including pure protocols. I use the term broadly as a mediating capability that can be enabled anywhere on the protocol/code spectrum – in this case, mediating between the firehose and the filtered feeds designed to be open and user-controlled.

Nick Clegg, Facebook’s VP of Global Affairs, put out a telling counterpoint to these proposals (perhaps without any awareness of them) in a 3/31/21 article, It Takes Two to Tango. He defends Facebook in most respects, but accepts the view that users need more transparency and control:

You should be able to better understand how the ranking algorithms work and why they make particular decisions, and you should have more control over the content that is shown to you. You should be able to talk back to the algorithm and consciously adjust or ignore the predictions it makes — to alter your personal algorithm…

He goes on to describe changes Facebook has just made, with further moves in that direction intended. But he begs the question of how these can be more than Band-Aids covering the deeper problem. Putting the onus on us -- “We need to look at ourselves in the mirror…” -- he goes on (emphasis added):

…These are profound questions — and ones that shouldn’t be left to technology companies to answer on their own…Promoting individual agency is the easy bit. Identifying content which is harmful and keeping it off the internet is challenging, but doable. But agreeing on what constitutes the collective good is very hard indeed.

Exactly the point of these proposals! No private company can be permitted to attempt that, even under the most careful regulation -- especially in a democracy. That is especially true for a dominant social media service. Further, slow-moving regulation cannot be effective in an age of dynamic change. We need a free market in filters from a diversity of providers -- for users to choose from. Twitter seems to understand that; Facebook seems not to.

Two recent commentaries refer to these proposals with some reservations. Lucas Matney's Twitter’s decentralized future (1/15/21, TechCrunch) raises the dark side: "[Twitter’s] vision of a sweeping open standard could also be the far-right’s internet endgame," suggesting that “…platforms like Parler or Gab could theoretically rebuild their networks on bluesky, benefitting from its stability and the network effects of an open protocol.” I see those concerns as valid and important to deal with, but ultimately manageable and necessary in a free society, and addressable by an open filtering approach, as explained below. And some of the comments from the Bluesky team that he reports on are very encouraging in suggesting that other platforms may see this as a solution to their “really thorny moderation problems.”

The recent Atlantic article by Anne Applebaum and Peter Pomerantsev broadly reviews the need for “reconstructing the public sphere” and summarizes the Stanford group proposal. It raises the general concern that algorithms are not a static thing to control, but a new kind of thing that interacts with humans, and changes “much like the bacteria in our intestines.” They report that “when users on Reddit worked together to promote news from reliable sources, the Reddit algorithm itself began to prioritize higher-quality content. That observation could point us in a better direction for internet governance.” That is one of the key strengths of what I propose below – not only should the filters be open, but they should be emergent and dynamic, to fully augment human intelligence, not replace it. That is the essence of feedback control when humans are in the loop.

My own proposals for open filtering have incubated since around 1970 and drew on my exposure to an open market for filtering in the financial market data industry around 1990 (in the form of analytics). These ideas took full shape in a design for a massively social decision support system for open innovation in 2002-3, which incorporated most of the features suggested here. I updated that to address the broader collaboration of social media over the last decade, most fully in 2018 on filtering algorithms and on the broader architecture. I have generally been agnostic as to questions of pure protocol vs code-based modularity, going beyond the suggestion of enabling open markets in filtering to detail strategies for how we can filter for quality and purpose. Those are based on nuanced social media reputation systems that have the express purpose of seeking quality of content and connections in ways that users can select and vary. They build on the success of Google’s PageRank algorithm for inferring and weighting human judgments of reputation to rank search results for quality and relevance. The next two sections draw on that to offer a vision of how this kind of open market in filtering can apply such strategies to transform social media so it becomes a boon to society, rather than a threat.

These proposals aim to redirect technology and policy to protect both safety and freedom – and to seek the lost promise. These proposals will likely be best managed with ongoing oversight by a specialized Digital Regulatory Agency that can work with industry and academic experts, much like the SEC and the FCC, but with different expertise. My focus here is not on regulation itself (I address that elsewhere), but on a normative vision for uses of this technology that we should regulate toward, so that better business models and competition can drive progress toward consumer and social welfare. The following sections expand on how this vision might affect all of us.

Part 1: A vision of what an open market in filtering services enables

Paradise lost sight of -- filtering for social truth and value by augmenting human intelligence

Imagine how different our online world would be with open and innovative filtering services that empower each user. Humans have evolved ourselves and our society to test for and establish truth in a social context, because we cannot possibly have direct knowledge of everything that matters to us (“epistemic dependence”). Renée DiResta explains in “Mediating Consent” how these social processes have been both challenged and enhanced by advances in technology from Gutenberg to social media. Social media can augment similar processes in our digital social context to determine what content to show us, and what people (or groups) to suggest we connect with.

What do we want done with that control? We do not want to rank on “engagement” (how much time we spend glued to our screens) or on whose ad we will be disposed to click on -- but what criteria should apply? Surely, we can do better than just counting “likes” from everyone equally, regardless of who they are and whether they read and considered an item, or just mindlessly reacted to a clickbait headline.

Consider how the nuanced and layered process of mediating consent that society has evolved over millennia has been lost in our digital feeds. Do people and institutions with reputations we trust in agree on a truth? Should we trust in them because we trust others who trust in them? Can we apply this within small communities -- and more broadly? That is how science works – as do political consensus and scholarly citation analysis. That is how we decide who and what to listen to and to believe. Society is no stranger to “Popular Delusions and the Madness of Crowds.”

Technology already succeeded at extending this kind of process into Google’s original PageRank search algorithm, weighing billions of human evaluations at Internet speed and scale -- links in to Web pages signal both quality and relevance. Social media feeds can empower users to mediate consent in ways they and their communities favor. Filtering algorithms can make inferences based on the plethora of information quality “signals” of human judgments that social media produce in the normal course of operation (clicks, likes, shares, comments, etc.) and can combine that with rudimentary understanding of content. Much as PageRank does, they can factor in the reputations of those providing the signals, augmenting what humans have always done to decide what to pay attention to and which people and groups to connect with. AI and ML (artificial intelligence and machine learning) have their place, but for mediating consent in social media, it is to augment human wisdom, not to replace it. The same applies to selecting for emotions, attitudes, values, and relationships.

To be effective and scalable, reputation and rating systems must go beyond simplistic popularity metrics (mob rule) or empaneled raters (expert rule). To socially mediate consensus in an enlightened democratic society, reputation must be organically emergent from that society. Algorithms can draw on both explicit and implicit signals of human judgement and reputation, to rate the raters and weight the ratings -- in transparent but privacy-protective ways (as I have detailed elsewhere). Better and more transparent tools could help us consider the reputations behind the postings -- to make us smarter and connect us more constructively. We could factor in multiple levels of reputation, giving more weight to the judgments of those whom people we respect consider worth hearing (not just the most “liked” by whoever). We could favor content from people and publishers we view as reputable. And we can factor in human curation as desired.
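
To make the idea of rating the raters and weighting the ratings concrete, here is a minimal Python sketch of reputation-weighted scoring. It is an illustration only, not a description of any deployed system: the `Signal` type, the `weighted_score` function, the reputation values, and the default weight for unknown users are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Signal:
    """One human judgment about an item (hypothetical structure)."""
    user: str     # who produced the signal
    value: float  # +1.0 for an endorsement, -1.0 for a rejection


def weighted_score(signals: Iterable[Signal],
                   reputation: Dict[str, float],
                   default_rep: float = 0.1) -> float:
    """Return the reputation-weighted average of signal values.

    Users missing from `reputation` get `default_rep` (an assumed value),
    so unknown accounts count for little but are not ignored.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for s in signals:
        w = reputation.get(s.user, default_rep)
        weighted_sum += w * s.value
        total_weight += w
    # With no signals (or zero total weight) the item stays neutral.
    return weighted_sum / total_weight if total_weight else 0.0


# Toy data: one well-regarded rater rejects the item, two unknowns like it.
reputation = {"ana": 0.9, "bo": 0.1, "cy": 0.1}
signals = [Signal("ana", -1.0), Signal("bo", 1.0), Signal("cy", 1.0)]

plain_average = sum(s.value for s in signals) / len(signals)
print(round(plain_average, 2))                        # 0.33
print(round(weighted_score(signals, reputation), 2))  # -0.64
```

In this toy case a simple count of likes favors the item two to one, but the one highly reputed rater outweighs the two low-reputation accounts, so the weighted score is negative. A real service would also have to derive the reputation values themselves from human signals, which this sketch simply takes as given.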

Such mechanisms can help us understand the world as we choose to view it, and to understand and accept that other points of view may be reasonable. Fact-checking and warning labels often just increase polarization, but if someone we trust takes a contrary view we might think twice. Filters could seek those “surprising validators” and sources of serendipity that offer new angles, without burying us in noise.

To make reputation-based filtering more effective, the platforms should better manage user identity. Platforms could allow for anonymous users with arbitrary and opaque alias names, as desirable to protect free speech, but explicitly distinguish among multiple levels of identity verification (and explicitly distinguish human versus bot). Weighting of reputation could reflect both how well validated a user identity is, and how much history there is behind their reputation. This would help filter out bad actors and bots in accord with standards that users choose.

Now the advertiser-funded oligopoly platforms perversely apply similar kinds of signals with great finesse to serve their own ends. A Facebook engineer lamented in 2011 that “The best minds of my generation are thinking about how to make people click ads.” They have engineered Facebook and Twitter and the rest to work as digital Skinner boxes, in which we are the lab rats fed stimuli to run the clickbait treadmill that earns their profits. We cannot expect or entrust them to redirect that treadmill to serve our ends -- even with increased regulation and transparency. If revenue primarily depends on selling ads, efforts to counter that incentive to favor quality over engagement swim against an overwhelming tide. That need not be malice, but human nature: “It is difficult to get a man to understand something, when his salary depends on his not understanding it.” (See Karen Hao's reporting for evidence of this pathology. For more background, see the recent Facebook disclosure, “How machine learning powers Facebook’s News Feed ranking algorithm,” and the critique of that in Tech Policy Press.)

Driving our own filters

Users should be able to combine filtering services to be selective in multiple ways, favoring some attributes and disfavoring others. Algorithms can draw on human judgements to filter undesirable items out of our feeds, by downranking them, and recommend desirable items in, by upranking them. Given the firehose of content that humans cannot keep up with, filters need rarely do an absolute block (censorship) or an absolute must-see. Instead, interoperable filters can be composed by each user with just a few simple selections -- so their multiple filtering service suggestions are weighted together to present a composite feed of the most desired items.
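
The composition described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: each filtering service is modeled as a hypothetical function that scores an item between -1 (downrank) and +1 (uprank), and the user's selections are a dictionary of weights. The names `compose_feed`, `civility_screen`, and `science_recommender` are invented for illustration.

```python
from typing import Callable, Dict, List

Item = Dict[str, object]
FilterFn = Callable[[Item], float]  # returns a score in [-1, 1]


def civility_screen(item: Item) -> float:
    """Hypothetical screening service: downranks items flagged as toxic."""
    return -1.0 if item.get("toxic") else 0.0


def science_recommender(item: Item) -> float:
    """Hypothetical recommender: upranks items on a topic the user likes."""
    return 1.0 if item.get("topic") == "science" else 0.0


def compose_feed(items: List[Item],
                 filters: Dict[str, FilterFn],
                 weights: Dict[str, float],
                 top_n: int = 10) -> List[Item]:
    """Rank items by the weighted sum of all selected filter scores.

    Nothing is blocked outright: low-scoring items simply sink in the
    ranking, and only the top `top_n` are shown.
    """
    def composite(item: Item) -> float:
        return sum(weights.get(name, 0.0) * f(item)
                   for name, f in filters.items())

    # sorted() is stable, so ties keep their original order.
    return sorted(items, key=composite, reverse=True)[:top_n]


items = [
    {"id": "a", "topic": "science", "toxic": False},
    {"id": "b", "topic": "sports", "toxic": False},
    {"id": "c", "topic": "science", "toxic": True},
]
filters = {"screen": civility_screen, "science": science_recommender}

strict = compose_feed(items, filters, {"screen": 2.0, "science": 1.0})
print([i["id"] for i in strict])  # ['a', 'b', 'c']

loose = compose_feed(items, filters, {"screen": 0.0, "science": 1.0})
print([i["id"] for i in loose])   # ['a', 'c', 'b']
```

Turning the screening weight down to zero lets the flagged item rise again, which is the sense in which each user, not the platform, sets how permeable the filter is.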

That way we can create our own “walled garden,” yet make the walls as permeable as we choose, based on the set of ranking services we activate at any given time. Those may include specialized screening services that downrank items likely to be undesirable, and specialized recommenders to uprank items corresponding to our tastes in information, entertainment, socializing, and whatever special interests we have. Such services could come from new kinds of providers, or from publishers, community organizations, and even friends or other people we follow. Services much like app stores might be needed to help users easily select and control their middleware services at a basic level -- or with more advanced personalization. We have open markets in “adtech” – why not “feedtech?”

Some have suggested that filtering should be prohibited from using algorithms and personal data signals—that would limit abusive targeting but would also cripple the power of filters to positively serve each user. Better to 1) motivate filtering services to do good, and 2) develop privacy-protective methods to apply whatever signals can be useful in ways that prevent undue risk. To the extent that user postings, comments, likes, and shares are public (or shared among connections), it is only more private signals like clickstreams and dwell time that would need protection. Filtering services could be required to serve as fiduciaries, with enforceable contracts to use personal data only opaquely, and only for purposes of filtering.

Filtering services might be offered by familiar publishers, community groups, friends, and other influencers we trust. Established publishers could extend their brands to re-energize their profitability (now seriously impaired by platform control): New York Times, Wall Street Journal, or local newspapers; CNN, Fox, or PBS; Atlantic or Cosmopolitan, Wired or People; sports leagues, ACLU, NRA, or church groups. Publishers and review services like Consumer Reports or Wirecutter can offer recommendations. Or if lazy, we could select among omnibus filters to set a single default, much as we select a default search engine. But even there, we could select from a diversity of such offerings, because network effects would be minimal.

Users should be able to easily “shift gears,” sliding filters up or down to accommodate changes in their flow of tasks, moods, and interests. Right now, do you want to see items that stimulate lean-forward thinking or indulge in lean-back relaxation? – to be more or less open to items that stimulate fresh thinking? Just turn some filtering services up and others down. Save desired combinations. Swap a ‘work’ setting for a ‘relax’ setting. Filter suites could be shared and modified like music playlists, and could learn with simple feedback like Pandora. Or, just choose one trusted master service to make all those decisions.

Filter-driven downranking could also drive mechanisms to introduce friction, slow viral spread of abusive items, and precisely target fact-checks and warning labels for maximum effect. Friction could include such measures as adding delays on promotion of questionable items and downranking likes or shares done too quickly to have read more than the headline. Society has always done best when the marketplace of ideas is open, and oversight is by reason and community influence, not repression. It is social media’s recommendations of harmful content and groups that are so pernicious, far more than any unseen presence of such content and groups.

Echo chambers, filter bubbles, and other mechanisms of polarization in social media are complex and often oversimplified. Sociologist Chris Bail argues that “social media is really not about a competition of ideas, but it’s about a competition of our identities” -- not so much echoes or filtering of ideas, but of emotions, attitudes, values, and relationships. He notes that cross-fertilization can backfire, increasing polarization instead of reducing it, and makes the important point that “identity is so central to polarization,” describing social media as distorting “prisms.” He proposes a number of tactics that can be implemented to help counter that distortion. Many of those tactics are aligned with the design strategies for more positive filters and related human signaling outlined here.

That is a matter of defining the filtering algorithm’s “objective function” to match its human purpose. Engineers, psychologists and social scientists can collaborate to design to accommodate the desired ends of the users of their services, and to replace “dark patterns” with beneficial ones. Government regulators and civic organizations might offer guidelines and tools to support that -- in the form of soft nudging toward a mediated consent that emerges democratically, in an open market, from the bottom up. There are no panaceas to this many-faceted problem that predates social media, but we can at least seek to avoid amplifying these destructive feedback loops -- and can better align incentives to support that.

Filtering services can emerge to shine sunlight on blindness to reality and good sense. They can entice reasonable people to cast a wider net and think critically. Simple fact-checking often fails, as does simple exposure to opposing views, because when falsehoods are denied from outside our communities of identity and belief, confirmation bias increases polarization. But, as Cass Sunstein suggested, when someone trusted within our group challenges a belief, we stop and think. Algorithms can identify and alert us to these “surprising validators” of opposing views. A notable proof point is the Redirect Method experiments in dissuading potential ISIS sympathizers by presenting critical videos made by former members. Bail’s bots do much the same, retweeting to liberals items from conservatives that have resonated with other liberals, and vice versa. As Derek Robertson’s commentary on QAnon suggests, “...more than censoring or ignoring such a thing, those who would oppose it should be prepared to make its followers a more appealing offer.” With the right incentives and design strategies, purpose-driven filters might learn to nurture this more constructive kind of feedback. Clever filtering can also augment serendipity, cross-fertilizing across information silos and surfacing fringe ideas that deserve wider consideration (Galileo’s “and yet it moves”). Clever design can enlist people to help with that, much like Wikipedia -- and even gamify it. America has a strong tradition of free expression for a reason. This is not just naïve idealism – the Inquisition and Index of Prohibited Books were long ago found to be counterproductive.

User-driven markets for filters can also better serve local community needs within the global village. As Emily Bell explained, “Facebook’s attempts to produce a “scalable” content moderation strategy for global speech has been a miserable failure, as it was always doomed to be, because speech is culturally sensitive and context specific.” Network effects drive global platforms, but filtering should be local and adaptive to community standards and to national laws and norms. US, EU and Chinese filters can be different, but open societies would make it easy to swap alternative filters in when desired. The Wall Street Journal’s “Blue Feed, Red Feed” strikingly demonstrated how different the Net can look through another’s eyes. Any community of thought – religion, politics, culture, profession, hobby – could enable its members to filter in accord with their community standards, at user-variable levels of selectivity, without imposing those standards on those who seek broader horizons.

A compelling case for much greater user control of our own filters is assembled by Molly Land in The Problem of Platform Law: Pluralistic Legal Ordering on Social Media. She makes the case that now, social media users are subjected to “platform law,” which from a human rights perspective is “both overbroad and underinclusive.” It threatens diversity, the rule of law and accountability, and impedes pluralistic decision-making. Instead, “technological design that allows individuals greater choice over the norms by which they want to be governed…would enable each individual to become a decision maker with respect to the information and expression they want to consume.” This could go well beyond tinkering with curation to provide a dynamically emergent kind of “community law.” To the risk of self-imposed filter bubbles, she counters that “Allowing or even requiring users to decide for themselves which perspectives they prefer to hear will make more transparent the fact that a choice is being made.”

The question of “censorship” of ideas on private platforms deserves clarification. I use the word in a practical sense. Of course private social media companies are now legally free to limit who uses their platform, and that is legally not censorship. But when a platform dominates an important medium, it has the practical effect of censorship. Dominant social media are becoming essential utilities, and at least some kind of “common carrier” regulation that provides for “equal carriage” in posting -- but not equal reach in filtering -- may be needed.

Part 2: How an open market for filtering services addresses specific problems

Let’s review how an open market for filtering services addresses misinformation, virality, harmful content, “splinternets”, and exploitative business incentives.

A cognitive immune system for misinformation

This augmentation of human intelligence signals also promises a powerful solution to the seemingly intractable problem of detecting misinformation and disinformation by either pure AI/ML or human curation alone. AI/ML will not be up to the task any time soon, and designated expert moderators cannot deal with the scale or immediacy of the problems -- and are widely suspect as being biased in any case. But clever augmented intelligence algorithms can crowdsource human curation to be nearly as accurate as experts, as shown in studies by Pennycook and Rand, and by Epstein, Pennycook and Rand. Such methods are clearly superior to formal fact-checking as to speed, cost, and scalability. Think of this as a cognitive immune system that integrates signals emerging from a diversity of distributed sensors, in much the same way that our bodies coordinate an emergently learned response to pathogens as a kind of distributed intelligence. This can be used to drive circuit breakers to stop harmful virality, as described below. This too is being explored by Twitter in an initiative they call “Birdwatch.”

While Birdwatch and the studies cited above rely on explicit quality ratings from users, much the same could be done from a very much wider body of users, with no added user effort, by inference from the positive or negative reputations of those who like or share a given item. Social media already know who the malefactors are – they just have little motivation to care. This kind of inference may be imprecise, but massive numbers of signals can wash out the errors.

Note that this approach can also apply contextual nuance to avoid the pitfalls of seeking authoritative global truth, by being sensitive to “community law” standards and worldviews. Much as described above for surprising validators, augmentative algorithms can assess which human judgments come from which communities, and temper filtering and immune responses to be loose or restrictive accordingly.

That is exactly how augmented intelligence can shine. Only humans can understand all the nuance, but algorithms can learn to harvest and apply that wisdom. They can learn to read the signals from the humans to augment and amplify the positive dynamic of human mediation of consent. Then, they can feed that back to us humans – in our feeds. Instead of continuing to feed what Jeff Bezos referred to as “a nuance destruction machine,” we can augment human nuance with a virtuous feedback loop of “man-computer symbiosis.”

Filters and circuit breakers -- parallels in financial marketplaces

The parallels between our marketplace of ideas and our financial markets run deep. There is much to learn from and adapt, both at a technical and a regulatory level. Both kinds of markets require distilling the wisdom of the crowd -- and limiting the madness. The Reddit-inspired GameStop bubble that triggered circuit breakers in trading this January reminded us that the marketplaces of ideas and of securities feed into one another. The sensitivity of financial markets to information and volatility has driven development of sophisticated control regimes designed to keep the markets free and fair while limiting harmful instabilities. For nearly a century it has been the continually evolving mission of the SEC, working with exchanges/dealers/brokers/clearinghouses, to keep the markets free of manipulation -- free to be volatile, but subject to basic ground rules -- and the occasional temporary imposition of circuit-breakers.

Regulatory mechanisms properly apply much more loosely to our marketplace of ideas. But our social media markets of ideas need circuit breakers to limit instabilities by reducing extremes of velocity, without permanently constraining speech (unless clearly illegal or harmful). That suggests social media restrictions on postings can be rare, just as individual securities trades can be at foolish prices. Circuit breakers are applied in securities trading when the velocity of trades leads to such large and rapid market swings that decisions by large numbers of people become reactive and likely to lose touch with reality. Those market pauses give participants time to consider available information and regain an orderly flow in whatever direction then seems sensible to the participants. There is a similar need for friction and pauses in social media virality.

User-controlled filtering that serves each user should be the primary control on what we see, but the financial market analog supports the idea that circuit breakers are sometimes needed in social media. Filters controlled by individual users will not, themselves, limit flows to users who have different filters. To control excesses of viral velocity, access and sharing must be throttled across a critical mass of all filters that are in use.

The specific variables relevant to the guardrails needed for our marketplace of ideas are different from those in financial markets, but analogous. Broad throttling can be done by coordinating the platform posting and access functions using network-wide traffic data -- plus consolidated feedback on quality metrics from the filters, combined with velocity data from the platforms. As noted above, this crowdsourced quality data could drive a cognitive immune system to protect against misinformation and disinformation at Internet speed and scale.

A standard interface protocol could enable the filters to report problematic items. Such reports could be sent back to the platforms that are sourcing them, or to an Internet-wide coordination service, so it can be determined when such reports reach a threshold level that requires a circuit breaker to introduce friction into the user interfaces and delays in sharing. Signaling protocols could support sharing among the platforms and all the filtering services to coordinate warnings that downranking, delays, or other controls might be desired. To preserve individual user freedom, users might be free to opt-out of having their filters adhere to some or all such warnings.
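
One way such a threshold rule might work is sketched below in Python. It assumes, hypothetically, that each filtering service reports an item with a timestamp, that the breaker trips when enough distinct services report the same item within a sliding time window, and that tripping adds a delay to sharing rather than blocking it. The class name, thresholds, and delay are illustrative assumptions, not part of any existing protocol.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Tuple


class CircuitBreaker:
    """Toy coordination service that counts filter reports per item."""

    def __init__(self, report_threshold: int = 3,
                 window_seconds: float = 600.0) -> None:
        self.report_threshold = report_threshold
        self.window_seconds = window_seconds
        # item_id -> queue of (timestamp, filter_id), oldest first
        self._reports: Dict[str, Deque[Tuple[float, str]]] = defaultdict(deque)

    def report(self, item_id: str, filter_id: str, now: float) -> None:
        """Record that a filtering service flagged an item at time `now`."""
        self._reports[item_id].append((now, filter_id))

    def is_tripped(self, item_id: str, now: float) -> bool:
        """True if enough distinct filters reported the item recently."""
        reports = self._reports[item_id]
        # Drop reports that have aged out of the sliding window.
        while reports and now - reports[0][0] > self.window_seconds:
            reports.popleft()
        distinct_filters = {filter_id for _, filter_id in reports}
        return len(distinct_filters) >= self.report_threshold

    def share_delay(self, item_id: str, now: float,
                    user_opted_out: bool = False,
                    delay_seconds: float = 300.0) -> float:
        """Seconds of friction to add before a share goes through."""
        if user_opted_out or not self.is_tripped(item_id, now):
            return 0.0
        return delay_seconds


breaker = CircuitBreaker(report_threshold=2, window_seconds=60.0)
breaker.report("post-1", "filter-A", now=0.0)
breaker.report("post-1", "filter-B", now=10.0)
print(breaker.share_delay("post-1", now=11.0))                       # 300.0
print(breaker.share_delay("post-1", now=11.0, user_opted_out=True))  # 0.0
print(breaker.share_delay("post-1", now=100.0))                      # 0.0
```

Because the reports expire, the pause is temporary, much like a trading halt: once the flagging subsides, sharing returns to normal without any permanent restriction on the item.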

Much as financial market circuit breakers are invoked by exchanges or clearinghouses in accord with oversight by the SEC, social media circuit breakers might be invoked by the network subsystems, in accord with oversight by a Digital Regulatory Agency, based on information flows across this new digital information market ecology.

Harmful content: controls and liability

User control of filters enables society to again rely primarily on the best kind of censorship: self-censorship -- and helps cut through much of the confusion that surrounds the current controversy over whether Section 230 of the Communications Decency Act of 1996 should be repealed or modified to remove the safe harbor that limits the liability of the platforms. Many knowledgeable observers argue that Section 230 should not be repealed, but some advocate modifications to limit amplification (including writers from AOL, Facebook policy and Facebook data science). Harold Feld of Public Knowledge argues in The Case for the Digital Platform Act that “elimination of Section 230 would do little to get at the kinds of harmful speech increasingly targeted by advocates” and is “irrelevant” to the issues of harmful speech on the platforms. He provides helpful background on the issues and suggests a variety of other routes and specific strategies for limiting amplification of bad content and promoting good content in ways that are sensitive to the nature of the medium.

Regardless of the legal mechanism, Feld’s summary of a Supreme Court ruling on an earlier law makes the central point that matters here: “the general rule for handling unwanted content is to require people who wish to avoid unwanted content to do so, rather than to silence speakers.” That puts the responsibility for limiting distribution of harmful content (other than clearly illegal content) squarely on users – or on the filtering services that should be acting as faithful agents for those users.

Nuanced regulation could depend on the form of moderation/amplification, as well as its transparency, degree of user “buy-in,” and scale of influence. So long as the filters work as faithful agents (fiduciaries) for each user, in accord with that user’s stated preferences, then they should not be liable for their operation. Regulators could facilitate and monitor adherence to guidelines on how to do that responsibly. Negligence in triggering and applying friction and downranking to slow the viral spread of borderline content might be a criterion for liability or regulatory penalties. Such nuanced guardrails would limit harm while keeping our marketplace of ideas open to what we each choose to have filtered for us.

If independent filtering middleware selected by users does this “moderation,” the posting/access platform remains effectively blind and neutral (and within the Section 230 safe harbor, to the extent that may be relevant). That narrowing of safe harbor (or other regulatory burdens) might help motivate the platforms to divest themselves of filtering -- or to at least yield control to the users. That is evidently a factor in Twitter’s Bluesky initiative. If the filtering middleware is spun out, the responsibility then shifts from the platforms to the filtering middleware services. Larger middleware services could dedicate significant resources to doing moderation and limiting harmful amplification well, while smaller ones would at worst be amplifying to few users. Users who were not happy with how moderation and amplification were being handled for them could freely switch to other service providers.

But if the dominant platforms retain the filters and fail to yield transparency and control to their essentially captive users, regulation might need to take a heavy hand. Their control would threaten the freedom of expression and of impression that is essential to our marketplace of ideas.

Some advocates are beginning to see how this may help address issues such as online harassment. Tracy Chou, founder of Block Party and cofounder of Project Include, wrote in Wired this month that “For platforms to open up APIs around moderation and safety is a win-win-win. It’s better for users, who have more control over how they engage. It’s better for platforms, who have happier users. And it enables a whole ecosystem of developers to collaborate on solving some of the most frustrating problems of abuse and harassment on platforms today.”

Social Internet or splinternets? – “I am large, I contain multitudes”

We are now seeing a breakdown into “splinternets”. This reaction is occurring in both directions: nests of vipers with unchecked extremism on one side, and walled gardens of cultivated civility on the other. Pressure to exclude extremists leads them to separate services like Parler and Gab, and desire to escape toward the positive leads to services like Front Porch Forum and Polis. But this strategy is unstable: MeWe was “inspired by trust, control, and love,” but apparently there is a thin line between that ideal and the “alt-tech” fringe.

Measured against the early vision of the internet as a connecting force, the trend toward splinternets represents a potentially huge defeat. As we consider ways to avoid such an outcome, perhaps we can look for inspiration from a famous line of poetry by Walt Whitman:

Do I contradict myself? / Very well then I contradict myself,

(I am large, I contain multitudes.)

Human culture contains multitudes. It thrives on diversity channeled through a dynamically varying balance of openness and structured protection. Enclaves that have "better" values and quality have an important place, as safe spaces to nurture new ideas and new models for society. But once they are nurtured, more open interconnection is important to test, rebalance, and integrate the best ideas, while retaining the open diversity that ensures resiliency.

Only by designing for diversity can we maximize the power of our marketplace of ideas. We must tolerate fringe views but limit their ability to do harm -- being cautiously open to the fringe is how we learn and evolve. Flexible filters can let us decide when we want to stay in a comfort zone, and when to cast a wider net -- and in what directions. Ideally, niche services could take the form of walled-garden communities that layer on top of a universal interconnect infrastructure. That enables the walls to be semi-permeable or semi-transparent.

That argues for a broad move from platforms toward protocols, as Masnick proposes, a move whose virtue the Internet itself has demonstrated. We might first move toward a hybrid model that allows platforms to continue -- with open filtering middleware -- but that gradually forces those beyond a given threshold mass to open more deeply to protocols. Network effects drive toward universality of posting and access, but this need not be centralized on a single platform – it can be distributed among services that interoperate in accord with protocols to appear as a single service, much as email does. And, as Aral argues: “Breaking up social media without ensuring interoperability is perhaps the worst of all possible approaches. …[not] ensuring that consumers can easily connect to one another across those networks will destroy much of the economic and social value created by social media without solving any of the problems…”

Walled gardens (with semi-permeable walls) can still be created on top of open protocols (sort of like Virtual Private Networks) to nurture new ideas. Extensions for new modes of interaction can be tested and then integrated into standard protocols, much as Web protocols have embraced new services, and email protocols have been gradually extended to support embedded images and HTML. We can migrate from the current monolithic social media to more federated and then fully open and interoperable assemblages of services. As noted above, Twitter’s Dorsey has already begun to explore that direction with his Bluesky initiative.

Realigning business incentives – attention capitalism and thought-robber barons

There is a broadening consensus that “The Internet's Original Sin” is that advertising-based business models began to drive filtering/ranking/alerting algorithms to feed us junk food for the mind, even when toxic – and that increasingly crowds out much of the Internet’s potential virtue. The oligopolies that rely on advertising are loath to risk any change to that, and are uninterested in experimenting with emerging alternative business models. That is a powerful tide to swim against.

Regulators hesitate to meddle in business models, but even partial steps to open just this layer of filtering middleware could do much to decouple the filtering of our feeds from the sale of advertising. A competitive open market in filtering services would be driven by the demand of individual users, making them more “the customer” and less “the product.” Now the pull of advertising demand funds an industry of content farms that create clickbait and disinformation. Shoshana Zuboff’s tour de force diagnosis of the ills of “surveillance capitalism” has rightly raised awareness of the abuses we now face, but I suggest a rather different prescription. The more deadly problem is attention capitalism. Our attention and thought are far more valuable to us than our data, and the harms of misdirection of attention that robs us of reasoned thought are far more insidious to us as individuals and to our society than other harms from extraction of our data.

It is improper use of the data that does the harm. As outlined above, the extraction of our attention stems from the combination of platform control and perverse incentives. The cure is to regain control of our feeds, and to decouple those perverse incentives.

My work on innovative business models suggests how an even more transformative shift from advertising to user-based revenue could be feasible. Those methods could allow for user funding of social media in value-based ways that are affordable for all -- and that would align incentives to serve users as the customer, not the product.

As a half step toward those broader business model reforms, advertising could be more tolerable and less perverse in its incentives if users could negotiate its level and nature, and how that offsets the costs of service. That could be done with a form of “reverse metering” that credits users for their attention and data when viewing ads. Innovators are showing that even users who now block ads might be open to non-intrusive ads that deliver relevance or entertainment value -- and willing to provide their personal data to facilitate that.

Conclusion: The re-formation of social media

Given how far down the wrong path we have gone, and how much money is now at stake, reform will not be easy, and will likely require complex regulation, but there is no other effective solution. To recap the options currently being pursued:

- Current privacy, human rights, and antitrust initiatives are aimed at social media’s harms, but even if broken up or regulated, monolithic, ad-driven social media platforms will still have limited ability to protect our marketplace of ideas – and strongly perverse incentives not to.

- Simple fact-checking and warning labels have very limited effect -- more sophisticated psychology-based interventions have promise, but who combines the skill, wisdom, and motivation to apply them effectively, even if mandated to do so?

- Banning Trump was a draconian measure that dominant platforms rightly shied away from, understanding that censoring who can post is antithetical to a free society. Censorship and bans from “essential utilities” clearly lack legitimacy and due protection for human rights when decided by private companies -- or even by independent review boards.

As noted above (and outlined more fully elsewhere), a promising regulatory framework is emerging. This goes beyond ad hoc remedies aimed at specific harms, and provides for ongoing oversight by a specialized Digital Regulatory Agency that would work with industry and academic experts, much like the FCC and the SEC. Hopefully, the Biden administration will have the wisdom and will to undertake that (the UK is already proceeding).

The other deep remedy would be to end the Faustian bargain of the ad model very broadly, but that will take considerable regulatory resolve – or a groundswell of public revulsion. Neither seems imminent (but, as reported by Gilad Edelman, there were some tentative signs in the 3/25/21 Congressional hearings that some significant move in that direction might not be out of the question). One strategy for finessing that is the “ratchet” model that I have proposed, inspired by how regulations that ratchet vehicle manufacturers toward increasingly strict fuel economy standards have driven the industry to meet those challenges incrementally, in ways of its own devising. The idea is simple: mandate or apply taxes to shift social media revenue to a small, but then increasing, percentage of user-based revenue. But the focus of this article is on this more narrowly targeted and clearly feasible divestiture of filtering. And, as noted above, even a well-motivated monolith would have difficulty providing the diversity of filtering options needed for a well-functioning marketplace of ideas.

While regulators seem reluctant to meddle with business models, there is precedent for modularizing interoperating elements of a complex monolithic business through a functional breakup. The Bell System breakup separated services from equipment suppliers, and local service from long-distance. That was part of a series of regulatory actions that also required modular jacks to allow competitive terminal equipment (phones, faxes, modems, etc.), number portability, and many other liberating reforms that spurred a torrent of innovation and consumer choice. That was far too complex for legislation or the judiciary alone, but solvable by the FCC working with industry and independent experts in stages over many years.

Internet e-mail also serves as a relevant design model – it was designed to replace incompatible networks, enabling users of any “user agent” (like Outlook or Gmail) to send through any combination of cloud “transfer agents” to a recipient who used any other user agent. In the extreme, such models for liberation could lead to “protocols, not platforms,” as Masnick proposes. One move in that direction would allow multiple competing platforms to interoperate to allow posting and access across multiple platforms, each acting as a user messaging agent and a distributed data store. Also, as noted above, the model of financial markets seems very relevant, offering proven guiding principles.

But in any case, even short of an open filtering middleware market, it is essential to democracy to provide more control to each user over what information the dominant platforms feed us. Some users will still join cults and cabals, as people have always done. But the power and perverse incentives to distribute that Kool Aid widely can be weakened. Consider how water sloshing in an open tray forms much more uncontrollable waves than in a tray with a separating insert for making ice cubes. Even if the filters stay no better than they are now and users just pick them randomly, they will become more diverse and distinct – that, alone, would help damp out dangerous levels of virality and ad-driven sensationalism. The incentive of engagement that drives recommendations of pernicious content, people, and groups would be eliminated or at least made more indirect.

Practical action steps

For regulators, lawmakers and executives bold enough to pursue this vision, a number of practical action steps lie ahead:

- Policies should be reframed to treat filtering that targets and amplifies unsolicited reach to users as editorial authorship/curation/moderation of a feed (and thus potentially subject to regulation and liability). That might, itself, motivate the platforms to divest that function to avoid that risk to their core businesses, and to help design effective APIs that support those independent filtering services to add value to their core services. They could retain the ability to provide the raw firehose, filtered only in non-“editorial” ways -- by simple categories such as named friends or groups, geography, and subject, in reverse chronological order, with no ranking or amplification. When necessary, random sampling could reduce the message flow to each user to a manageable level. But, to foster competition and innovation, these rules should be strictly applied only when platforms exceed some threshold size or metric of dominance.

- Such a spinout could divest the platforms’ existing filtering services and staff into one or several new companies with clear functional boundaries and distinct subsets of the user base. The new entities might begin with the current software code base (possibly as open source), but then evolve independently to serve different communities of users -- but with requirements for data portability to facilitate switching.

- The spinout should be guided (and mandated as necessary) by well-crafted regulation combined with ongoing adaptation. Regulators should define, enforce, and evolve basic guardrails on the APIs, protocols, and related practices, and circuit breakers on both sides -- and continually monitor and refine them.

- Such structural changes alone would at least partially decouple filtering from the perverse effects of the ad model. However, as noted above, regulation may be needed to address that more forcefully by mandates (or taxes) that drive a shift to user-based revenue. A survey of some notable proposals for a Digital Regulatory Agency, as well as suggestions of what we should regulate for, not just against, is in an earlier piece of mine, Regulating our Platforms -- A Deeper Vision.

- The structural changes creating an open market would also motivate the new filtering middleware services to devise user interfaces and new algorithms to better provide value to users. The framework for reputation-based algorithms outlined above is more fully explained in The Augmented Wisdom of Crowds: Rate the Raters and Weight the Ratings.

- A new digital agency could also address the many other desirable objectives of Big Tech platform regulation, including consumer privacy, data usage, and human rights, standards and processes to remove clearly impermissible content, and limits on anticompetitive behavior, as well as Big Tech oligopoly issues beyond social media, such as search (Google) and retail (Amazon).

- Such an agency might also oversee development toward a more open, protocol-based architecture.
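
To make the first action step above more concrete, here is a minimal sketch of what a non-“editorial” raw firehose might look like in code: selection only by simple categories the user names, random sampling when the flow is too large, and reverse chronological order with no ranking or amplification. The `Post` fields and the `raw_firehose` function are hypothetical names chosen for illustration, not any platform's actual API, and a real design would involve many details not shown here.

```python
import random
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class Post:
    # Hypothetical minimal post record; field names are assumptions.
    author: str
    group: Optional[str]
    region: str
    subject: str
    posted_at: datetime
    text: str


def raw_firehose(
    posts: Iterable[Post],
    *,
    friends: Optional[Set[str]] = None,
    groups: Optional[Set[str]] = None,
    regions: Optional[Set[str]] = None,
    subjects: Optional[Set[str]] = None,
    max_items: Optional[int] = None,
    rng: Optional[random.Random] = None,
) -> List[Post]:
    """Return posts selected only by user-named categories, newest first.

    A category left as None places no restriction. Nothing here scores,
    ranks, or amplifies posts by engagement.
    """

    def matches(p: Post) -> bool:
        return (
            (friends is None or p.author in friends)
            and (groups is None or p.group in groups)
            and (regions is None or p.region in regions)
            and (subjects is None or p.subject in subjects)
        )

    selected = [p for p in posts if matches(p)]

    # When the flow is unmanageable, thin it by uniform random sampling
    # rather than by any judgment about which posts are "better".
    if max_items is not None and len(selected) > max_items:
        sampler = rng if rng is not None else random.Random()
        selected = sampler.sample(selected, max_items)

    # Reverse chronological order, with no other ranking applied.
    return sorted(selected, key=lambda p: p.posted_at, reverse=True)
```

Anything beyond this, such as scoring by reputation, relevance, or predicted interest, would be the kind of “editorial” filtering that the proposal would leave to independent, user-chosen filtering services.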

We need to move beyond “understanding and governing 21st-century technologies with a 20th-century mindset and 19th-century institutions”, as the World Economic Forum’s Klaus Schwab once argued. That echoes management theorist Peter Drucker’s insight that “The greatest danger in times of turbulence is not the turbulence, it is to act with yesterday’s logic.” Today’s logic must recognize the need for and power of algorithmic filters, and that we, the people, must control our personal filters. It is possible to reimagine social media as a pro-democratic force for the century ahead -- but only if we are willing to do the hard work of re-engineering the platforms to give users the freedom to manage the flow of information as they see fit. As our marketplace of ideas accelerates at Internet speed, it is best served by a marketplace of filters.

Authors