We Don't Need Google to Help "Reimagine" Election Misinformation

Mor Naaman, Alexios Mantzarlis / Sep 24, 2024

Mor Naaman is the Don and Mibs Follett professor of Information Science at the Jacobs Technion-Cornell Institute at Cornell Tech; Alexios Mantzarlis is the director of the Security, Trust, and Safety Initiative at Cornell Tech.

Composite: the rear camera array on the Google Pixel 9 superimposed on an image of Alphabet CEO Sundar Pichai.

Google has promised to build AI tools that are “responsible from the start.” Its main generative AI tools avoid providing answers to election-related questions altogether. As a signatory of the AI Elections accord, Google has committed to helping prevent the creation of election deepfakes and facilitate their labeling. And yet, weeks before the United States presidential election, the company is putting an elections deepfake machine in the pockets of anyone willing to splurge on its Pixel 9 phone.

Google has developed multiple AI editing tools over the past year. But it is the “Reimagine” feature released in August with the Pixel 9 that crossed into truly risky territory. As researchers of AI and digital safety, and as former Google employees, we believe the company should reconsider this feature and consult more widely on the appropriate safety guardrails. This is especially important with the U.S. elections approaching and time running out for new regulatory protections.

With a Pixel 9, users can modify any photo they have taken within seconds by typing in a prompt. As The Verge has shown, the phone can be used to mislead by radically transforming a real photo.

It is not difficult to imagine how people might use this tool to manufacture evidence and disseminate election-related misinformation. This could be a particular problem in states such as Georgia and Pennsylvania, where vote counts may be delayed for days or weeks, leaving more time for false claims to proliferate.

With a Pixel 9, bad actors might “reimagine” an explosive device near a poll site in Philadelphia, with the goal of depressing voter turnout. They might “reimagine” a stack of ballot boxes in a photo of a ditch outside the county clerk’s office in Georgia and suggest they have been stolen. They could “reimagine” an image involving election workers in Detroit to falsely suggest vote-tampering and provoke online harassment and physical harm.

While Google has installed some guardrails, we were easily able to circumvent them and generate credible-looking images of a serious accident at a New York City polling location by swiping over a photo and typing a description of the requested edit.

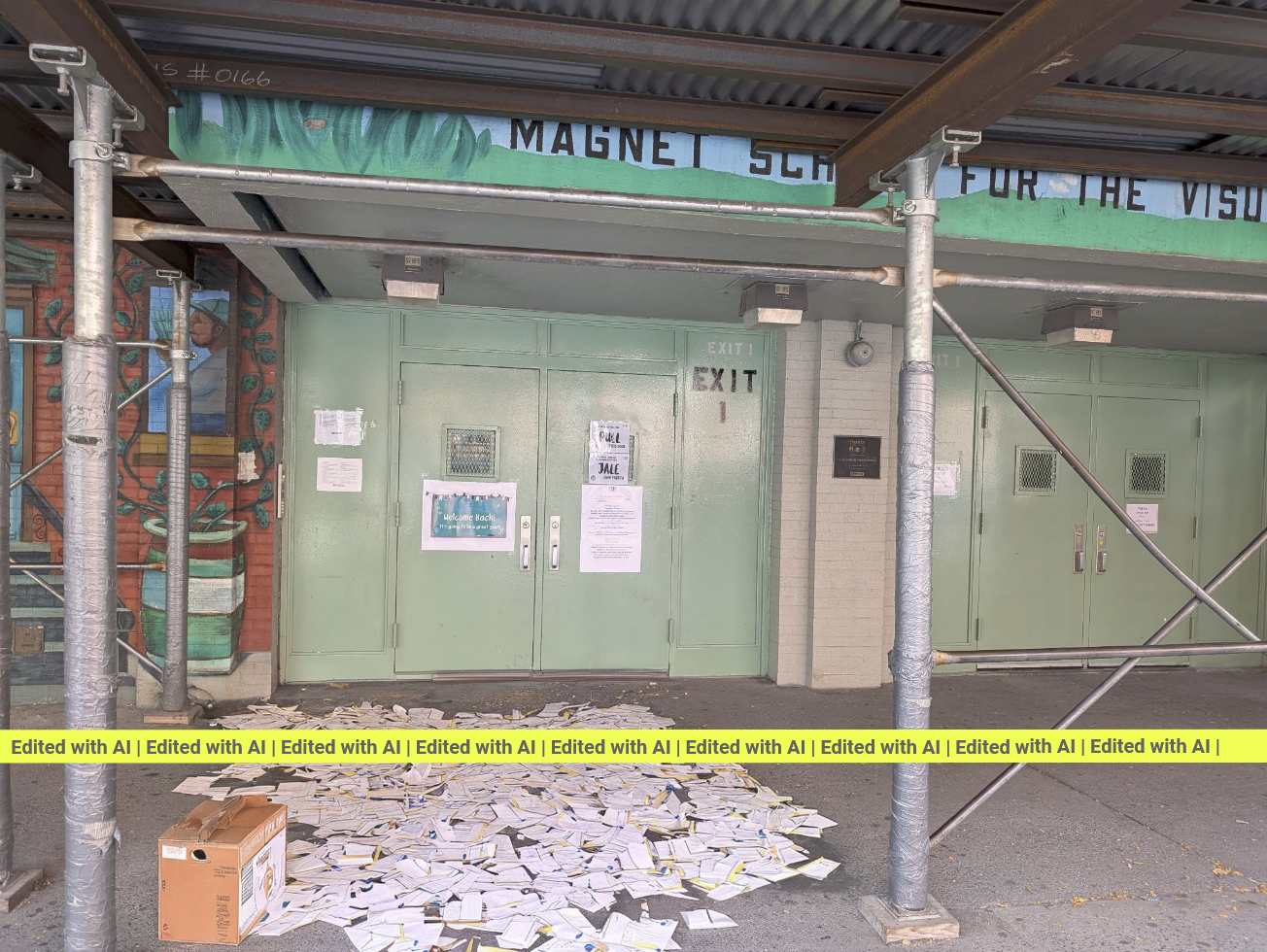

The original image of the entrance to a school that serves as a polling location in New York City, beneath scaffolding, taken with the Pixel 9.

The image “reimagined” with a box of ballots littering the ground.

The image “reimagined” with flames and a toxic-looking sludge in front of the entrance.

Even though much of the misinformation in 2020 relied on “shallow fakes” rather than AI-generated or heavily manipulated images, the processes one of us and other researchers documented back then show how risky this Reimagine launch might be. Just as in 2020, we expect many altered images will be posted and shared by powerful users to support false narratives in 2024. Only this year, for the first time, the accounts spreading them will be able to use even more realistic images grounded in real-time events and places.

The current US election cycle has already seen attempts to use AI to suppress voter turnout. Allegations that images are AI-generated in both federal and state election contexts risk eroding public understanding of important events. And Google’s own researchers have found that AI images are an increasing share of the misinformation debunked by fact-checkers globally.

This new Pixel feature may not only reduce trust in the election results but also severely overload election boards and law enforcement, which could suffer the equivalent of a denial-of-service attack: a deluge of realistic-looking reports that are impossible to verify and may require action.

What is more, some of the standard tools currently used by journalists, fact-checkers and law enforcement to verify images will be rendered useless by Reimagine. Fact-checkers often use weather records, street signs and landmarks that can be verified with satellite imagery or Google Earth to confirm that an image is from where it claims to be. These verification tools will be increasingly ineffective against the blended pictures, taken at real locations and reimagined with Google’s Pixel phone.

Some dismiss these concerns as alarmist, pointing to the longstanding existence of Photoshop. What they do not recognize is how much easier it is to fake things with Reimagine compared to previous photo-editing tools. Within minutes, images can be taken on site, manipulated, and spread online to millions of people by anyone with the right phone. According to research one of us published, in 2020 most of the popular visuals used in the voter fraud misinformation campaign on X (then Twitter) reached their top popularity within three hours of first being posted. This short period leaves very little time to respond, especially to Pixel images that can now be posted to social networks almost in real time.

Additional precautions are possible and critically needed:

- More safety filters should be added to reject Reimagine prompts. Content-based guardrails taking into account election vulnerabilities, photo content and the nature of the edit should be developed.

- An AI label should be added to all “reimagined” images; a visible marker will be harder to remove than metadata and more likely to travel with the photo as it gets shared by email, messaging apps, and social networks.

- Geo-fencing could be used to prevent the use of Reimagine around sensitive locations like police stations, places of worship, schools, and polling places.

- Digital signatures for original, non-modified images should be equally central to Pixel’s product vision. While far from sufficient, these signatures can provide users reporting real events a tool to signal partial reliability.

More broadly, Google should strongly consider pausing the launch of Reimagine for several months, at least until after the US election results are certified. The company can use the pause period to add safeguards like the ones above and fund digital literacy campaigns that explain what is possible with tools like Reimagine. These steps would inspire greater confidence in its capacity to protect elections worldwide.

Lawmakers could and should place additional guardrails on tools like Reimagine. Legal frameworks that could be appropriate for “slowing down” or limiting this type of feature have already been proposed. Other laws can target the knowing distribution of fake images aimed at subverting the voting process, or require platforms to label or remove such content.

For now, however, there is a patchwork of state-level deepfake laws whose scope may well be insufficient. Both the definition of deepfakes and their consistent detection remain challenging. Even if effective laws are enacted, the difficulty of detecting and responding promptly to deceptively edited media, before falsehoods proliferate, may make them inadequate for breaking news events and other real-time crises.

We are thus left with Google. The company should live up to its promise to be both bold and responsible by curbing the runaway risks of its deepfake-generating Pixel phone.
