The Future of Fact-Checking Isn’t Either/Or — It’s Both

Baybars Orsek / Feb 28, 2025

A decade ago, when I first joined the global fact-checking community, initially as a founder and later as the director of the International Fact-Checking Network (IFCN) for four years before moving to Logically, the mission was clear: to build a global coalition of annually audited fact-checkers to combat misinformation. And for a time, it worked. The network grew, platforms invested in fact-checking, and fact-checks became integral to public discourse.

Then, two seismic events — the COVID-19 pandemic and the 2020 US presidential election — propelled fact-checking into the global spotlight. Suddenly, fact-checking became a household term among politicians, journalists, academics, and observers worldwide. But with that visibility came challenges. Fact-checkers faced harassment and attacks for their work, and it became convenient — and profitable — for many to misrepresent this essential form of journalism as censorship.

To their credit, platforms led by Meta continued to grow their investments in integrity efforts while actively promoting their partnerships with professional fact-checking organizations to address the challenges of elections and other high-risk global events. These included the rapid spread of harmful narratives during military conflicts, natural disasters, social unrest, and financially driven misinformation — issues that some might dismiss as trivial but were, in reality, critical for hundreds of millions of users striving to navigate an online landscape rife with misleading and harmful content.

The landscape of misinformation has evolved, but the systems designed to combat it have lagged behind. Researchers have rigorously assessed the effectiveness of fact-checking, recognizing its impact while also underscoring its limitations in speed and scale. The question is no longer whether fact-checking should continue, but how it must adapt — and whether the next model can be scalable, technology-driven, and financially sustainable without relying on platform handouts.

New Strategy: Wisdom of the Crowds

Meta’s recent decision to end its third-party fact-checking program, starting in the US, marks a significant shift in how platforms approach misinformation. This move signals a broader trend in the industry — an eagerness to outsource the responsibility of fact-checking to crowdsourced solutions rather than supporting independent, professional verification efforts. Instead of investing in fact-checking organizations that bring methodological rigor and accountability, platforms are opting for safer, scalable alternatives that put the burden on users.

A prime example is X (formerly Twitter), which has doubled down on its Community Notes approach. While this model has shown promise in some cases, it is not a complete replacement for professional fact-checking. Community Notes relies on crowdsourced consensus, meaning that fact-based corrections may fail to gain traction if they contradict dominant narratives or become the target of brigading efforts. This leaves the system vulnerable to manipulation and creates a need for stronger safeguards against coordinated misinformation campaigns.

The reality is that platforms are shifting fact-checking decisions away from independent professionals and onto the whims of user communities. While this is framed as democratizing verification, it is, in many ways, an abdication of responsibility. Fact-checking should not be left to popularity contests or crowdsourced opinions when the stakes include public health, election integrity, and security. I acknowledge that a community-based approach can introduce diverse perspectives, but having various viewpoints is not the same as possessing the expertise needed to assess the accuracy of information.

Why Current Fact-Checking Models No Longer Work

When IFCN was founded nearly ten years ago, fact-checking was largely a journalistic initiative: newsrooms debunked viral hoaxes, and partnerships with platforms helped distribute corrections. But today’s misinformation ecosystem is vastly different. It is faster and more algorithmic — fueled by AI-generated content, sophisticated disinformation networks, and virality-driven platforms. Fact-checking, as it was initially designed, cannot keep pace with the scale of today’s online manipulation tactics.

The network built credibility around a code of principles, ensuring that fact-checking organizations followed rigorous methodologies. But the model relies too heavily on slow, manual processes — it was built for a time when misinformation spread article by article, not at AI speed.

Financial sustainability remains a problem. Most fact-checking organizations depend on grant funding and short-term platform partnerships — a fragile model that is not even viable without regulatory pressures forcing platforms to take responsibility. Meta’s decision to drop its fact-checking partners underscores the risks of relying on tech platforms for funding and distribution. The reality is that professional fact-checking, as it exists today, cannot scale effectively without a technological reboot.

The Path Forward: A Scalable Fact-Checking Model

We need to shift from a fragmented, labor-intensive model to an AI-assisted, platform-integrated system that works across platforms. Instead of relying solely on reactive fact-checking, we need a hybrid ecosystem where AI, platforms, fact-checkers, and users enrolled in Community Notes-style programs work together to verify content at speed and scale, focusing on harm and virality.

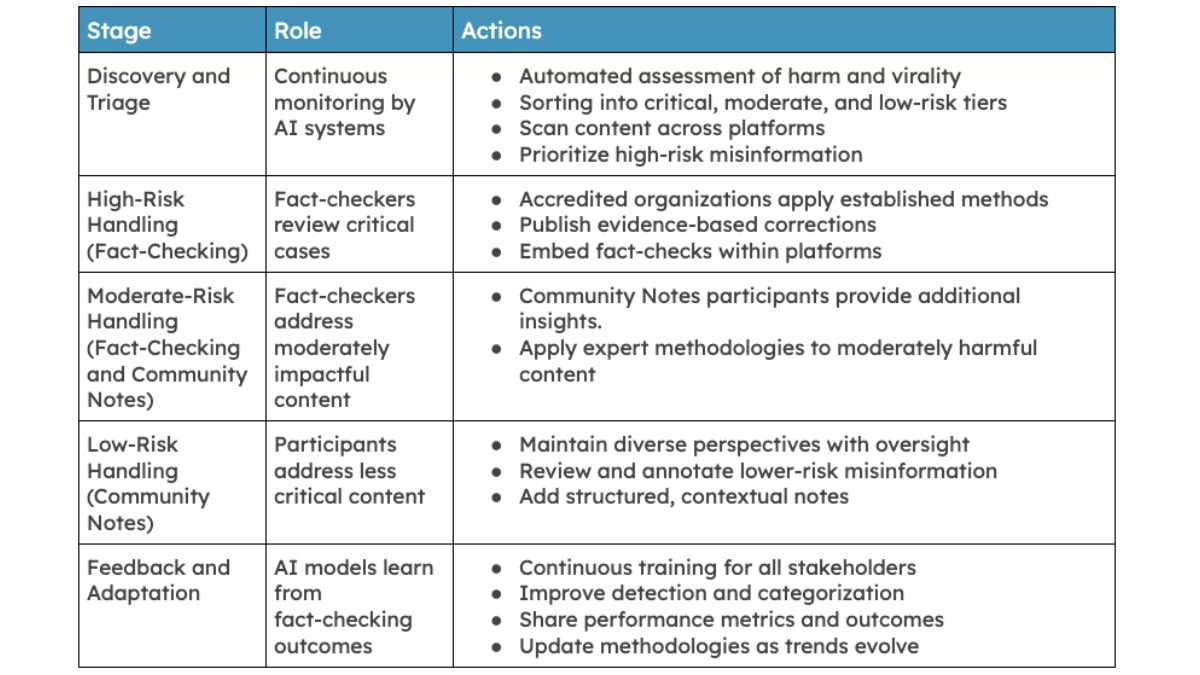

A tech-enabled future for fact-checking should prioritize a risk- and virality-based approach, ensuring that emerging falsehoods are addressed at the right level of intervention:

- AI-driven prioritization & triage: Smart systems should identify and escalate misinformation based on its potential harm and virality, allowing accredited organizations and experts to intervene before falsehoods gain traction. Emerging narratives can be segmented based on risk levels, ensuring that high-impact claims receive expert attention, while lower-risk misinformation is managed through scalable community-driven mechanisms.

- Hybrid verification model: A tiered approach that blends expert fact-checking, AI automation, and structured community input — avoiding the pitfalls of unchecked crowdsourcing while ensuring a multi-layered response tailored to the scale and severity of misinformation.

- Integrated, contextual fact-checking: Fact-checks must move beyond static articles and instead be embedded directly within content streams, search results, and conversational AI. This ensures that corrections are contextual, frictionless, and surfaced where misinformation spreads.

- Long-tail moderation via Community Notes: While accredited experts and organizations tackle high-risk falsehoods, community-driven initiatives like Community Notes can efficiently handle lower-risk, long-tail misinformation — enabling a sustainable and scalable fact-checking ecosystem.
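
To make the tiered idea above concrete, here is a minimal sketch of how harm- and virality-based triage might route claims. Everything in it is an illustrative assumption rather than a description of any existing system: the `Claim` fields, the 0-to-1 scores (imagined as coming from some upstream model), the product used to combine them, and the two thresholds are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    EXPERT_REVIEW = "expert_review"      # accredited fact-checkers and experts
    COMMUNITY_NOTES = "community_notes"  # structured community input
    MONITOR = "monitor"                  # no intervention yet, keep watching


@dataclass(frozen=True)
class Claim:
    text: str
    harm: float      # hypothetical 0..1 potential-harm score
    virality: float  # hypothetical 0..1 spread score


def priority(claim: Claim) -> float:
    """Combine harm and virality into one score (assumption: a simple product)."""
    return claim.harm * claim.virality


def triage(claim: Claim,
           expert_threshold: float = 0.5,
           community_threshold: float = 0.1) -> Route:
    """Route a claim to a tier; both thresholds are arbitrary placeholders."""
    score = priority(claim)
    if score >= expert_threshold:
        return Route.EXPERT_REVIEW
    if score >= community_threshold:
        return Route.COMMUNITY_NOTES
    return Route.MONITOR


def expert_queue(claims: list[Claim]) -> list[Claim]:
    """Return the claims bound for expert review, highest priority first."""
    flagged = [c for c in claims if triage(c) is Route.EXPERT_REVIEW]
    return sorted(flagged, key=priority, reverse=True)
```

In a sketch like this, a claim scored as both harmful and fast-spreading reaches expert reviewers first, a moderate one goes to community annotation, and the rest are only monitored. A real system would need calibrated scores and thresholds set with fact-checkers rather than the placeholder values used here.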

Approaches to scalable fact-checking

Who Needs to Step Up?

- To Platforms: Fact-checking is essential, but it must be redesigned for scale, speed, and integration into content systems. Invest in smarter verification tools instead of retreating from fact-checking altogether.

- To Fact-Checkers: The old IFCN model is no longer sufficient. To stay relevant in the fight against misinformation, we must embrace AI, automation, and platform integrations.

- To Policymakers: Misinformation regulations must incentivize platforms to adopt scalable, fact-based interventions — not just impose takedown policies that ignore the gray areas of online falsehoods.

- To Investors: The trust and safety market is expanding, and verification tools are an untapped commercial opportunity. Fact-checking isn’t just a public good — it’s a business opportunity for platforms, enterprises, and risk intelligence firms.

The Future of Fact-Checking Is Ours to Build

A decade ago, fact-checking was an answer to a growing problem. But today’s challenges require a new answer — one that is scalable, financially sustainable, and technologically sophisticated. The question is not whether fact-checking should survive, but whether we are willing to build something that actually works at the scale required to fight today’s digital misinformation threats.

This is our opportunity to build the future of fact-checking — before it’s too late.

We need to think bigger, act boldly, and envision a future where fact-checking is not reliant on the generosity of a single platform. Meta may be a dominant force online, but it did not create the internet — nor will its absence eliminate the misinformation crisis. Addressing this issue requires a cross-platform solution, one that isn’t dependent on the shifting priorities of tech giants. Rather than waiting for platforms to extend support, we must take the initiative to build a robust, independent system that compels their participation. Increasing our collective leverage over these platforms is essential — change will not come until we assert our influence and demand better solutions.
