Meta Dropped Fact-Checking Because of Politics. But Could Its Alternative Produce Better Results?

Jonathan Stray, Eve Sneider / Feb 3, 2025

The changes are politically motivated, yes, but the fact-checking program never really achieved trust or scale, write Jonathan Stray and Eve Sneider.

Composite: Mark Zuckerberg announces changes to Meta's policies. Source

On January 7, Meta founder and CEO Mark Zuckerberg announced various changes to the company’s content moderation policies, including ending its third-party fact-checking program in the United States and relaxing its posture on misinformation and some forms of speech targeted at minority groups. Zuckerberg cited concerns about the perception that fact-checkers are biased and the importance of allowing free expression on the company’s platforms. The move has been lauded by many conservatives, including President Donald Trump himself, as a “win for free speech,” while critics of Zuckerberg’s move condemned it as part of a series of political capitulations to the incoming administration.

The critics have plenty of evidence. Trump’s FCC chair sent a threatening letter to platforms about fact-checking after the November election. Zuckerberg ran his plans to roll back diversity programs at Meta past Trump advisor Stephen Miller, donated $1M to Trump’s inauguration, appointed Ultimate Fighting Championship CEO (and friend of Trump) Dana White to Meta’s board, and ultimately joined a coterie of tech executives in the Capitol Rotunda for Trump’s swearing-in. This week, The Wall Street Journal reported that after meeting with Trump at Mar-A-Lago, Zuckerberg “agreed to pay roughly $25 million to settle a 2021 lawsuit that President Trump brought against the company and its CEO after the social-media platform suspended his accounts following the attack on the US Capitol that year.”

But if you are willing to put aside Zuckerberg’s political motivations, it’s also true that, despite the excellent work of many individual fact-checkers, the fact-checking program as a whole was struggling in important ways. For such a program to work, it has to accomplish at least three things:

- Accurately and impartially label harmful falsehoods

- Maintain audience trust

- Be big enough and fast enough to make a difference

Here, we consider the evidence for all three. Despite repeated claims of bias, there’s good reason to believe fact-checkers were mostly accurate and fair. However, the program never really operated at platform scale or speed, and it rapidly became distrusted among the people who were most exposed to falsehoods.

There was, maybe, an alternate path that might have preserved trust among conservatives; we now have research that gives us a tantalizing what-if.

Fact-checking never achieved scale

Meta launched its fact-checking program in December 2016, following concerns about the role that misinformation played in that year’s US election. Over the years, it has worked with dozens of partners globally, including Poynter’s International Fact-Checking Network (IFCN).

Meta’s systems identified suspicious content based on signals like community feedback and how fast a post spread. Fact-checking partners could see these posts through an internal interface. They freely chose which claims to check and then did original reporting to determine the accuracy of those claims. After this, Meta would decide whether to apply a warning label, reduce distribution, or sometimes remove the post entirely.

Meta says it will stop fact-checking in the US (and only the US, it seems, for now), but this shouldn’t be confused with stopping content moderation. Almost all content that is removed on Meta’s platforms—millions of items per day—is reviewed by armies of content moderators (the company says there are 40,000 of them) and related AI systems, not fact-checkers. While moderation does target some politically contentious items, such as hate speech and health misinformation, it mostly removes fraud, spam, harassment, sexual material, graphic violence, and so on. Zuckerberg said that certain content moderation rules will be relaxed and the teams involved will move to Texas, but the massive operation appears otherwise unchanged. The update to moderation rules may turn out to be the most impactful change by far.

Fact-checkers, by comparison, can check only a handful of posts per day. Meta never released any data on the program's operation, but a 2020 Columbia Journalism Review article mentioned that all US fact-checkers combined completed around 70 fact checks in five days, or 14 items per day. A more comprehensive 2020 report by Popular Information indicated not just the scope but also the speed:

There were 302 fact-checks of Facebook content in the US conducted last month. But much of that work was conducted far too slowly to make a difference. For example, Politifact conducted 54 fact checks of Facebook content in January 2020. But just nine of those fact checks were conducted within 24 hours of the content being posted to Facebook. And less than half of the fact checks, 23, were conducted within a week.

At 302 fact checks over the 31 days of January, this is slightly less than 10 fact checks per day in the US. Of course, one might argue that this volume was, to some extent, always in Meta’s control. After all, isn’t Meta funding the fact-checkers, and couldn’t it decide to spend a fraction more of its billions in profit on the effort? Perhaps. But that still would not address the issue of speed. If fact checks typically take days to complete, then most people will view viral falsehoods before any label is applied. Even if there were five times as many fact-checkers, it’s hard to know whether it would have reduced exposure to false information by anywhere close to a similar multiple.
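As a rough check on the figures above, the arithmetic can be reproduced in a few lines of Python. The counts come from the two reports quoted in this section; the variable names are ours, and the 31-day length of January is the only added input.

```python
# Counts quoted in the article (Columbia Journalism Review and Popular Information).
cjr_checks, cjr_days = 70, 5    # all US fact-checkers combined, five days
pi_checks, pi_days = 302, 31    # US fact checks in January 2020 (31 days)

# Average daily throughput implied by each report.
cjr_rate = cjr_checks / cjr_days
pi_rate = pi_checks / pi_days

# Politifact's January 2020 fact checks, broken down by turnaround time.
politifact_total = 54
within_24h = 9
within_week = 23

share_24h = within_24h / politifact_total
share_week = within_week / politifact_total

print(f"CJR estimate: {cjr_rate:.1f} fact checks per day")           # 14.0
print(f"Popular Information: {pi_rate:.1f} fact checks per day")     # 9.7
print(f"Politifact checks done within 24 hours: {share_24h:.0%}")    # 17%
print(f"Politifact checks done within a week: {share_week:.0%}")     # 43%
```

On these numbers, roughly one in six of Politifact's checks that month landed within a day of posting, which is the window in which viral content gets most of its views.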

Fact-checking lost the trust of its most important audience

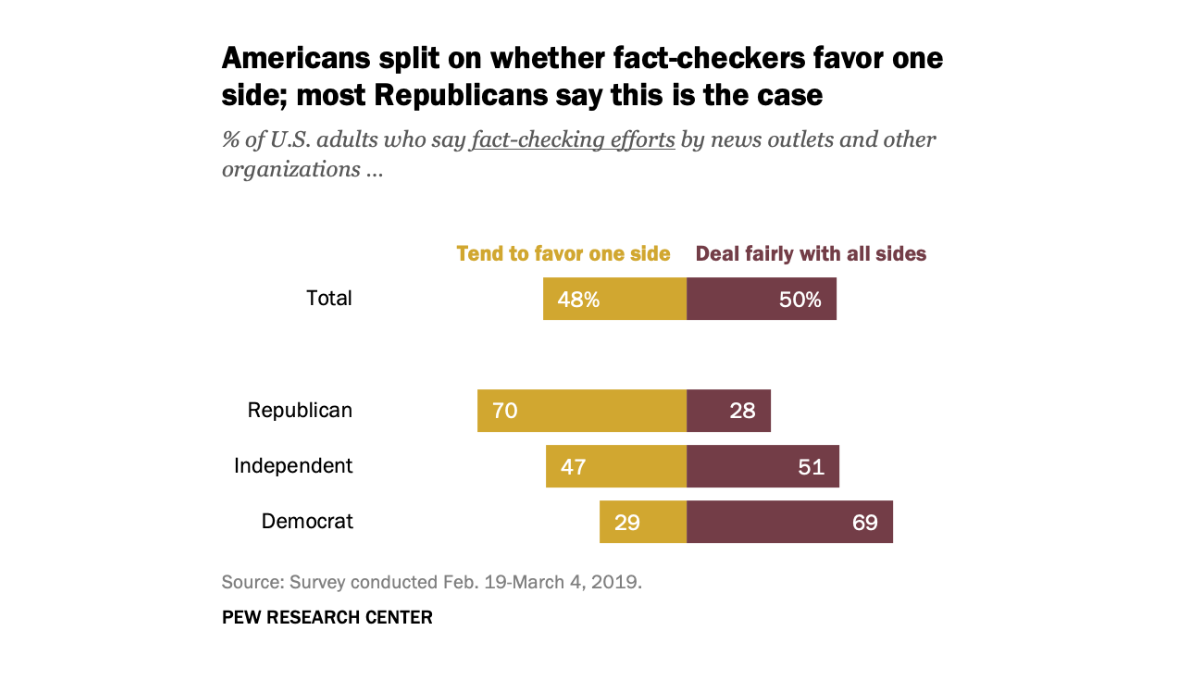

In the US, trust in fact-checkers quickly split along partisan lines. As a 2019 study from Pew found, Republicans, in particular, tend to think that fact-checkers are biased:

Generally speaking, many conservative Americans feel as negatively about fact-checkers as they do about mainstream “liberal” media. One reason for asymmetrical trust in fact-checking is that, simply put, there’s more misinformation on the right than on the left. No one really wants to review the evidence on this point—this statement probably sounds obvious to most readers who identify in the center or on the left, and obviously biased if you identify on the right. (See also: Proof That the 2020 Election Wasn’t Stolen, Which No One Will Read).

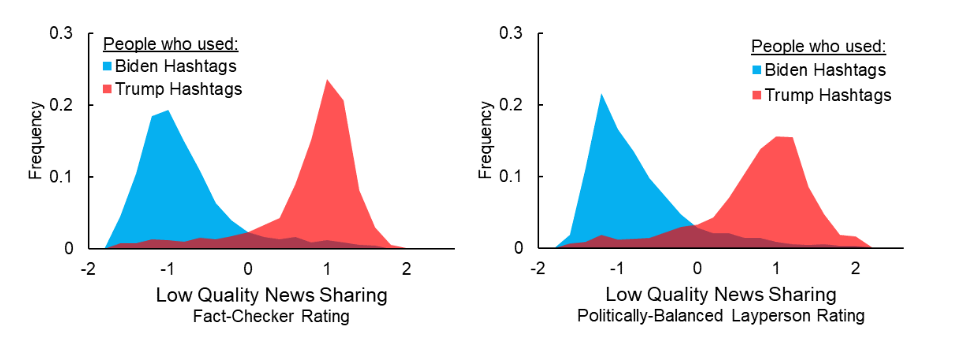

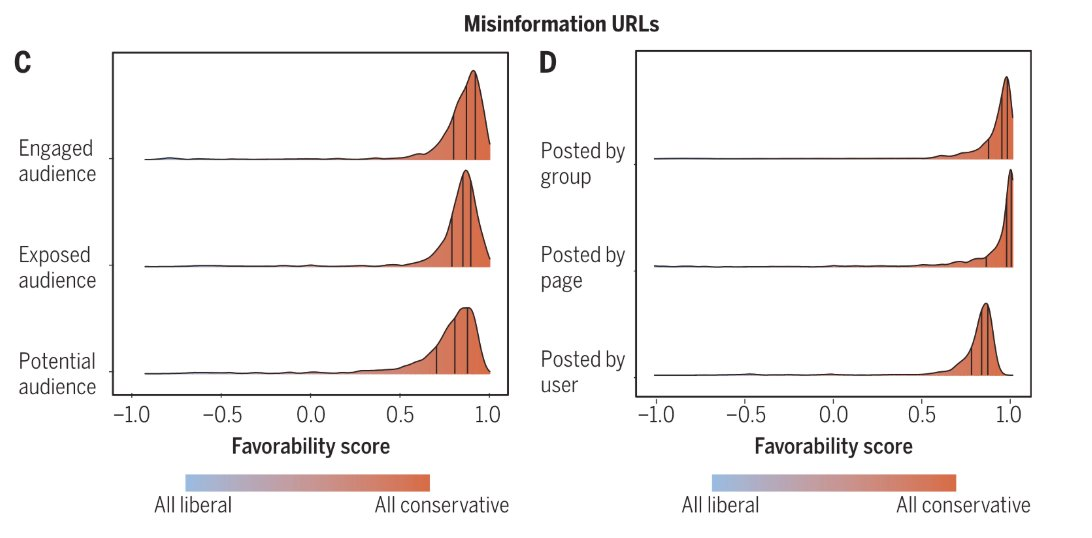

But just to drop a few links: in recent years, researchers have found a strong correlation between conservative politics and low-quality news sharing across platforms, and that far more of the misinformation shared on Facebook comes from the right than from the left. Even conservative journalists say that their side of the industry is lower quality, according to a 2020 research project. All of this means that Red Americans are disproportionately affected by fact-checks even if the fact-checkers are being entirely fair.

Distribution of liberal vs. conservative misinformation found on different areas of Facebook. Source

Another good reason for conservative distrust of fact-checks is that journalists at major media organizations today are mostly quite left of center. Fact-checkers are regarded as de facto censors, and it’s rational to distrust censors who don’t share your politics. Unsurprisingly, research bears this out. In a 2023 study, researchers found that fact-checks were much more likely to backfire (52% more likely!) when they came from a member of the political outgroup.

Conservatives usually agree with liberal fact-checkers

You may wonder how researchers know there’s more misinformation on the conservative side if potentially biased fact-checkers define misinformation. To account for this possibility, researchers at MIT recently compared the ratings of professional fact-checkers with those from politically-balanced groups of laypeople. They found that the assessments by such bipartisan groups differed little from those of the professional fact-checkers. This replicates an earlier study that found the same thing.

It’s important to note that a few controversial calls can destroy trust even if most of the rest are agreeable – trust is earned in drops and lost by buckets, as the saying goes. There are surely mistakes here, yet it was platforms, not fact-checkers, who made some of the most controversial choices. This includes Twitter’s handling of the Hunter Biden laptop story and Facebook’s suppression of the COVID lab leak theory (both of which the platforms later reversed course on as new evidence came to light).

What these studies show is that, by and large, the third-party professionals who Meta is throwing under the bus were, in fact, accurate and impartial. When you take the liberal labels off and include conservatives in the process, they largely agree with the calls that were made.

In retrospect, the obvious trust-building move would have been for fact-checking organizations to visibly employ conservative fact-checkers. This may have achieved goal #2, trust, without sacrificing goal #1, accuracy. Why didn’t this happen at the time? Our guess is that polarization made it nearly impossible for fact-checking organizations to hire conservatives. Deep suspicion runs both ways; there’s simply too much distrust of conservatives within much of professional journalism.

In fairness, Meta’s fact-checking program did take at least a few steps to include a politically diverse array of partners. In 2018, it took flak from left-leaning outlets for adding the Weekly Standard, a conservative magazine, as one of its fact-checking partners. Even more controversially, for a while the Daily Caller was a fact-checker and sometimes fact-checked other fact-checkers like Politifact. (Both the Weekly Standard and the Daily Caller were later removed from the program.) This is all culture war nonsense. But what would have happened if, say, Politifact had hired conservative staff directly and resolved disputes internally?

Will Community Notes be better?

Could some version of Community Notes, the system developed at Twitter that Zuckerberg said will be implemented instead of the third-party fact-checking program, be an improvement? It’s worth noting that YouTube also recently rolled out a similar program.

As Lena Slachmuijlder pointed out on the Tech and Cohesion blog, in the wake of the Meta news, the critical limitation facing Community Notes is timing. Slachmuijlder cites a study that found that this program does help reduce the spread of misinformation on X, but,

Notes typically become visible 15 hours after a tweet is posted… by which time 80% of retweets have already occurred. As a result, the overall reduction in misinformation reshares across all tweets is modest—estimated at around 11%.

But, they add, efforts are already underway to leverage AI to “rapidly synthesize the most helpful elements of multiple user-generated notes into a single, high-quality ‘Supernote,’” which could significantly reduce the time before a misleading post is labeled.

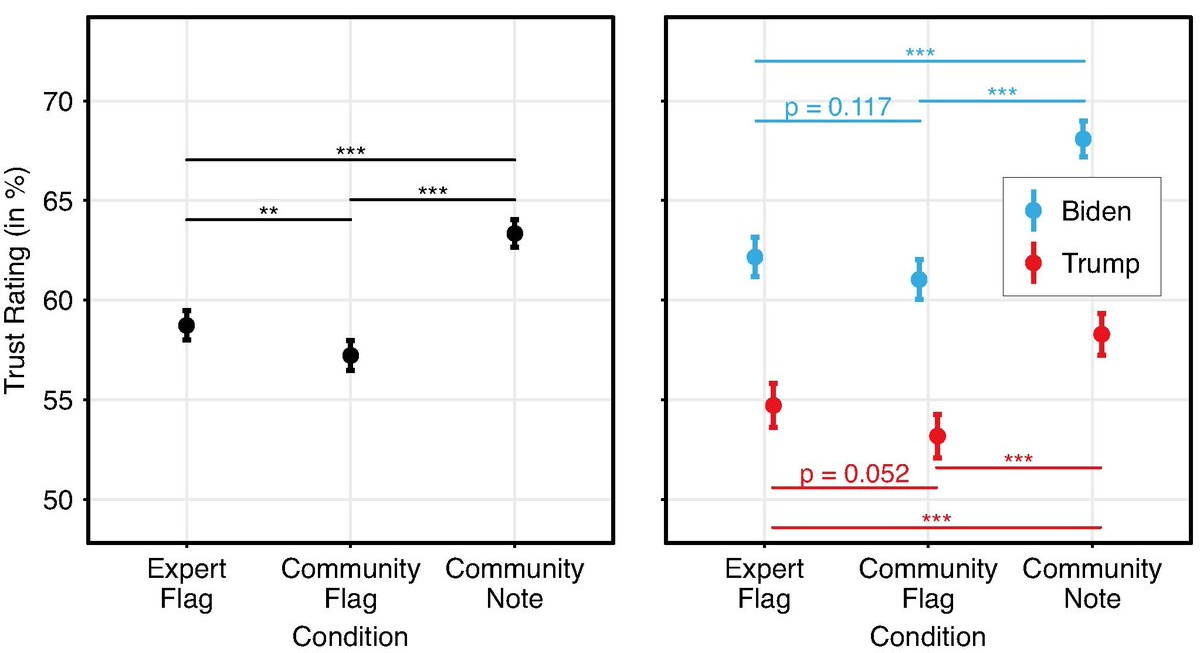

What’s more, Twitter’s original community notes experiments suggested that community-based fact-checking is effective in correcting belief in falsehoods and producing audience trust. Independent researchers recently replicated these results, finding that “Across both sides of the political spectrum, community notes were perceived as significantly more trustworthy than simple misinformation flags.”

Trust in fact checks, broken down by who writes them. Left: overall trust. Right: trust for Biden vs. Trump voters. (Source)

X’s Community Notes have thus far struggled to match the scope of false information on the platform. However, in all the criticism, we’ve never seen a direct comparison to the human fact-checking program in terms of scale, speed, or trust. Considering all available evidence, it’s not clear that professional checkers were ever any faster, more comprehensive, or more trusted than this crowdsourced system. Notably, a broad array of platform professionals, academics, and civil society organizations recently endorsed the general approach behind Community Notes and similar “bridging” systems in a massive paper on the future of the digital public square.

Overall, we think losing professional fact-checking can’t be good for a platform. We do take concerns about bias seriously, but we now know that when conservatives are asked to fact-check, they mostly agree with professional fact-checker ratings. And however good the algorithms get, they aren’t a substitute for well-informed professionals watching what’s going viral. But if crowdsourced corrections are implemented well, they might rise to the twin challenges of scale and trust in a way that the fact-checking partnership never quite did.
