Some Facts About Fact-Checking: Defending the Imperfect Search for Truth in an Era of Institutionalized Lying

Paul M. Barrett / Jan 27, 2025

When President Donald Trump pardoned and heroized more than 1,500 of those who stormed the United States Capitol on January 6, 2021, he based his actions on lies: the lie that the violent insurrection was “a day of love” and the lie that those imprisoned were “patriots” and “hostages,” rather than partisan thugs.

Many Trump supporters have cheered the pardons and clemency grants based on another lie: that Capitol Police officers “waved in” the attackers, when in fact, the insurrectionists assaulted the outnumbered cops with bats, flag poles, bear spray, brass knuckles, metal barricades, stolen law enforcement shields, wooden furniture legs, a “tomahawk axe,” and other weapons. At least five members of the mob carried firearms. More than 150 police officers were injured, and several died shortly thereafter, two by suicide.

For accurate information correcting these false claims about January 6, I relied primarily on straightforward reports, including video evidence, assembled by specialized fact-checking teams at Reuters and Snopes.

This article addresses one relatively modest aspect of an ongoing debate about the importance of defending the distinction between facts and lies — namely, the effectiveness of fact-checking as applied to major social media platforms.

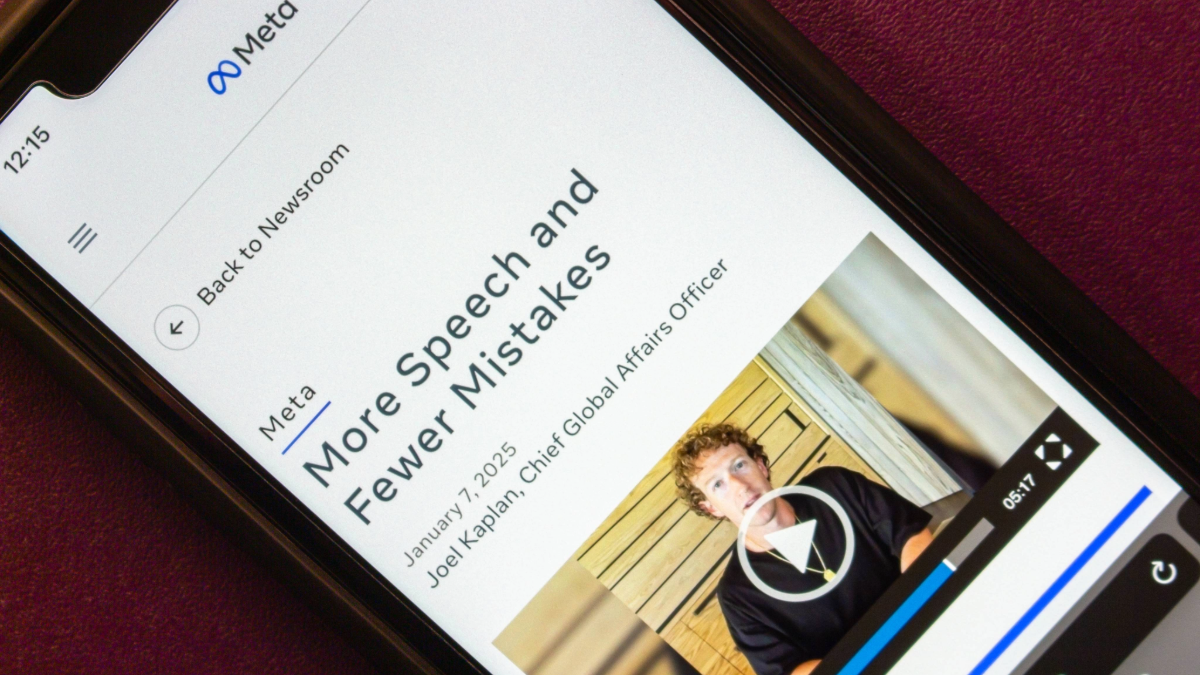

In the wake of Meta’s abandonment of third-party fact-checking — one of a number of gestures by Silicon Valley leaders to curry favor with the Trump administration — let’s debunk three faulty criticisms of fact-checking:

- that it is an illegitimate form of censorship, undermining free speech,

- that as practiced in connection with platforms such as Facebook, it is a partisan left-leaning exercise, and

- that it just “doesn’t work.”

While the cottage industry of fact-checking social media content represents only part of a much broader struggle over truth and trust in a hugely complicated digital information environment, consideration of its role sheds light on a moment when tens of millions of Americans readily embrace the non-stop torrent of Trump’s lies. That embrace is consequential. It helps explain Trump’s return to power, with all the dangers his autocratic, narcissistic agenda brings with it.

A number of factors contribute to the spread of Trump’s lies, starting with the president’s own sociopathic detachment from truth and the willingness of other political and economic elites to enable him, out of fear of his wrath, a desire to profit from his hostility to regulation and the rule of law, or both. Various media, including partisan cable television, podcasters, and other online influencers, also play roles. Social media platforms — such as Meta’s Facebook and Instagram; TikTok, owned by the Chinese company ByteDance; Elon Musk’s X; and Trump’s own Truth Social — help inform hundreds of millions of users in the US and around the world. Often, these lines of communication intertwine, as when Trump’s denial of the reality of January 6 is amplified by Fox News hosts, whose broadcasts are excerpted and spread on social media.

Fact-checking: a brief history

For more than a quarter-century, a small corps of journalists have called themselves fact-checkers. They do not cover news events in the first instance. They apply traditional shoe-leather reporting techniques — interviewing people, scrutinizing public records, and, in the modern fashion, surveying the vast internet — to assess the veracity of public claims by politicians, other influential individuals, and digital crowds whose sharing of content makes it “go viral.”

In 2009, PolitiFact, a small nonprofit launched two years earlier by the St. Petersburg Times, won a Pulitzer Prize for examining 750 political claims during the 2008 presidential race and, in the words of the Pulitzer Board, “separating rhetoric from truth to enlighten voters.” PolitiFact’s founders hoped that this new spin on traditional journalism would help revive the news business by demonstrating that familiar news-gathering methods could be applied and digitally disseminated in a way that would arrest the flight of readers and advertisers.

Fact-checking persevered and proliferated modestly. But it didn’t slow the deterioration of the traditional news business. The once highly regarded St. Pete Times bought out a competing paper and changed its name to the Tampa Bay Times, but the combined operation has continued to atrophy. In 2018, the Poynter Institute, a nonprofit journalism school, acquired PolitiFact, which takes philanthropic money and generates revenue from ads on its website and fees for its content paid by corporate customers like Meta and TikTok. Poynter also houses the International Fact-Checking Network, which vets and supports more than 100 fact-checking teams, some tucked into global news organizations like the French news agency AFP, others stand-alone outfits like Snopes in the US, Boom in India, and Rappler in the Philippines.

Fact-checking received an unanticipated economic boost in 2017 as a result of the revelation that Russian operatives had maintained fake accounts on major US-based social media platforms to sow divisiveness in the American electorate and help Donald Trump in the 2016 presidential election. Meta, then known as Facebook, began hiring outside fact-checkers to evaluate a selection of content on the company’s platforms, Facebook, Instagram, and WhatsApp.

Debunking ‘provably false claims’

At its peak, the Meta fact-checking system relied on more than 90 third-party organizations certified by the International Fact-Checking Network. “The focus of the program,” Meta explained in 2021, “is to address viral misinformation — particularly clear hoaxes that have no basis in fact. Fact-checking partners prioritize provably false claims that are timely, trending and consequential.”

Because of the unfathomable ocean of content that washes across social media, fact-checking never pretended to address more than a tiny amount of the potential falsehoods circulating on major platforms. Moreover, fact-checkers never enforced their findings. It was entirely up to the social media companies to act on fact-checks. Meta did so in two main ways: by down-ranking and labeling provably false posts. False content did not disappear, but users were less likely to see it prominently placed in their feeds. When users did encounter the dubious posts, those posts might carry a label linking back to the fact-checkers’ findings.

“We know this program is working,” Meta said in 2021. “People find value in the warning screens we apply to content after a fact-checking partner has rated it. We surveyed people who had seen these warning screens on-platform and found that 74% of people thought they saw the right amount or were open to seeing more false information labels.”

Even as Meta was boasting about its outside fact-checking, the practice got swept up into a broader conservative campaign designed to blur the distinction between truth and falsehood. Led by Trump, the campaign lumped together the mainstream media (“the enemy of the people,” in Trump’s words), social media companies, and ultimately the Biden administration and academic tech policy researchers in what was alleged to be a vast conspiracy to censor the political right and silence its leaders.

Pursued through lawsuits, congressional hearings, and agitation by Elon Musk and other online influencers, the conservative campaign gained considerable traction, even though its proponents never presented factual evidence of a systematic effort to marginalize right-leaning voices. Following the lead of Musk, who rolled back content moderation, including fact-checking, after he acquired Twitter in 2022 and later renamed it X, social media companies diluted or reversed policies designed to reduce misinformation.

Trump’s retaking of the White House spurred Meta’s chief executive, Mark Zuckerberg, to end third-party fact-checking altogether, one of a number of genuflections meant to placate a mercurial leader who had once threatened to throw Zuckerberg in prison for his imagined antagonism toward the MAGA movement.

Zuckerberg and others attacking fact-checking typically mount three arguments, which, when disentangled, turn out to be myths.

Myth #1: Fact-checking censors free speech

In a video justifying ending outside fact-checking and scaling back other forms of content moderation, Zuckerberg associated the changes with Trump’s political victory: “The recent elections,” he said, “feel like a cultural tipping point toward once again prioritizing speech.” In an accompanying corporate blog post, his recently promoted number two executive, Joel Kaplan, a former Republican operative with ties to the Trump administration, said that fact-checking “too often became a tool to censor.”

These statements distort reality. Fact-checking organizations have never “censored” social media content. They provided information that Meta and other platforms could use to prevent the viral spread of what Meta called “clear hoaxes that have no basis in fact” and provided users who found the posts anyway with links back to fact-checkers’ findings. One such clear hoax is Trump’s contention that January 6, 2021, was mostly not violent. You can find the claim on social media without much difficulty, but under its former fact-checking system, Meta would have set its recommendation algorithm to limit the spread of the falsehood while also providing users with an alternative account rooted in the irrefutable, caught-on-video facts of that fateful day.

Back in 2018, Renée DiResta, now an associate research professor of public policy at Georgetown University, responded to politicians and pundits she described as miscasting content moderation as the demise of free speech online. She reminded these critics that on social media, “free speech does not mean free reach.” Her admonition still rings true.

Fact-checking is, in fact, an exceedingly modest, partial response to a central technological characteristic of social media: As DiResta noted, the algorithms that rank and recommend content — essentially determining which posts are seen by millions and which languish in obscurity — are designed to keep people watching and engaging (sharing, commenting, and liking or disliking). Social media companies tune their algorithms to prioritize screen time and engagement because advertisers rely on these metrics to parcel out the ad revenue that makes the companies so lucrative.

Unfortunately, sensational, hateful, divisive, and just plain false content generates extended screen time and engagement. Social media companies know this, but they have never significantly altered the technology that has made so many of their executives multimillionaires and even billionaires. Instead, in years past, they instituted partial measures, like fact-checking and automated content filtering, which removes false information, some forms of bigotry, and other harmful content. To please President Trump and his supporters, they have now decided to end or reduce even some of these modest measures.

Roger McNamee, the former Silicon Valley financier and mentor-turned-critic of Zuckerberg, once suggested to me over coffee that I should think of social media platforms as arsonists who set wildfires every hour by facilitating the spread of hateful and false content. Then, every afternoon, they try to tamp down a few of the conflagrations by means of fact-checking and other content moderation techniques. Meanwhile, the fires rage on, and the ad dollars continue to flow.

Fact-checking isn’t censorship. It doesn’t eliminate speech. It’s an imperfect attempt to limit the reach of at least a handful of the most potentially harmful claims about political intimidation and violence, public health, and other matters of civic importance.

Myth #2: Fact-checking is biased against conservatives

Trump and his supporters have railed against fact-checkers as part of baseless claims of a broader conspiracy against conservative expression online. Just days after the election last November, incoming Federal Communications Commission Chairman Brendan Carr singled out fact-checkers in a publicly released official letter threatening to investigate what he called a Silicon Valley “censorship cartel.”

Abandoning his earlier denials of partisanship, Zuckerberg now pleads guilty to past liberal partiality. “Fact-checkers,” he said in his video, citing zero evidence, “have just been too politically biased and have destroyed more trust than they created, especially in the US.”

It’s true, as noted in an article published in the journal Nature on January 10, 2025, that potential “misinformation from the political right does get fact-checked and flagged as problematic — on Facebook and other platforms — more often than does misinformation from the left.” But there’s a reason for that, and it isn’t partisan bias.

“It’s largely because the conservative misinformation is the stuff that is being spread more,” Jay Van Bavel, a psychologist at New York University, told Nature. “When one party, at least in the United States, is spreading most of the misinformation, it’s going to look like fact-checks are biased because they’re getting called out way more.”

A peer-reviewed study conducted by researchers from Oxford, Massachusetts Institute of Technology, Yale, and Cornell and published in the same journal last year supported this analysis. The research showed that, although politically conservative users on X were more likely to be suspended from the platform than were liberals, they were also more likely to share information from news sites judged as “low quality” by a bipartisan group of laypeople. (And this is far from the only study finding that conservatives are more likely to share dubious “news.”)

Meta has said that it will solve the unsubstantiated bias problem by replacing outside expert fact-checking with crowdsourced commentary known as “community notes,” a system already in use on X. Skeptics have noted that X’s community notes often never actually get published or descend into partisan bickering. In any event, it’s not clear why Meta needed to kill fact-checking in order to experiment with crowdsourced commentary. The two methods are not mutually exclusive.

Myth #3: Fact-checking just ‘doesn’t work’

This contention takes two forms: that fact-checking is too limited in scope to be meaningful and that it doesn’t change people’s minds. Both versions fail by beginning with faulty premises.

In the wake of Meta’s announcement that it would stop buying any outside fact-checking services, a start-up called NewsGuard Technologies published a report finding that before the shutdown, Meta’s fact-checking program labeled only 14% of the Russian, Chinese, and Iranian “disinformation posts” that NewsGuard itself was able to identify.

NewsGuard is a for-profit analysis shop that, to my eye, does an impressive form of fact-checking that focuses on the reliability of news sites rather than particular posts. For the sake of argument, let’s assume that NewsGuard does more effective fact-checking than the collection of mostly smaller organizations that had been employed by Meta — thus the 14% finding. But NewsGuard’s claim that Meta’s fact-checking only scrapes the surface of the falsehood edifice is a reason to learn from NewsGuard’s example — to do more and better fact-checking, not less.

Revealingly, when Meta was still in the market for outside fact-checking, it wanted nothing to do with NewsGuard, whose co-founder Steven Brill described fruitless sales trips to Meta’s offices in his 2024 book, The Death of Truth. “We didn’t know that they didn’t want to solve the problem we told them we could solve,” Brill wrote. “That problem was their business plan. Misinformation and disinformation were not bugs. They were features.”

Setting NewsGuard’s experience aside, a number of studies have concluded that fact-checking can at least partially reduce misperceptions about false claims. A broad review published in 2020 in the journal Political Communication examined the effectiveness of fact-checking in correcting political misinformation consumed by nearly 21,000 individuals. “Fact-checking has a significantly positive overall influence on political beliefs,” the researchers found. They acknowledged, however, that “the ability to correct political misinformation with fact-checking is substantially attenuated by participants’ preexisting beliefs, ideology, and knowledge.”

But the widely accepted observation that even scrupulous fact-checking may not cause hardened partisans to change their minds on polarizing issues should not be over-interpreted as a reason to abandon fact-checking. That’s because changing people’s minds is the wrong criterion for judging whether fact-checking — or any fact-based journalism — is worth doing.

The point is not to transform Robert F. Kennedy Jr. into a pro-vaccine zealot. The point is to put facts out into the world in hopes that, in the long run and in the aggregate, more factual information will contribute to a healthier debate about important civic issues. Measuring the broader effects of contributing more solid facts to the information ecosystem is difficult, so it generally hasn’t been done by social scientists or anyone else.

However limited it may be, fact-checking sends a signal that there’s a distinction between facts and falsehoods and that everything is not up for grabs simply because powerful people say it is. Meta has scorned and stifled that signal. Others should not follow suit.
