The AI State is a Surveillance State

Eryk Salvaggio / Mar 12, 2025

Eryk Salvaggio is a fellow at Tech Policy Press.

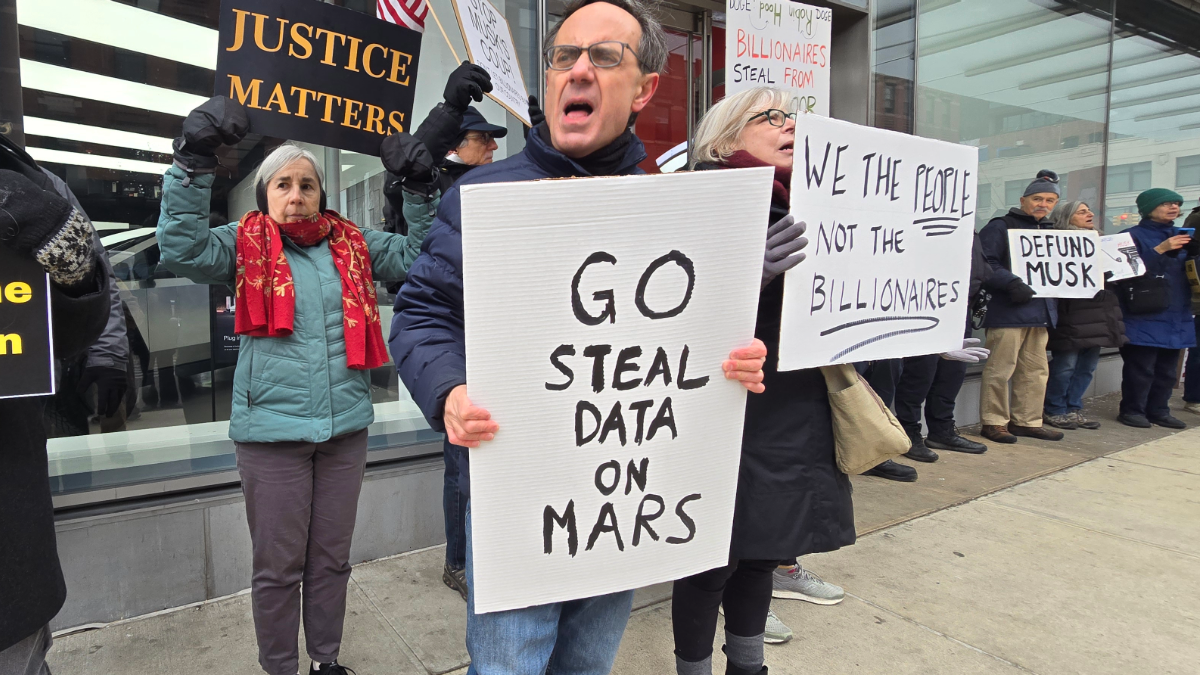

February 15, 2025—Demonstrators gather outside the Tesla showroom in Manhattan, holding signs and engaging in chants protesting Elon Musk, DOGE, and the influence of tech billionaires. Justin Hendrix/Tech Policy Press

It is important to be aware, as we embrace this new technology, that the computer, like the automobile, the skyscraper, and the jet airplane, may have some consequences for American society that we would prefer not to have thrust upon us without warning. Not the least of these is the danger that some record-keeping applications of computers will appear in retrospect to have been oversimplified solutions to complex problems, and that their victims will be some of our most disadvantaged citizens.

These are the words of Caspar Weinberger, President Richard Nixon’s Secretary of Health, Education, and Welfare, in a foreword to a committee report analyzing the rising threat that computer databases posed to the liberty of American citizens. In the report, members described an initial enthusiasm for the role of new computer technologies in the federal government. Such optimism would quickly sour after deeper engagement and case studies of other governments:

There is a growing concern that automated personal data systems present a serious potential for harmful consequences, including infringement of basic liberties. This has led to the belief that special safeguards should be developed to protect against potentially harmful consequences for privacy and due process.

In the end, the committee proposed a set of guiding principles for the management of data in the US government. These principles would inform the creation of the 1974 Privacy Act, which explicitly states that “in order to protect the privacy of individuals identified in information systems maintained by Federal agencies, it is necessary and proper for the Congress to regulate the collection, maintenance, use, and dissemination of information by such agencies.”

The resulting Privacy Act established firewalls between government agencies to prevent the sharing of information between departments without clear justification and oversight. Despite the Nixon administration's best efforts, the consolidation of private data between agencies, in service to the automation of administrative decision-making, appears to be well underway in 2025. A reader with some time might compare the long list of DOGE data consolidation efforts against the policy guidance of the HEW report to see where they conflict: a recent NPR report lists the agencies with personal data that DOGE has accessed.

Surveillance is Anonymity

Since the Trump administration was sworn into office, it has expanded the scale and pace of political offloading to artificial intelligence, assigning tasks once under the purview of elected officials to automated systems. DOGE announced it would use a Large Language Model to analyze mandatory weekly surveys of federal employees to determine who would keep their jobs. Wired reports that DOGE is working on software to automate firings, with sources at the CDC confirming that their human-vetted list of essential employees was completely disregarded. TechCrunch reports that a recently surfaced AI assistant developed by DOGE insiders, since removed after its discovery, was trained on Musk’s Grok language model.

Decisions about the careers of government employees are merely one prong of the attack. Shortly after Secretary of State Marco Rubio announced that AI would be used to analyze social media accounts of all foreign nationals identified as sharing “terrorist sympathies,” ICE agents arrested Mahmoud Khalil, a Palestinian student who mediated between protest groups and Columbia University officials during sit-ins last year, threatening him with deportation. Khalil has said the case was built around social media reports he didn’t write.

This pairs with a broader executive order asserting that “the United States must ensure that admitted aliens and aliens otherwise already present in the United States do not bear hostile attitudes toward its citizens, culture, government, institutions, or founding principles.” The order leaves “hostile attitudes” ill-defined, and the phrase appears to encroach on a number of free speech protections. Given the administration’s self-declared mandate to end “woke,” one wonders what boundaries separate hateful ideas from ideas President Trump simply hates.

Describing the technologies that make all of this possible as “AI” masks what they really are: government surveillance targeting free speech. In a 2022 keynote talk, I described an Orwellian adage of the social media era: “Surveillance is anonymity.” If everyone is being monitored, this thinking goes, why would anybody be singled out? This false sense of security was built on the bargain that services provided in exchange for our data were worthwhile. Today, the government’s use of that data threatens to deny rights while slashing government services, and the risk of being singled out hovers over anyone who disagrees with the administration.

Over time, corporations developed technologies to collect and analyze our data, and private datasets were bought, sold, and cross-referenced. Today, links between these private datasets and government records, including taxpayer data from the IRS, introduce radical new ways to make inferences about our words and deeds.

The Fraud Fraud

Today it is much easier for computer-based record keeping to affect people than for people to affect computer-based record keeping. — The HEW Report

While DOGE initially operated under a memorandum stating it would not pursue individual taxpayer records, it has nonetheless asserted rights to that information in the name of finding fraud. “We meet late into the night in his office,” Speaker of the House Mike Johnson (R-LA) recently told Meet the Press. “What he's finding with his algorithms crawling through the data of the Social Security system is enormous amounts of fraud, waste, and abuse.”

With “fraud and waste” emerging as a justification for further surveillance, we should ask how exactly these algorithms are identifying such abuse. Determining fraud requires careful attention to agreements and analysis of evidence, as well as checking receipts against spending limits. Statistical analysis alone cannot establish fraud: it typically raises the first red flag of an investigation, not the final determination. Even small online retailers do not use state-of-the-art algorithmic fraud detection in isolation. Doing so for something as vast and complex as the federal government is nonsense.

It is even more dubious when the numbers DOGE presents are themselves misleading. In one case, a $25 million contract was counted four times. In other cases, DOGE counted an entire budget when only part of the funding was revoked. One reported cut, an $8 billion contract, was in fact worth $8 million. Shockingly, we know of these errors only because DOGE admitted to them. Without any real oversight, our insight is limited to what Musk admits.

As I have written before, AI is less a technology than an excuse: it offers a temporary placeholder for critical thought while people do precisely what they want, whether that means shrinking government services, dismantling the social safety net, or punishing political enemies. When AI is blamed, accountability is displaced. Speaker Johnson says “the algorithms” are finding the waste. But AI is a puppet, printing text in response to the words we feed it. Nonetheless, we are captivated when we can’t see the strings.

If the agency upending a nation’s expectations of data privacy isn’t even finding fraud, then what, we might ask, is the actual point?

The New Surveillance State

While much of the HEW report seems strikingly contemporary, one passage seems, sadly, out of date: its reassurance that no one could yet create a dossier on anyone, anywhere, with a few clicks. The authors feared that “combining bits and pieces of personal data from various records is one way to create an intelligence record, or dossier,” but they stated that no technical structure existed to build such a system. That absence would protect us, temporarily, from such widespread automated surveillance and the mass retrieval of personal information.

In the age of multi-billion-parameter models trained on massive datasets, this assertion no longer holds. We should be clear that artificial intelligence is a product derived from datasets. When we consolidate multi-agency government data into a single point of reference, such as a Large Language Model, we are by definition creating a single government database that connects the datasets of independent agencies.

Any AI model trained on government data is a central repository of data, and the more information consolidated within the model, the greater the violation of privacy rights it represents. Prediction errors and statistical mistakes threaten our daily lives: like mirages in the desert of abstract data, so-called “hallucinations” can create false justifications for selective or targeted enforcement.

This would be a new level of government surveillance, tying the tactics of surveillance capitalism to the punitive power of the US government. This is not a conspiracy theory, and no coordinated conspiracy needs to exist for it to merit concern. The presence of the apparatus is a concern unto itself. With a court order restricting access to Treasury Department data rescinded this week, DOGE now has access to even more sensitive, private information about US citizens. In issuing that order, the judge pointed to a lack of evidence that meaningful harms have materialized: “Merely asserting that the Treasury DOGE Team’s operations increase the risk of a catastrophic data breach or public disclosure of sensitive information ... is not sufficient to support a preliminary injunction,” US District Judge Colleen Kollar-Kotelly wrote.

However sympathetic courts may be to the risks, the law understandably cannot act in anticipation of abuses. But we should be vigilant for what such abuses could look like.

Privacy Revisited

In the 1973 HEW report cited above, titled “Records, Computers, and the Rights of Citizens,” the authors warned of two abuses emerging from data consolidation.

First, consolidating data across government agencies could contribute to “dragnet behavior,” which they defined as “any systematic screening of all members of a population in order to discover a few members with specified characteristics.” This entails not only errors, such as searching for one name and finding another, but also using shared databases to triangulate people with certain characteristics or behaviors in order to subject them to greater scrutiny. Rather than being flagged for suspicious behavior, they are scrutinized for unrelated characteristics in a search for suspicious behavior.

Second, the HEW report noted that unauthorized access to government data was a technical and security concern. More worrisome, the authors said, was “how to prevent ‘authorized’ access for ‘unauthorized’ purposes, since most leakage of data from personal data systems, both automated and manual, appears to result from improper actions of employees either bribed to obtain information, or supplying it to outsiders under a ‘buddy system’ arrangement.”

The “deep state” has, in many ways, become a “buddy system state” ruled by loosely affiliated tech titans and venture capitalists. This “buddy system” can be taken literally. In a declaration by Tiffany Flick, a Social Security Administration official who retired after handling DOGE requests, Flick states that DOGE employee Akash Bobba was demanding access to Social Security data remotely. She was concerned, she said, as “I also understood that Mr. Bobba would not view the data in a secure environment because he was living and working at the Office of Personnel Management around other DOGE, White House, and/or OPM employees.”

The dossier function of linked data is all the more concerning when the people with access to the data are working and reportedly living together in a General Services Administration building. But even without such an egregious “buddy system state” in place, the mere existence of an insecure data pipeline should give us pause. A system linking the views expressed on an individual’s social media accounts to the platforms gathering government data — most recently, child support payments — is an immediate threat to the freedom to express ourselves and live without fear of government interference in that expression.

Through fear of service denials, investigations, targeted audits, and other potential abuses, the existence of this apparatus leads citizens to curtail constitutionally protected speech acts. It creates a situation in which citizens reasonably fear that their speech will lead to a suspension of rights, a denial of services, or other risks that threaten democratic participation and debate.

What is clear, however, is that the moment such an incursion into rights can be articulated, there is a vast body of legal precedent under the Privacy Act that can be brought forward in response. For that reason, Americans need more, not less, public expression of diverse ideas and robust rebuttals to the intrusion of this public-private partnership into our civic life.
