Sure, No One Knows What Happens Next. But Past Is Prologue When It Comes To AI

Emily Tavoulareas / Apr 2, 2024

OpenAI CEO Sam Altman attends the artificial intelligence (AI) Revolution Forum in Taipei on September 25, 2023. Jameson Wu/Shutterstock

Last month, OpenAI’s ChatGPT began responding with nonsense, and no one could explain why. The internet did its thing, and people poked fun at it, argued with each other, and then over-intellectualized what it means. Amid the chatter and the noise, an article in Fast Company by Chris Stokel-Walker titled “ChatGPT Is Behaving Weirdly (and You’re Probably Reading Too Much Into It)” caught my eye, and then promptly raised my blood pressure.

The article makes great points about the absurd humanization of the chatbot. It is directed at people who read the bizarre outputs of ChatGPT as an indicator of sentience and the coming robot apocalypse. I enjoyed the pushback. However, it’s not the robot apocalypse that I am concerned about; I am concerned because people and organizations are integrating LLMs and chatbots into their lives, workflows, products, and services as if this new generation of AI is reliable technology. It is not.

“A barely useful cell phone.”

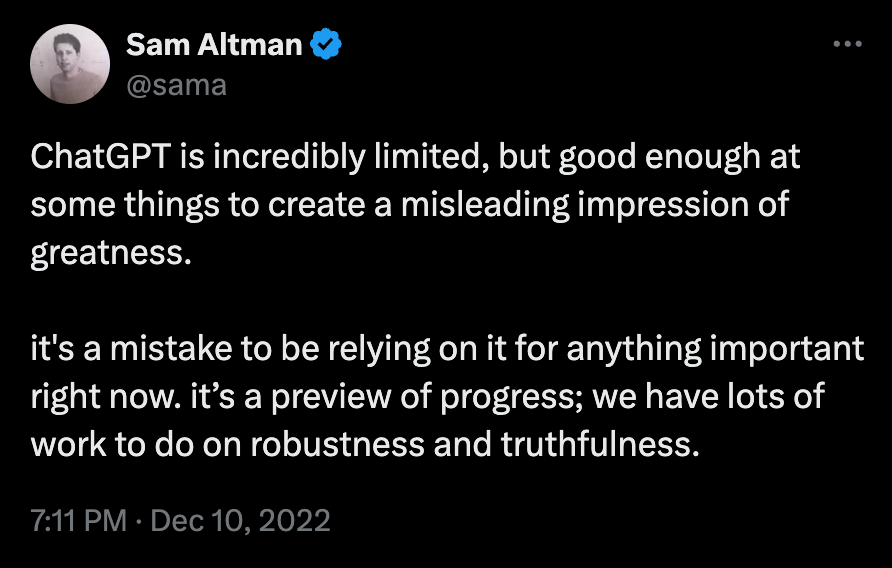

One reason there is confusion in the marketplace is that companies like OpenAI send mixed messages about the reliability of their products. In February Sam Altman, CEO of OpenAI, said that ChatGPT is like a “barely useful cell phone.” He added that in about ten years, it will be "pretty remarkable." While my instinct is to say Oh really? Perhaps you should have said that sooner…, it’s not the first time he has made a statement like this. Just after ChatGPT launched, Altman posted this statement to Twitter (now X):

Yet just a few months later, OpenAI released an API enabling any developer to integrate ChatGPT instantly, and Altman went on a world tour convincing world leaders and the public to embrace the technology and help shape it, or get left behind. The hype cycle around generative AI – and statements about existential risk from experts – created a sense of panic, and that panic has large dollar signs attached to it.

To be fair, perhaps it is unavoidable that AI developers must walk a fine line between hyping what is possible in the future and acknowledging the limitations of the technology today. They are working on a novel technology. It is emerging, it is experimental, and there are many problems with it. Of course there will be errors, lots of them. That is what happens in product development.

That is also why a basic best practice of product development is to slowly increase access so the product team can effectively respond to how it’s being used. A metaphor I often use for this is plumbing in a house. You want to test the pipes before you close the walls, so you turn on the water SLOWLY and look for leaks. With the release of its API, OpenAI basically connected the pipes to a firehose.

Of course, it is not surprising to find companies chasing the market. What is baffling is that people and organizations working in the public trust are scrambling to integrate (not just experiment with, but integrate) technology that is, in the words of OpenAI's own CEO, “a barely useful cell phone” into some of our most foundational and critical systems: education, the military, caretaking, and healthcare.

I am not saying don’t innovate. I am not saying don’t dream and experiment and pilot new approaches to stay current with developments. What I am saying is be clear-eyed about the fact that LLMs and the products they power (like ChatGPT and Bard) are an emerging and experimental technology. This is not a critique; it’s an empirical reality, and one that early adopters must take to heart, especially those working in the public trust, caregiving, and high-stakes scenarios.

Earlier this year a new study found that large language models used widely for medical assessments cannot back up the claims that they make. Yet the FDA has approved over 700 AI medical devices. In New York City, a government chatbot designed to provide information on housing policy, worker rights, and rules for businesses was found to supply inaccurate information and encourage illegal actions. And a recent experiment with five leading chatbots found them to deliver false or misleading election information nearly half the time. Why are we rushing to use emerging and experimental technology in such high-stakes settings?

The bottom line is that no one knows how this technology works. In his Fast Company article, Stokel-Walker reports that Sasha Luccioni, of the AI company Hugging Face, “admits it’s hard to say what’s going on—one of the things about AI systems is that even their creators don’t always know what goes on under the hood.” In MIT’s The Algorithm, Melissa Heikkila spells it out: “Because of their unpredictability, out-of-control biases, security vulnerabilities, and propensity to make things up, their usefulness is extremely limited.”

“No-one knows what happens next.”

Above his desk, Altman reportedly has a sign that reads: "No-one knows what happens next."

While this is true, it is also true that the past is often prologue — at least in terms of how “disruptive” technology will play out in real-world settings. If we want a preview of what’s coming, all we need to do is look at the recent past. After several cycles of “disruptive” technology, I think we can safely say that the potential of new technology often breaks down in its implementation.

Let’s go with a somewhat mundane example: automatic sinks. Is there potential for these to be useful? Sure. How often do I find an automatic sink that actually works? Rarely. Am I constantly grumbling like a Luddite as I wave my hands around, trying to convince a sink that my hands are ready for water, and then wincing when it finally turns on because the water is either ice cold or scalding hot? All the time.

We see this everywhere with the technology we already have: from AI that can’t read dark skin tones to software errors that led to the wrongful imprisonment of postal workers in the UK, and from an over-reliance on predictive algorithms when deciding whether to send people to jail, to iPads that, in practice, replace teaching rather than supplement it.

So much of how a technology (or anything) works in the world is shaped by a combination of its design, human behavior, and the environment it is embedded in: how the technology performs in that environment, and how people actually use it. But something we often overlook is the capacity of institutions and organizations to implement and manage the technology effectively.

As Nancy Leveson, a professor of aeronautics and astronautics at MIT who specializes in software safety, said years ago: “The problem is that we are attempting to build systems that are beyond our ability to intellectually manage.”

Amen to that, with an addendum: we are adopting technology beyond our ability to implement safely or even effectively. Perhaps we can’t tell the future, but executives, policymakers, and decision makers across every sector of society should acknowledge the lessons of the past, and take care not to repeat our mistakes.
