Navigating Trust in Transformative Technologies

Manuel Gustavo Isaac / Jan 17, 2025

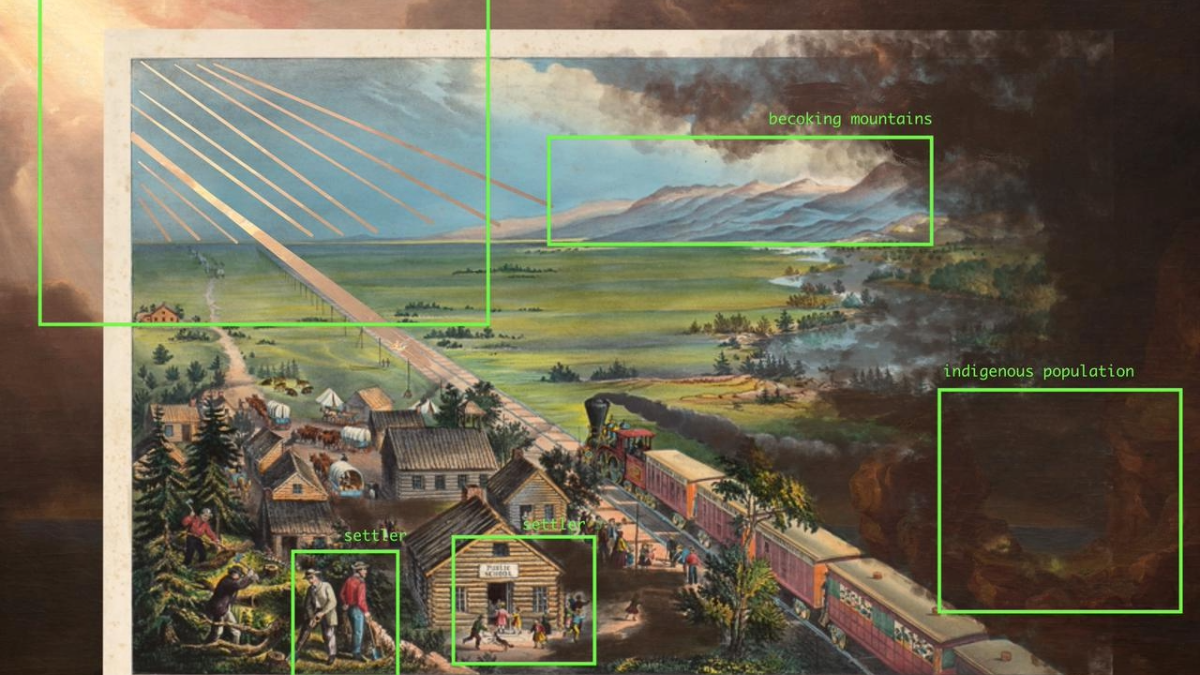

Hanna Barakat + AIxDESIGN & Archival Images of AI / Better Images of AI / Frontier Models 1 / CC-BY 4.0

As transformative technologies reshape our daily lives and environments, they tend to generate conceptual uncertainties, forcing us to question how familiar concepts apply to novel situations. They are, in essence, ‘conceptually disruptive.’

Conceptually Disruptive Technologies

Take today’s LLM-based chatbots like ChatGPT, Claude, or Gemini, for instance. Are we willing to grant their generative outputs a form of creativity? A large swath of the ever-increasing copyright infringement lawsuits revolve around this question, with dozens of actions filed against Google, Microsoft, OpenAI, and others in 2024, according to GWU’s AI Litigation Database.

Or consider decision-making systems with medium to high action autonomy, known in technical terms as ‘human-on-the-loop’ and ‘human-out-of-the-loop’ systems, respectively. How do we attribute responsibility for the outcomes of these systems’ decisions? The answer will partly hinge on how we construe the notion of control that underpins responsibility attributions. For example, a strict notion of control requiring direct human causal influence over system outputs creates ‘responsibility gaps’ in autonomous decision-making systems. This conundrum will become a critical liability issue as, for instance, car manufacturers advance toward higher SAE levels of driving automation.

Such conceptual disruptions are not merely terminological quibbles. How we contend with them fundamentally shapes the responsible integration and use of innovative and potentially disruptive technologies. Nowhere is this more evident than with the notion of trust embedded in ‘trustworthy technologies’ applied to artificial intelligence (AI) systems — ‘trustworthy AI,’ for short.

The Many Shades of Trust

Trust, when examined closely, reveals two distinct conceptions: one moral, referring to our reliance on others based on some expected values-based behavior, like honesty or benevolence, and one epistemic, referring to our reliance on others’ knowledge or expertise based on expected truthfulness. Notably, the moral conception involves a notion of moral agency that many would hesitate to bestow on AI systems, while the epistemic conception better captures the gist of humans’ reliance on these systems.

Within human-AI interactions, the epistemic conception of trust unveils yet another crucial distinction, this time between individual and collective trust. Individual trust operates in one-to-one relationships, while collective trust emerges in one-to-many or many-to-many relationships. As any careful operationalization of trustworthiness — through lenses of transparency, explainability, and accuracy — demonstrates, the conception of trust at stake in human-AI interactions extends beyond the single technical device (that is, an AI model) to encompass its whole socio-technical system (including the socio-economic context, people, and the environment alongside data, input, tasks, and outputs as per OECD’s classification). In other words, we confront a collective, not inter-individual, conception of trust in human-AI interactions.

Moreover, operationalizations of the epistemic and collective conception of trust also show us that it transcends mere AI outputs (such as predictions, recommendations, or decisions) to include the entire process generating these outputs and their systemic impact across the AI lifecycle. This multifaceted nature of trust sets the stage for a deeper problem.

A Fragmented Trust?

Like light through a prism, our simplified analysis of trust in ‘trustworthy AI’ yields a two-by-two-by-two array along three dimensions: moral vs. epistemic, individual vs. collective, and output-focused vs. process-inclusive. Yet of the eight resulting conceptions of trust, only one (the epistemic, collective, process-inclusive conception) appropriately applies to human-AI interactions.
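To make the count concrete, the three binary dimensions can be enumerated mechanically. The following is only an illustrative sketch: the dimension values come from the text, while the keys `basis`, `scope`, and `focus` and the names `DIMENSIONS` and `APPLICABLE` are labels assumed here for convenience.

```python
from itertools import product

# The three binary dimensions named in the text.
# The keys ("basis", "scope", "focus") are illustrative labels, not the author's terms.
DIMENSIONS = {
    "basis": ("moral", "epistemic"),
    "scope": ("individual", "collective"),
    "focus": ("output-focused", "process-inclusive"),
}

# The single conception the article argues applies to human-AI interactions.
APPLICABLE = ("epistemic", "collective", "process-inclusive")

# Cartesian product of the three dimensions: 2 x 2 x 2 combinations.
conceptions = list(product(*DIMENSIONS.values()))

for conception in conceptions:
    marker = "*" if conception == APPLICABLE else " "
    print(marker, ", ".join(conception))

print(f"{len(conceptions)} conceptions in total")
```

The starred row marks the one combination the argument retains. The other seven are the readings that stakeholders may nonetheless bring to the same word.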

This kind of conceptual fragmentation poses immediate practical challenges for organizations and individuals to understand, prepare for, and leverage transformative technologies. Crucially, it is bound to breed misalignments between stakeholders who attach different meanings to the same term. In the present case, such disconnects emerge when, for instance, AI developers or deployers employ trust in an epistemic, collective, process-inclusive sense while end-users interpret it through a moral, individual, output-focused lens.

Here lies the critical tension: trust in the moral, individual, output-focused sense not only disregards but actively resists transparency, resting instead on an ‘unquestioning attitude’ from the trustor. Such a mindset manifests in a recent Capgemini report finding that 73% of consumers worldwide “trust” AI-generated content, while 49% remain unconcerned about its potential for creating fake news. This stance contradicts the empowering aspiration driving ‘trustworthy AI’ since its introduction by the EU AI HLEG to foster technological innovation benefiting all. Instead, it triggers a misguided framing of the human-AI interaction, potentially fomenting detrimental narratives about technology betraying us and thus catalyzing massive societal rejection, as suggested by the 2024 Edelman Trust Barometer’s 28-nation survey.

Toward Conceptual Alignments

Where does that leave us? While ‘trustworthiness’ terminology is too deeply entrenched in technology contexts to change, understanding its complexities enables thoughtful application. Success in building trustworthiness will require inclusive and participatory conceptual alignments among stakeholders across the value chain to ensure responsible innovation where transformative technologies serve society’s interests. This starts with making our conceptual frameworks explicit without presupposing any common understanding. Once established, trustworthiness will, in turn, foster society’s trust in technologies. Such virtuous self-reinforcement will prove pivotal in shaping societies’ adoption and integration of future transformative technologies.
