Imagining Our AI Future to Inform Today’s Policy Discussions

Henry Lee / Jan 23, 2025

Clarote & AI4Media / Better Images of AI / AI Mural / CC-BY 4.0

Henry Lee was a Fall 2024 visiting fellow at the Georgetown McCourt School of Public Policy.

Introduction

Policymakers navigating the complex risks and benefits of a rapidly changing technology landscape over the next few decades should embrace Futures studies as a crucial wayfinding tool. As we face major changes in our geopolitical systems, as well as potential crises in climate and public health, whether, when, and how we regulate core technologies like artificial intelligence will fundamentally shape what lies ahead for humanity.

Futures studies, or Futures, encourage a proactive rather than reactive stance. Practitioners deeply consider possible paths ahead and then design ideas and policies that anticipate world events rather than merely respond to them. As a discipline, Futures is a creative process of imagining what the future may look like and understanding how we might best prepare for it, through a wide variety of forecasting and strategic foresight methods. This creative process places a heavy emphasis on the plural "futures," as opposed to a single future. It also emphasizes transdisciplinarity: just as there are many possible outcomes, no single discipline can respond to all anticipated challenges, which are often intertwined with each other.

Understanding and applying Futures will equip policymakers grappling with artificial intelligence with specific tools, like the ones discussed below, to anticipate long-term challenges and opportunities.

Futures methods have been used in AI policymaking before. In a recent example, the Organisation for Economic Co-operation and Development (OECD) released a report in November 2024 titled Assessing Potential Future Artificial Intelligence Risks, Benefits, and Policy Imperatives, which was jointly supported by its Strategic Foresight Unit (SFU). The OECD website states that “...foresight cultivates the capacity to anticipate alternative futures and an ability to imagine multiple and non-linear possible consequences.”

The report identifies ten future risks and benefits, along with ten policy suggestions. It also states that “Governments are working to build strategic foresight capacities… This is critical given the rapid development of AI, its unknowns and the potential costs of falling behind.” Indeed, AI is outpacing our ability to govern it. It is critical that a more agile approach like Futures be applied to AI, so that creative minds across disciplines can be harnessed to get ahead of, or at least keep pace with, its whirlwind evolution.

To illustrate how Futures can be applied to AI forecasting in practice, this article draws on workshops that employed Futures methods, including two at Georgetown University described below; others were held with private clients. We hope policymakers, as well as future policy leaders, will see these as models for their work.

Workshop 1 - Futures Cone

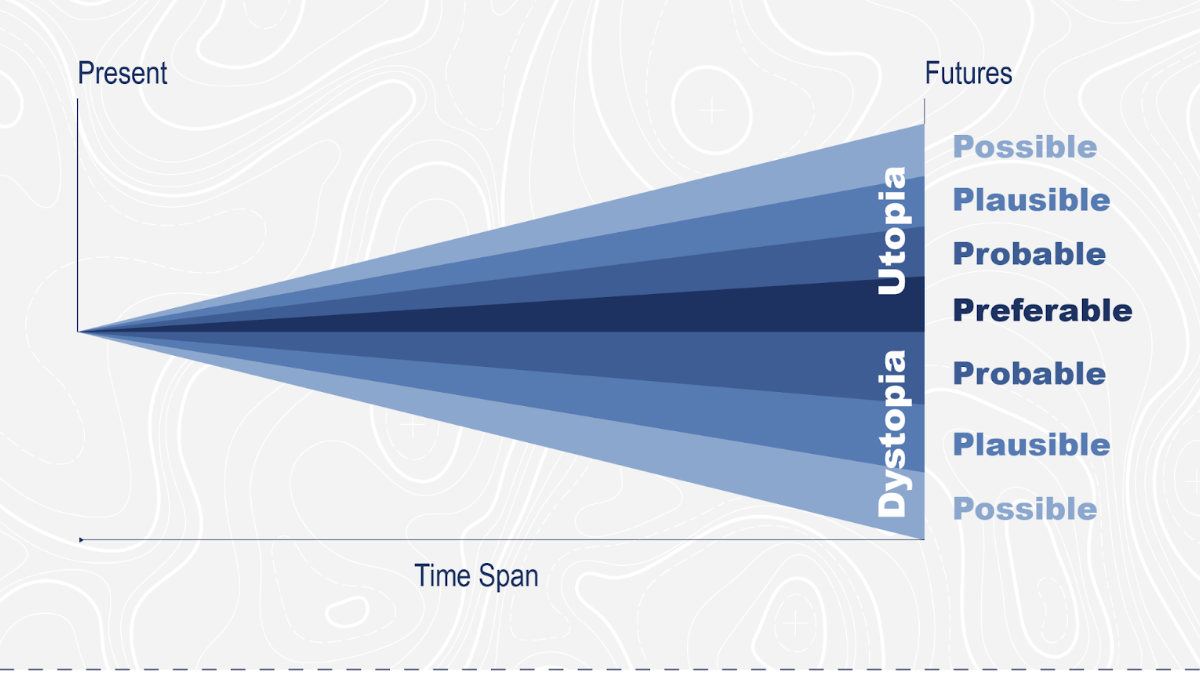

One proven Futures method is the 'Futures Cone,' which represents the outlook that Futures practitioners hold and apply. The Cone, shown in Figure 1, visualizes four kinds of futures emanating from the present: possible, plausible, probable, and preferable. More complex cones, like the one in Figure 1, also split into future utopias or dystopias. In short, the user of the cone chooses a time frame, usually somewhere between 5 and 50 years, and then maps out multiple possible futures in relation to a specific topic.

Figure 1 - 'Futures Cone' visualizing four kinds of possible futures

A recent workshop I developed with a colleague, in my position as Tech & Public Policy Visiting Fellow at Georgetown, tasked participants, including students and faculty, with envisioning multiple AI futures based on the four cone types. The essential question was: how can we prepare for the variety of futures that may unfold within the next 5 to 50 years? Participants were then asked to draft basic policies for each of these futures in a short time frame. The result was a series of AI futures with accompanying policies to address the positive and negative developments that might occur. Prototyping and hosting the workshop helped participants prepare for different outcomes, making it a proactive measure rather than a reactive one.

Workshop 2 - Four Societal Arcs

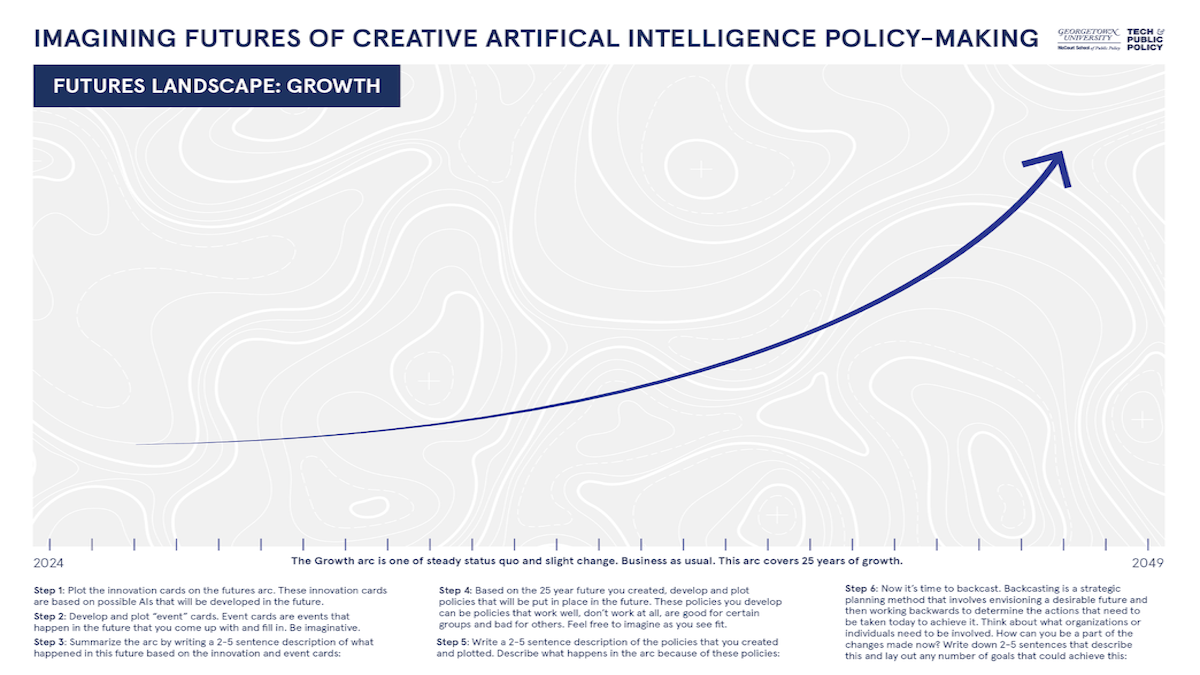

Another workshop I designed for Georgetown employed a mixture of Futures methods: Dator’s and Smart’s Four Futures Arcs, Horizon Scanning (or Signals of Change), and Back-casting. For this activity, I created an exercise using a 25-year future arc. Participants looked at what may happen between now and 2049 under four possible outcomes: societal growth, collapse, continuation, and transformation. Figure 2 shows what one of the four arcs looked like before the activity.

Figure 2 - Growth arc

Working from one of the four arcs, participants were asked to map out scenarios in which AI tools might affect that societal arc. For example, how might an AI that generates images contribute to societal issues surrounding deepfakes (images that appear real but are fake) over the next 25 years? Does it push society toward growth or collapse? Participants were then tasked with writing policies to address these potential AI issues over the same 25-year period, such as a regulation prohibiting deepfakes in political ads. They also had to plot when and how each policy would need to take effect.

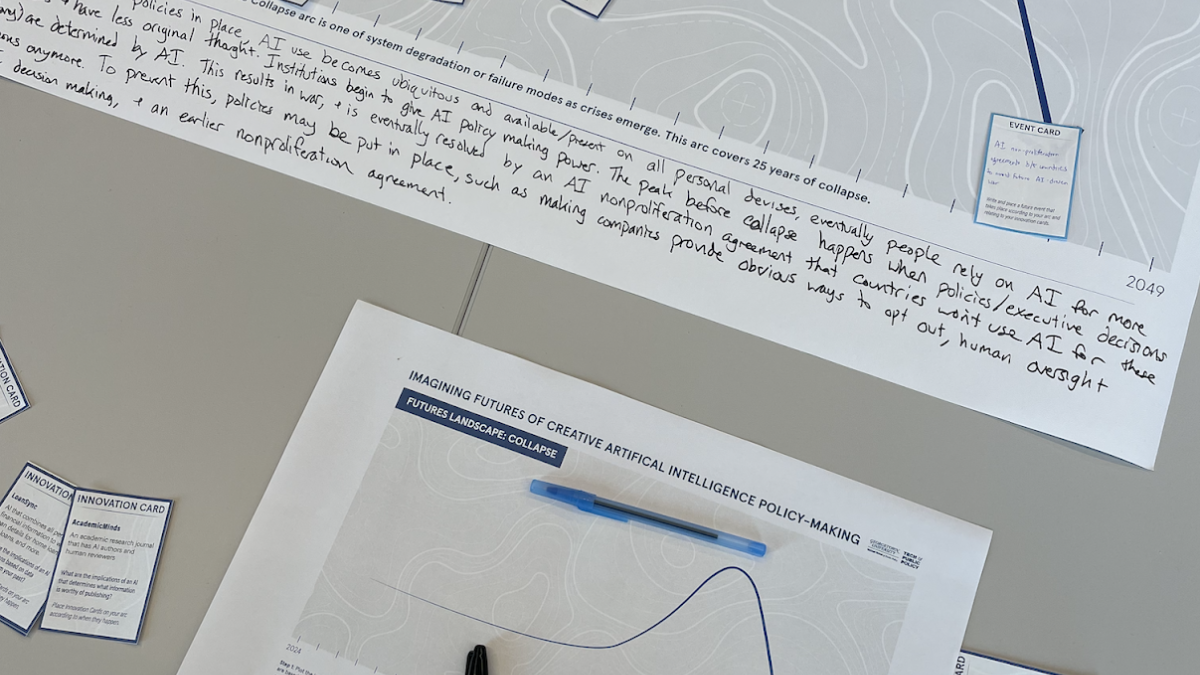

One way to approach this is to imagine how AI images might change over the next 25 years. Will AI systems still produce screen-based or pixel-based images, or will they produce images in 3D or 4D? Will they be able to produce a holographic likeness of a person that is hard to distinguish from an actual human? How will individuals view such images, and how might they affect political advertising or even voting? Figure 3 shows what one of the finished collapse arcs looked like. The workshop helped shift the policymaker participants into a particular mindset. They considered what the future would require and what needs to be done now to achieve that future (a method known as back-casting).

Figure 3 - Collapse Arcs

Conclusion

These workshops are simple examples of how to incorporate Futures studies into policymaking related to artificial intelligence, but many other Futures methods can be redesigned for policy purposes. AI is becoming ubiquitous. Over the past few years, I have worked regularly in the field of AI, teaching it, designing it, and using it. It’s essential for creative professionals to consider not just how their work might be ingested by AI, fairly or not, but also how they might apply their creative skills to inform AI policy formation.

Jim Dator, a political scientist and founding futurist, said, “Most people assume there is a single future ‘out there’ that can be accurately identified beforehand. That might have been a reasonable assumption a long time ago, but it is not a good bet now.” Futures practitioners and methods will move AI policy beyond the idea of a single future. That shift lets us prepare for the possible, plausible, and probable worlds that may lie ahead, and perhaps achieve preferable futures.
