How Can Governments Regulate Default Settings to Unlock User-Friendly Features?

Rehan Mirza, Tommaso Giardini, Maria Buza / Jan 14, 2025


Social media platforms offer powerful tools to enhance privacy, content quality, and consumer protection. However, many of these features are turned off by default, leaving users to navigate complex interfaces to access them – if they are even aware they exist.

Given the potential of these features, governments worldwide are stepping in to regulate default settings and users’ ability to modify them. But these regulatory efforts lack coordination and can lead to unintended consequences. To support more international coordination on this issue, Digital Policy Alert drew upon its daily monitoring of developments in the G20 countries to examine how governments regulate default settings and users’ ability to change them. We developed key lessons for governments to consider as they pursue these regulations.

Default settings and complex interfaces obfuscate user-friendly features

Platforms offer user-friendly features that can improve users’ experience – from data protection to content moderation to consumer protection. To protect data, Meta, YouTube, X (formerly Twitter), and TikTok allow users to manage data sharing and targeted advertising. To improve content feeds, Meta platforms (Facebook, Instagram, and Threads) let users adjust settings to reduce “false,” “low-quality,” and “unoriginal” content. To support a healthy online experience, Instagram and TikTok let users limit daily screen time and set prompts to take a break to prevent endless scrolling. (Annex I provides a non-exhaustive overview of these user-friendly features.)

While these user-friendly features hold great potential to address user harms, their impact is often limited by two issues. First, user-friendly features are rarely activated as a default setting. This may reflect a conflict with commercial incentives, as platforms prioritize engagement-driven features that maximize revenue. Meta’s Project Daisy, an initiative to reduce the visibility of likes on user posts, provides a case study. When testing showed positive effects for teens, the team recognized its reputational value. However, the change negatively impacted revenue and engagement metrics. When the feature was launched, it was switched off by default, with Meta emphasizing user choice in its announcement.

Second, complex interfaces limit users’ ability to change default settings. Users accept defaults due to status quo bias. Since users carry the cognitive burden of changing settings with limited time and attention, they rarely follow through. Even if users try to adjust settings, they often face hurdles, including hidden menus, lengthy and confusing descriptions, or disruptive pop-ups. Such “dark patterns” nudge users into taking actions they may not intend to. For instance, the Privacy Commissioner of Canada found that 97% of websites and mobile apps employed deceptive design patterns that undermine privacy (see Figure 1). Annex II provides an example of a path for users to change default data-sharing settings.

Regulations addressing default settings and complex interfaces

Governments worldwide are increasingly recognizing the importance of default settings and complex interfaces on digital platforms and are taking steps to regulate them. Their efforts focus on two key areas: mandatory defaults and user-friendly interfaces. Rules on “mandatory defaults” require platforms to enable user-friendly features by default. Rules on “user-friendly interfaces” empower users to change settings more easily, tackling the issue of complex interfaces. These regulations address user concerns in key areas such as data protection, content moderation, consumer protection, and access restrictions for minors.

Data protection

Data protection rules often mandate defaults for data protection. The European Union’s General Data Protection Regulation enforces principles like data minimization and data protection by default. The United Kingdom’s age-appropriate design code requires platforms and services targeting children to implement high-privacy settings by default. State-level laws in the United States, including in California and Maryland, impose similar requirements. When WhatsApp changed its privacy policy to include data sharing with other Meta platforms, regulators worldwide, including in South Africa and Brazil, raised concerns and alleged that users were coerced to accept new default settings (or lose access to WhatsApp).

Governments also aim to simplify how users can adjust settings, striving for user-friendly interfaces. For instance, the California and Maryland design codes mandate a simplified process to change privacy settings and explicitly prohibit deceptive design practices that nudge users toward reduced privacy. Recently, the Irish Data Protection Commission fined TikTok EUR 345 million for deploying a user interface that nudged users toward privacy-intrusive settings, among other violations.

Data protection rules on default settings and user-friendly interfaces can easily overlap, as evidenced by the EU’s rules on cookies. The European Union’s ePrivacy Directive requires companies to ask users for consent to use cookies, mandating a user-friendly default setting. Companies thus introduced cookie banners, asking users to consent before they could access websites, prompting debates concerning the banners’ compliance with the directive. For example, the Spanish Data Protection Authority recently issued a fine because users could not refuse non-essential cookies with a single click. The European Union is deliberating the ePrivacy Regulation, aiming to replace the ePrivacy Directive, including creating new rules to simplify opt-outs for cookies.

Content moderation

Mandatory defaults on content moderation often target illegal content and the exposure of minors to “lawful but awful” (harmful) content. Rules on illegal content are common around the globe, with recent developments in China, Indonesia, Vietnam, and Turkey. Mandates range from upload filters – the strictest mandatory defaults – to notice-and-takedown procedures to general monitoring obligations. Regarding the protection of minors, the United Kingdom’s Online Safety Act requires websites and platforms to block access to harmful content, such as pornography, for users under 18. Similarly, the European Union’s Digital Services Act requires platforms to restrict minors’ access to inappropriate content. The European Commission is currently investigating the compliance of Snap, TikTok, Meta, Xvideos, Stripchat, and Pornhub with these measures.

For adults, rules emphasize user-friendly interfaces, empowering users to filter harmful or unwanted content. The UK’s Online Safety Act requires platforms to offer controls to filter content from unverified users and customize content based on engagement preferences. China’s regulation on recommendation algorithms gives users the option to turn off algorithmic suggestions altogether. In addition, users can modify the individual tags that influence content recommendations.

One reason why harmful content rules focus on user-friendly interfaces rather than mandatory defaults is free speech. Content moderation by default can be perceived as government overreach under the First Amendment in the United States or raise concerns about government censorship elsewhere. Empowering users to select content controls provides an alternative, less intrusive approach for governments.

Consumer protection

Consumer protection laws that include mandatory defaults to protect users are spreading across the globe. The Republic of Korea’s amended E-Commerce Act bans pre-selected subscriptions and add-ons without consent. India’s guidelines on dark patterns prohibit pre-selected add-ons and subscription options with hidden fees that appear post-transaction. The European Commission recently argued for “positive duties” requiring platforms to enable “consumer safety by default.” The currently deliberated Digital Fairness Act may well formalize such duties.

Governments are also targeting deceptive and complex interfaces. The European Data Protection Board issued guidelines demanding social media platforms use clear language to explain user choices, provide simple controls, and regularly audit user interfaces regarding transparency and functionality. The European Union’s Unfair Commercial Practices Directive prohibits deceptive designs that could harm consumers’ economic interests. The Indian guidelines on dark patterns also prohibit deceptive practices related to user interfaces, such as using language to “shame” a user out of making a particular choice and manipulating user interfaces to highlight or obscure certain information. Finally, the above-mentioned Irish TikTok fine exemplifies the interplay between defaults and interfaces when users first access a platform. The preferences users select at registration become locked in as default settings, so registration interfaces can nudge users towards non-user-friendly features.

Access restrictions for minors

Governments are also increasing their efforts to restrict minors’ access to parts of the internet. Beyond the content restrictions and privacy settings for minors outlined above, certain rules block minors’ access to platforms altogether – through blanket bans or parental controls.

Australia fast-tracked a bill to ban social media for users under 16. Florida enacted a bill prohibiting users under 14 from joining social media, although this measure is being challenged in court. France has adopted legislation requiring social networks to verify users’ age and refuse registration for users below 15 until parental consent is obtained. France is now advocating for the European Union to adopt similar rules. China restricts usage time on gaming and streaming platforms for users under 18 and prohibits users under 16 from registering entirely. Vietnam also requires gaming providers to limit playtime for users under 18.

Other governments pursue an alternative approach, emphasizing user control by putting the responsibility of managing minors’ online activity on parents. The UK’s age-appropriate design code requires platforms to provide parental tools to manage their children’s online activity. Australia and Singapore also require social media platforms to provide parental controls. This approach is also taken by the US Kids Online Safety Act (KOSA), which would require platforms to provide parents with tools to monitor time spent online, toggle categories of permitted content, and see real-time activity.

Regulatory trade-offs and learnings for governments seeking to regulate defaults

The regulation of defaults presents lessons for governments regarding both regulatory diversity and trade-offs between policy objectives. For example, age verification requirements supporting minor protection in different countries vary in scope, verification methods, and even in defining a “minor.” Such divergent regulatory frameworks can create fragmentation by weighing on firms’ bottom line, leading companies to refrain from expansion or to adopt blanket policies. This can lead to unintended consequences, including an “adults-only” internet. In addition, if governments aim to empower users, creating asymmetric protections across borders undermines the universality of this objective. Governments could instead pursue international alignment in recognition that they are addressing the same issues.

The regulation of defaults can also lead to trade-offs between policy objectives. Recent discussions have highlighted how age verification requirements, aiming to protect minors, introduce privacy and security concerns. Apple’s launch of App Tracking Transparency (ATT) underscores a potential conflict between privacy and competition, as it required all iOS apps to ask users, through a pop-up notification, for permission to share their data. As only 4% of US users and 12% of global users opted in, ATT decreased user tracking, advancing the presumed objective of privacy rules. However, ATT exacerbated concerns of market power concentration by advantaging Apple’s digital ad business. Competition authorities in France, Germany, and Italy, among others, promptly launched investigations to analyze whether the conditions imposed on third-party developers favored Apple.

Regulatory diversity and trade-offs between policy areas are often unwanted consequences of regulatory processes. Time-strapped regulators pursue their mandates as best they can, driven by the policy objectives of their domain. However, without a holistic approach, governments will create friction between policy domains – willingly or not. Similarly, governments are regulating defaults unilaterally because they lack a common language and information basis, causing fragmentation. To avoid some of these pitfalls, governments should consider the following findings:

- Mandatory defaults and user-friendly interfaces are different solutions to different problems – albeit with significant overlaps. Mandatory defaults are more direct and thus often used more restrictively. Since defaults are hard to change, mandating one-sided defaults can have unintended consequences.

- Without a holistic approach, well-intentioned default regulations will create conflicts between policy objectives. These trade-offs must be addressed, especially when regulating mandatory defaults.

- On the current path, default regulations will be different across borders, leading to fragmentation. Governments should embrace their shared goals and, by systematizing the issues and recognizing similarities in how others approach each issue, pursue international alignment.

- Default regulations are one of many tools for governments to regulate digital platforms. Despite the similarity with other concerns, such as hidden fees or intrusive pop-ups, defaults demand a distinct approach.

Regulation is an important mechanism but not the only one to unlock user-friendly features. User awareness, platforms, and third-party solutions can also contribute. Block Party’s anti-harassment and privacy plug-ins provide controls to change default settings with minimal user effort. Similarly, the Trust & Safety Tooling Consortium at Columbia aims to design open-source, interoperable solutions that can be adopted across platforms to enhance user safety and transparency.

Annex I: User-friendly features and default settings

This table provides a (non-exhaustive) selection of default settings on social media and other digital platforms for features across privacy, content moderation, and minor protection. The information was verified as of January 8, 2025.

Annex II: A path to change default settings

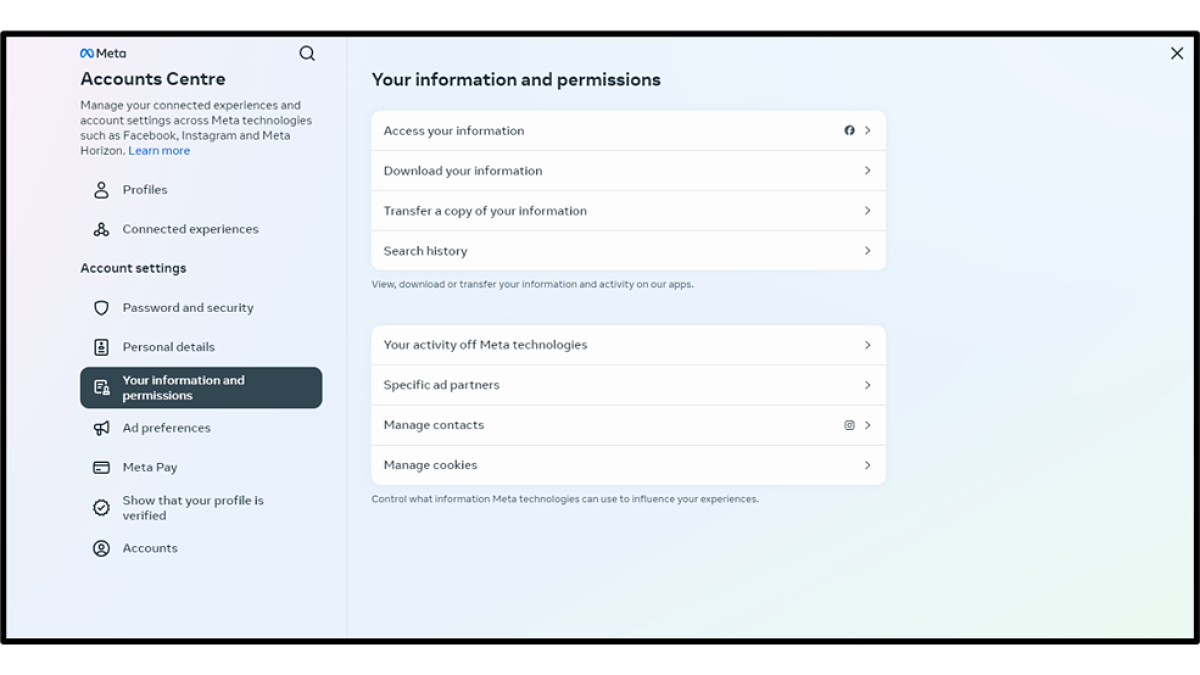

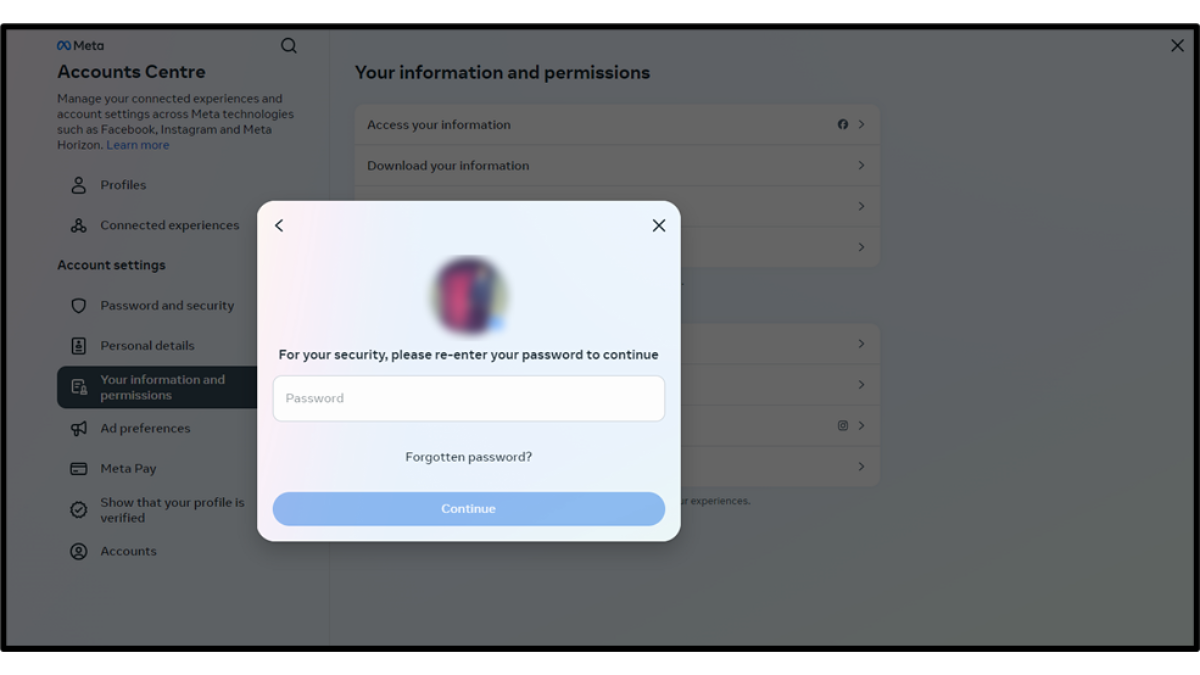

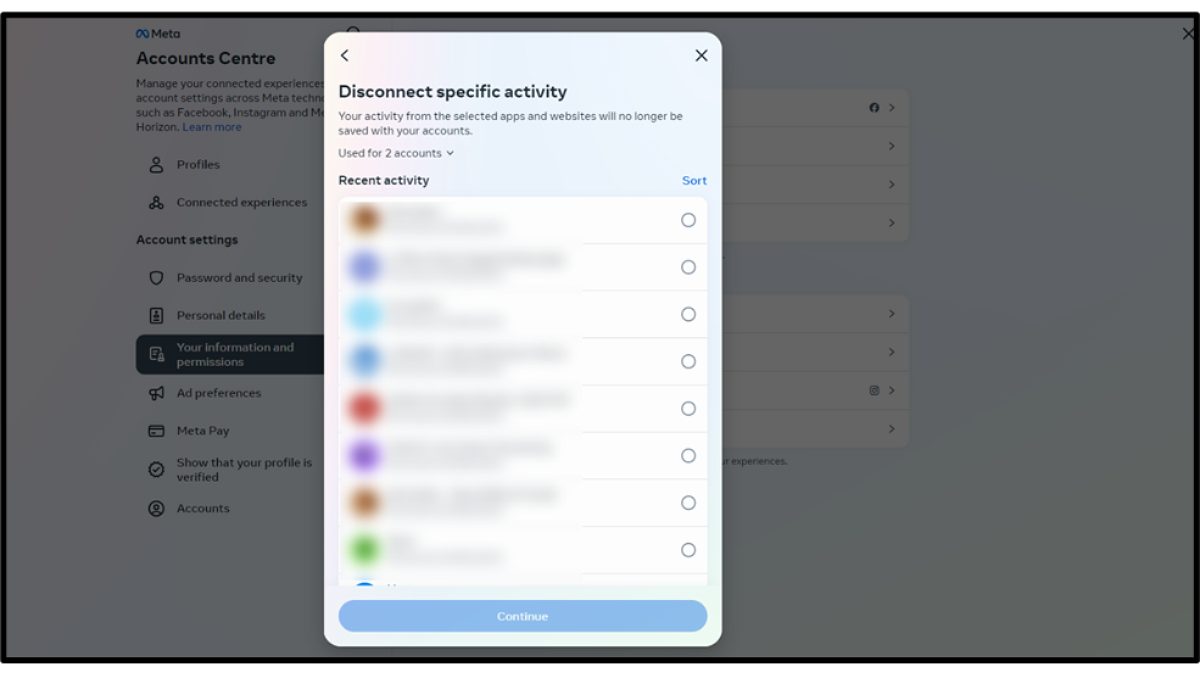

This Annex provides an example of the steps users have to take to change Meta’s default settings on data sharing. We tested the approach in the European Union, United Kingdom, and United States. Screenshots were captured on December 17, 2024.

It takes five steps to start changing the default settings: Facebook landing page → Settings & privacy → Settings → See more in Accounts Centre → Your information and permissions → Your activity off Meta technologies.

The default data sharing settings must be switched off on an individual app basis or by completely switching off data sharing. Users have to digest the settings descriptions to understand what each setting controls, which creates additional cognitive burden.

Re-authentication is required to confirm changes to default settings, for security purposes.
