February 2023 U.S. Tech Policy Roundup

Kennedy Patlan, Rachel Lau, Carly Cramer / Mar 1, 2023

Kennedy Patlan, Rachel Lau, and Carly Cramer are associates at Freedman Consulting, LLC, where they work with leading public interest foundations and nonprofits on technology policy issues.

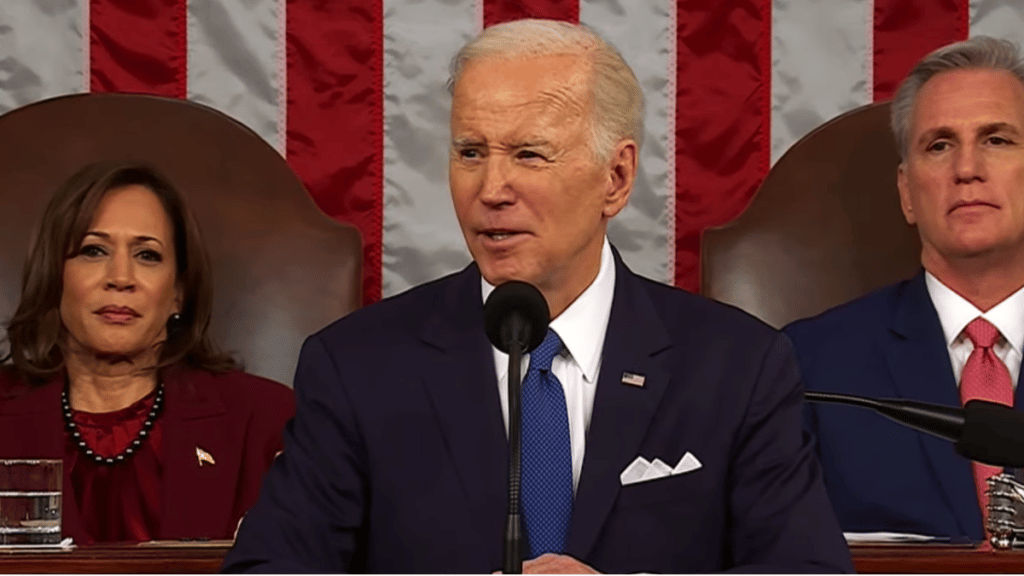

In February, President Joe Biden put tech issues on the national agenda during his second State of the Union address. Meanwhile, there was substantial turnover in key tech policy and regulatory roles at the federal level. Dr. Alondra Nelson stepped down from her role as the deputy director for science and society at the Office of Science & Technology Policy. Gigi Sohn sat for a third confirmation hearing in hopes of finally securing a seat on the Federal Communications Commission. President Biden renominated Federal Trade Commissioner Rebecca Kelly Slaughter to serve a second term, while Republican Commissioner Christine Wilson resigned from her post in protest of Chair Lina Khan’s leadership.

The FTC also announced it will launch a new Office of Technology, which will focus on tech sector oversight and more than double the agency’s technologist capacity. The National Telecommunications and Information Administration (NTIA) released a request for comments that will inform a report on how commercial data practices can lead to inequities for marginalized or disadvantaged communities. Finally, the EEOC made public the comments it received on its draft Strategic Enforcement Plan, including feedback from the ACLU, NAACP, and the Center for Democracy & Technology.

In Congress, lawmakers introduced a wide variety of legislation and advanced several bills through committee action in February. Representatives David Cicilline (D-RI) and Ken Buck (R-CO) announced their plans for the Congressional Antitrust Caucus, a bipartisan effort that will hold hearings, push legislation, and serve as a “brain trust” on antitrust issues in the U.S. The House Oversight and Accountability Committee also held a hearing with Twitter executives to investigate claims of anti-conservative censorship. Meanwhile, the House Energy and Commerce Committee’s Innovation, Data, and Commerce Subcommittee held a markup of five technology bills that seek to address potential security threats posed by China.

In February, the FTC lost its case against Meta regarding the platform’s acquisition of Within Unlimited, a VR content maker. The agency announced it would not appeal. However, the FTC settled with GoodRx, a telehealth platform that the agency alleged had deceptively shared user data with Big Tech companies. The agency also warned AI companies against making deceptive claims about their products’ capabilities and limitations.

TikTok continued to face scrutiny from the Hill, as Senate Majority Leader Chuck Schumer (D-NY) called for Congress to look into a complete ban of the platform and a House committee advanced legislation that could ban it altogether. Senators Richard Blumenthal (D-CT) and Jerry Moran (R-KS) also wrote a letter to Treasury Secretary Janet Yellen demanding that the administration “impose strict structural restrictions” between the company’s U.S. and China operations. Senators Marco Rubio (R-FL) and Angus King (I-ME) reintroduced legislation to ban TikTok across the country, as other Republican policymakers introduced a new but related bill.

The below analysis is based on techpolicytracker.org, where we maintain a comprehensive database of legislation and other public policy proposals related to platforms, artificial intelligence, and relevant tech policy issues.

Read on to learn more about February U.S. tech policy highlights from the White House, Congress, and beyond.

Biden Highlights Tech in the State of the Union

- Summary: President Biden delivered his second State of the Union address in early February, mentioning multiple tech policy issues. Focusing on the impact of social media on the mental health of youth, the President called for better data privacy protection and a ban on targeted online advertising for youth, pushing Congress to “pass bipartisan legislation to stop Big Tech from collecting personal data on our kids and teenagers online, ban targeted advertising to children, and impose stricter limits on the personal data these companies collect on all of us.” Ahead of the speech, the White House released a fact sheet on Biden’s remarks, outlining the president’s call for more robust data privacy and platform transparency. The White House also called out geolocation and health information as especially sensitive data and pushed for data minimization, algorithmic transparency, and limits on targeted advertising in the tech sector.

- On the Hill: Children’s online safety is an issue with bipartisan potential, and Biden’s support potentially strengthens the position of kids’ privacy proposals like the Children and Teens’ Online Privacy Protection Act (COPPA 2.0*) and the Kids Online Safety Act (KOSA*), both of which stalled in Congress last year. Senator Ed Markey (D-MA) plans to continue to spearhead the COPPA 2.0 effort, which seeks to expand existing protections to cover kids 13-16 years old, ban targeted advertising to children, and create a youth-focused division at the Federal Trade Commission. Senators Richard Blumenthal (D-CT) and Marsha Blackburn (R-TN) also plan to reintroduce KOSA in the new Congress, although they face criticism from activists who argue that the bill threatens the online freedom of LGBTQ+ youth and that its provisions could unwittingly harm adults.

- Following the President’s address, the Senate Judiciary Committee held a hearing titled “Protecting Our Children Online,” where child safety advocates testified about the harms and impacts of social media on children and young adults. At the hearing, Committee Chairman Sen. Dick Durbin (D-IL) announced plans to invite tech leaders to share their thoughts on these topics and also pledged to mark up related proposals. The hearing came shortly after Democratic lawmakers introduced the Clean Slate for Kids Online Act (S.395), which would update the Children’s Online Privacy Protection Act of 1998 (COPPA) by creating an enforceable legal right that allows platform users to demand that technology companies delete information collected on them while they were under 13 years old. *Note: COPPA 2.0 and KOSA were introduced during the 117th Congress and will not advance unless they are officially reintroduced in the 118th Congress.

- Stakeholder Response: Representatives from trade associations TechNet and the App Association supported Biden’s statements, praising the push for a federal privacy law. Following Biden’s mention of kids’ online safety in the State of the Union, both COPPA 2.0 and KOSA faced criticism from advocacy groups like Fight for the Future, which argued that Congress should push for federal digital privacy laws that cover all Americans and not just children. Fight for the Future Director Evan Greer argued that lawmakers should pass a robust data privacy law rather than KOSA, which she described as “authoritarian.” As Congress aims to identify a path forward for children’s safety and broader privacy policy online following Biden’s push, some advocates are more optimistic about solutions coming from the states first: “Ultimately, the solution will probably come first from the state level or Europe, as it does for all significant privacy legislation,” said Common Sense Media Chief Executive Jim Steyer. Representatives from The Tech Oversight Project also praised the State of the Union, noting that the speech included the first use of the word “antitrust” in the State of the Union since 1979.

- What We’re Reading: The Washington Post reported on the people and factors at play in the development of tech messaging in the State of the Union. WIRED highlighted the growing public attention on data privacy. John Perrino wrote at Tech Policy Press about President Biden’s mention of kids online safety. CNN examined the ongoing efforts in Congress to pass kids’ online safety legislation. The Washington Post covered progress on children’s privacy policy at the state level.

New Equity Executive Order Tackles AI; Concerns Multiply About Use of Discriminatory Screening Tools

- Summary: On February 16, President Biden issued an Executive Order on Advancing Racial Equity and Support for Underserved Communities Through the Federal Government with major implications for artificial intelligence policy and justice. The new directive, which supplements a day one executive order, contains several key provisions that call on agencies to "root out bias in the design and use of new technologies," "ensure that their respective civil rights offices are consulted on decisions regarding … artificial intelligence and automated systems," and promote the recommendations issued by the Interagency Working Group on Equitable Data. It includes many other provisions involving interagency coordination and implementation of recommendations from the interagency process.

- Stakeholder Response: Maya Wiley, President and CEO of The Leadership Conference on Civil and Human Rights, issued a statement in support of the "order's focus on… addressing bias in algorithms and artificial intelligence, and promoting data equity and transparency." However, she also noted that "executive orders are only as strong as their implementation." The ACLU’s ReNika Moore celebrated the new executive order as a "major step forward," and noted that "critically, it directs agencies to act on modern day tools of discrimination such as artificial intelligence, and calls for increased engagement and coordination with impacted communities." The Electronic Privacy Information Center praised President Biden's decision to include the definition of algorithmic bias set out in his administration's Blueprint for an AI Bill of Rights, which was released last October. Senator Markey (D-MA) issued a statement applauding the order for its focus on algorithmic discrimination, while right-wing activists have referred to the order as “a special mandate for woke AI.”

- What We’re Reading: Bloomberg Law explored how AI’s increasing prevalence will impact criminal justice and civil rights. FCW examined the Executive Order’s potential ramifications for artificial intelligence. Janet Haven, Executive Director of the Data & Society Research Group, evaluated the potential efficacy of the order, pointing to three key limiting factors. Zach Graves, Daniel Schuman, and Marci Harris investigated the potential impacts of emerging AI tools in the legislative branch for Tech Policy Press. Tim Guilliams celebrated the Blueprint for an AI Bill of Rights, but argued in Forbes that the U.S. still has a long way to go in confronting algorithmic bias.

Other AI News

- Representative Ted Lieu (D-CA) introduced the first-ever federal resolution “written” by artificial intelligence, H.Res.66, in late January after asking ChatGPT to compose “a comprehensive congressional resolution generally expressing support for Congress to focus on AI.”

- Senators Markey (D-MA), Jeff Merkley (D-OR), Cory Booker (D-NJ), Elizabeth Warren (D-MA), and Bernie Sanders (I-VT) coauthored a letter to Transportation Security Administration head David Pekoske, calling on the agency to immediately halt its deployment of facial recognition, as "increasing biometric surveillance of Americans by the government represents a risk to civil liberties and privacy rights."

- U.S. Customs and Border Protection’s new mobile app, which is currently the only method for migrants arriving at the U.S. border to apply for asylum, is failing to register many people with darker skin, effectively preventing them from exercising their right to request entry to the United States. Senator Markey sent the agency a letter in late February calling on it to stop using the app.

Big Tech Faces the Supreme Court

- Summary: In late February, Supreme Court justices heard oral arguments in two pivotal tech cases, Gonzalez v. Google and Twitter v. Taamneh, the results of which may shape the nation’s standards for tech accountability and corporate liability. At the forefront of the first case, Gonzalez v. Google, is Section 230, which grants platforms liability protection for third-party content. The plaintiffs, the family and estate of Nohemi Gonzalez, who was killed in the 2015 ISIS attacks in Paris, accuse YouTube (owned by Google) of playing an active role in radicalizing ISIS terrorists through the platform’s recommendation algorithms. In the second case, Twitter v. Taamneh, the social media company is defending itself against potential liability under the Justice Against Sponsors of Terrorism Act (JASTA) over accusations that the platform aided and abetted terrorists through its promotion of ISIS content. The anticipated rulings have tech platforms and tech policy advocates abuzz about what the decisions may mean for the future of internet litigation. Decisions are expected this summer, before the Court’s recess.

- Stakeholder Response: The first oral argument focused on Gonzalez v. Google. Justices appeared to seek a middle ground between the two opposing sides, with some expressing confusion about how the plaintiffs proposed to draw new liability standards for posted content (i.e., the ability to sue individual creators vs. the platform), as they grappled with the major implications a ruling could have for the Internet at large. Justice Elena Kagan acknowledged how times have changed since Section 230’s inception, and Justice Ketanji Brown Jackson argued that the original Section 230 was intended “to be narrower than its interpretations.” Justice Brett Kavanaugh suggested that policymaking on this issue should fall under the purview of Congress and alluded to the breadth of lawsuits that a ruling in favor of the plaintiffs could create. Justice Clarence Thomas sought more insight into policy recommendations before making a decision.

- Tech Policy Press published perspectives on Gonzalez v. Google from ADL, Free Press Action, NYU Stern's Center for Business and Human Rights, Public Knowledge, the Cato Institute, the Center for Democracy and Technology, Common Sense Media, and the Lawyers' Committee for Civil Rights Under the Law. Article 19 legal officer Chantal Joris explained what's at stake in these cases, and Ben Lennett explored why there are no easy answers for the Court. Georgetown professors Anupam Chander and Mary McCord and Lawyers' Committee for Civil Rights Under the Law Managing Attorney David Brody detailed their varied perspectives on the Tech Policy Press podcast.

- During the second argument, in Twitter v. Taamneh, the Associated Press reported that justices appeared to be leaning in favor of Twitter. Justices Amy Coney Barrett and Neil Gorsuch each sought more details on how the plaintiffs’ claims met the requirements of the Justice Against Sponsors of Terrorism Act, while Justices Samuel Alito and Kavanaugh discussed the implications for businesses that offer services on a “widely available basis,” including telephone companies. However, Justice Kagan drew a parallel to banks, which are often held accountable for facilitating criminal activity. She acknowledged that the Court may not be used to the idea that “social media platforms provide important services to terrorists,” even though other services are often held accountable under similar circumstances.

- What We’re Reading: The New York Times dedicated an episode of The Daily to discussing the potential implications of Gonzalez v. Google. The Washington Post and The Verge provided real-time updates on each case. CBS News gathered opinions on the issue from tech advocates and elected officials. Vox discussed the Supreme Court’s apparent confusion about how to handle the Twitter v. Taamneh case.

Other Legislation and Policy Updates

The following bill passed the House this month:

- Informing Consumers about Smart Devices Act (H.R. 538, sponsored by Rep. John Curtis (R-UT) and Rep. Seth Moulton (D-MA)): Introduced last month, this act would require manufacturers to disclose when internet-connected devices contain cameras or microphones. The House passed the act in February. The Senate companion bill, S. 90, was introduced by Sen. Ted Cruz (R-TX) and co-sponsored by Sen. Maria Cantwell (D-WA) in January.

The following bills were introduced and marked up in committee in February:

- Deterring America’s Technological Adversaries (DATA) Act (H.R. 1153, sponsored by Rep. Michael McCaul (R-TX)): The DATA Act cites concerns about the potential national security threat posed by TikTok and parent company ByteDance, requiring the Biden Administration to investigate the company for knowingly transferring user data to any “foreign person” under Beijing’s influence and to impose penalties up to a ban if such a transfer is discovered. The act would mandate sanctions if the companies are found to have helped Beijing influence U.S. elections or policymaking. The act would also weaken the Berman Amendment to the International Emergency Economic Powers Act (IEEPA), which currently bars the government from using IEEPA to restrict the import or export of “information materials.” The ACLU strongly opposed the bill, arguing that an effective ban on TikTok would violate the First Amendment rights of American users. The House Foreign Affairs Committee marked up and advanced the bill on March 1 with all Democrats voting no, potentially showcasing an emerging split on what had thus far been a largely bipartisan issue.

- Protecting Speech from Government Interference Act (H.R. 140, sponsored by Reps. James Comer (R-KY), Cathy McMorris Rodgers (R-WA), and Jim Jordan (R-OH)): This bill would prohibit federal employees from using their authority to advocate for censorship of speech, including speech on third-party platforms or other private entities. The act would establish penalties for violators, including a civil penalty, removal from and debarment from federal employment, and a reduction in pay grade. In markup in the House Oversight Committee, the bill was amended to remove specific mentions of executive branch employees; add exceptions for legitimate law enforcement related to child pornography, human trafficking, controlled substances, or classified national security information; and establish reporting mechanisms.

- Accountability for Government Censorship Act (H.R. 1162, sponsored by Rep. Scott Perry (R-PA)): The Accountability for Government Censorship Act would require the Office of Management and Budget to investigate executive branch employees’ censorship of lawful speech on any platform and disclose its findings to Congress. In markup in the House Oversight Committee, the bill was amended to apply only to communications with “interactive computer services” rather than all platforms, and to add a reporting exception for legitimate law enforcement related to child pornography, human trafficking, controlled substances, or classified national security information.

- Telling Everyone the Location of Data Leaving the U.S. Act (H.R. 742, sponsored by Reps. Jeff Duncan (R-SC), Marcy Kaptur (D-OH), and Scott Perry (R-PA)): This bill would require any person maintaining a website or selling an app to disclose to users whether their data is being stored in China. The Federal Trade Commission would administer penalties and enforce the act. The bill was marked up in the House Energy and Commerce Committee’s Innovation, Data, and Commerce Subcommittee.

The following bills were introduced in February:

- Internet Application Integrity and Disclosure Act (H.R. 784, sponsored by Reps. Russ Fulcher (R-ID) and Chris Pappas (D-NH)): This bill would require anyone who maintains a website or sells an app to disclose to users when a platform is owned by any Chinese entity. The Federal Trade Commission would be in charge of enforcing this act.

- The Chinese-Owned Applications Using The Information of Our Nation Act of 2023 (H.R. 750, sponsored by Reps. Kat Cammack (R-FL) and Darren Soto (D-FL)): This bill would require anyone who sells or distributes a mobile app to notify users, before they download or update the app, if the app has been banned from federal devices. The Federal Trade Commission would be in charge of enforcing penalties, which could include a maximum civil penalty of $46,517 per violation.

- Averting the National Threat of Internet Surveillance, Oppressive Censorship and Influence, and Algorithmic Learning by the Chinese Communist Party (ANTI-SOCIAL CCP) Act (S.347, sponsored by Sens. Marco Rubio (R-FL) and Angus King (I-ME)): Senators Marco Rubio and Angus King reintroduced the ANTI-SOCIAL CCP Act after first introducing it in December during the 117th Congress. The act would prohibit transactions with social media companies operating in or under the influence of foreign adversaries, including China, Russia, Iran, North Korea, Cuba, and Venezuela. Under this act, TikTok, ByteDance, and any of their subsidiaries would be banned from operating in the U.S.

- Data Privacy Act of 2023 (H.R. 1165, sponsored by Rep. Patrick McHenry (R-NC)): This bill would create new consumer privacy protections in the financial sector, including a preemption clause that would override state laws in favor of a federal standard. The bill, introduced this month, will likely be voted on by the House Financial Services Committee in the first week of March.

- Clean Slate for Kids Online Act (S.395, sponsored by Sens. Richard Durbin (D-IL), Richard Blumenthal (D-CT), and Mazie Hirono (D-HI)): The Clean Slate for Kids Online Act would amend the Children’s Online Privacy Protection Act of 1998 (COPPA) to give consumers a “right to have website operators delete information that was collected on them while they were under 13,” as well as information collected about them at that age. This bill was introduced and referred to the Senate Committee on Commerce, Science, and Transportation. Bill text is not yet available online.

Public Opinion Spotlight

Monmouth University conducted a telephone poll of 805 U.S. adults between January 26-30, 2023 that asked respondents about their awareness of artificial intelligence. The poll found:

- 55 percent of the American public favors creating a federal agency to regulate AI.

- 76 percent of adults under 35 years old support the creation of an agency.

- More Democrats (70 percent) and independents (56 percent) than Republicans (36 percent) expressed support for a federal regulatory agency for AI.

- 60 percent of Americans have heard of ChatGPT, and 72 percent “believe that there will be a time when entire news articles are written by artificial intelligence.”

- 78 percent of U.S. adults say that news articles written by AI would be a negative outcome.

- 65 percent of respondents said that it was very likely that programs like ChatGPT will be used by students to cheat on their school assignments.

- 9 percent of Americans believe that computer scientists’ ability to develop AI would do more good than harm to society. “The remainder are divided between saying AI would do equal amounts of harm and good (46 percent) or that it would actually do more harm to society overall (41 percent).”

- 73 percent of Americans feel that machines with the ability to think and feel for themselves would have a negative impact on jobs and the economy, and 56 percent said that such machines would damage humans’ overall quality of life.

- 55 percent of Americans are at least somewhat worried that artificially intelligent machines could one day pose a threat to the existence of the human race.

A Pew Research Center survey of 11,004 U.S. adults conducted between December 12-18, 2022 investigated Americans’ awareness of artificial intelligence in their everyday activities and found that:

- 27 percent of Americans say that they interact with AI at least several times a day. An additional 28 percent think that they interact with it about once a day or several times a week.

- Just 15 percent of U.S. adults say that they are more excited than concerned about the increasing use of AI in daily life, compared with 38 percent who are more concerned than excited.

- 44 percent of Americans think that they do not regularly interact with AI.

- 30 percent of U.S. adults correctly identified all six common uses of AI that the survey asked about, including music playlist recommendations, an email service categorizing a message as spam, and targeted advertising.

On behalf of the American Principles Project, a right-leaning think tank, One Message Inc. surveyed 1,000 likely GOP primary voters between January 30-February 5, 2023 via phone interview and online survey methods. When asked about issues related to Big Tech, the poll found that:

- 80 percent of GOP voters want Congress to use antitrust enforcement to rein in Big Tech.

- 73 percent of GOP voters want Congress to reform Section 230 or pass a related law to stop censorship on online platforms.

Morning Consult surveyed representative samples of adults about their perceptions of health apps and related privacy topics in two waves: 2,220 adults between September 24-27, 2021, and 2,201 adults between January 23-25, 2023. A comparison of the two surveys found that:

- The share of U.S. adults who said they were either “very” or “somewhat” concerned about their data privacy while using health apps declined between the September 2021 survey and the January 2023 survey.

- Half of Gen Z adults remained concerned about data privacy for health apps across both time periods.

- Baby boomers remained the most concerned about the privacy of their health data, with 72 percent of baby boomers expressing concern in September 2021. However, baby boomers also declined in their health data privacy concern, dropping 10 points to 62 percent in January 2023.

Morning Consult also surveyed public views of Facebook after the company reinstated former President Trump’s privileges on the platform. The polling was conducted among 3,477 respondents between January 2-February 5, 2023. The poll found that:

- Facebook’s favorability rose 4 percentage points to 61 percent, an increase driven by Republican respondents.

- Trust in Meta Platforms was reported at 31 percent among Republicans and 30 percent among Democrats, an 8-point increase among Republicans and a 5-point decrease among Democrats.

We welcome feedback on how this roundup and the underlying tracker could be most helpful in your work – please contact Alex Hart and Kennedy Patlan with your thoughts.
