Could a Global Citizens’ Assembly Rebalance the Nationalist AI Arms Race?

Reema Patel, Rich Wilson / Mar 4, 2025

This essay is part of a collection of reflections from participants in the Participatory AI Research & Practice Symposium (PAIRS) that preceded the Paris AI Action Summit. Read more from the series here.

A Global Citizens’ Assembly, an institution that activates and educates millions of people about the future of AI governance, has great potential to rebalance the nationalist AI arms race in the interests of people and the planet. Indeed, such an institution is currently being set up, albeit with an initial focus on climate and COP30.

In particular, it can inject much-needed public voices into the AI debate, precious few of which were included at the Paris AI Action Summit. As expected, civil society wasn’t able to exert much influence on the proceedings. Instead, the dynamics at the Summit reflected the emergence of a no-holds-barred AI arms race between the four big AI power blocs of the USA, EU, India, and China, a dynamic Ian Hogarth described in 2018 as “AI nationalism.”

The current situation

The rise of AI nationalism was further illustrated by the response to China’s recent release of DeepSeek, with some US tech leaders questioning whether its claims stood up to reality. Others, such as the Indian government, have banned the use of DeepSeek entirely. Last month saw world leaders “vying for AI domination” at the Summit, with almost all the focus on a dash for growth rather than on how to manage AI responsibly. Responsible AI advocate Professor Gina Neff has highlighted a “vacuum for global leadership” on AI. There has been close to zero progress toward an effective global regulatory regime that can hold the main developers of the technology to account, and the general consensus is that Trump’s recent election has made that much harder.

Where does power lie?

Power in shaping AI currently lies at the global level, not at the national level. So far, national-level regulation tends to be predominantly retrospective and harm-based, and so ill-suited to the nature of the technology we are dealing with.

A handful of powerful organizations, spanning Silicon Valley giants and state-backed research labs, are shaping the future of AI. OpenAI, backed by Microsoft, is at the forefront with its cutting-edge language models, while Google DeepMind continues to push the boundaries of AI through breakthroughs in deep learning and reinforcement learning. Meanwhile, China’s government, through tech behemoths like Baidu and Tencent, is driving an aggressive AI strategy aimed at global dominance. Regulatory efforts such as the European Union’s AI Act and initiatives like the UK’s AI Safety Institute are scrambling to develop and impose ethical guardrails but have gained limited traction so far.

The geopolitics of AI nationalism, a troubling dynamic in which states compete to dominate AI development, worsens these issues. It has led to a dangerous race to the bottom, prioritizing economic and geopolitical advantage over the development of safe and responsible AI. This narrow, competitive approach is failing us.

AI nationalism has eroded opportunities for international cooperation on AI governance, marginalized and excluded public voices from decision-making about AI, and diverted attention from urgent challenges. AI is already playing a leading role in advancing autonomous weapons and cyber warfare, and AI-driven misinformation and deepfakes have undermined truth and democracy.

As AI continues to accelerate resource-intensive extractive economic models that place pressures and demands on labor and data across the AI supply chain, reinforce racial and structural inequalities, and entrench emissions-heavy uses and applications, we must ask: Who is holding AI decision-makers to account?

Given that we have a decent idea of where the power lies, the question becomes: how have citizens shaped technologies before, and what might work now?

A power-literate strategy for shaping AI

To answer this question, we’ve identified five big ideas currently in play.

- Regulation, with one example being the implementation of the EU AI Act, which marks some progress on AI standards and AI risk assessment but has so far done little to counter the big threats, such as the erosion of truth and democracy, or to stave off AI militarization or the threat of mass unemployment. Part of the problem is that all the meaningful regulation we’ve had so far has been national, retrospective, and harm-based, and thus not fit for purpose. Given the global nature of the AI power holders, what we need is a forward-looking global regulatory regime.

- Social movements, championed in the 2023 talk “The AI Dilemma,” have already shaped AI policy, from campaigns to ban biased facial recognition systems after the Black Lives Matter protests to halting Google’s military AI project through worker activism. Climate groups have pressured tech giants to address AI’s carbon footprint. Similar activism could counter the global AI arms race, but AI isn’t the same as nuclear weapons, which are often held up as the benchmark for movements reducing technological risk. AI is moving faster, is more distributed, is less under the control of states, and has both obvious benefits (hundreds of millions of us already use ChatGPT) and hidden costs.

- Improved AI corporate governance, as championed by Professor Isabelle Ferreras, involves giving workers, shareholders, and investors a significant role in corporate decision-making to mitigate AI’s potential harms. Ferreras emphasizes that restructuring corporate frameworks should be central to the discussion, moving beyond mere whistleblower protections. This promising idea needs some corporate champions to gain traction.

- Public-interest AIs could offer a viable alternative to dominant tech companies. By prioritizing transparency, inclusivity, and ethical governance, these AIs would focus on serving the public good rather than corporate profits. Championed by Audrey Tang, such models can empower citizens, ensure accountability, and promote collective decision-making in AI development.

- Participatory democracy initiatives on AI have been in play for over a decade but were boosted when tech leaders like Sam Altman pointed to them as a possible solution to regulatory failure. Since then, there’s been a lot of experimentation in citizen deliberation on AI, but little of it has had a power-literate change strategy that can match the reality of a techno-industrial cold war. The newly announced Current AI initiative led by France also aims to focus on public participation. Citizen deliberation is proving to be a very effective tool in domains like AI where governance has failed. There have been over 200 climate assemblies globally driving more ambitious climate action, and institutions like the Danish Board of Technology and Sciencewise in the UK have hardwired citizen deliberation into national tech policy systems.

How might we design a Global Citizens Assembly on AI?

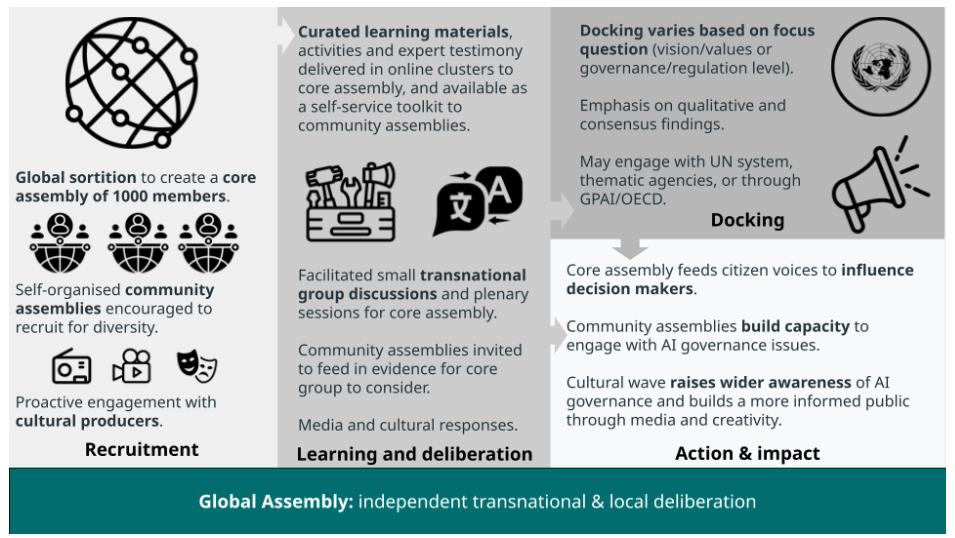

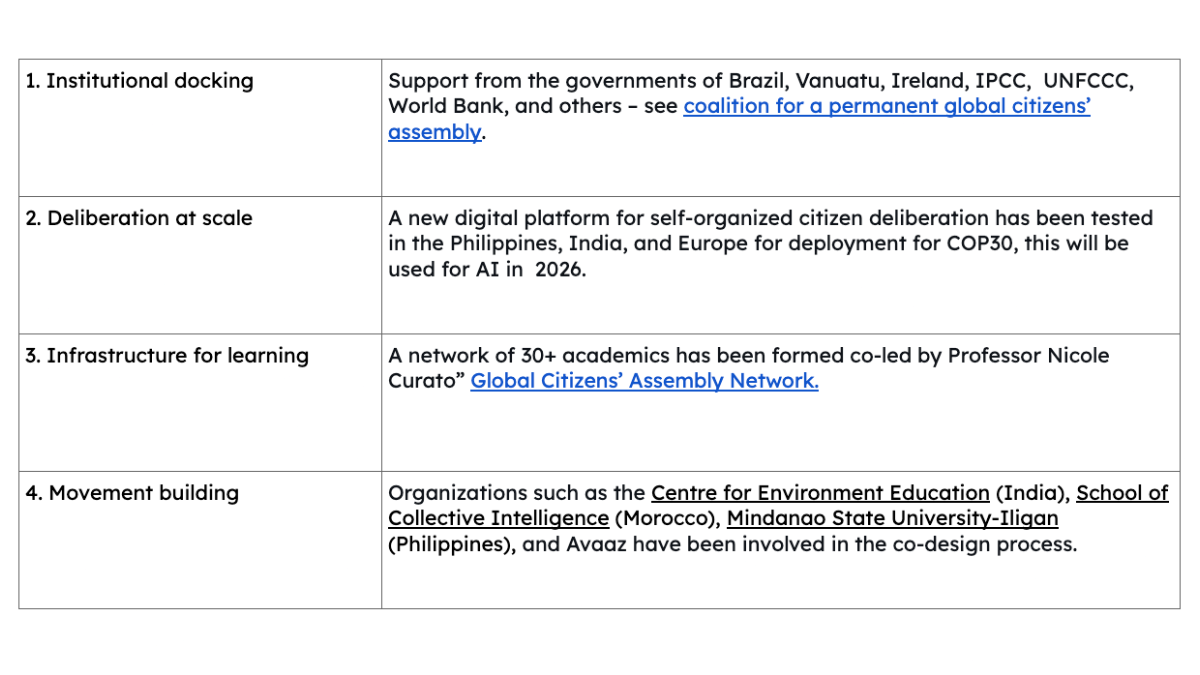

A permanent Global Citizens’ Assembly was launched in New York in parallel to the UN Summit of the Future in September 2024, backed by Brazil, Ireland, Vanuatu, and others. This might provide some answers to how we get serious about democratizing AI. Last year, Connected by Data, Iswe, and Kiito held a series of online and face-to-face convenings with AI and citizen engagement experts to develop an Options Paper on Global Citizen Deliberation on Artificial Intelligence, which was launched in New York and Washington. It outlined what a Global Citizens’ Assembly might look like (see Figure 1) and four next steps “to embed citizen voices from across the globe in defining the future of AI”: 1. Develop institutional docking; 2. Invest in deliberation at scale; 3. Create infrastructure for learning; and 4. Connect with movement building.

Figure 1. A Global Citizens’ Assembly

Each of these recommendations has now been integrated into the permanent Global Citizens’ Assembly (see Figure 2), though much remains to be done.

Figure 2. Recommendations integrated into the Global Citizens’ Assembly

The plan is for the Global Citizens’ Assembly to start deliberations on AI in early 2026. That means that this year, in 2025, we will need to co-create the AI Global Citizens’ Assembly with movements, academics, media, and the key AI power holders in tech companies and government. If you would like to co-host an event to shape the AI Global Citizens’ Assembly or participate in any way, please get in touch.
