Child Endangerment in “Los Picus” Fan Groups: Facebook (Still) Has a Child Predation Problem

Lara Putnam / Jan 23, 2024

A collage of screenshots referenced below.

On January 31st, the Senate Judiciary Committee will hold a hearing on “online child sexual exploitation.” This topic is sometimes treated as synonymous with the issue of Child Sexual Abuse Material (CSAM): images and videos that might previously have been referred to as “child pornography.” But the main role that major public social media platforms play in the ecosystem of child sexual exploitation is not as conduits for the exchange of CSAM. It is as a space of predation, where adults can meet children who have internet access and devices with cameras.

Predators often first make connections to children in public virtual spaces, sometimes pretending to be children themselves, and then seek to move the interaction into private channels like Messenger or WhatsApp, where they can deceive or pressure the child into virtual sexual interactions. These interactions can produce CSAM (sometimes called self-generated CSAM, or SG-CSAM) that the adult may trade on other non-public channels. But researchers stress both that the process of virtual abuse may itself be part of what the predator desires, and that the experience of sexual victimization, even if it remains fully online, can be profoundly traumatizing for child victims.

In December 2023, I stumbled across multiple Facebook groups, framed as fan sites for the Mexican child hip hop group Los Picus, which were suffused with exactly the same kind of child predation and grooming content that I wrote about in Wired in 2022. Each group had thousands or tens of thousands of members, including many active posters who seem to be authentic pre-teen fans.

In these fan groups, intermixed among links to videos and images of “Los Picus” (three brothers: Luigi, now 12; Tony, now 10; and Dominick, now 8) were a familiar range of gamified posts asking children to specify their ages and then “Agrégame” (add me) or “Mande solicitud” (send a friend request): that is, to authorize contact so that the poster could continue interacting with the child privately via Messenger or WhatsApp.

A typical post is screenshotted below. The poster uses the screen name Tony Picus, impersonating the 10-year-old pop star. The text reads (my translation, here and subsequently): “Only for 7 8 9 10 11 and 12 year old girls [heart emojis]: Where would you kiss me?” The accompanying image is of Tony, taken from his Instagram account in 2021, when he was 8. In the version shared in the Facebook group, numbers are superimposed on Tony’s chin, chest, stomach, wrist, shoulder, arm, and crotch. When I first saw this post it already had over 200 replies. Most said 1, or 1 and 7, sometimes accompanied by emojis. “I am 9 years old and on the 7” said one. “On the 1,2,6 [smiley face] i love you alot toni” said another. “I am 8 years old and on the 7 and 1” said another, to which a different user replied, “Add me?”

An image, originally shared on a social media account run by Los Picus, depicted as edited and recirculated by a false Tony Picus account. We have obscured the child celebrity's face since this post was nonconsensual. Screenshot provided by Lara Putnam.

“Los Picus” are three brothers from Mexico City who have been performing hip hop online since 2021, when they were 6, 8 and 10 years old. Today they have an active cross-platform presence, with more than 1.3 million followers on Instagram, 4 million subscribers to their YouTube channel, and 6.8 million followers on TikTok. The older two brothers, Luigi (now 12) and Tony (now 10), both showcase ongoing relationships with their “novias” (girlfriends), Allison Mia and Paulina, who are themselves also significant TikTok / Instagram / YouTube influencers. Their official accounts provide a constant supply of images and narratives about the two “couples” that echo adult sexual and romantic behavior: kissing, getting “jealous,” and performing suggestive dance moves.

In the fan groups I found, it was clear how this material could be used to normalize children performing sexually for an audience via smartphone camera. This is not me making an interpretive leap. Literally mixed in among the reposted Tony and Pau and Luigi and Allison content in the groups were links to Facebook groups sharing pornography. The linked images routinely showed video stills of adult women’s fully nude breasts, buttocks, and sometimes genitalia.

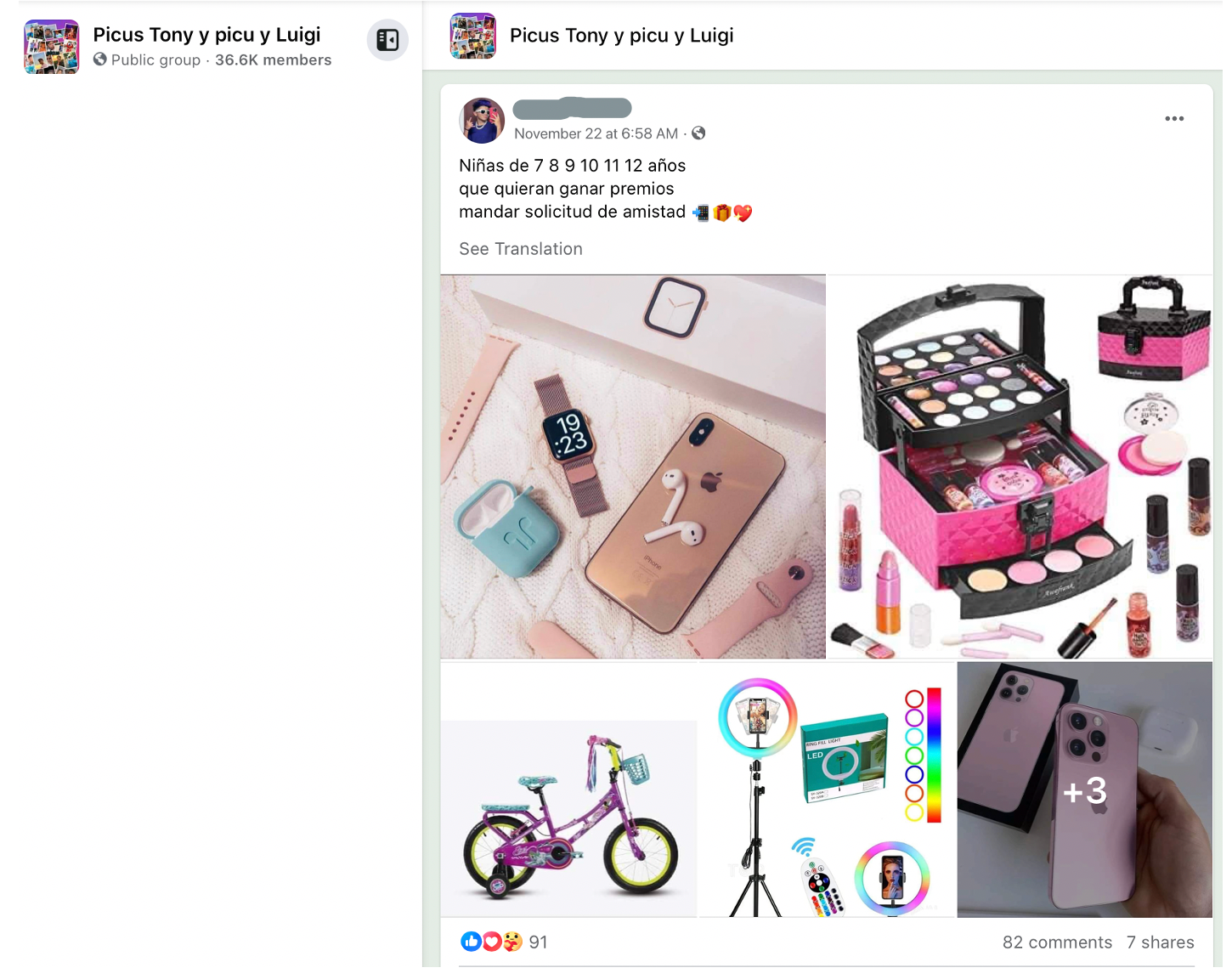

There was a wide variety of posts geared toward getting children to send friend requests to the poster, often specifying the ages of the children sought. Many did this by posting from accounts pretending to belong to Tony himself. Others offered prizes. For instance, the post in the screenshot below says: “Girls who are 7 8 9 10 11 or 12 years old, who want to win prizes, send a friend request,” with accompanying photos of make-up kits and pink smartphones and accessories. At the time I took a screenshot of it, this post had 82 responses.

Screenshot provided by Lara Putnam.

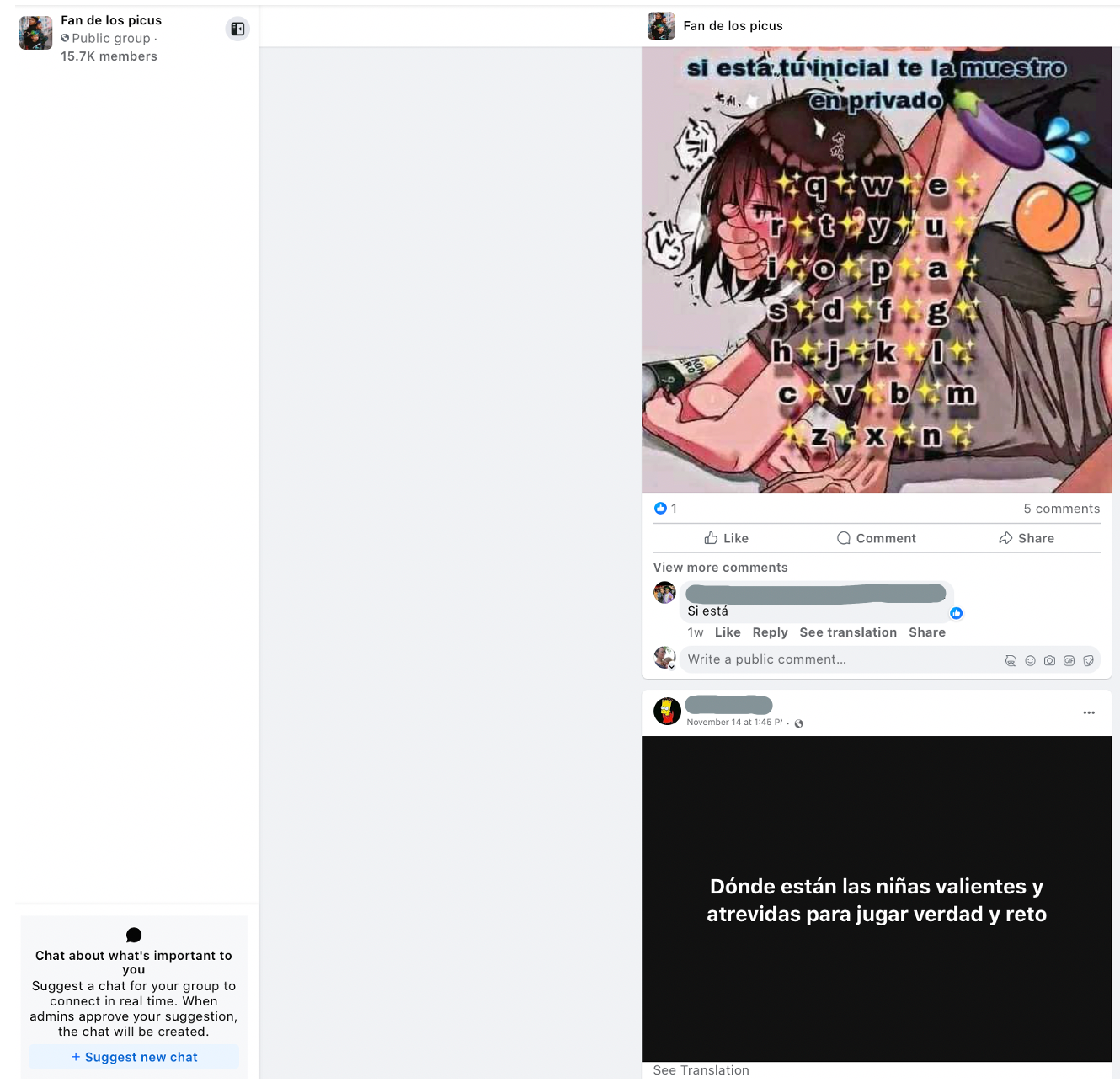

Other posts were explicitly sexual in their approach. For instance, in the following screenshot, the background cartoon image shows a girl lying on her stomach with a man’s hand reaching from behind to cover her mouth, in a pose suggesting a sex act is underway. The words say: “If you see your initial I’ll show it to you in private [eggplant emoji].” The post that followed in the feed called for replies with this invitation: “Where are the brave and daring girls to play truth or dare?”

Screenshot provided by Lara Putnam.

The “related groups” links featured alongside each such group led me to others, likewise framed as Los Picus fan groups, with an identical mix of postings. I used Facebook’s in-app reporting tool to flag multiple groups as well as multiple particularly egregious individual posts. As far as I have been notified, none of the groups I reported has been taken down. The only posts I reported for which I received confirmation of takedown were the few that included video stills of children in apparently sexual situations. Sometimes these included the phrase “yo si lo paso” (“I’ll pass it along,” broken up by emojis), which is a standard phrasing of an offer to share CSAM.

None of the posts I reported that asked for friend requests from children of specified ages were judged to violate the platform’s rules. This was despite the fact that such posts generated hundreds of replies from users who gave their ages as 11, 10, 9, even 8 years old, asking to be included. Routinely, either the original poster or other users had responded to individual replies telling the child to “Add me.” It seems clear that this approach circumvents controls designed to limit unknown adults from directly messaging young users.

In one group, I saw a post purporting to be from “Tony Piculin” warning group members to “be careful girls” because “this group is false.” A reply noted that on Instagram, Tony had asked fans to report as false all the Facebook accounts operating in the Picus’ names. This suggests that the spurious accounts and associated predation were extensive enough to draw outside attention, possibly including from the managers of Los Picus themselves.

Periodically over subsequent weeks I checked back to see whether these groups, or at least the toxic content within them, had been eradicated. While some have waned in activity, others have grown.

Attempting to get a sense of the scale of the problem, over the course of a few days I documented twenty different active groups, ranging in size from 12,000 to 60,000 members. Most of these groups were presented to me as “related groups” from previous groups I visited. A few of them appeared when I searched for the phrase “si ves tu edad” (“if you see your age…”). In just a few hours, I generated a 114-page compilation of screenshots of child-endangering content from these twenty groups, which as of this writing have over 558,000 members.

The pattern of posts seeking access to children in a gamified sexual or romantic frame is extremely consistent. Sometimes identical graphics are shared across different groups; more often there are endless variations on the same theme: asking children of listed ages to add the poster as a contact (“agrégame”), send a friend request (“mande soli” or “mande solicitud”), or join a WhatsApp group or a video chat. Again and again, dozens or hundreds of children reply, giving their ages, which range from 8 to 12.

At a minimum, it is shocking that no algorithms have been put in place that would be triggered by this explicit and constant evidence of the presence of underage children on the platform, and of adult users’ age-explicit solicitation of them.

More fundamentally, this nexus of accounts underlines the inadequacy of “child safety” measures built around reporting and takedowns of individual posts, or individual accounts, or even individual groups. There are Los Picus fan groups on Facebook not yet beset by predators and their posts. A handful of them are as large and active as these; the great majority are currently smaller. If all the groups I identified were taken down tomorrow, with no further action, nothing would stop the predators who are currently trawling for victims in those groups from moving on to the next fan group full of eager-to-engage, un-self-protecting, underage children.

Indeed, given the dynamics I have seen over a few weeks of even cursory attention, it seems that individual groups already follow a surge-and-abandonment lifecycle that does not slow the pace of predation as a whole. A child-attracting fan group becomes known to predators and begins rapid growth as ill-intentioned users flock there, filling the group with age-trawling posts. This surge of gamified and engaging content pushes the group up in recommendation and search algorithms, pulling in more child users even as it also attracts more predators. Growth explodes. (Many of the groups I documented are labeled as having gained over six thousand new members in the past week.) But sooner or later, enough explicit adult pornography group links or CSAM-exchange links are posted to the group that either automated takedowns or exasperated departures by actual child users disrupt growth, and the group becomes de facto inactive even faster than it once grew. Predators easily shift their focus to other groups among the many in which they were already participating.

Meanwhile—crucially—the process has generated some unknowable-to-outsiders number of direct relationships between predators and children, which may now proceed onward in private Messenger chats or WhatsApp groups. The heyday of age-trawling posts in public groups attractive to underage users is simply the first step towards online sexual exploitation of individual children. But it is the crucial “but-for,” as jurists would say: the essential step that pulls a new set of unsuspecting children with smartphone access into relationships with physically distant adults who seek to harm them.

It is possible that the predator-filled groups I have described would have garnered more attention within Facebook if they were happening in English-language groups, or in fan groups for US child stars. That possibility does not reassure. Spanish is the second most common native language worldwide, after Mandarin Chinese: it has nearly 500 million native speakers. In the United States alone, more than 40 million people speak Spanish at home. Music fandoms and online phenomena routinely span national borders; child safety must as well.

Given the dynamics described, improved automated screening for phrases like “Si ves tu edad...” and for posts including numerical pre-teen age sequences is essential, but inadequate. Ultimately, the problem stems from the number of child users of Facebook, the number of adult predators using Facebook, and the engagement and recommendation algorithms that easily enable the latter to find the former. Reducing the problem is going to require mechanisms at scale, which may include permanent device-level or IP-address-level blocking of users who take part in age-trawling predator swarms in groups that are attractive to child users. Unfortunately, as long as Meta refuses to acknowledge the obvious reality that Facebook has millions of child users, alongside untold numbers of adults eager to prey on them, work on solutions at scale will apparently not begin.

To see gamified, sexualized age-trawling posts basically identical to those used in the groups that I called out in Wired two years ago being used with apparent impunity across a vast new range of groups is disheartening, to say the least. Likewise, the fact that child-attracting groups and predator swarms remain so common on Facebook that they can be repeatedly found by me—a mother of four and full-time teacher with zero access to internal platform data or metrics—calls into serious question platform executives’ claims to be doing everything they possibly can to prioritize child safety online.

If the upcoming Senate Judiciary Committee hearing remains focused primarily on CSAM interdiction, the platforms’ representatives will have successfully avoided discussion of the dimension where social media’s role is, grimly, most uniquely important: as the open door to crimes that will be committed elsewhere, away from public view.
