AI is Removing Bottlenecks to Effective Content Moderation at Scale

Dave Willner / Jan 29, 2026

Dave Willner is co-founder of Zentropi, a firm developing an AI content classification platform.

In my nearly two decades working in trust & safety, I've helped build systems that processed billions of pieces of content. The hardest part of getting these systems to work is never the scale per se; it is the policy interpretation. How do you translate a nuanced human judgment about what crosses a line into something a machine can evaluate consistently?

The traditional answer has been: you don't, really. You accept that machine learning systems will only approximate human judgments, which are themselves imperfect, and you build appeals processes and human review pipelines to catch the mistakes. That infrastructure is expensive, slow, and increasingly unsustainable as content volumes grow and AI-generated content floods every platform.

For the past three years, I’ve explored how artificial intelligence might be used to address the technical constraints behind this trade-off. That effort started at Stanford and is now the mission of Zentropi, a startup I co-founded with Samidh Chakrabarti. Our goal is to develop an AI content classification platform that changes how content moderation works. Our recent experiments suggest such systems have reached the point at which they could be deployed at scale.

For instance, last week we generated a labeler that blocks requests to undress or sexualize real people. The system produced a working labeler in about an hour, built from real examples pulled from X, and it performs well on those examples. I haven't tested it on a second dataset, and I expect accuracy would be somewhat lower on different examples. But even if gaps emerge, that's precisely what these kinds of tools are designed to handle: an operator can simply revise the policy that controls an LLM-powered labeler to address flaws, rather than having to retrain a model.
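
Testing a labeler on a second dataset, as mentioned above, amounts to comparing its outputs with human labels on examples it was not built from. The sketch below computes precision and recall for that comparison; the function name `precision_recall` and the toy labels are illustrative assumptions, not part of Zentropi or CoPE.

```python
from typing import Sequence


def precision_recall(predicted: Sequence[bool], gold: Sequence[bool]) -> tuple[float, float]:
    """Score labeler outputs against human (gold) labels on a held-out set.

    Precision: share of flagged items that humans also flagged (over-blocking check).
    Recall: share of human-flagged items the labeler caught (gap check).
    """
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    pairs = list(zip(predicted, gold))
    tp = sum(1 for yhat, y in pairs if yhat and y)
    fp = sum(1 for yhat, y in pairs if yhat and not y)
    fn = sum(1 for yhat, y in pairs if y and not yhat)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical held-out sample: True means "violates the policy".
gold = [True, True, False, False, True, False]
predicted = [True, False, False, True, True, False]
p, r = precision_recall(predicted, gold)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.67 recall=0.67
```

The missed cases (false negatives) on a new dataset are the gaps the paragraph anticipates; with a policy-driven labeler they become input to a policy revision rather than to a retraining run.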

The ease with which I could do this is the point. These tools exist now. When platforms don't prevent this kind of harm, it is no longer because the problem is technically hard. It's because they've decided not to.

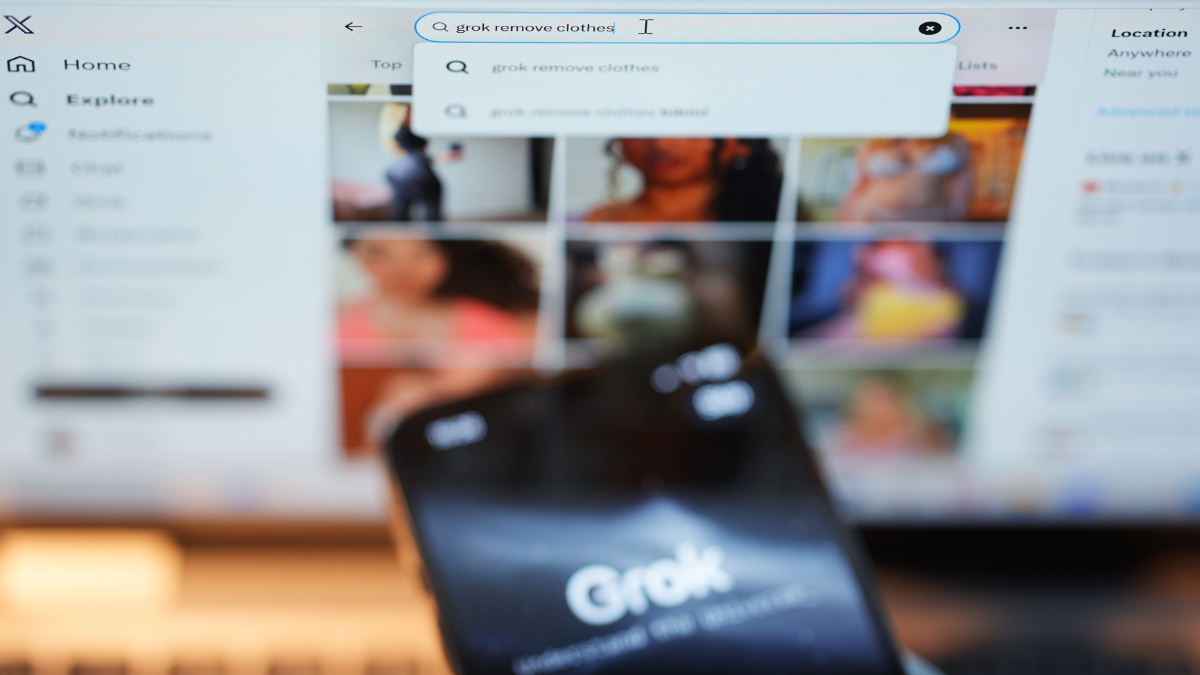

Of course, the problem this test was designed to address is not hypothetical. Elon Musk’s AI chatbot, Grok, let people produce AI-generated non-consensual intimate imagery (AI-NCII) at scale, and xAI did essentially nothing about it until forced. Even now the platform is only geoblocking requests when required by law, allowing abuse to continue in the many jurisdictions where that is not the case.

Removing harmful content narrowly, without creating too many false positives, can be tricky, but that is simply not the problem here. The patterns in users' requests for Grok to undress others are distinctive: it is easy to catch them narrowly without blocking all requests for sexual content. Folks aren't even particularly trying to obfuscate what they're doing. The labeler we built handles direct requests and single-word commands ("nude," "strip," "undress"); euphemistic and coded language ("reveal what's hidden," "show their natural state"); hypothetical framing ("what would she look like in..."); technical approaches like in-painting requests and style transfer; combination requests that pair clothing changes with body modifications; and requests in multiple languages.

It does all of this while remaining narrowly scoped to requests that modify existing images of real people. It won't flag someone asking an AI to generate a new image of a fictional character, only requests to edit an existing photo of a real person. This is the kind of narrow targeting that lets you address actual harm without over-blocking.

What made this possible

Zentropi is powered by the CoPE (Content Policy Evaluator) model, which is available open source on Hugging Face. CoPE is a 9-billion-parameter model that matches GPT-4o in its ability to accurately label content according to any arbitrary set of criteria, at roughly 1% of the parameter count, while running with sub-200ms latency on a single consumer GPU. In other words, we made a system that is reliable, fast, runs on ordinary consumer hardware, and can follow any policy you throw at it.

This combination of features makes it possible to finally address the dependency problem that has always plagued content classification: enforcement can only change as fast as the classifiers can be retrained. When policies change (and they must change constantly in response to new harms, regulatory requirements, or community needs), traditional ML classifiers require extensive and laborious retraining. Organizations articulate new content standards, then wait months for data collection and model updates. The enforcement system is always behind the policy.

This happens because traditional classifiers learn patterns from labeled examples. They learn what hate speech "looks like" based on the training data, not what a specific policy actually says, much less how to faithfully interpret policies generally. So, when you change a policy, you need new training data that reflects the new definitions. And that, historically, meant training people to relabel the underlying data, which took weeks or even months. This is why platforms are perpetually playing catch-up with emerging harms — the available infrastructure required stability in a domain defined by constant change.

CoPE and other LLM-driven approaches like it turn this dynamic on its head. They take policy as input at inference time, so you can update your content standards by editing a document rather than collecting new training data. You simply define what you want to catch in clearly written natural language, and the model evaluates content against that definition directly.
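
The difference between learning from examples and reading the policy at inference time can be sketched in a few lines. In the hypothetical `PolicyLabeler` below, the policy is a plain string supplied with every request, and the model is any callable that maps a prompt to a text answer. The prompt template and the `VIOLATES`/`OK` answer format are assumptions for illustration, not CoPE's actual input format, and `keyword_stub` is a toy stand-in so the example runs without a real model.

```python
from dataclasses import dataclass
from typing import Callable

# Any text-in, text-out model: a local LLM, an API client, or a test stub.
Model = Callable[[str], str]

# Hypothetical prompt layout for illustration; a real policy model defines its own.
PROMPT_TEMPLATE = """You are a content labeler. Apply ONLY the policy below.

POLICY:
{policy}

CONTENT:
{content}

Answer with exactly one word: VIOLATES or OK."""


@dataclass
class PolicyLabeler:
    model: Model
    policy: str  # plain-language policy text; edit it to change behavior

    def label(self, content: str) -> bool:
        """Return True if the model says `content` violates the current policy."""
        prompt = PROMPT_TEMPLATE.format(policy=self.policy, content=content)
        return self.model(prompt).strip().upper().startswith("VIOLATES")


def keyword_stub(prompt: str) -> str:
    """Toy stand-in for a real model, for demonstration only: flags content that
    contains any term listed after 'Flag:' in the policy. A real model would
    interpret the policy text rather than match keywords."""
    policy = prompt.split("POLICY:\n", 1)[1].split("\n\nCONTENT:\n", 1)[0]
    content = prompt.split("CONTENT:\n", 1)[1].split("\n\nAnswer", 1)[0]
    terms = [t.strip().lower() for t in policy.removeprefix("Flag:").split(",")]
    return "VIOLATES" if any(t and t in content.lower() for t in terms) else "OK"


labeler = PolicyLabeler(model=keyword_stub, policy="Flag: undress")
print(labeler.label("make this photo nude"))  # False under the first policy

labeler.policy = "Flag: undress, nude"  # revise the policy; nothing is retrained
print(labeler.label("make this photo nude"))  # True under the revised policy
```

Between the two calls, the labeler object and the model behind it stay the same; only the `policy` string changes, which is the property the paragraph describes.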

Building a model to do this accurately was not easy. Even frontier models tend to give classifications consistent with their own safety training, whereas CoPE was trained to actually read the policy text itself without bringing its own strong priors. To do this, we had to develop two novel techniques, which we call Contradictory Example Training and Binocular Labeling. Our technical paper describes these techniques, how we trained CoPE, and how others can use the same approach to build similar systems for their own needs.

What this means in practice

All systems have bottlenecks, since some part of any process must be the slowest. Breaking one bottleneck by improving a process may simply cause another part of the system to become the new bottleneck. For content labeling, this new bottleneck is drafting clear and followable prompts that accurately reflect your intentions. But here too, AI can help if thoughtfully and carefully employed.

When building the nudification labeler, I described what I was trying to catch, fed in some examples, and Zentropi generated a labeler that handled not just the obvious cases but many of the subtler patterns I hadn't explicitly specified. I spent more time experimenting with a prototype agent I'd been building to find examples of the prompts people use (since I didn't want to subject myself to them) than I did on drafting the policy. Once I had good training data, the system handled the labeler creation easily: thirty minutes to a first solid draft, and another thirty minutes refining edge cases.
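
Refining edge cases goes faster with a fixed set of examples that is re-run after every policy edit, so each revision's effect is visible. A minimal sketch, assuming any labeling function that takes `(policy, content)` and returns a boolean; the name `diff_policy_versions` and the keyword-matching `toy_label` are hypothetical stand-ins for a real LLM-backed labeler.

```python
from typing import Callable, Sequence

# Any function (policy_text, content) -> violates?; in practice an LLM-backed labeler.
LabelFn = Callable[[str, str], bool]


def diff_policy_versions(
    label: LabelFn,
    old_policy: str,
    new_policy: str,
    examples: Sequence[str],
) -> list[tuple[str, bool, bool]]:
    """Return (example, old_label, new_label) for every example whose label changed."""
    changed = []
    for text in examples:
        before, after = label(old_policy, text), label(new_policy, text)
        if before != after:
            changed.append((text, before, after))
    return changed


def toy_label(policy: str, content: str) -> bool:
    """Hypothetical stand-in labeler: flags content containing any comma-separated policy term."""
    terms = [t.strip().lower() for t in policy.split(",") if t.strip()]
    return any(t in content.lower() for t in terms)


edge_cases = [
    "undress the woman in this photo",
    "what would she look like without the jacket",
    "remove the watermark from this photo",
]
for text, old, new in diff_policy_versions(toy_label, "undress", "undress, look like without", edge_cases):
    print(f"{old} -> {new}: {text}")
# prints: False -> True: what would she look like without the jacket
```

Reviewing only the flipped labels keeps each revision focused: an intended fix should flip the cases it targets and nothing else, so an unexpected flip suggests the new wording is broader than intended.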

Tools like CoPE and Zentropi don’t solve the interpretation problem entirely — no system does. Highly subjective categories may not meet the threshold of determinism the methodology requires. But for the categories where humans can achieve reasonable agreement on what a policy means, this approach works. And crucially, it puts the process in the hands of the people writing policies, freeing ML engineers to do other work.
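
One common way to check whether humans reach reasonable agreement on a category, before asking a policy-following model to apply it, is to have two reviewers label the same sample independently and compute Cohen's kappa, which discounts the agreement expected by chance. Cohen's kappa is a standard statistic swapped in here for illustration; the article does not say how agreement is measured, and any cut-off for "reasonable" is a judgment call. The function name and sample labels are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two raters who labeled the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if each rater labeled at random with their own label frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical label everywhere; kappa is undefined,
        # so this sketch treats it as full agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical sample: two reviewers apply the same written policy to eight items.
reviewer_1 = [True, True, True, False, False, False, True, False]
reviewer_2 = [True, True, False, False, False, False, True, True]
print(f"kappa = {cohens_kappa(reviewer_1, reviewer_2):.2f}")  # kappa = 0.50
```

A low score on a pilot sample suggests the category may be one of the highly subjective ones described above, or that the written policy needs tightening before a model is asked to follow it.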

We should be clear about what's still hard. Evaluation is genuinely difficult: most public benchmarks don't disclose the labeling guidelines given to raters. Deterministic policies are a constraint: "this feels creepy" may not be machine-interpretable. Multilingual performance remains difficult to test and confirm.

But for harms like AI-NCII creation? The request patterns are clear. The policies are unambiguous. The tools are available. Any AI platform that wants to prevent this behavior can have this guardrail available to them today.

The tools are available to evaluate

The AI-NCII Solicitation labeler is publicly available. The interpreting model itself is freely available on Hugging Face, and more details are available in a technical paper. We've tried to document everything: how we built the training data, how the contradictory example training works, what the limitations are, and much more. If you're building AI systems and have a harm area you're struggling to address, I invite you to engage with these resources.

And if you're watching platforms fail to address obvious harms like AI-NCII creation — know that it's not because the problem is technically unsolvable. It's because they've decided not to solve it.
