Researcher Data Access Under the DSA: Lessons from TikTok's API Issues During the 2024 European Elections

Philipp Darius / Sep 24, 2024


In the months leading up to the 2024 European Parliament elections, my collaborators from the SPARTA project and I collected the social media communication of roughly 400 European political parties across platforms including Facebook, Instagram, TikTok, Twitter/X and YouTube. However, some of the data delivered by the platforms was unreliable: we identified significant data quality issues with the TikTok Research API. Although TikTok repaired the API in July 2024, the flaw very likely affected other researchers who collected, for instance, political communication data ahead of the elections. The experience also raised questions for us about how platforms could be encouraged to allocate more resources to building reliable research infrastructures, and whether an independent institution could assess and ensure the quality of data provided through platform APIs under Art. 40(12) of the DSA and in response to data access requests under Art. 40(8).

In light of these issues, this article first briefly summarizes the data access regime under the EU Digital Services Act and then illustrates the data quality issues we identified with TikTok's Research API during the 2024 EU elections, which persisted until TikTok repaired the API in July 2024. Such data quality problems can lead to false or misleading results, undermining the Digital Services Act's aim of fostering better research on and understanding of digital information environments.

Data Access and the TikTok API

The relatively new data access provision under Art. 40 of the DSA mandates that very large online platforms (VLOPs) and very large online search engines (VLOSEs) provide researchers with data to investigate the systemic risks of online services. The goal of this access is to contribute to a better understanding of a platform's content mechanisms, potential socio-political risks, and their mitigation. For readers unfamiliar with DSA Article 40, my colleagues at the Center for Digital Governance at the Hertie School and I have written a policy brief explaining the data access regime and the role of researchers.

Using the DSA’s data access framework, starting in April 2024 and continuing until two weeks after the European elections, my colleagues and I collected social media data on roughly 400 European political parties. The data set we gathered includes data from Facebook, Instagram, TikTok, Twitter/X, and YouTube. Using the party handles of relevant European parties, we collected party communication during the election campaign period and published the EUDigiParty dataset, including the social media handles of 401 European parties, party data, and identifiers to link the data to other party databases.

When cross-checking the collected data, however, we noticed significant deviations between the data provided by the TikTok API and the data shown on the TikTok app or website. A systematic check revealed several issues regarding the string matching of account names, interruptions of follower collection after roughly 3,000 accounts, and extreme underreporting of the “Share” and “View” counts of collected videos by the API.
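The systematic check described above boils down to matching each video in the API data to the same video in the scraped user interface data and comparing the counts metric by metric. The sketch below is a minimal illustration of that idea, not the code we used: the `VideoMetrics` record, its field names, and the 5% tolerance are hypothetical assumptions, and it does not call the TikTok Research API itself, since it assumes both data sets have already been collected.

```python
from dataclasses import dataclass
from statistics import median

# Hypothetical record: field names are illustrative assumptions,
# not the TikTok Research API's actual response schema.
@dataclass
class VideoMetrics:
    video_id: str
    likes: int
    comments: int
    shares: int
    views: int

METRICS = ("likes", "comments", "shares", "views")

def api_to_ui_ratios(api, ui):
    """Per-metric list of API/UI ratios for videos present in both sources."""
    ui_by_id = {v.video_id: v for v in ui}
    ratios = {m: [] for m in METRICS}
    for a in api:
        u = ui_by_id.get(a.video_id)
        if u is None:
            continue  # video was not captured by the scraper
        for m in METRICS:
            ui_value = getattr(u, m)
            if ui_value > 0:  # skip zero counts to avoid division by zero
                ratios[m].append(getattr(a, m) / ui_value)
    return ratios

def flag_metrics(api, ui, tolerance=0.05):
    """Return metrics whose median API/UI ratio deviates from 1 by more than
    `tolerance` (a hypothetical threshold), mapped to that median ratio."""
    flagged = {}
    for m, values in api_to_ui_ratios(api, ui).items():
        if values:
            med = median(values)
            if abs(med - 1.0) > tolerance:
                flagged[m] = med
    return flagged
```

Using the median ratio rather than the mean keeps a few videos with large time-lag differences from dominating the result; a median ratio far below 1, as for the share and view counts we observed, signals systematic underreporting rather than ordinary lag.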

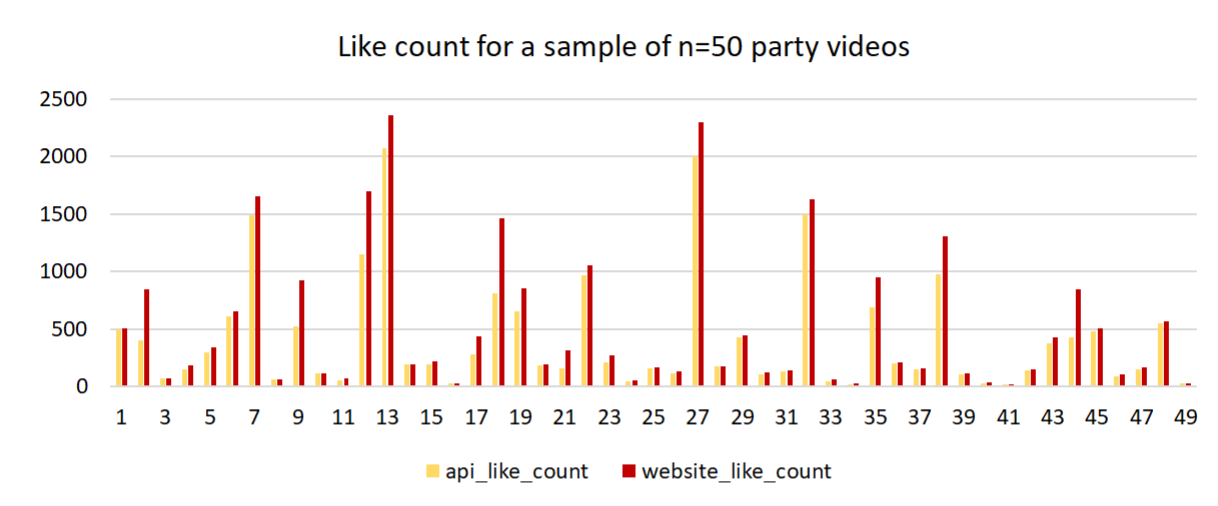

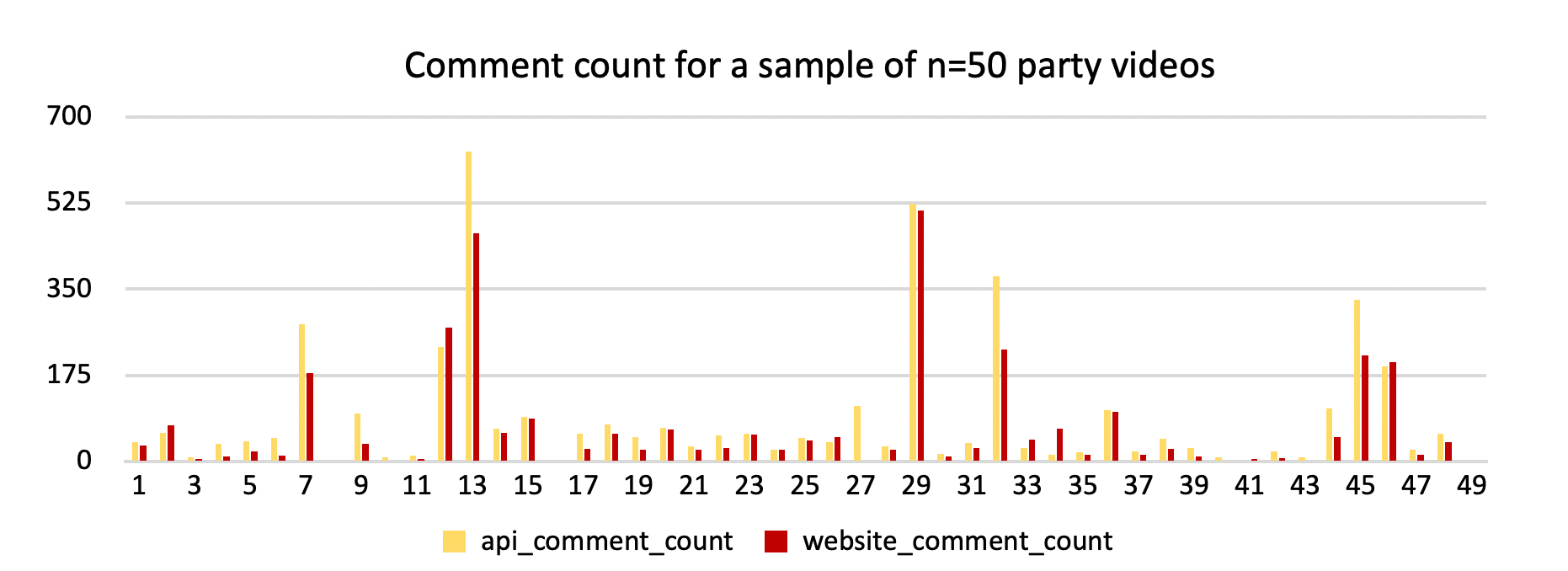

Figures 1 and 2 illustrate the reported metrics for a random sample of our party video data, comparing data retrieved via the API with the data displayed on the website and app. The Like metric in Figure 1 shows only a slight deviation, likely because the TikTok API is separate from TikTok's main operating system, which causes minor time lags. Moreover, in a call with us, TikTok employees mentioned that they had fixed the Like metric after receiving feedback from the research community. The comment count (Figure 2) also functioned relatively well through the API. However, the API combined comments and saves into a single number instead of reporting them separately, as the app and website do.

Figure 1: The Like count metric reports correctly with minor deviations that are common for API metrics

Figure 2: The comment count metric deviates because comments reported by the API were the sum of comments and saves of the video, rather than reported separately as shown on the website or app.
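One way to confirm the aggregation issue shown in Figure 2 is to test, video by video, whether the API's comment count matches the scraped comment count alone or the sum of scraped comments and saves. The following is a minimal sketch under stated assumptions: `UiCounts`, `classify_comment_count`, and the 2% relative tolerance are hypothetical names and values chosen for illustration.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical container for counts scraped from the app or website.
@dataclass
class UiCounts:
    comments: int
    saves: int

def classify_comment_count(api_comments, ui, tolerance=0.02):
    """Label an API comment count by which UI quantity it matches."""
    def close(a, b):
        # Relative tolerance absorbs small time lags between collection runs.
        return abs(a - b) <= tolerance * max(b, 1)
    if close(api_comments, ui.comments):
        return "matches_comments"
    if close(api_comments, ui.comments + ui.saves):
        return "matches_comments_plus_saves"
    return "unexplained"

def summarize(pairs):
    """Count labels over (api_comments, UiCounts) pairs for a video sample."""
    return Counter(classify_comment_count(a, u) for a, u in pairs)
```

If most videos in a sample fall under `matches_comments_plus_saves`, the API is very likely reporting the combined figure rather than comments alone.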

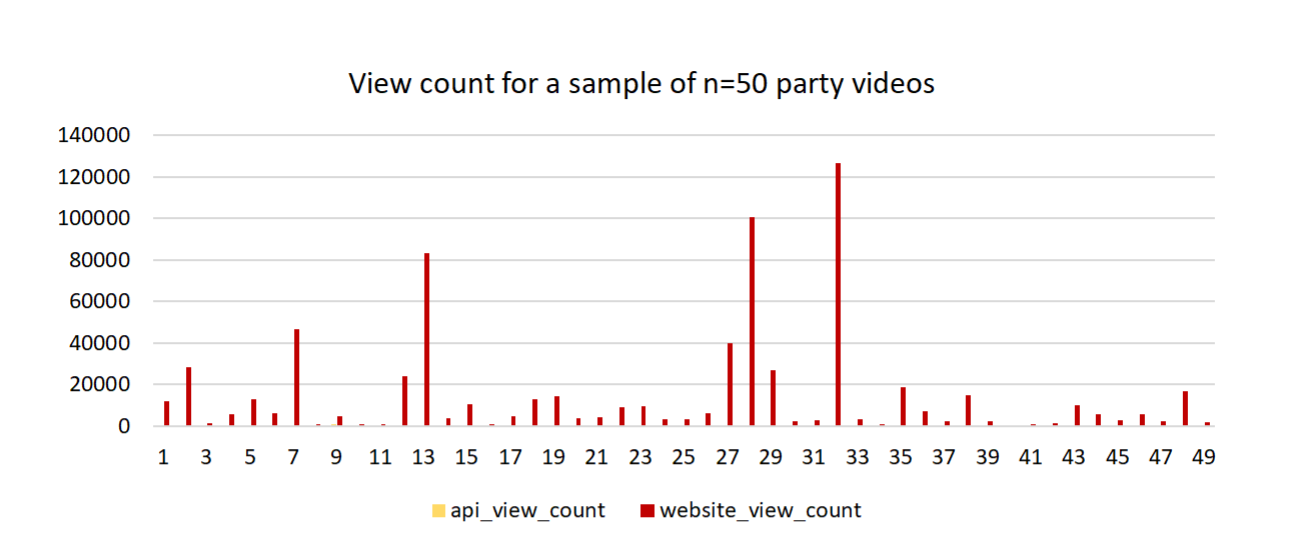

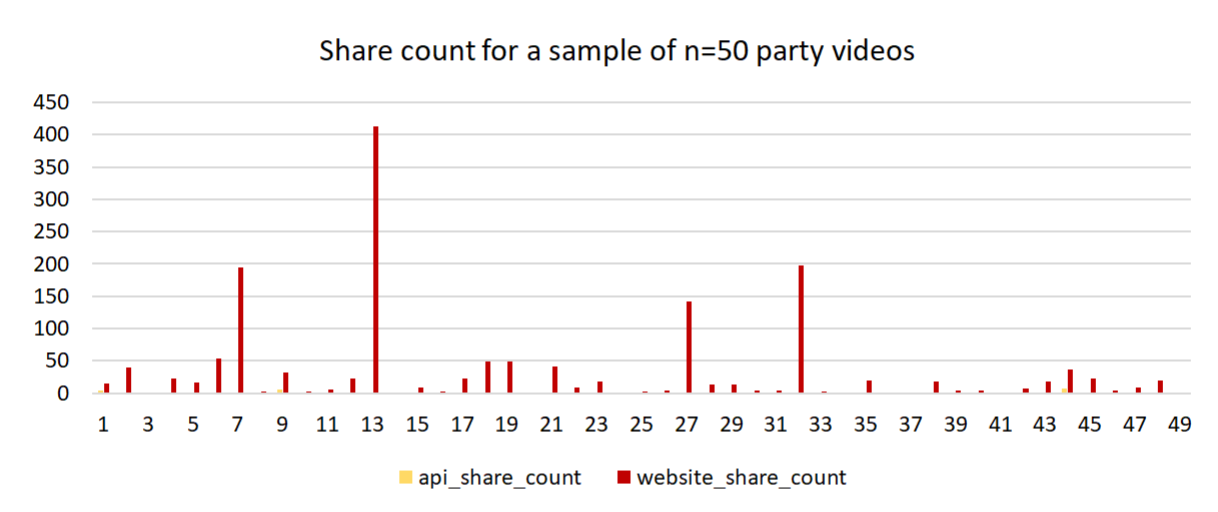

In addition, the TikTok API did not report view counts (Figure 3) and share counts (Figure 4) accurately; it substantially underreported both metrics. These problems created significant challenges for researchers who may have based their analyses on inaccurate data without being aware of the underlying data quality issues. We informed the TikTok Research API team, who promised to repair the API as soon as possible. We also informed fellow researchers at the Annual Conference of the European Digital Media Observatory (EDMO) in Brussels, the German Digital Services Coordinator (DSC) at the Federal Network Agency (Bundesnetzagentur), and team members of DG Connect at the European Commission. The results of the researcher exchange on APIs were summarized in an EDMO report. Other researchers, including Pierri and Corso, also reported issues with TikTok's Research API.

Figure 3: The API underreported the view count metric compared to the view counts indicated on the website for a sample of videos of our European political parties.

Figure 4: Similar to the view count metric, the API underreported share counts for a sample of videos of our European political parties. The API appeared to fetch data from an outdated or incorrect database.

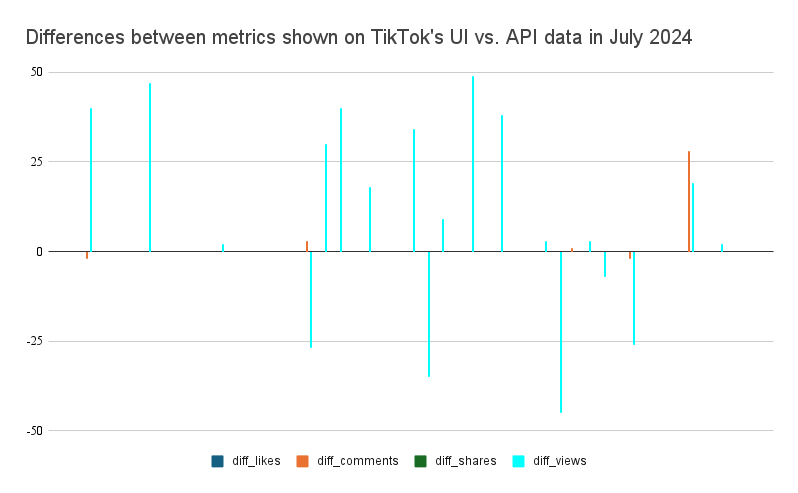

By July 2024, the differences in a random sample of videos from our data (Figure 5) were only marginal, indicating that TikTok had repaired the API. Such marginal deviations are typical of distributed systems and should not affect the results of research conducted with data collected via TikTok's Research API. However, researchers who worked with TikTok data during the EU elections should consider recollecting any data gathered via the API between March and July 2024. TikTok should also inform its API users about the data quality issues during this period.

Figure 5: Comparing data collected via the API with data shown on TikTok's user interface reveals only marginal differences, indicating that TikTok has repaired the Share and View metrics in the API.

Learnings and Policy Recommendations

While the TikTok API seems to have been repaired and now only deviates marginally from the metrics seen by users on its app or website, the data issues led me to think about lessons for policymakers concerning the European data access regime and beyond.

First, building reliable research infrastructures takes time and requires companies to allocate internal resources, such as software engineers and server capacity, to build and maintain research services they cannot directly monetize. While platforms benefit from a better understanding and mitigation of risks on their services, they lack incentives to allocate engineering resources to providing 'free' and well-maintained research data access, e.g., via APIs. To ensure that platforms allocate resources to such uncompensated research data infrastructures, they must face a credible threat of fines for non-compliance.

Moreover, we only found TikTok's API issues because some of the data seemed off when we assessed it manually. We could then systematically compare the API data with the user interface data only by using a web scraper. Recently, however, scraping platform data has become increasingly difficult. For example, X now requires a login to view content, raising legal questions about whether the data can still be classified as public. In addition, X has been rejecting many researchers' API access applications, as outlined by colleagues from the Weizenbaum Institute in a policy paper on early DSA compliance issues. From a researcher's perspective, it is crucial to be able to collect data independently, and platforms should be obliged to make their services 'scrapable.' While this could be limited to registered researchers, it is generally recognized that under Art. 40(12) of the DSA, public data should be accessible to researchers from NGOs and universities.

The reliance of researchers on platform-provided research infrastructure and the quality of the data provided also highlights another lesson: an entity independent of the platforms should test and ensure the quality of the data made available via APIs under Art. 40(12) of the DSA and, as far as possible, of the data provided to vetted researchers under Art. 40(4) and 40(8). Current discussions focus on organizing data quality assurance and testing, as well as the further development of research methods, within the independent intermediary body recommended by an EDMO working group on data access. This initiative, however, requires significant resources and cannot rely solely on volunteer contributions from the research community.
