What the WGA Contract Tells Us About Workers Navigating AI

Eryk Salvaggio / Sep 28, 2023

The AI provisions in the Writers Guild of America agreement demonstrate the potential of social choice in the application of technology, says Eryk Salvaggio, a Research Advisor for Emerging Technology at the Siegel Family Endowment.

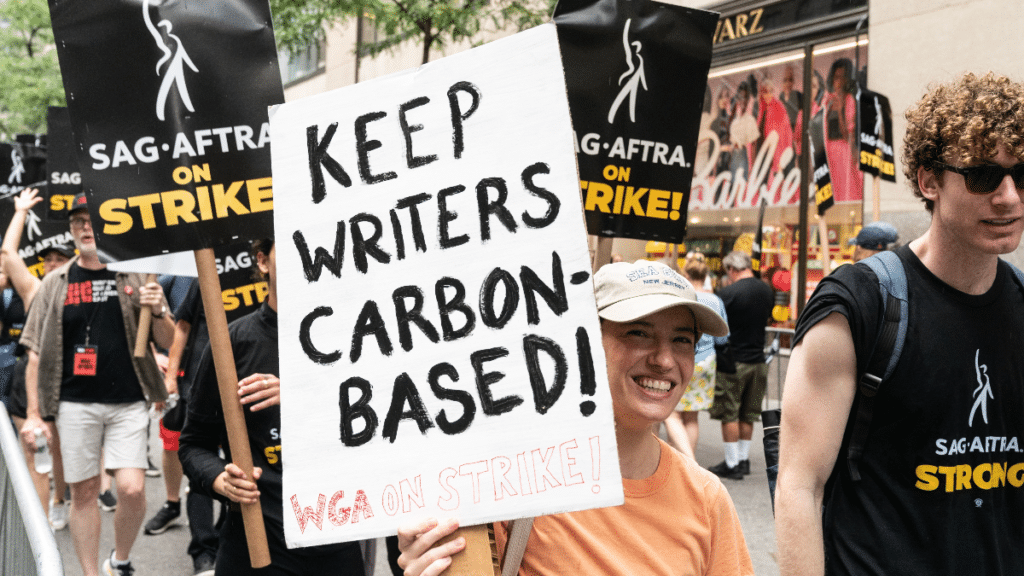

This week, the Writers Guild of America announced that it had reached an agreement to end the nearly five-month writers’ strike. The agreement includes provisions on the use of artificial intelligence, one of the union’s concerns. While the ink is still drying, it’s a useful time to reflect on the ways the WGA strike challenged use cases of generative artificial intelligence. AI may not have been the union’s key concern when the strike started, but the timing offers a glimmer of optimism: generative AI is rising, but so is worker power. The result may be something that policymakers could not achieve on their own.

The tentative agreement addresses four key points about the use of AI in writers’ projects. Specifically, these terms apply to generative AI, defined as “a subset of artificial intelligence that learns patterns from data and produces content, including written material, based on those patterns, and may employ algorithmic methods (e.g., ChatGPT, Llama, MidJourney, Dall-E).” This carves out a specific suite of content-generation tools, as distinct from the algorithms used in computer graphics.

Taken directly from the WGA’s summary, the four main points on AI are these:

- AI can’t write or rewrite literary material, and AI-generated material will not be considered source material under the minimum basic agreement (MBA), meaning that AI-generated material can’t be used to undermine a writer’s credit or separated rights.

- A writer can choose to use AI when performing writing services, if the company consents and provided that the writer follows applicable company policies, but the company can’t require the writer to use AI software (e.g., ChatGPT) when performing writing services.

- The Company must disclose to the writer if any materials given to the writer have been generated by AI or incorporate AI-generated material.

- The WGA reserves the right to assert that exploitation of writers’ material to train AI is prohibited by MBA or other law.

I am a creative myself. Most recently, I was the education designer for a six-month residency program with SubLab and AIxDesign. The StoryxCode program paired animators and creative technologists to create a short film project. Through that experience, and in writing our report on the outcomes, I saw firsthand how creatives negotiate the integration of AI into their workflows. In the end, every artist was different, navigating a number of useful tensions between themselves and the technologies they used, as well as the ethical concerns and social impacts that surround AI in creative contexts.

That experience leads me to read the four points of the WGA agreement on AI through a very specific lens: that of a creative with an interest in AI and policy. Broken down, these terms align with a set of values that are helpful in navigating generative AI: authority, agency, disclosure, and consent.

- Authority negotiates who has the right to deploy AI in a project, and when.

- Agency negotiates how AI is integrated into a workflow.

- Disclosure refers to acknowledging the generative origins of any material.

- Consent allows writers to negotiate terms for training generative systems on the material they make.

What might we learn from these principles to structure broader AI policy for workers?

Authority: Who Decides When to Use AI?

Authority speaks to a key tension in the debate around AI and creativity today: If we can use AI to generate thousands of ideas, why wouldn’t we? This reflects a quantifiable, production-focused view of the creative process. To most creatives, it seems like the wrong focus. Creatives are skilled at generating ideas – this is what they do. It’s not the speed of idea generation that concerns anyone. It’s the quality and meaning of those ideas, the way those ideas are crafted: the stories they tell.

A reasonable suspicion of generative AI is that the quality of service and craft is diminished by replacing skilled human workers with untested statistical engines. While it would be easy to imagine AI writing screenplays, given the lengthy texts it can generate, generative writing cannot reliably craft unique narrative structures, surprising characters, or other lures for a viewer’s curiosity. These are the things that make good stories. Instead, AI-generated content is the result of a statistical analysis of patterns: the system predicts which words are likely to follow one another in response to a prompt, then extends that prediction into longer, predictable output, in which any surprises emerge chiefly from accidental collisions.

While the fear that today’s AI could rival human creativity is largely unfounded, that doesn’t mean industry won’t try anyway. MIT professor Daron Acemoglu has warned against the rise of “so-so technologies,” which displace workers without a corresponding rise in productivity or returns. Losing writers by automating bad, commercially untenable but cheaply produced ideas isn’t just bad for art, labor, and audiences; it may ultimately be bad for the studios themselves.

Who gets to deploy AI, and for what purpose, is a core question for determining sensible AI policy. At the top of organizations, where cost is a core metric, the decision to automate may seem easy. But whether it is building characters or building cars, the perspective of workers on the floor needs to be included in decisions about automation. The WGA fought for the right of writers to shape those decisions themselves.

By specifically stating that AI cannot be the origin of content, the writers assert that the creative process cannot belong to budget-minded managers or studio executives. Notably, the agreement could also stifle the production of AI-generated stories, since AI-generated material cannot be used as source material for films. Altogether, it sets a boundary on AI’s incursion into a profitable industry. It also frames this work through the lens of human dignity, ensuring that workers are not hired to sort through endless, cheaply produced concepts. Rather, they are given a central role in the things they make.

Agency: Who Decides How to Use AI?

Authority is about the structure of an organization, with AI integrated into that organization from the top down. Agency is more granular: the capacity to act and make decisions. The WGA Memorandum of Agreement (MOA) asserts that it is writers who decide how they will use AI.

It also clarifies that human authors, rather than their tools, hold the rights over the material they make: “because neither traditional AI nor generative AI is a person, neither is a ‘writer’ or ‘professional writer’.” (MOA, page 68). This is an argument at odds with the Copyright Office, which currently holds that AI “makes” art and stories. The WGA MOA argues the exact opposite: that when writers use AI, they remain the authors of the resulting work.

The contract explicitly states that it is writers who decide, with the company’s consent, how to integrate AI into their work. It centers the autonomy of skilled workers to choose for themselves which parts of their workflow can be automated, and which parts are best kept under their control. It affirms that companies cannot insist on the use of AI in ways that compromise that autonomy in search of faster production or ideation.

What’s powerful about this agreement is that the WGA has secured productivity gains for workers, rather than maximizing productivity for the exclusive benefit of studios. If AI offers any benefits, workers can decide how to deploy them. These efficiencies can trickle up, especially in creative work. This approach also overlaps with efforts in manufacturing to give workers greater agency over the use of technology.

The inclusive innovation cycle is the subject of Professor Nichola Lowe’s work at the University of North Carolina at Chapel Hill and with the Urban Manufacturing Alliance. Its inclusivity rests on a trust-based process in which workers and executives identify, together, the areas where technology would most likely benefit their work, save time, and cut costs. It is at once a means of identifying meaningful interventions for automation and of distributing the benefits of those interventions more equitably.

Centering the agency of workers in automation is also an incentive for innovation. Workers will develop workflows, find new tools, and perhaps even participate in open source movements and projects that cater to creatives in meaningful ways. The result is ground-level innovation that speaks to a broader base of users and creates a climate for more participation in technological development.

Disclosure: What Are We Working With?

Disclosure of AI-generated content in projects where writers are brought on board is part of a two-pronged approach to consent. Workers have the right to know whether an idea was the result of human thinking or machine analytics, both to decide whether and how to engage with that material and to ensure adequate payment for the work they do revising it. Otherwise, a studio may generate a cheap, roughly sketched concept, then hire human writers to “revise” this concept into a full story and plot. Because that work would count as a revision, it would pay less than if the same writer had developed a full script themselves.

Likewise, policies on the labeling of AI-generated content would be valuable for consumers, not just of media but of any material referenced for the purpose of making decisions. Labeling helps consumers decide what to trust, but it can also help human writers determine their role in the production pipeline. A writer can refuse to work with AI-generated content if its origin is disclosed, or demand higher wages for so-called “revisions” of a generative AI text. This matters because generated content is generally not considered copyrightable without human embellishment. In other words, if a studio comes across an algorithmically generated blockbuster, it can’t claim IP rights until a human writer shapes it into something else. Now the studios are obligated to make that disclosure, so that the writers brought in to work on these projects can know their value. The agreement benefits studios, too, by helping them determine whether a human-authored work made with generative AI is copyrightable: a human author pitching a generated screenplay has to be clear about the role they played, and the role the AI played, to ensure that the studio can purchase those rights.

Disclosing the role that generative AI played in a script is useful to all parties. Yet if a consumer wants to make decisions about a product based on the recommendations of a large language model, nothing guarantees a similar degree of disclosure. Are we talking to a human or a chatbot about our life insurance policy or car loan? If my pet is sick, can I trust that the telehealth service is connecting me with a trained veterinarian, or is a chatbot generating “statistically likely” medical advice? If I am asked to “edit” a document or report at work, knowing that the foundational text was generated tells me the job will be far more intensive than editing.

Disclosure of AI-generated material in any decision, including in determining the value of one’s work, is a sound and simple policy that extends far beyond the entertainment industry. Similar measures on the use of chatbots have been passed or proposed in California and Europe, and the US Federal Trade Commission (FTC) has made clear that misleading users can be penalized. Should we also advocate for disclosure of generative origins for any text that could be read as persuasive? At a minimum, we should acknowledge that AI-generated material often demands more critical analysis and verification, not less, and compensate workers accordingly.

Consent: Who Trains Tomorrow’s Models?

The final piece of the WGA agreement reserves the Guild’s right to assert that writers have authority to determine how and when their writing is used in training generative models. Given recent developments in generative AI, including Getty’s generative model trained on its own photographic archive, this is a powerful collective mechanism for writers in the Guild. Writers of all kinds should have similar protections.

Artists and writers who share their work online see themselves as doing what artists and writers do. Right now, however, anyone making a website to share their drawings or stories is also building datasets for AI training. Those datasets should be theirs to sell. Published authors can point to specific financial value in their words. Even the amateurs writing dozens of absolutely painful science fiction stories are producing something valuable: a collection of novel, human-written sentences for machines.

A book, a blog post, a poem, or a social media post is a dataset of word patterns. A small collection of drawings you post online is an image dataset. Artists and writers should have the right to accept pennies, or a few dollars, in compensation for creating and collecting those datasets, or to refuse those pennies on principle. It’s not about generating wealth, but about enabling consent and establishing frameworks for generative AI that center it. Some of these frameworks may be technical: mechanisms for evaluating the content of training data already exist. If Getty’s image model is a sign of things to come, with smaller, enclosed archives used to train their own “flavors” of generative AI, then establishing clear rules and norms for training on data from anyone who contributes to these archives is vital. The same will be true for any platform with an interest in selling and sharing this data.

Conclusion: Social Choice in Machine Learning

The WGA strike has reminded me of a 1977 essay by David Noble, “Social Choice in Machine Design: The Case of Automatically Controlled Machine Tools, and a Challenge for Labor.” The mere existence of this essay, written nearly 50 years ago, suggests that technology may take new forms, but questions about automation and agency have been with us for generations.

Noble bemoans the sense of inevitability attached to technological displacement with an admirably belligerent passion:

> For lazy revolutionaries, who proclaim liberation through technology, and prophets of doom, who forecast ultimate disaster through the same medium, such notions offer justifications for inactivity. And, for the vast majority of us, numbed into a passive complicity in “progress” by the consumption of goods and by daily chants echoing the slogan of the 1933 World’s Fair – SCIENCE FINDS, INDUSTRY APPLIES, MAN CONFORMS – such conceptions provide a convenient, albeit often unhappy, excuse for resignation, for avoiding the always difficult task of critically evaluating our circumstances, of exercising our imagination and freedom.

The WGA strike can be seen as evidence that technological development is not an inevitable or autonomous process, but one shaped by the contexts and frictions of its deployment. Workers have the power to challenge technology’s incursions, and they have exercised it. The question is whether policymakers will continue to listen to these voices on the thorny subject of integration, or whether workers will be cast aside, not for robots or synthetic minds, but for the profit incentives of the companies that deploy them.
