Voice clone of Anthony Bourdain prompts synthetic media ethics questions

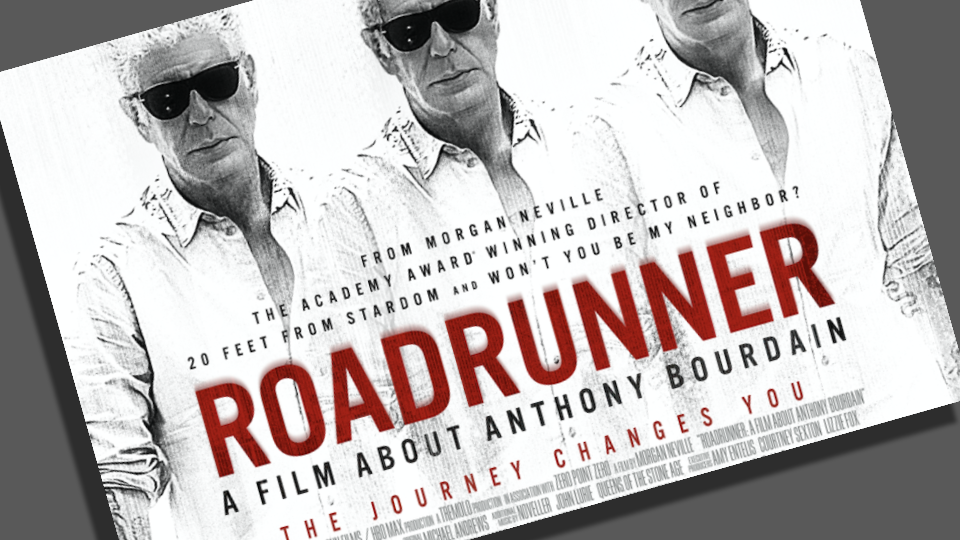

Justin Hendrix / Jul 16, 2021

A New Yorker review of “Roadrunner,” a documentary about the deceased celebrity chef Anthony Bourdain by the Oscar-winning filmmaker Morgan Neville, reveals that a peculiar method was used to create a voiceover of an email written by Bourdain. In addition to using clips of Bourdain's voice from various media appearances, the filmmaker says he had an "A.I. model" of Bourdain's voice created in order to complete the effect of Bourdain 'reading' from his own email in the film. “If you watch the film, other than that line you mentioned, you probably don’t know what the other lines are that were spoken by the A.I., and you’re not going to know,” Neville told the reviewer, Helen Rosner. “We can have a documentary-ethics panel about it later.”

On Twitter, some media observers decided to start the panel right away.

"This is unsettling," tweeted Mark Berman, a reporter at the Washington Post, while ProPublica reporter and media manipulation expert Craig Silverman tweeted "this is not okay, especially if you don’t disclose to viewers when the AI is talking." Indeed, "The 'ethics panel' is supposed to happen BEFORE they release the project," tweeted David Friend, Entertainment reporter at The Canadian Press.

In order to get a better sense of how this episode plays into concerns around synthetic media, deepfakes and ethics, I spoke to Sam Gregory, Program Director of WITNESS, a nonprofit which helps people use video and technology to protect human rights. Sam is an expert on synthetic media and ethics, and recently wrote a piece in Wired arguing the world needs more such experts to address the looming problems posed by these new technologies, which offer enormous creative potential along with frightening epistemic implications.

Justin Hendrix:

So today on Twitter, I'm seeing people argue about a documentary review about Anthony Bourdain, the famous traveling chef who died, sadly, by suicide in June 2018. And folks are really needling in on this one particular segment of this review, where the director is talking about a peculiar tactic that he used to voice Anthony Bourdain's ideas. Can you tell folks what's going on?

Sam Gregory:

I haven't seen the film, but it's described quite well, I think, in the New Yorker piece. And it basically notes that he is voicing over some emails that Anthony Bourdain sent to a friend. And he has what sounds like the voice of Anthony Bourdain saying some of the lines in the email. And as it turns out in this article, the director reveals to the writer that he used one of the proliferating number of ways you can generate audio that sounds like someone, sort of deep fake audio, to recreate those words.

And I think that's shocked people, right? What annoys people is he then goes on to say, "Oh, maybe someday we should have a documentary ethics panel on this." This is one of those discussions we need to be having about ‘when is it okay to synthesize someone's face or audio or body and use it’. And I think it's bringing up all these questions for folks around consent and disclosure and appropriateness.

Justin Hendrix:

It turns out you've been having ethics discussions about these things for quite some time. Can you tell us a little bit about that, and some of the ideas that you've arrived at?

Sam Gregory:

So, obviously there are deep fakes, which is a term that people use for a whole range of synthetic media generation. In audio that includes everything from making someone say something, to making a voice that sounds like someone, to making a voice that doesn't sound like a particular person but sounds real. That's all been exploding the last three or four years. At WITNESS, we made a decision about three and a half years ago that it was really important to have a group that thinks globally and from a human rights perspective about truth, trust and credibility in audiovisual media engage with critical questions as we moved into this era where more and more content might be visually and audibly fake-able in ways that people might not see, and in ways that make it look like a real person said or did something they never did. And so we spent the last three years focused on bringing together the perspectives of people globally who faced how these types of tools have been used maliciously in the past with people who are building these tools and people who are trying to commercialize them.

One of the areas we've really focused on in the last year is what guardrails we need as we go into an era where there will be more and more synthetic content, and more and more synthetic content mixed in with real content, right? So it's less this idea that there's this spectacular novelty, like the Tom Cruise deep fake. It's the idea that in our daily consumption of news, or of content on YouTube or TikTok, we're going to see more and more content that is this hybridized content. And so then, how do we think about the necessary laws? We might have laws that say you can't use someone's likeness without their permission, as we do in some countries, right? But also, what are the kinds of non-legal guardrails and the norms and principles around it?

this is unsettling https://t.co/s8qWqEpofa pic.twitter.com/imHHLLQnbH

— Mark Berman (@markberman) July 15, 2021

There’s a set of norms that people are grappling with in regard to this statement from the director of the Bourdain documentary. They're asking questions around consent, right? Who consents to someone taking your voice and using it? In this case, the voiceover of a private email. And what if that was something that, if the person was alive, they might not have wanted. You've seen that commentary online, and people saying, "This is the last thing Anthony Bourdain would have wanted for someone to do this with his voice." So the consent issue is one of the things that is bubbling here. The second is a disclosure issue, which is, when do you know that something's been manipulated? And again, here in this example, the director is saying, I didn't tell people that I had created this voice saying the words and I perhaps would have not told people unless it had come up in the interview. So these are bubbling away here, these issues of consent and disclosure.

But the backdrop is of course that there is a question of in what contexts we are used to things being faked, and where we're not. There's a conversation we need to be having, as synthetic media gets more and more prevalent, about where it's okay, and where it needs to be labeled or disclosed. Where do we expect consent? Where do we not? Where would we be surprised if you use synthetic media? And where might we be surprised that you use real media, right? If you're in a gaming environment, you are kind of surprised if something real appears. If you're in a news environment, you'd be surprised if someone told you that they had faked a politician appearing in that news clip.

And documentaries fall right in the middle, because of course, documentaries as a genre include everything from reality TV, where we know it's essentially all staged, to nature documentaries, where, of course, they didn't actually film all those animals in the wild, doing those things, right through to a documentary that's like a Frederick Wiseman documentary where he sat in on an institution for a year and filmed everything that happened, and says, "I didn't stage anything."

And so this documentary is falling right in the middle of that discussion about what do we expect in a documentary as more and more synthetic media appears? And how do these issues of consent and disclosure play out?

Justin Hendrix:

So you're telling me that Neil deGrasse Tyson didn't actually travel to a quasar in Cosmos.

Sam Gregory:

It's stunning to realize that amongst his powers is not the ability to fly into a quasar. And that when you see two praying mantises mate, it wasn't necessarily because someone tracked through the forest and waited until they happened to see one under their foot and then filmed it.

Justin Hendrix:

So you've got a draft of commitments that media companies and content publishers could make. What types of things do you think media companies and publishers should commit to?

Sam Gregory:

The norms and principles are something we've been working on and talking about with a lot of the players in this space. There was a great article by Karen Hao, from MIT Tech Review just last week that I highly recommend, that talks to some of the players in the synthetic audio space. And the article shows this commercial space is really growing, driven by improving technology. It’s both audio and video. There are a lot of companies who are starting to create synthetic avatars that look realistic. For example, you can create someone who gives a training video. And then you might get that synthetic avatar, for example, to speak in multiple languages or multiple voices. And of course, companies that have cost centers that are around human voices and performance, or have diverse customers or diverse staff who they want to engage with in customized, language-specific ways are gravitating towards these types of things.

And at the same time, of course, you've got news producers and documentary producers, much like the producer of this documentary, going, what cool things can I do with these types of tools? And so we've started to talk to a range of those players to think about: if we want to do this in a way that avoids explicitly malicious usages and also tries to do things like preserve audience trust, in news media, what do we need to do?

The easiest areas to agree on are the explicitly malicious. Most legitimate news outlets, most documentary makers, many content producers, even in a distributed environment like YouTube, would not go down the direction of these red lines. They will not produce nonconsensual sexual images and use them to attack individuals, right? Which is of course, one of the most pernicious uses of deep fakes now.

Justin Hendrix:

And the most prevalent.

Sam Gregory:

And certainly the most prevalent in harmful usages. Although, if you think about it, if you use Impressions or Reface or any of those apps online, there are probably many more of those silly ones where you've been stuck in Pirates of the Caribbean than there are malicious sexual images at this point. But certainly the most harmful use of deep fakes to date, from what we've heard globally and what you see in the research, is nonconsensual sexual images. So most legitimate companies, most legitimate media producers, don't want to go near that. So there are some obvious red lines.

So once we step back from the obvious red lines, what we've been looking at in the discussion around principles goes back first to questions of consent. How do you have transparent and informed consent appropriate to a product or a type of media? The ‘appropriate to product’ part is important because there are different types of content and synthesis products. There might be products where a voice actor licenses their voice and says, "Look, you can use this in all kinds of different ways and maybe I get a retainer or a reuse fee as you use it." There might be an example where an actor allows the additional voice dubbing that often happens in post-production to happen with this, because they're not available for the post-production. So consent isn't a totally fixed concept, but consent needs to be at the center of it.

Then the second area to engage with is labeling appropriate to the media environment. So for example, if you had a news broadcast and you used a synthetic figure in that news broadcast, the audience expectation is likely that they should know that the figure is synthetic.

I don't think that's changing all that fast. I'd want to know if someone stuck an extra figure into the G7 photo or whatever it is, right? I want to know that's a fake one. So in that case, labeling might mean you put a halo around someone's head so it’s super-clear where there is synthetic content. On the other hand, there are other environments where people might very quickly say, "Actually, we're kind of expecting that people are going to start using synthetic media," right? Maybe we'll start to see reality TV with synthetic media. And labeling might just mean, at the beginning, you say, "Look, we synthetically made X, Y, and Z." So labeling is again contextual. And that disclosure of when you used it is one of the hardest things, because there are very different norms and a different set of content production settings between a game versus reality TV versus news production versus weather. Already in the weather, you have synthetic weather broadcasters who are generated and customized for you.

And then the third area is around malicious deception. Clearly you can have artistic and critical media that is deceptive or satirical. We need to protect that. But when deception is malicious and the deception is disguised in a way that there's no way for an audience to understand that it has been changed, I think that's where we start to get into a lot of the discussion that is for audio and for video deep fakes and similar manipulations. Then people say, "Oh, you didn't get the joke," when someone fakes something, and it's clear that someone did it with malicious intent and they're gaslighting us when they say we didn't get the joke. They just shared it in a way that was designed to cause harm and without any intention for it to actually be satire, parody, drama or art, even if they claim it was. We’ve been working with MIT on the Deepfakery web series on how to grapple with this intersection of satire, parody and gaslighting.

And then the other set of things that we've been workshopping with folks are really around commitments to be explicit about the policy. If you're a media producer, you'd publish a policy on how to handle synthetic media, both around the stuff you make, but also what you publish. So if you share synthetic media from someone else, will you label it? If you mistakenly share something you thought was real, but turns out to be fake, will you correct it? So I think those are the two sides of what's happening now: the focus on clarity around consent, disclosure, and labeling appropriate to your production context and audience, and then making those norms and practices clear to your audience.

In some sense, media production is the easiest place for this. The harder place in the long run is how to do this well in the context of apps and more generic tools: as it becomes easier and easier to use an app or any kind of generic tool to create audio or deep fakes, those guardrails don't exist, because there's not a producer or a gatekeeper. So in a weird way, we're having this conversation around this Bourdain documentary, but that is maybe lower-hanging fruit, because there you're dealing with almost institutional, kind of professionalized zones of production, like documentary makers and media outlets, versus what is acceptable to do if you're using an app that can do many different functionalities.

Justin Hendrix:

Is there anything else that you want to get across about this, Sam?

Sam Gregory:

One thing is just, again, it's the value of the thought experiments of what we would consider acceptable. I think it's very valuable for this to be a broad conversation. Now, we've had a really heavy emphasis in our WITNESS work on "prepare, don't panic", right? And I think it's important to note we're not surrounded by fake voices everywhere around us. Most people calling us on the phone are real people, but it's actually very important to have a public conversation about expectations here, and to look at these scenarios.

I was actually doing a thought experiment myself before this and saying, "If they'd animated Anthony Bourdain's face and made him say these things, how would people have felt about that?" And I think there would have been an even stronger reaction there, because it would have been like you're appropriating his likeness.

The inherent squickiness of replication tech + people’s intensely personal ties to Bourdain work together to cloud the fact that this is basically someone reading My Dearest Annabel Today We March Toward Gettysburg over Ashokan Farewell, and that cloud itself is fascinating

— Helen Rosner (@hels) July 15, 2021

Whereas when you're making it seem like he spoke these words, there is something going on here that is actually a classic documentary technique, right? You see it in a Ken Burns documentary. Someone reads the letter from the Civil War soldier to his beloved. So there is something they're playing with, an existing documentary thing. If you actually saw him being recreated, speaking out, I think there would be an even stronger reaction.

I think we really need to have a public conversation now around expectations and norms, as this starts to become commercially viable for content producers and media to use, and then more easily available for any of us to do.

Justin Hendrix:

Thank you very much.

Sam Gregory:

Thank you.
