US Senate AI ‘Insight Forum’ Tracker

Gabby Miller / Dec 8, 2023

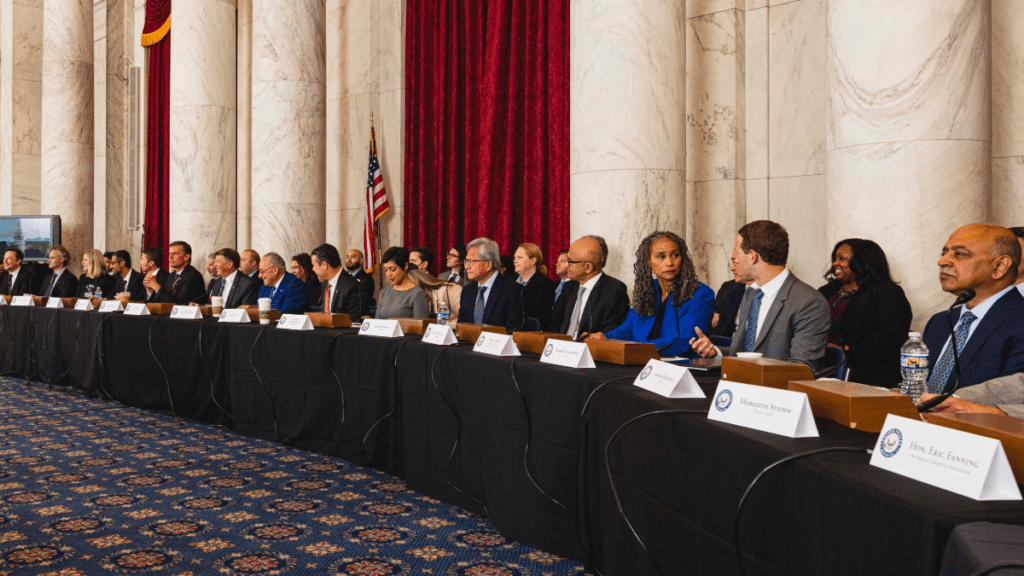

Photograph of participants in the first ever Senate 'AI Insight Forum,' September 13, 2023. Source.

The tracker was first published on September 21, 2023 and last updated on December 8, 2023.

Background

In June, Senate Majority Leader Chuck Schumer (D-NY) announced his SAFE Innovation Framework, policy objectives for what he calls an “all-hands-on-deck effort” to contend with artificial intelligence (AI). He called this a “moment of revolution” that will lead to “profound, and dramatic change,” and invoked experts who “predict that in just a few years the world could be wholly unrecognizable from the one we live in today.”

Asserting that the “traditional approach of committee hearings” is not sufficient to meet the moment, Sen. Schumer promised a series of AI “Insight Forums” on topics to include:

- “Asking the right questions”

- “AI innovation”

- “Copyright and IP”

- “Use cases and risk management”

- “Workforce”

- “National security”

- “Guarding against doomsday scenarios”

- “AI’s role in our social world”

- “Transparency, explainability, and alignment”

- “Privacy and liability”

Tech Policy Press is rounding up what we do (and don’t) know about the forums and will continue to catalog what information comes out of future meetings. Our goal is to create a central resource to track attendees, key issues discussed, and major takeaways from each event, in order to understand what ideas, individuals, and organizations are influencing lawmakers. This piece will be updated as new details become available on each of the planned forums.

(If you participated in one of the forums and have materials or other details to share with Tech Policy Press, get in touch.)

The Senate Hosts:

- Senate Majority Leader Charles E. Schumer (Opening statement)

- Senator Mike Rounds (R-SD) (Opening statement)

- Senator Martin Heinrich (D-NM) (Opening statement)

- Senator Todd Young (R-IN) (Post-forum statement)

Forums Eight and Nine

The final two forums in the private, nine-part series wrapped on Wednesday, Dec. 6, with a focus on ‘doomsday scenarios’ and ‘national security.’ Forum eight’s ‘doomsday’ exchange focused primarily on the near- and long-term risks posed by AI and solutions to help mitigate them, while forum nine’s national security discussion focused on how AI development can be maximized to bolster America’s military capabilities, according to Sen. Schumer’s office.

After the forums, Senate co-hosts indicated that key committees will begin ramping up efforts to craft bipartisan AI legislation in the coming months. “Now it’s time for the committees to actually step in and say what they have an interest in,” forum co-host Sen. Mike Rounds (R-SD) told the press.

Forum Nine

National Security

The Senate’s ninth ‘AI Insight Forum’ focused on national security. Dec. 6, 2023.

Attendees:

- Gregory C. Allen – Center for Strategic and International Studies, Director of Wadhwani Center for AI and Advanced Technologies

- John Antal – Author, Colonel (ret.)

- Matthew Biggs – International Federation of Professional and Technical Engineers (IFPTE), President

- Teresa Carlson – General Catalyst, Advisor and Investor

- William Chappell – Microsoft, Vice President and CTO, Strategic Missions and Technologies

- Eric Fanning – Aerospace Industries Association, President and CEO

- Michèle Flournoy – WestExec Advisors, Co-Founder and Managing Partner; Co-Founder and Chair of the Center for a New American Security (CNAS)

- Alex Karp – Palantir, CEO

- Charles McMillan – Los Alamos National Laboratory, Director (ret.)

- Faiza Patel – Brennan Center for Justice, Senior Director of the Liberty and National Security Program

- Scott Philips – Vannevar Labs, CTO

- Rob Portman – Former Senator (R-OH) and Co-founder of the Senate Artificial Intelligence Caucus

- Anna Puglisi – Georgetown University Center for Security and Emerging Technology, Senior Fellow

- Devaki Raj – SAAB Strategy Office, Chief Digital and AI Officer

- Horacio Rozanski – Booz Allen Hamilton, President and CEO

- Eric Schmidt – Special Competitive Studies Project, Founder and Chair; former Google CEO

- Jack Shanahan – Lieutenant General (USAF, Ret.); Center for a New American Security (CNAS), Adjunct Senior Fellow of the Technology & National Security Program

- Brian Schimpf – Anduril Industries, CEO and Co-founder

- Patrick Toomey – American Civil Liberties Union, Deputy Director of the National Security Project

- Brandon Tseng – Shield AI, Co-Founder and CEO

- Alex Wang – Scale AI, Founder and CEO

Senators who attended at least part of forum nine (in addition to the hosts):

- Sen. Bill Cassidy (R-LA)

- Sen. Maggie Hassan (D-NH)

- Sen. John Hickenlooper (D-CO)

- Sen. Amy Klobuchar (D-MN)

- Sen. Ed Markey (D-MA)

Issues discussed:

- Competition with China

- Funding and procurement

- Adopting and scaling AI military capabilities

- AI leveraged in the Russia-Ukraine war

- Recruiting and retaining top tech talent

- Prioritizing security clearances for tech workers

- "Modernizing" public/private partnerships on AI

- Making “big bets” on AI

The ‘national security’ forum was perhaps the least balanced of the nine forums in terms of sector representation. Of the 21 total attendees, just two civil society groups, the ACLU and Brennan Center for Justice, and only a single labor union, the International Federation of Professional and Technical Engineers, served as counterweights to a tech and defense industry-heavy session. Former Google CEO and current Chair of the Special Competitive Studies Project Eric Schmidt was also on the guest list, one of only two speakers to have attended more than one forum. (Schmidt also attended the first event in the series.)

Sen. Schumer, in his roughly 450-word opening remarks, mentioned ‘China,’ the ‘Chinese government,’ or ‘Chinese investors’ eight times, stating that “no discussion of AI for national security purposes can ignore the importance of competition with the Chinese government on AI.” After establishing this framing, Sen. Schumer tossed the first few questions to Schmidt on the need to develop AI capabilities for defense and intelligence purposes in order to maintain the US’ national security advantages. Schmidt, along with most tech industry representatives present, in turn emphasized the need for more government funding to accelerate the development of relevant AI technologies, according to sources Tech Policy Press spoke with.

Whether the government should increase funding and procurement for its AI military capabilities and the risks and dangers of doing so were not discussed much. And while the Department of Defense (DoD) made commitments to the ethical deployment of AI in June, and the Department of Homeland Security unveiled similar AI guardrails in September, they remain voluntary. “National security uses of AI are among the federal government’s most advanced, opaque, and consequential,” said attendee Patrick Toomey, deputy director of the ACLU National Security Project, in an emailed statement to Tech Policy Press. “Although national security agencies have raced to deploy AI, enforceable safeguards for civil rights and civil liberties have barely taken shape. Congress must fill this gap before flawed systems are entrenched and continue to harm the American people,” Toomey added.

Another concern raised by civil society representatives present at the forum was that the national security sector is being insulated from AI regulatory developments. In its written statement, the Brennan Center for Justice extensively outlined the exemptions granted to the Intelligence Community and Defense Department for use case inventories; for sensitive law enforcement, national security, and other protected information; and for national security systems through the Advancing American AI Act and President Biden’s Executive Order on “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” And the ACLU, in its written statement, echoed concerns about policymakers adopting a “two-tiered approach” to AI safeguards, which are robust for most government agencies but embrace “sweeping exemptions and carve-outs for ‘national security systems,’ intelligence agencies, and defense agencies.”

Guiding principles on the responsible and ethical use of AI, like those adopted by the Department of Defense, were considered by others to be a “gating question” that served as a backdrop for the entirety of the discussion. “Nobody's talking about adopting [AI] willy nilly. [The forum] was more, you know, how do we make sure that to the extent we can do so responsibly, that we're scaling with speed,” said WestExec co-founder and managing partner Michèle Flournoy in an interview with Tech Policy Press. The forum also included a robust discussion around upskilling the existing workforce around AI literacy; attracting and retaining top tech talent; creating new pathways for digital workers, like a digital service academy or a digital non-military Reserve Officers' Training Corps (ROTC); and speeding up the security clearance process, according to Flournoy, who also served as the Under Secretary of Defense for Policy from 2009 to 2012.

The International Federation of Professional and Technical Engineers President Matthew Biggs spoke at length about labor issues. Biggs emphasized that not only should humans, rather than AI, be involved in all decision-making processes around national and homeland security issues, but also that federal core capabilities should remain in-house, rather than outsourced to contractors. Meanwhile, tech firms at the forum largely pushed for reforms that would allow the Pentagon to be ‘better customers’ of the AI-enabled products they sell, Flournoy recounted.

Many of the tech industry attendees also asked the government to “make big bets on AI,” which Biggs pushed back on. “It prompted me to say, look, let's not forget what we're talking about here: the expenditure of billions and billions of taxpayer dollars,” he said. “There has to be strict oversight of the way that money is expended. We shouldn't be willy nilly throwing billions of dollars out the window with something that we're not even sure about,” Biggs added.

In addition to the focus on strategic competition with China, attendees also briefly discussed applications of AI in the Russia-Ukraine war, which some in the room cited approvingly.

Forum Eight

Risk, Alignment, & Guarding Against Doomsday Scenarios

Senator Schumer co-hosts the Senate’s 8th AI Insight Forum, focused on preventing long-term risks and doomsday scenarios. Source

Attendees:

- Vijay Balasubramaniyan – Pindrop, CEO and Co-founder

- Amanda Ballantyne – AFL-CIO Technology Institute, Director

- Okezue Bell – Fidutam, President

- Yoshua Bengio – University of Montreal, Professor

- Malo Bourgon – Machine Intelligence Research Institute, CEO

- Martin Casado – Andreessen Horowitz, General Partner

- Rocco Casagrande – Gryphon Scientific, Executive Chairman

- Renée Cummings – University of Virginia, Assistant Professor of Data Science

- Huey-Meei Chang – Georgetown’s Center for Security and Emerging Technology, Senior China Science & Technology Specialist

- Janet Haven – Data & Society, Executive Director

- Jared Kaplan – Anthropic, Co-Founder and Chief Science Officer

- Aleksander Mądry – OpenAI, Head of Preparedness

- Andrew Ng – AI Fund, Managing General Partner

- Hodan Omaar – Information Technology and Innovation Foundation (ITIF), Senior Policy Analyst

- Robert Playter – Boston Dynamics, CEO

- Stuart Russell – UC Berkeley, Professor of Computer Science

- Alexander Titus – USC Information Science Institute, Principal Scientist

Senators who attended at least part of forum eight (in addition to the hosts):

- Sen. Tammy Baldwin (D-WI)

- Sen. Alex Padilla (D-CA)

Issues discussed:

- AI p(doom) and p(hope)

- Artificial general intelligence (AGI)

- Long-term versus short-term risks of AI

- Regulatory capture and innovation

- Competition with China

- AI alarmism and doomerism

- Nuclear and bioweapons

- Deepfakes

- AI regulatory bodies

- Risk thresholds

On Wednesday morning, Sen. Schumer kicked off a double-header day of ‘AI Insight Forums’ to a more-crowded-than-usual room of guests and congressional staffers. Academics like University of Virginia data scientist Renée Cummings and USC Information Science Institute principal scientist Alexander Titus appeared alongside tech industry representatives such as OpenAI Head of Preparedness Aleksander Mądry and Anthropic Co-founder Jared Kaplan.

The eighth forum, focused on ‘doomsday scenarios,’ was divided into two parts, with the first hour dedicated to AI risks and the second on solutions for risk mitigation. It started with a question posed by Sen. Schumer, asking the seventeen participants to state their respective “p(doom)” and “p(hope)” probabilities for artificial general intelligence (AGI) in thirty seconds or less.

Some said it was ‘surreal’ and ‘mind boggling’ that p(dooms) were brought up in a Senate forum. “It’s encouraging that the conversation is happening, that we’re actually taking things seriously enough to be talking about that kind of topic here,” Malo Bourgon, CEO of the Machine Intelligence Research Institute (MIRI), told Tech Policy Press. Bourgon provided a p(doom) somewhere in the double digits, but doesn’t think it’s particularly useful to try and nail down an exact number. “I don’t really think about it very much. Whether it's a coin flip, or two heads in a row, or Russian Roulette, you know, any of these probabilities are all unacceptably high in my view,” he said.

Others rejected Sen. Schumer’s question outright. “[The threat of AI doom] is not a probability, it’s a decision problem, and we need to decide the right thing. We’re not just trying to predict the weather here, we're actually deciding what's going to happen,” said Stuart Russell, professor of computer science at the University of California, Berkeley, in an interview with Tech Policy Press.

Russell was one of the main signatories of an open petition published in March calling for “all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4,” which has since acquired nearly 34,000 signatures. Other lead signatories included University of Montréal Turing Laureate Yoshua Bengio, who also attended the ‘doomsday’ forum, as well as tech executives such as Elon Musk, Apple cofounder Steve Wozniak, and Stability AI CEO Emad Mostaque.

“A number of people said their p(doom) was zero. Interestingly, that coincided almost perfectly with the people who have a financial interest in the matter,” Russell said, including those who work for or invest in AI companies. “It’s a bit disappointing how much people’s interests dictate their beliefs on this topic,” he said, later adding that he was “a little surprised” the Senators invited people with a vested interest in saying extreme risk doesn’t exist to a discussion about extreme risk.

One of the most contentious topics was not necessarily whether AGI can be achieved – although many attendees think it can and will very soon – but rather whether AGI should be the principal doomsday scenario to be concerned about, according to Fidutam President Okezue Bell. “A lot of perspectives were voiced around how tackling these near term concerns will help ensure that there aren't long term risks posed with AI,” Bell told Tech Policy Press. Some near-term high-risk scenarios that Fidutam highlighted include discrimination and disinformation that might “snowball into existential, large-scale risks posed by [AI] technologies,” which haven’t yet been fully grappled with, according to Bell.

A conversation also ensued around whether AI ‘alarmism’ comes from a place of genuine fear or is motivated by other factors, like regulatory capture, according to Hodan Omaar, senior policy analyst at the Information Technology and Innovation Foundation (ITIF). Attendees told Tech Policy Press that the ‘regulatory capture’ theory – the idea that regulation could stifle innovation or is an attempt by companies like OpenAI to prevent other companies from getting into the AI-building business – was shut down swiftly by the room.

Omaar additionally characterized the forum as ‘constructive,’ and framed her response to p(doom) more broadly. “The way I think about it is that my range for what p(doom) for AGI is is somewhere around what my p(doom) for other hypothetical, but yet unproven, risks are, rather than necessarily putting a number on it,” Omaar told Tech Policy Press. She thinks considering other domains, like the p(doom) of physicists creating a black hole with particle accelerators, can be instructive for thinking about p(doom) for AGI.

For some attendees, more time could have been dedicated to solutions for theoretical doomsday scenarios. Solutions, according to Russell, should precede any skepticism one has around AI risk, particularly around artificial general intelligence. “You're entitled to be skeptical about the risk if you already know how to solve that problem. But nobody was putting their hand up and saying we know how to solve that problem, and a lot of people were saying there is no risk,” said Russell. A deeper understanding of how these machines work is also necessary to avoid loss of control, according to MIRI’s Bourgon, who drove home that there’s a fundamental, common-sense risk associated with systems that can function in ways outside their intended design.

Forum Seven

Transparency & Explainability and Intellectual Property & Copyright

The Senate’s seventh ‘AI Insight Forum’ focused on transparency, explainability, intellectual property, and copyright. Source.

On Wednesday, Nov. 29, Sen. Schumer brought together labor union and trade association leaders from the news media, music, and motion picture industries alongside tech leaders, academics, and more for the seventh ‘AI Insight Forum.’ The forum explored AI transparency – how to define it and the limitations of potential transparency regimes – as well as what AI means for creators and inventors, particularly with regard to intellectual property and copyright, according to a statement from Sen. Schumer’s office.

Attendees:

- Rick Beato – Musician and YouTuber

- Ben Brooks – Stability AI, Head of Public Policy

- Mike Capps – Howso, CEO and Co-founder

- Danielle Coffey – News/Media Alliance, President and CEO

- Duncan Crabtree-Ireland – SAG-AFTRA, National Executive Director and Chief Negotiator

- Ali Farhadi – Allen Institute for AI, CEO

- Zach Graves – Foundation for American Innovation, Executive Director

- Vanessa Holtgrewe – IATSE, Assistant Department Director of Motion Picture and Television Production

- Mounir Ibrahim – Truepic

- Dennis Kooker – Sony Music Entertainment, President of Global Digital Business & US Sales

- Curtis LeGeyt – National Association of Broadcasters, President and CEO

- Riley McCormack – Digimarc, President and CEO

- Cynthia Rudin – Duke University, Professor of Computer Science and Engineering and Director of the Interpretable Machine Learning Lab

- Jon Schleuss – NewsGuild, President

- Ben Sheffner – Motion Picture Association, Senior VP and Associate General Counsel for Law & Policy

- Navrina Singh – Credo AI, Founder and CEO

- Ziad Sultan – Spotify, Vice President of Personalization

- Andrew Trask – OpenMined, Creator and leader

- Nicol Turner Lee – Brookings, Senior Fellow of Governance Studies and Director of the Center for Technology Innovation

Senators who attended part of at least one forum on Nov. 29:

- Sen. Dick Durbin (D-IL)

- Sen. Angus King (I-ME)

- Sen. Amy Klobuchar (D-MN)

- Sen. Alex Padilla (D-CA)

- Sen. Mark Warner (D-VA)

Issues discussed:

- NO FAKES Act Senate draft bill

- Digital replicas and likeness

- Narrow versus broad copyright legislation

- Data used to train large language models (LLMs) and the fair use doctrine

- Balancing copyright with First Amendment protections

- Open models and algorithmic transparency

- AI assurance sandboxes

- The Coalition for Content Provenance and Authenticity (C2PA) standard

- Interoperability and scaling transparency online

- AI disclosure mechanisms

Ahead of the seventh ‘AI Insight Forum,’ Sen. Todd Young (R-IN) sat down with Axios Co-founder and Executive Editor Mike Allen for Axios' AI+ Summit in Washington, DC. There, the senator expressed how encouraged and “frankly a little surprised” he’s been by the series, which he’s co-hosting alongside Sen. Charles Schumer (D-NY), Sen. Martin Heinrich (D-NM), and Sen. Mike Rounds (R-SD).

“I expected more disagreement when it came to the role that government should play, the extent to which we should protect workers in various ways from the [AI] technology. There haven’t been very significant disagreements,” Sen. Young said. One of the areas he expects the most division around, however, is labor – a major theme of this forum.

Of the 127 total attendees across the seven ‘AI Insight Forums,’ thirteen have been from labor unions – three of whom joined this latest forum. And Sen. Young was correct with regard to discord between the labor leaders, primarily over intellectual property and copyright.

One of the biggest points of tension at Wednesday’s forum was around potential federal protections for digital image rights. Attendee Duncan Crabtree-Ireland, the National Executive Director and Chief Negotiator at the Screen Actors Guild and the American Federation of Television and Radio Artists, or SAG-AFTRA, advocated at the forum for a federal intellectual property right in voice and likeness. He specifically endorsed protections found in the NO FAKES Act, a discussion draft of a bill introduced by Sen. Chris Coons (D-DE) with Sens. Marsha Blackburn (R-TN), Amy Klobuchar (D-MN), and Thom Tillis (R-NC) in October, that addresses the use of non-consensual digital replications in audiovisual works and sound recordings. SAG-AFTRA, which is still ratifying its contract after its 118-day strike earlier this year, built AI provisions into its deal with the movie studios that give its members substantial control over the creation and use of their digital replicas.

The Motion Picture Association (MPA), on the other hand, isn’t throwing its full weight behind the NO FAKES Act just yet. MPA’s Senior Vice President and Associate General Counsel for Law and Policy Ben Sheffner, who also attended the forum, told Tech Policy Press that the MPA is “working productively” with the staff of the bill’s cosponsors to “improve some of the First Amendment protections that would get us to a place where we can support [the Act].” While the MPA thinks it’s legitimate for Congress to consider federal protections around, say, replacing an actor or a recording artist's performance without their permission, Sheffner said it needs to be done carefully and narrowly. Some of the constitutional encroachments, according to the MPA, include requiring studios to put “MADE WITH AI” labels in movie scenes, which it argues “would hinder creative freedom and could conflict with the First Amendment’s prohibition against compelled speech.”

The news industry was also well represented at the forum, with leaders from the National Association of Broadcasters, NewsGuild, and the News/Media Alliance all in attendance. NewsGuild President Jon Schleuss’ major focus at the forum was pointing out that large language models are not only actively accelerating the spread of misinformation and disinformation, but are also trained on news content in violation of news sites’ terms of service. “For the publishers, the broadcasters, for me, there's a lot of concern that we had around OpenAI, these AI companies that have actually wholesale scraped most every piece of news content from news websites and used that to train their large language models,” Schleuss said in an interview. “It’s also just hyper-problematic, but we don’t really know the full extent because there’s no transparency in what those AI models actually use as training material, right?” he added. Schleuss pointed senators to the Community News and Small Business Support Act, a bill proposed in the House that would provide tax credits to news companies to hire and retain local journalists. Even recovering a fraction of the roughly 30,000 journalism jobs lost over the past decade could help mitigate the spread of disinformation, Schleuss said.

On the subject of transparency and explainability, one expert at the forum was Professor of Computer Science and Electrical and Computer Engineering at Duke University Cynthia Rudin, who focuses on interpretable machine learning, or designing machine learning models that people can understand. This work includes when to use what models in which contexts, such as healthcare or criminal justice decisions, where Rudin argues for simple predictive models in lieu of ultra-complex, black box ones. “I want the government to legislate that no black box model should be used [in high-stakes domains] unless you can't create an equally accurate interpretable model,” Rudin told Tech Policy Press. “There's no reason to use a black box model, if you can construct something that's equally accurate, and that's interpretable.”

Rudin’s technical expertise also serves as a bridge to the forum’s intellectual property and copyright discussion. She shares worries with news media representatives about generative AI’s potential to produce fairly believable disinformation at scale, which may in turn degrade trust in news and democracy. And, from a copyright perspective, Rudin doesn’t buy generative AI companies’ claims that just because they can train their algorithms on news content under fair use, they should. “I did make a point during the forum that this is a technical problem, like [generative AI companies] may claim they can't train without a huge amount of data, but that's not necessarily true, they just don't know how to do it,” Rudin said. Arguments over fair use can be distractions from potential technical solutions.

After the forum, Sen. Schumer told reporters that the next and final two sessions will focus on ‘national security’ and ‘doomsday scenarios.’ And in terms of a timeline for forthcoming AI legislation, the Senate Majority Leader doubled down on his promise that it is coming in a matter of months. “We’re already sitting down and trying to figure out legislation in certain areas, it won’t be easy, but I’d still say months, not days, not years,” he said. One of his highest legislative priorities, he said, will be around AI and elections.

Press coverage:

- PBS: Senate Majority Leader Schumer gives remarks after AI Insight Forum (video)

- Axios: D.C.'s AI forums stay non-partisan, for now

- The Hill: Young says labor issues could lead to bipartisan disagreements on AI regulation

Forums Five and Six

Sen. Schumer co-hosts the Senate’s fifth and sixth AI Insight Forums focused on ‘elections and democracy’ and ‘privacy and liability,’ respectively. Nov. 8, 2023.

On Wednesday, Nov. 8, the Senate hosted its fifth and sixth ‘AI Insight’ forums on ‘Democracy and Elections’ and ‘Privacy and Liability,’ respectively. The pair of forums is the latest installment in Senate Majority Leader Charles Schumer (D-NY)’s nine-part series – alongside co-hosts Sen. Martin Heinrich (D-NM), Sen. Mike Rounds (R-SD), and Sen. Todd Young (R-IN) – to “supercharge” the process of building consensus on Capitol Hill for artificial intelligence legislation.

Of the 108 attendees that have so far participated in the Senate’s six ‘AI Insight’ Forums, 44 have been from industry, while nearly forty have been from civil society and fourteen from academia. Civil society representation has included leaders from think tanks like the Center for American Progress and the Heritage Foundation, labor unions like the Retail, Wholesale and Department Store Union, and civic engagement groups such as the National Coalition on Black Civic Participation, among others. Two state government officials – Secretary of State of the State of Michigan Jocelyn Benson and Lieutenant Governor of the State of Utah Deidre Henderson – participated in the most recent forum on ‘Democracy and Elections.’

Senators who attended part of at least one forum on Nov. 8:

- Sen. Cory Booker (D-NJ)

- Sen. Maria Cantwell (D-WA)

- Sen. Dick Durbin (D-IL)

- Sen. John Hickenlooper (D-CO)

- Sen. Angus King (I-ME)

- Sen. Amy Klobuchar (D-MN)

- Sen. Jeff Merkley (D-OR)

- Sen. Alex Padilla (D-CA)

- Sen. Jeanne Shaheen (D-NH)

- Sen. Mark Warner (D-VA)

- Sen. Raphael Warnock (D-GA)

Press Coverage:

- Washington Examiner: Schumer artificial intelligence forums address elections and privacy

- Axios: Elections and privacy featured in next AI forums

Forum Five

Democracy and Elections

Attendees:

- Alex Stamos – Stanford University, Director of the Stanford Internet Observatory at the Cyber Policy Center

- Amy Cohen – National Association of State Election Directors, Executive Director

- Ari Cohn – TechFreedom, Free Speech Counsel

- Ben Ginsberg – Hoover Institution, Volker Distinguished Visiting Fellow

- Damon Hewitt – Lawyers’ Committee for Civil Rights Under Law, President and Executive Director

- Andy Parsons – Adobe, Senior Director of the Content Authenticity Initiative

- Jennifer Huddleston – Cato Institute, Tech Policy Research Fellow

- Jessica Brandt – Brookings Institution, Policy Director of Artificial Intelligence and Emerging Technology Initiative and Foreign Policy Fellow

- Melanie Campbell – National Coalition on Black Civic Participation, President and CEO

- Matt Masterson – Microsoft, Director of Information Integrity

- Michael Chertoff – Chertoff Group, Co-founder and Executive Chairman; Former Secretary of Department of Homeland Security

- Lawrence Norden – Brennan Center for Justice, Senior Director of the Elections and Government Program

- Neil Potts – Facebook, Vice President of Public Policy, Trust & Safety

- Dave Vorhaus – Google, Director, Global Elections Integrity

- Yael Eisenstat – Anti-Defamation League, Vice President of the Center for Technology & Society

- Kara Frederick – The Heritage Foundation, Director of the Tech Policy Center

- Jocelyn Benson – Secretary of State, State of Michigan

- Deidre Henderson – Lieutenant Governor, State of Utah

Sen. Schumer opened up his fifth forum – focused on ‘Elections and Democracy’ – with a new AP poll that found a majority of Americans (nearly 6 in 10 adults) are worried AI tools will increase the spread of false and misleading information in the 2024 elections.

“This isn’t theoretical,” Sen. Schumer warned. “Political ads have already been released this year using AI-generated images and text-to-voice converters to depict candidates negatively. Uncensored chatbots have already been deployed at a massive scale, targeting millions of individual voters for political persuasion. This is happening today.” One of the ways Congress is moving quickly to create AI safeguards, Sen. Schumer said, is through Sen. Amy Klobuchar’s two bipartisan bills meant to crack down on political ads containing AI-generated images and video. “But of course, more will have to be done,” he said as he kicked off the forum.

Issues discussed:

- Pres. Biden’s Executive Order on the ‘Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.’

- Obligations for watermarking AI-generated content

- Positive use cases for AI’s role in elections and civic engagement

- Mandating AI risk assessments

Civil Rights and AI

Forum five was largely centered around identifying the risks that AI poses for civil rights and civic participation, especially ahead of the 2024 elections, and what tools can be used to mitigate these potential harms.

In 2017, there was a deceptive campaign where bots flooded the Federal Communications Commission (FCC) with millions of comments from fake constituents pressing for the repeal of net neutrality rules, the Brennan Center for Justice’s Lawrence Norden wrote in his written statement for the ‘Elections and Democracy’ forum. As senior director of the Elections and Government Program, Norden highlighted this instance because the technology used to accomplish the campaign is a predecessor to today’s generative AI systems, and the episode “could forecast imminent dangers for agency rulemaking and other policymaking practices informed by public input.”

Another example brought before the Senate was from the Lawyers’ Committee for Civil Rights Under Law President and Executive Director Damon Hewitt. He cited a lawsuit that the National Coalition on Black Civic Participation recently won against right-wing activists Jack Burkman and Jacob Wohl, who in the 2020 elections made 85,000 robocalls largely to Black Americans to discourage them from voting by mail. Hewitt said it was an example of why the US needs federal legislation that can address AI-specific threats to maintain election integrity. The two men used “primitive” technology “on the cheap” to tell voters that if you vote by mail, the police will execute outstanding warrants, creditors will collect outstanding debts, and the CDC will use voters’ personal data to force mandatory vaccinations. “If they combine what they tried to do with AI, they’d have better data sets to more precisely target Black voters. And they could mimic me–my name, image, likeness–and clone my voice as a trusted messenger,” Hewitt told Tech Policy Press. While they were successfully prosecuted under Section 11 of the Voting Rights Act, no federal legislation currently exists that could address an AI-amplified version of these types of illegal schemes.

Hewitt doesn’t see AI as an enemy, but rather a “force multiplier for discrimination, racism, and hate, structural or otherwise.” He explained that as long as elections have existed in this country, so too have voter suppression and racial discrimination. This is why the Voting Rights Act exists, but Hewitt doesn’t see its provisions as enough to address the potential harms AI may bring to elections. “To the extent that there’s some in the room who would say, ‘There’s no need for regulation,’ you’re kind of denying the everyday reality of Black voters and other voters who are disenfranchised historically and repeatedly,” said Hewitt.

Jennifer Huddleston, a tech policy research fellow at the CATO Institute, frames the issue differently. “When it comes to questions of voter intimidation or things like that, we’re talking about a bad actor using a technology to do the act, not the technology itself doing the act,” she told Tech Policy Press. “I think it’s important to remember that all of our existing regulations did not disappear just when artificial intelligence became part of the process,” Huddleston said. “And there are, in many cases, already laws on the books to deal with some of those concerns.”

As for desired outcomes, the Brennan Center for Justice’s Norden recommended that Congress invest in “more secure, accessible, and privacy-protecting CAPTCHAs that government bodies can implement where appropriate,” among other measures. Hewitt hopes to see regulation that requires AI tools to be tested before and after they are deployed, similar to an environmental impact assessment. And another attendee, the National Coalition on Black Civic Participation’s President and CEO Melanie Campbell, called for a government-sponsored study on AI’s potential impact on the electoral process ahead of the 2024 elections as well as a task force for real-time monitoring of AI’s impact on voter suppression, intimidation and voter safety.

Watermarking AI-generated content

Sen. Amy Klobuchar (D-MN), who both attended the forum and spoke to reporters afterwards, has helped lead the charge on passing legislation around watermarking AI-generated content. In May, as Chairwoman of the Senate Committee on Rules and Administration with oversight over federal elections, she introduced the REAL Political Ads Act alongside Senators Cory Booker (D-NJ) and Michael Bennet (D-CO). The proposed legislation would require a disclaimer on all political ads using AI-generated images or video. Then, in September, Sen. Klobuchar teamed up with Senators Josh Hawley (R-MO), Chris Coons (D-DE), and Susan Collins (R-ME) to introduce a bill called the Protect Elections from Deceptive AI Act – a total ban on the use of AI to generate “materially deceptive content falsely depicting federal candidates in political ads.” That legislation, however, would need additional sponsors before it might get marked up in committee, Sen. Klobuchar told the Washington Examiner after the forum. Sen. Schumer added that he will attempt to get legislation out that prioritizes the effects of AI on elections in the coming months.

On the same day as the ‘Elections and Democracy’ forum, Meta announced its voluntary commitment requiring disclosures for all political ads running on its platforms that were created or altered using artificial intelligence. The day prior, Microsoft announced a series of commitments to protect politicians against deepfakes and amplify “authoritative” election information in its Bing search results. Microsoft also endorsed the Protect Elections from Deceptive AI Act. Both Meta and Microsoft representatives – Vice President of Public Policy for Trust & Safety Neil Potts and Director of Information Integrity Matt Masterson, respectively – were in attendance at the forum. (These types of ‘voluntary commitments’ are often criticized as being a way for tech companies to avoid regulation and evade accountability when things go awry.)

“There’s broad consensus that we need guardrails in place, and today we saw tech companies announce additional actions to address AI in elections,” Sen. Klobuchar said in a press release issued after the forum. “But as was discussed at the forum, we need laws in place and we can’t just rely on voluntary steps.”

Not everyone embraced watermarking, though.

During the forum, sitting in the same room as Adobe Senior Director of the Content Authenticity Initiative Andy Parsons, ADL Center for Technology & Society Vice President Yaël Eisenstat brought up recent reporting by the Australian news outlet Crikey that found Adobe had been selling fake AI images of the Israel-Gaza conflict. While Adobe technically did the ‘right thing’ by labeling the images as synthetic at the time of licensing, that approach doesn’t “stop all of the harms created once those images start getting amplified online,” she told Tech Policy Press in an interview. In the same vein, National Association of State Election Directors executive director Amy Cohen believes there must be a “human readable component” that requires no technical expertise or knowledge to verify an image. Without this key component, watermarking may do little to affect how AI-generated content containing false information spreads, “especially on a topic like elections where emotions, not logic, tend to govern behavior,” according to her written statement.

And because AI is so broad, what rises to the level of AI-altered content for a watermark mandate might be difficult to define. The CATO Institute’s Huddleston worries about ending up in a situation where something innocuous, like auto-generated captions in a political ad video, could trigger a mandated ‘watermark’ designation.

Forum Six

Privacy and Liability

Attendees:

- Arthur Evans Jr – American Psychological Association, CEO and Executive VP

- Chris Lewis – Public Knowledge, President and CEO

- Mackenzie Arnold – Legal Priorities Project, Head of Strategy and liability specialist

- Daniel Castro – Information Technology and Innovation Foundation, VP and Director of ITIF’s Center for Data Innovation

- Ganesh Sitaraman – Vanderbilt Law, New York Alumni Chancellor’s Chair in Law

- Tracy Pizzo Frey – Advisor, Common Sense Media

- Linda Lipsen – American Association for Justice, CEO

- Samir Jain – Center for Democracy and Technology, Vice President of Policy

- Mutale Nkonde – AI For the People, founding CEO

- Rashad Robinson – Color of Change, President

- Stuart Ingis – Venable LLP, Chairman

- Stuart Appelbaum – Retail, Wholesale and Department Store Union, President

- Bernard Kim – Match Group, CEO

- Zachary Lipton – Abridge, Chief Scientific Officer; Assistant Professor of Machine Learning at Carnegie Mellon

- Gary Shapiro – Consumer Technology Association, President and CEO

- Mark Surman – Mozilla Foundation, President and Executive Director

Issues discussed:

- Open source and proprietary AI models

- Data privacy legislation

- AI vs. a general privacy bill

- Children’s online safety

- Establishing AI risk tiers

- Section 230

- Standards and apportioning of liability

Forum six, which took place on the afternoon of Nov. 8, focused on ‘privacy and liability,’ including “the collection, use, and retention of data” and “when AI companies may be liable for harms caused by their technologies,” according to a statement from Sen. Schumer’s office.

“This used to be science fiction, but now it’s increasingly closer to reality,” said Sen. Schumer in his opening remarks about the risk for AI to intrude on users’ personal lives as the technology advances. He warned forum attendees that AI could be weaponized as a tool of emotional manipulation, and may ‘take the place of’ friends and significant human relationships. “So, designing and enforcing safeguards for privacy is critical for keeping trust in AI systems. We must know when and how to hold AI companies accountable when that trust is violated,” he explained.

The forum was divided into two parts, with the first hour centered around privacy and the second half focused on liability.

Privacy

Forum attendees seemed to largely agree that privacy rules must be part of AI legislation, even if they disagree on the details.

Public Knowledge President and CEO Chris Lewis used the forum to emphasize the importance of passing a comprehensive national privacy law rooted in strong civil rights protections and data minimization practices. Lewis hopes for the reintroduction of the American Data Privacy and Protection Act (ADPPA), a “compromise” bill that passed out of the House Energy and Commerce Committee last year with overwhelming bipartisan support. “Even though it’s not a bill that’s targeted at AI, AI systems depend so heavily on that sort of data that it will at least create the basic guardrails around how data that’s collected for AI is used, shared, and other practices,” Lewis told Tech Policy Press.

Recommendations from industry participants were mixed. Abridge Chief Scientific Officer Zachary Lipton called for more focused, application-specific AI policy, warning of the ‘curse of generality’ in his written statement. Match Group CEO Bernard Kim supports ‘reasonable legislation’ that incorporates industry standards for safety and privacy by design. And Mozilla Foundation President and Executive Director Mark Surman advised Congress not to get ‘fancy’ on consumer privacy legislation. In an interview, he suggested going after comprehensive consumer privacy regulation now, and if AI regulation comes out of these forums, privacy should be considered as part of the design of that legislation, too. “If they end up both coming to pass, you want to make sure that they’re compatible,” Surman said. “But the consensus is you need privacy rules as a part of AI rules given how central data is to what AI is.”

Samir Jain, vice president of policy at the Center for Democracy and Technology, echoed Surman’s thoughts on privacy. “I think we have crossed the threshold where most people agree privacy legislation is a necessity. And that if we’re going to do AI regulation correctly, privacy needs to be a piece of that,” he told Tech Policy Press. “Maybe AI legislation serves as the impetus for finally getting [a comprehensive federal privacy bill] done, assuming AI legislation actually moves,” he added.

Liability

Discussions around liability were largely over Section 230, with many attendees in the room arguing that the controversial law should not apply to generative AI. In her written statement, Legal Priorities Project Head of Strategy and liability specialist Mackenzie Arnold said that, “the most sensible reading of Section 230 suggests that generative AI is a content creator” that “creates novel and creative outputs rather than merely hosting existing information.” Ambiguity around generative AI and Section 230 will persist “absent Congressional intervention,” Arnold wrote with a link to a bill cosponsored by Senators Josh Hawley (R-MO) and Richard Blumenthal (D-CT) called the No Section 230 Immunity for AI Act.

The Information Technology and Innovation Foundation called for Congress to amend Section 230 in its written statement, partly over concerns that liability under the law would ‘chill innovation.’ ITIF Vice President and Director of the Center for Data Innovation Daniel Castro argues that this approach “would recognize that using generative AI in this context is not unlike a search engine in the way it produces content: both require user input and generate results based on third-party information from outside sources.”

While Public Knowledge agrees that Section 230 “does not provide intermediary liability protection for generative AI,” Lewis mostly discussed the importance of a digital regulator or agency over digital platforms that has the expertise to deal with very specific liability claims. “Depending on the case, there are existing regimes that you don’t need AI-specific policy for,” he said. Some examples he flagged were copyright law, which has long-standing jurisprudence that “should probably hold up fairly well,” and civil rights protections for racial justice issues, such as bias in facial recognition technologies. “As to why we need an expert regulator, it’s to continue to keep up with the pace of innovation,” Lewis added.

Standards of liability, such as strict liability versus negligence, and where to apply them across the technology stack were of great interest to the senators, according to CDT’s Jain.

“If you think of generative AI, for example, you might have one entity that’s creating the training data, and another entity that develops the model, and yet another entity that creates the application that rides on top of the model,” Jain explained. “How do we think about allocating liability across that stack? And who is liable?” Generally, Jain said, most agreed that whichever entity is best positioned to take appropriate or reasonable steps to avoid harm should be held liable.

Open source models

Questions around open source models, such as balancing cybersecurity risks with innovation and transparency, were touched on in both the privacy and liability conversations.

While the public debate has been divisive, the forum was not, according to the Mozilla Foundation’s Surman, who found this ‘surprising.’ He expected open source models to be positioned as a national security risk, and for the issue to be framed as a binary of open versus closed at the forum, as is often done by “some of the big cloud-based AI labs” leading the charge. “There are a lot of people who have used fear about future risks to go at open source in ways that we think are both unwarranted and unwise, and possibly self-interested,” Surman said.

CDT’s Jain pointed out that open source can actually enable innovation and foster competition, and while it presents risks, so too do proprietary models. “The real question is, what do responsible practices around open source look like? And how do we make sure that the release and use of those models is handled responsibly?” Jain stated.

On Oct. 31, the Mozilla Foundation released a Joint Statement on AI Safety and Openness, which calls for a ‘spectrum’ of approaches to mitigate AI harms over rushed regulation. “The idea that tight and proprietary control of foundational AI models is the only path to protecting us from society-scale harm is naive at best, dangerous at worst,” the statement read. Several attendees from previous AI ‘Insight Forums’ signed onto Mozilla’s letter, including the Institute for Advanced Study’s Alondra Nelson, Princeton’s Arvind Narayanan, and UC Berkeley’s Deborah Raji. Employees from Meta, the Electronic Frontier Foundation, and Hugging Face were also among the more than 1,700 other signatories.

“Open source actually adds transparency” because it “excels at creating robust, secure systems when you have all these people looking for what’s going to go wrong or try to fix what went wrong,” Surman said. “It’s a bit of a red herring. To say there’s a future security risk of AI? True. That those future risks are attached to open source? Red herring.”

Forums Three and Four

On Nov. 1, Senator Schumer co-hosted the Senate’s third and fourth AI ‘Insight Forums,’ focused on AI and the workforce as well as “high impact” AI, respectively. Source.

Forum Three

Focus: Workforce

The first forum of the day began at 10:30 am and focused on the intersection of AI and the workforce. The fifteen attendees and lawmakers gathered on Nov. 1 to “explore how AI will alter the way that Americans work, including the risks and opportunities” across industries such as medicine, manufacturing, energy, and more, according to a press release from Sen. Schumer’s office.

Forum Three Attendees:

- Allyson Knox – Microsoft, Senior Director of Education Policy

- Claude Cummings Jr. – Communications Workers of America (CWA), President

- Anton Korinek – University of Virginia, Professor

- Arnab Chakraborty – Accenture, Senior Managing Director of Data and AI and Global Responsible AI Lead

- Austin Keyser – International Brotherhood of Electrical Workers (IBEW), Assistant to the International President for Government Affairs

- Bonnie Castillo, RN – National Nurses United, Executive Director

- Chris Hyams – CEO and Board Director, Indeed

- Daron Acemoglu – Massachusetts Institute of Technology, Professor

- José-Marie Griffiths – Dakota State University, President

- Michael Fraccaro – Mastercard, Chief People Officer

- Michael R. Strain – American Enterprise Institute, Director of Economic Policy Studies

- Patrick Gaspard – Center for American Progress (CAP), President and CEO

- Paul Schwalb – UNITE HERE, Executive Secretary-Treasurer of DC Local 25

- Rachel Lyons – United Food and Commercial Workers International Union (UFCW), Legislative Director

- Robert D. Atkinson – Information Technology and Innovation Foundation (ITIF), Founder and President

Labor unions were well represented in the forum. Of the 15 total attendees, leaders from five different unions attended, including the Communications Workers of America; the International Brotherhood of Electrical Workers; National Nurses United; UNITE HERE, which represents hospitality workers; and the United Food and Commercial Workers union.

The executive director for National Nurses United, Bonnie Castillo, explained in a thread on X (formerly Twitter) how AI technologies have been used for years in healthcare settings to try to replace RN judgment, with algorithms prone to racial and ethnic bias as well as errors. Some of these include clinical decision support systems embedded in electronic health records, staffing platforms that support gig nursing, and, increasingly, generative AI systems, according to Castillo.

“It is clear to us that hospital employers use this tech in attempts to outsource, devalue, deskill, and automate our work. Doing so increases their profit margins at the expense of patient care and safety,” wrote Castillo, who is herself a registered nurse. She called on Congress to draft legislation that places the burden on employers to demonstrate technologies are safe and equitable, ensures RNs have the legal right to bargain over AI in the workplace, protects patient privacy, and more, all while grounded in precautionary principles.

Without workers’ voices at the policy-making table in both the development and deployment of these new technologies, outcomes include ineffective implementations, increased workloads, and potential health and safety issues when things go awry, according to UNITE HERE’s deputy director of research Ben Begleiter. “It’s incumbent on tech developers and on Congress to make it so that the worker’s voice is included,” he said, “and at the current moment, the best – and perhaps the only way – to get workers’ voices included in those is through union representation.”

CWA president Claude Cummings Jr. echoed the need for collective bargaining and union contracts as an essential safeguard for workers to have a voice in AI implementation within their jobs. In an official emailed statement, Cummings Jr. called on the government to prohibit abusive surveillance in the workplace, protect worker privacy, and ensure that decisions made by AI systems can be appealed to a human.

Others represented at the third Forum included four tech industry leaders, three academics, and three think tank representatives.

TechNet, a bipartisan network of technology CEOs and senior executives that positions itself as “the voice of the innovation economy,” had three of its member organizations in attendance: Accenture, Indeed, and Mastercard. In a thread on X, the trade group emphasized AI’s potential for economic growth and productivity enhancement. TechNet noted that while 63 percent of US jobs will allegedly be complemented by AI, 30 percent of the workforce will be completely unaffected, according to data from Goldman Sachs, one of the world’s largest banking firms.

TechNet previously commented on the AI Insight Forums in a press release issued last week by president and CEO Linda Moore that touted AI’s potential while warning that the US is “in a race to win the next era of innovation and China and other competitors are striving to win the AI battle.”

Forum Four

Focus: High Impact AI

The second forum on Nov. 1 kicked off at 3 pm. Its focus was on “high impact” issues, such as “AI in the financial sector and health industry, and how AI developers and deployers can best mitigate potential harms,” according to the press statement.

Forum Four Attendees:

- Arvind Narayanan – Princeton University, Professor

- Cathy O’Neil – O’Neil Risk Consulting & Algorithmic Auditing (ORCAA), Founder and CEO

- Dave Girouard – Upstart, Founder and CEO

- Dominique Harrison – Joint Center for Political and Economic Studies, Director of Technology Policy

- Hoan Ton-That – Clearview AI, Co-founder and CEO

- Janelle Jones – Service Employees International Union (SEIU), Chief Economist and Policy Director

- Jason Oxman – Information Technology Industry Council (ITI), President and CEO

- Julia Stoyanovich – Center for Responsible AI at the NYU Tandon School of Engineering, Co-founder and Director

- Margaret Mitchell – Hugging Face, Researcher and Chief Ethics Scientist

- Lisa Rice – National Fair Housing Alliance (NFHA), President and CEO

- Prem Natarajan – Capital One, Chief Scientist and Head of Enterprise Data and AI

- Reggie Townsend – SAS Institute, Vice President of Data Ethics

- Seth Hain – Epic, Senior Vice President of R&D

- Surya Mattu – Digital Witness Lab at the Princeton Center for Information Technology Policy, Co-founder and Lead

- Tulsee Doshi – Google, Director and Head of Product for Responsible AI

- Yvette Badu-Nimako – National Urban League, Vice President of Policy

The topics discussed:

The forum was split into two parts, with the first on the risks of AI and the second on opportunities that AI technologies present. Topics discussed include:

- Civil rights and AI discrimination laws

- Facial recognition technology

- Accuracy of AI tools

- Identifying risks and risk frameworks

- Auditing AI systems

- Critical infrastructure

- AI used in hiring and employment

- Environmental risks

- Housing and financing

- AI in healthcare and medicine

There wasn’t much discussion about Pres. Biden’s AI Executive Order, issued just two days prior to the forum. “Honestly, I was worried that [the EO] might impact the discussion; it did not at all,” said Julia Stoyanovich, professor and director of the Center for Responsible AI at NYU. “The Executive Order, in most respects, is still at a very high level, and then people were saying things that were more concrete at the forum.”

While the panel was somewhat balanced, with seven attendees from the industry sector, three from academia, two from civil society, and two consultants, Stoyanovich hopes that when it comes time to draw up AI legislation, independent voices will be well represented. “I was unhappy that the first forum wasn’t balanced and, of course, the first forum was perhaps the most visible,” she said, “but I’m glad that subsequent forums included more diverse voices.” (Around sixty percent of Forum one attendees were public figures from high-profile tech companies, such as OpenAI’s Sam Altman and Meta’s Mark Zuckerberg.)

One eyebrow-raising guest at the fourth Forum, however, was the CEO and co-founder of the controversial facial recognition firm Clearview AI, Hoan Ton-That. The CEO reportedly cited the ways Clearview AI has partnered with Ukraine to identify Russian soldiers and how its software has been used to track down child predators, among other positive use cases. A labor leader, however, countered that facial recognition tools have also been used to surveil workers and unmask protestors. Tech Policy Press was told by several people in the room that Ton-That got a notable amount of airtime. This was in part because of the forum’s format, which allowed people to speak more freely than at a traditional hearing, and because facial recognition was a big topic of the fourth forum.

Allotting only two hours for sixteen attendees made some feel rushed and unable to address the complexities of the issues. For example, many of the representatives, particularly from financial institutions, focused on the benefits and opportunities AI has given the banking sector. Yet research and reporting have shown that the use of AI in banking can lead to severe discrimination, specifically when algorithms are determining risk factors of districts and borrowers that may reinforce existing racial and gender disparities.

While it was great that so much time was spent on the potential risks posed by AI, the technology has challenged and continues to challenge communities of color as it relates to economic mobility and wealth generation, according to Dominique Harrison, a racial equity consultant and co-chair of the forthcoming AI Equity Lab at the Brookings Institution. “These algorithms and models are not as fair as they could and should be. And there’s not a lot of guardrails, transparency, accountability mechanisms, to ensure that banks and financial institutions are constantly trying to find or use algorithms in ways that are more fair for our communities,” Harrison added. “There just wasn’t enough time for me to raise my hand again, to pontificate on that issue.”

When asked what additional topics she wished were discussed, researcher and chief ethics scientist at Hugging Face Margaret Mitchell wrote, “EVERYTHING,” in an emailed statement to Tech Policy Press. “I would have loved to spend more time on data, including issues with consent and what can be done to measure data more scientifically, rather than using whatever is available and fixing issues post-hoc,” she said.

Surya Mattu, co-founder and lead at the Digital Witness Lab at the Princeton Center for Information Technology Policy, similarly wanted the conversation to focus more on consent. “[AI] is very tightly coupled to the conversation around privacy, and we don’t have a comprehensive privacy law,” Mattu said. While he acknowledged that this is difficult to do, he thinks it’s important to have clarity on consent disclosures that must be provided to individuals. This would result in “meaningful reporting from the outside to find ways in which companies are collecting data for training models or how people are being subjugated to AI decision making in a way that they might not have realized otherwise,” he argued.

Arvind Narayanan, professor of computer science at Princeton University, used the forum to drive home that certain areas of AI, such as automated video analysis used to screen job candidates, should be approached with a level of skepticism. “Some types of AI, as far as we can tell, don’t even work,” he told Tech Policy Press, which is a different issue from discrimination. “It’s not about do they work for specific populations, it’s about do they work for anyone?” posed Narayanan. Mandated transparency into the efficacy of these algorithms may help weed out “snake oil” products, Narayanan wrote in his statement to Congress, adding that policy makers should “consider minimum performance requirements for particularly consequential decisions.”

While awaiting AI regulation, Stoyanovich hopes that Congress will fund more academic research on responsible AI, specifically education and upskilling efforts as well as training, and invest in capacity building within government. And as for what will come out of the forums, Mitchell hopes for regulation that protects the rights of individuals, not just corporations. “Corporations are good at protecting themselves. It’s the people who need help, and who the government is meant to protect,” she wrote.

Press Coverage:

- FedScoop: Schumer to host AI workforce forum with labor unions, big banks and tech scholars

- Biometric Update: AI Insight Forum draws flak with Clearview invitation

- The Hill: AI forum on elections to be held in Senate next week, Schumer says

- The Darden Report (UVA): Addressing the US Senate, UVA Darden Professor Urges Scenario Planning for the Workforce Implications of AI

Forum Two

Focus: Innovation

The US Senate’s second-ever AI ‘Insight Forum’ brought together some of the nation’s leading voices in labor, academia, tech, and civil rights to discuss the theme of ‘innovation’ on October 24, 2023. Source.

On Tuesday, Oct. 24, 2023, Senate Majority Leader Charles Schumer (D-NY) hosted the second installment of his bipartisan ‘AI Insight Forum’ in the Russell Senate Office Building. The innovation-themed session included prominent venture capitalists from Andreessen Horowitz and Kleiner Perkins, as well as leaders from artificial intelligence companies such as SeedAI and Cohere, as first reported by Axios. Academics, labor leaders, and representatives from civil society groups including the NAACP and the Center for Democracy and Technology were also in attendance.

The second forum lasted just three hours, half the time allotted for the first. And it was less star-studded than its predecessor, which included the likes of Mark Zuckerberg and Elon Musk. However, many prominent voices advancing ideas around AI governance, industry standards, and more were invited to Tuesday’s forum, including Max Tegmark, the MIT professor and Future of Life Institute president who helped author the ‘pause AI’ letter earlier this year; Alondra Nelson, a professor at the Institute for Advanced Study as well as a former deputy assistant to President Joe Biden and acting director of the White House Office of Science and Technology Policy (OSTP), who led the creation of the White House’s “Blueprint for an AI Bill of Rights”; and Suresh Venkatasubramanian, a Brown University professor who also helped develop the Blueprint while serving as a White House advisor, among others.

On social media, observers raised concerns about perspectives excluded from the forum. Justine Bateman, a writer, director, producer, and member of the Directors Guild of America (DGA), the WGA, and SAG-AFTRA, pointed out in a post on X (formerly Twitter) that only one of the 43 total witnesses called to Congress so far – Writers Guild of America president Meredith Stiehm – has been an artist, despite the existential threats currently facing Hollywood, writers, and the visual arts with regard to AI.

While more than two-thirds of the Senate attended last month’s closed-door hearing, which was criticized for prohibiting the press and “muzzling” senators, the second forum was met with much less fanfare. Some senators seen shuffling into the hearing, according to a Nexstar reporter present on the Hill, included Senators Elizabeth Warren (D-MA), J.D. Vance (R-OH), and John Hickenlooper (D-CO).

The second forum’s announcement coincided with the introduction of a new bill, called the Artificial Intelligence Advancement Act of 2023 (S. 3050). The proposed legislation is sponsored by the AI Forums’ “Gang of Four” – Sen. Martin Heinrich (D-NM), Sen. Mike Rounds (R-SD), Sen. Charles Schumer (D-NY), and Sen. Todd Young (R-IN) – and would establish a bug bounty program as well as require reports and analyses on data sharing and coordination, artificial intelligence regulation in the financial sector, and AI-enabled military applications.

On the same day as the hearing, Senators Brian Schatz (D-HI) and John Kennedy (R-LA) introduced what they’re calling the “Schatz-Kennedy AI Labeling Act” (S. 2691), which would provide more transparency for users around AI-generated content. “[This Act] puts the onus where it belongs – on companies, not consumers. Because people shouldn’t have to double and triple check, or parse through thick lines of code, to find out whether something was made by AI,” Sen. Schatz said in a press release, “It should be right there, in the open, clearly marked with a label.”

Forum Two Attendees:

- Marc Andreessen – Andreessen Horowitz, Co-founder & General Partner

- Ylli Bajraktari – SCSP, President & CEO

- Amanda Ballantyne – AFL-CIO Technology Institute, Director

- Manish Bhatia – Micron, Executive Vice President

- Stella Biderman – EleutherAI, Executive Director

- Austin Carson – SeedAI, Founder & President

- Steve Case – Revolution, Chairman & CEO

- Patrick Collison – Stripe, Co-founder & CEO

- Tyler Cowen – George Mason University, Professor

- John Doerr – Kleiner Perkins, Chairman

- Jodi Forlizzi – Carnegie Mellon University, Professor

- Aidan Gomez – Cohere, Co-founder & CEO

- Derrick Johnson – NAACP, President & CEO

- Sean McClain – AbSci, Founder & CEO

- Alondra Nelson – Institute for Advanced Study, Professor

- Kofi Nyarko – Morgan State University, Professor

- Alexandra Reeve Givens – Center for Democracy and Technology, President & CEO

- Rafael Reif – MIT, Former President

- Evan Smith – Altana Technologies, Co-founder & CEO

- Max Tegmark – Future of Life Institute, President

- Suresh Venkatasubramanian – Brown University, Professor

The topics discussed:

- ‘Transformational’ innovation that pushes the boundaries of medicine, energy, and science

- ‘Sustainable’ innovation that drives advancements in security, accountability, and transparency in AI

- Government research and development funding (R&D) that incentivizes equitable and responsible AI innovation

- Open source AI models: Balancing national security concerns while recognizing this existing market could be an opportunity for American innovation

- Making government datasets available to researchers

- Minimizing harms, such as job loss, racial and gender biases, and economic displacement

‘Encouraging,’ ‘thoughtful,’ ‘civil,’ and ‘optimistic’ were words used to describe the tone of the second AI ‘insight forum,’ according to attendees Tech Policy Press spoke with shortly after the hearing.

Sen. Schumer set the stage early on Tuesday by reminding attendees that during the first forum, every single person raised their hand when asked, “Does the government need to play a role in regulating AI?” This, Brown University Professor Suresh Venkatasubramanian said, established a baseline so that the regulatory question really became ‘what’ and ‘how.’ “Many, even from the business side of the group, talked about ‘rules of the road’ that we need so that we can all be comfortable and innovate without fear of harms. I think that makes sense,” said Venkatasubramanian. “And then the rest of it is a question of degree.”

Sen. Schumer also framed the innovation-themed forum as striking a careful balance between ‘transformational’ and ‘sustainable’ innovation. However, attendee Alondra Nelson told Tech Policy Press that she’s not entirely convinced by this sort of division. “We need all the innovation to be transformational,” Nelson argued, because “the status quo is not sustainable.” “What are the kinds of creative levers that we can use and think about now in government? What are the types of transformational innovation in the space of business that the government can help foster or catalyze? How do we use innovation? And how can government help drive investments and incentive structures for responsible, equitable AI that really drives public goods that helps us to address climate change, that helps us to address healthcare inequality?” Nelson asked. “I think there’s a lot more granularity that we probably are going to need to add around what we mean by transformational innovation.”

The greatest point of tension seemed to be around the level of AI regulation different stakeholders see as reasonable. This comes as little surprise, given that billionaire attendee Marc Andreessen published a “techno-optimist manifesto” the week prior that appeared to equate AI regulation with murder. And although tension around the right path forward on regulation was “very visible in the room,” as Center for Democracy and Technology President Alexandra Reeve Givens observed, it wasn’t “in a manner that felt completely insurmountable.”

For industry representatives, much of the focus was on research and development funding as the US government looks for ways to incentivize AI innovation. This, Reeve Givens said, seemed to resonate with Schumer as he thinks about this series of forums as AI’s version of the CHIPS and Science Act. “It’s clear [Congress] is really paying attention,” Reeve Givens said, “I think this is an important exercise for Congress to do. But the important part will come when they start putting pen to paper, right?”

Sen. Schumer indicated during the hearing that future forums will be focused on AI harms and solutions, although no timeline has been publicly announced.

Press Coverage:

- FedScoop: Sen. Chuck Schumer’s second AI Insight Forum covers increased R&D funding, immigration challenges and safeguards

- TIME: Federal AI Regulation Draws Nearer as Schumer Hosts Second Insight Forum

- MarketWatch: One tech executive’s inside take on the U.S. Senate’s AI forum

- Engadget: The US Senate and Silicon Valley reconvene for a second AI Insight Forum

- Axios Pro: Details on Schumer’s next AI forum

- Bloomberg: Marc Andreessen and John Doerr Join Closed Senate Forum on AI

Other Relevant materials:

- Sen. Schumer’s opening remarks and closing remarks

Forum One

On September 13, 2023, US Senate Majority Leader Charles Schumer (D-NY) brought together 22 of tech’s most influential billionaires, civil society group leaders, and experts for the first of nine promised “AI Insight Forums” he plans to host on Capitol Hill. More than sixty-five senators, or around two-thirds of the Senate, joined the closed-door meeting – although no questions were allowed – in what Sen. Schumer has described as an effort to “supercharge” the Committee process and build consensus on artificial intelligence legislation.

High-profile tech industry leaders in attendance included Meta’s Mark Zuckerberg, OpenAI’s Sam Altman, and X/Tesla’s Elon Musk, among others, who left the Senate’s Kennedy Caucus Room reportedly feeling generally positive about the meeting but skeptical as to how ready Congress is to actually regulate AI.

The private format, however, was widely criticized, and not just by civil society groups concerned about corporate influence. Senator Elizabeth Warren (D-MA), who was present at the Forum, said that “closed-door [sessions] for tech giants” is a “terrible precedent” for developing legislation and further questioned why even the press was shut out of the meeting. Some members of the press wondered how accountability can be assured by meetings held behind closed doors, while others characterized the forum as “scripted political theater” that runs the risk of exacerbating mistrust in the American political system and its historical inability to rein in Silicon Valley.

The first AI Insight Forum came amid a busy week for the Senate with regard to governing artificial intelligence. Just the day prior, on Tuesday, the Senate Judiciary Subcommittee on Privacy, Technology, and the Law, led by Chairman Sen. Richard Blumenthal (D-CT) and Ranking Member Sen. Josh Hawley (R-MO), met with industry representatives and experts to discuss crafting legislation that balances basic safeguards with maximum benefits. (A full transcript of the hearing can be found on Tech Policy Press.)

That same day, the Biden-Harris administration announced it secured additional voluntary commitments from eight leading artificial intelligence companies to “help drive safe, secure, and trustworthy development of AI technology,” joining the seven other companies who signed on in July. These non-binding measures range from notifying users when content is generated by AI to thoroughly testing AI systems before their release. Three of the newest signatories – IBM, NVIDIA, and Palantir – were represented at Schumer’s round-table.

Other Senate hearings last week were hosted by the Commerce, Science, and Transportation Committee as well as the Homeland Security and Governmental Affairs Committee to discuss transparency in artificial intelligence and AI governance regarding acquisition and procurement, respectively.

Little is publicly known about the first-of-its-kind AI Insight Forum, other than from official statements, attendees’ quips to the press, and leaks from those present in the room. A handful of attendees publicly reflected on the meeting – like Maya Wiley, CEO and President of the Leadership Conference on Civil and Human Rights, on CNN This Morning as well as the Center for Humane Technology’s Tristan Harris and Aza Raskin on the Politico Tech podcast – but many questions remain beyond the panelists’ own interpretation of events.

What Senators were saying:

The topics discussed:

- Elections and the use of deepfakes

- High-risk applications

- Impact of AI on the workforce

- National security

- Privacy, such as AI-driven geolocation tracking and facial recognition concerns

Press reaction:

- Wired: Inside the Senate’s Private AI Meeting With Tech’s Billionaire Elites

- The New York Times: In Show of Force, Silicon Valley Titans Pledge ‘Getting This Right’ With A.I.

- The Washington Post: Tech leaders including Musk, Zuckerberg call for government action on AI

- The Washington Post: 3 takeaways from senators’ private huddle with tech execs on AI

- The Verge: Tech leaders want ‘balanced’ AI regulation in private Senate meeting

- FedScoop: Sen. Schumer’s first AI insight forum focuses on 2024 election, federal regulators

- The German Marshall Fund: Taking Stock of the First AI Insight Forum

What to take away from Forum One:

- There was reportedly broad agreement in the room on both the unprecedented societal risks posed by AI and the need to take urgent action to mitigate them. (Sen. Schumer noted after the forum that every single attendee’s hand went up when asked if the government should regulate AI.) How to achieve this, on the other hand, was the biggest point of tension. There were calls for Congress to create a new AI agency, while others want to leverage existing authorities, like the National Institute of Standards and Technology (NIST), to regulate the emerging technology.

- Repeated emphasis was placed on the need to balance regulation and building “safeguards” with innovation that can help advance the American economy and its geopolitical standing.

- No voluntary commitments for AI tech companies were proposed or promised. The meeting was characterized more as a “framing” conversation regarding AI problems to inform future policy proposals. It seems widely agreed upon that Congress is not yet ready to draft detailed legislation.

- Several senators expressed concern that the forum will lead nowhere. Others, like Sen. Cynthia Lummis (R-WY), said the crash course was more informative than they had expected it to be. “I kind of expected it to be a nothingburger and I learned a lot. I thought it was extremely helpful, so I’m really glad I went,” Sen. Lummis told the press after the forum.

Other relevant materials:

- A transcript of Sen. Schumer’s June 21, 2023 speech announcing the forums at the Center for Strategic and International Studies (CSIS) in Washington, DC.

- A transcript of Sen. Schumer’s June 22, 2023 floor remarks on launching the SAFE Innovation Framework and AI Insight Forums.

- The document describing Sen. Schumer’s “SAFE Innovation Framework.”

Authors