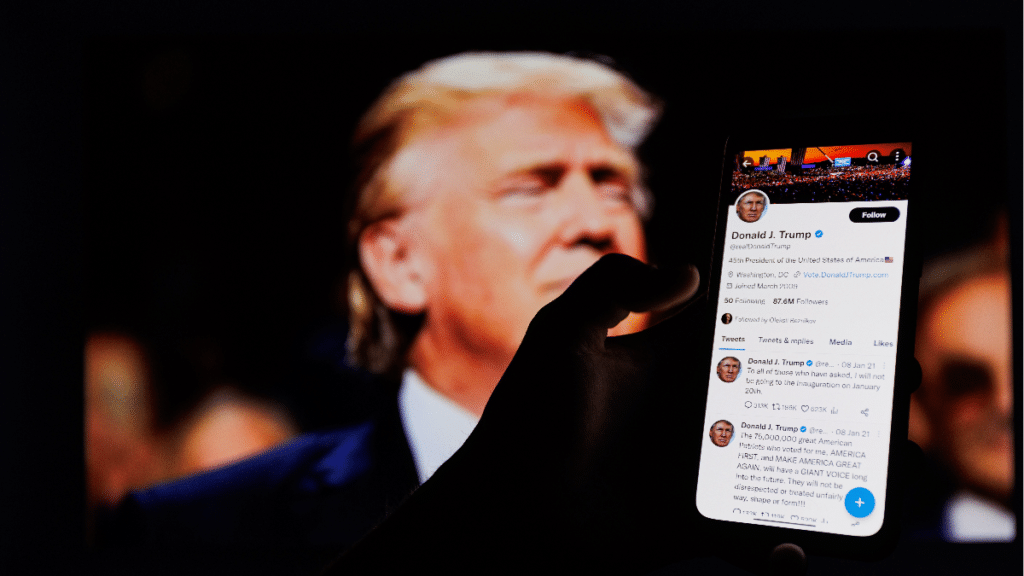

Trump’s Reinstatement on Social Media Platforms and Coded Forms of Incitement

Anika Collier Navaroli / Apr 11, 2023

Anika Collier Navaroli is currently a Practitioner Fellow at Stanford University. She previously worked in senior content policy positions inside Trust & Safety departments at Twitter and Twitch and within research think tanks and advocacy non-profit organizations.

This piece is cross-published at Just Security.

Over the past few weeks, major social media companies including Facebook, Twitter and YouTube reinstated former President Donald Trump’s social media accounts and privileges. Now, in the aftermath of his indictment in Manhattan’s Criminal Court and likely future indictment elsewhere, their decisions will be put to the test.

After his day in court, Trump was back at Mar-a-Lago, where he addressed the media and streamed his remarks on Facebook Live. He used his platform to lay out a list of grievances against his perceived political opponents, including doubling down on unfounded conspiracy theories about the 2020 election and framing his legal troubles as “political persecution” designed to “interfere with the upcoming 2024 election.”

As a whistleblower from inside one of those major social media companies, I can say with conviction that the path we are on is dangerous. I know firsthand. As I testified to Congress, while an employee at Twitter I spent months warning the company's leadership that the coded language Trump and his followers were using was going to lead to violence on Jan. 6, 2021. I am also the person who argued to Twitter executives that they would have more blood on their hands if they did not follow my team’s recommendation to permanently suspend Trump’s account on Jan. 8, 2021.

Just weeks after that, former Twitter CEO Jack Dorsey told Congress that Twitter played a role in the violence of Jan. 6. However, the exact role that social media played in the violent attack on the Capitol has never been fully disclosed, though it was investigated by the House Select Committee.

The committee heard days of detailed accounts from me, another brave former Twitter employee, and employees from other social media companies about the failings we saw with our own eyes. But the committee's almost 900-page final report, released in early January 2023, did not present the findings from the team tasked with looking into social media.

An unpublished draft of this team’s findings was leaked in late January. It painted a damning picture of the culpability of social media companies in the Capitol attack. Chief among its key findings was that “social media platforms delayed response to the rise of far-right extremism—and President Trump’s incitement of his supporters—helped to facilitate the attack on January 6th.”

But the report didn’t stop there. It went on to detail critical failings within specific social media companies. It said, “key decisions at Twitter were bungled by incompetence and poor judgement,” and “Twitter failed to take actions that could have prevented the spread of incitement to violence after the election.” By the time these findings were shared, however, the former president’s account had been reinstated at Twitter by its new owner, Elon Musk, following a Twitter poll.

According to the committee’s social media team’s findings, Twitter was not alone in sharing responsibility for allowing violence to be inspired on its platform in the runup to Jan. 6. Rather, the committee’s investigators found: “Facebook did not fail to grapple with election delegitimization after the election so much as it did not even try.” The investigators also noted that Facebook was due to review the former President’s suspension. The draft report clearly states, “President Trump could soon return to social media—but the risk of violence has not abated.”

Yet, within days of the committee’s draft social media report publicly leaking, Meta announced that it would reinstate the former President’s accounts. Nick Clegg, the company’s President of Global Affairs, boldly proclaimed that after assessing the serious risk to public safety and the current security environment, “our determination is that the risk has sufficiently receded.” He then hedged, “Mr. Trump is subject to our Community Standards."

The January 6th committee’s draft social media report also singled out YouTube in its key findings. It detailed the company’s “failure to take significant proactive steps against content related to election disinformation or Stop the Steal.” It also concluded that “YouTube’s policies relevant to election integrity were inadequate to the moment.”

Last month YouTube also decided to reinstate Donald Trump’s posting privileges. YouTube's vice president of public policy, Leslie Miller, said the platform’s determination was made after it “carefully evaluated the continued risk of real-world violence” and “the importance of preserving the opportunity for voters to hear equally from major national candidates in the run up to an election.” Like Meta, YouTube also promised that the former president’s account would still be subject to company content moderation policies.

How does this happen? How do social media companies come to the exact opposite conclusion of a yearslong congressional investigation?

It’s like January 6th never happened. It’s like we haven’t learned our lessons. Or maybe we just want to forget.

But I haven’t forgotten. In February, I was a witness at a congressional hearing that highlighted the extreme political polarization that our country is currently undergoing.

During the hearing, representatives called me both an “American hero” and a “sinister overlord.” I was told by members of the United States Congress that I should be celebrated for speaking the truth, and that my arrest for unspecified crimes was imminent. Throughout the hearing, people on the internet posted images of nooses directed toward me.

Since then, members of Congress who swore oaths to uphold the Constitution have continued their veiled calls for an American civil war on Twitter. As Donald Trump faced his indictment in New York City, he posted on Truth Social with language that directly mirrored the dog whistles he used in the days leading up to January 6th, 2021, and he gathered his followers in Waco, where he glamorized the Capitol attack.

These repetitions of history did not go unnoticed. During Trump’s court appearance this week, the prosecutor raised concerns over the former President’s threatening statements and social media posts, such as Trump’s warning of “potential death and destruction” that he said would follow his indictment. While the judge did not impose a gag order, he noted his serious concerns about this activity, requesting the defense counsel to tell their client to “please refrain from making comments or engaging in conduct that has the potential to incite violence, create civil unrest, or jeopardize the safety or well-being of any individuals.”

While there thankfully was no immediate political violence in the aftermath of Trump’s arraignment, the threat of violence is nowhere near over.

Trump’s New York indictment, ongoing criminal investigations at both the state and federal level, and his political campaign will be a pressure test of companies’ decisions to reinstate the former president. While these companies have promised that the former president will now be subject to their rules, the truth is, we’ve heard this promise before. As I testified, companies previously bent and broke their own rules behind closed doors in order to protect Trump’s dangerous speech. After Trump’s remarks on social media this week, which reportedly led to an increase in death threats against the judge and prosecutor, what indication do we have that this time will be different?

Even if platforms do decide to enforce their rules, the reality remains that these baseline policies are insufficient. As I testified to Congress, in 2020 my team at Twitter advocated for the creation of a new, nuanced policy that would prohibit coded language, like dog whistles, that could incite violence. Although we saw how Trump’s base was interpreting his statements, it was not until the Capitol had been attacked on Jan. 6, 2021 that we were allowed to implement the policy.

Both the other Twitter whistleblower and I testified that we left the company in part after this policy was eliminated and we realized that the rolled back enforcement would inevitably lead to more political violence. The riots on Jan. 8, 2023 in Brazil’s capital showed us that companies have still not created policies that address nuanced or coded language, and that world leaders and their followers can still employ anti-democratic campaigns that incite violence. It has become an off-the-shelf playbook.

The normalization of hate, dehumanization and harmful misinformation within political discourse on social media has put us on a cataclysmic course. Politicians skirting the lines of content moderation policies under the guise of open communication with constituents has fueled lawless actions. And companies have not only failed to update their policies to address these gaps; many have also scaled back or wholly removed the teams that were responsible for moderation.

As social media companies now reevaluate whether to stay the course, I encourage company leaders to learn from our not-too-distant history. Allowing former President Donald Trump to retake his algorithmically amplified megaphone on the largest social media platforms poses a threat to our democracy. Not only does it lead us down the exact path to violence we have already walked, it also signals to would-be authoritarians all over the world that there is safe harbor for dangerous speech at American technology companies.

I challenge my former colleagues and peers at other platforms to ask themselves: Do you really want to bear responsibility when violence happens again?
