Transcript: House Hearing on Legislative Solutions to Protect Children and Teens Online

Justin Hendrix / Dec 7, 2025

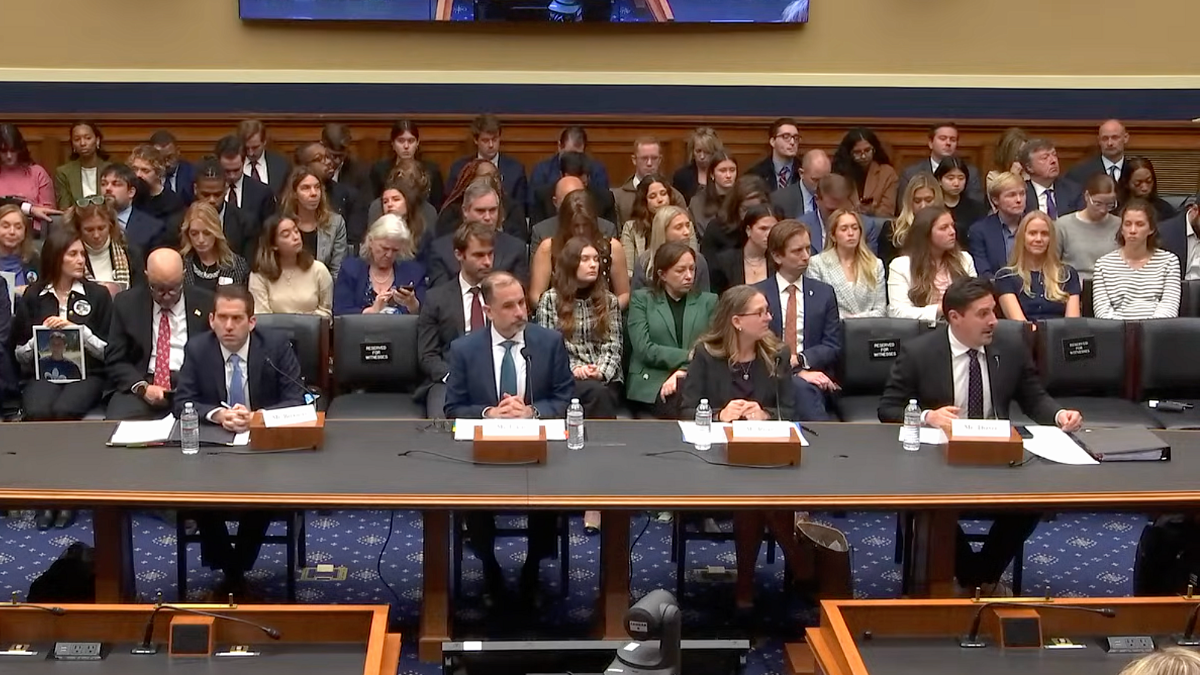

The Subcommittee on Commerce, Manufacturing, and Trade of the Committee on Energy and Commerce held a hearing on Tuesday, December 2, 2025, entitled "Legislative Solutions to Protect Children and Teens Online."

On Tuesday, December 2, 2025, the United States House Subcommittee on Commerce, Manufacturing, and Trade of the Committee on Energy and Commerce held a hearing entitled "Legislative Solutions to Protect Children and Teens Online." Witnesses included:

- Marc Berkman, Chief Executive Officer, Organization for Social Media Safety (written testimony)

- Joel Thayer, President, Digital Progress Institute (written testimony)

- Paul Lekas, Executive Vice President, Software & Information Industry Association (written testimony)

- Kate Ruane, Director of the Free Expression Project, Center for Democracy & Technology (written testimony)

What follows is a lightly edited transcript. Refer to the video of the hearing when quoting to check for accuracy.

Rep. Gus Bilirakis (R-FL):

We will come to order. The chairman recognizes himself for five minutes for an opening statement. Good morning and welcome to today's hearing to discuss legislative solutions to protect children online. Our children are facing an online epidemic. This issue is personal. We have parents on both sides of the aisle and we all have constituents who have been affected. That's why we are here today. We're examining almost 20 bills today, which together form a comprehensive strategy to protect kids online. Our approach is straightforward: protect kids, empower parents, and future-proof our legislation as new risks and technologies emerge. These bills are not standalone solutions. They complement and reinforce one another to create the safest possible environment for our children. There is no one-size-fits-all bill to protect kids online, and our plan reflects that. Parents must be empowered to safeguard their children online. Just as a parent can observe their children's activities and social behaviors at home and at school functions, so should they be able to check on their kids' activities online.

Our bills ensure parents have the tools and resources to keep their children safe. In the modern world, a child's life in the 21st century is much more complex than in generations past, and parents need the tools to adapt. Our bills are mindful of the Constitution's protections for free speech. We've seen it in the states. Laws with good intentions have been struck down for violating the First Amendment. We are learning from those experiences, because a law that gets struck down in court does not protect a child, and the status quo is unacceptable as far as I'm concerned. All our bills employ this strategy, including the Kids Online Safety Act, or KOSA, that I'm proud to lead. KOSA sets a national standard to protect kids across America. It mandates default safeguards and easy-to-use parental controls to empower families. It blocks children from being exposed to or targeted with ads for illegal or inappropriate content like drugs and alcohol.

It takes on addictive design features that keep kids hooked and harm their mental health, and most importantly, it holds Big Tech accountable with mandatory audits and strong enforcement by the FTC and state attorneys general. I made precise changes to ensure KOSA is durable. Don't mistake durability for weakness. Folks, this bill has teeth. By focusing on design features rather than protected speech, we will ensure it can withstand legal challenge while delivering real protections for kids and their families. I'm proud of the members of this subcommittee for working on legislation to address a myriad of harms and challenges. This issue is personal to every one of us up here. It shows in the number of bills before us today. I know this is a shared bipartisan goal. My office is open. Call me or find me on the floor. I think you'll know that I'm willing to listen. Let's find a way to work together, and this is not a partisan issue, folks. Let's find a way to work together and save America's kids from the threats they're facing online. That's the bottom line. I yield back the balance of my time. The chairman recognizes the Ranking Member, Ms. Schakowsky, for her five minutes for an opening statement. You're recognized.

Rep. Jan Schakowsky (D-IL):

Thank you, Mr. Chairman. There is not a single thing that we deal with that in my view is more important than to take care of our kids, and I do not believe that the legislation that has been offered by the Republicans does the job, and it's really frustrating because we have been working on this bill and moving it around here and there for a long time. Yesterday I had the privilege of meeting with three women who are here today in the audience who have been here for five years because their children died. Not necessarily they didn't need to die and it's the kinds of things that we can learn from them that we can do much better. I mean to say that who can't states? Not states. Yeah. The different estates. What is it? States, what not states. The states. States, sorry. Cannot do their own bill. Cannot do their own bill. I'm sorry. We have a long, long way to go to protect our children and to make sure that all are involved. Now I want to yield at this point to Congressman Mullin to continue in this discussion.

Rep. Kevin Mullin (D-CA):

Thank you, Ranking Member Schakowsky. Thank you, Mr. Chair, for convening this hearing on a very important topic. Protecting kids is perhaps the single most unifying bipartisan issue we have here in Congress. We all want to keep our kids safe. I myself am the father of two small boys who aren't yet, but will be before I know it, at an age when they'll be navigating the internet on their own. To say I am worried for them is an understatement, but the country and Congress are not without tools to keep people safe online. In fact, more than 100 years ago, the Federal Trade Commission was set up to prevent harms to consumers of all kinds, including kids. Indeed, most of the bills we will be discussing today rely on the FTC to enforce them. So that is why it has been stunning to see the contempt in which the Trump administration has held the FTC.

Rather than seeking from Congress a change in the law, President Trump has simply attempted to unlawfully fire two of its commissioners, eliminating bipartisan input. Meanwhile, the chair of the FTC has attempted to dramatically reduce the commission staff through buyouts and other drastic measures. He's actually bragged that he wants to get the commission staff size to its lowest level in 10 years. The chair has also been using the tools of the FTC to wage a culture war against medical professionals and parental choices he disagrees with. This is more than a harmful deviation from the commission's mission. It undermines consumer protections for all of us by taking cops off their normal beat. Yet today we'll be talking about all the things we want and need the FTC to do to protect kids online, but who will be doing that work? The remaining employees at the FTC, who are already stretched too thin? As evidenced by this long list of bipartisan bills, there's clearly a lot of alignment across the parties about the role we want the FTC to play. In that spirit, I hope that as we discuss the legislative proposals today, we acknowledge the need to fully fund the FTC, protect its bipartisan commission structure, and ensure the FTC is focused on the mission Congress gives it. I look forward to a robust discussion today. With that, I yield back.

Rep. Gus Bilirakis (R-FL):

The gentleman yields back. Now I'll recognize the chairman of the full committee, Mr. Guthrie, for his five minutes.

Rep. Brett Guthrie (R-KY):

Thank you, Chairman Bilirakis. Thanks for having this hearing today, and thank you to our witnesses for being with us here today. Tragedy strikes children every day online. In March, I shared the story of a constituent who took his life due to a sextortion scheme. I know each of us here today and many others have similar stories to share, and while we passed the Take It Down Act into law earlier this Congress to address sextortion, countless other harms persist and it is our responsibility to find a solution. That is why we're here today. We have almost two dozen bills before us that take a broad approach to create a comprehensive strategy to protect children online. Imagine a 14-year-old child trying to download a social media app on their phone. There are many different layers needed to protect them from harm, and we are discussing a comprehensive approach today where different types of legislation could work in harmony to address various concerns.

For example, age verification is needed first and foremost, including appropriate parental consent even before logging in. Once a user's age is known, privacy protections under COPPA 2.0 would be triggered. We also have a range of bills to further address issues like parental monitoring of online activity, restrictions on the types of apps kids can access, how information is presented to kids in apps, and how children's data is used by online platforms. And finally, the Kids Online Safety Act provides robust parental controls and limitations on harmful design features to ensure that platforms live up to these standards. Now I would note that we went to great lengths to address concerns that members of the House on both sides of the aisle raised regarding KOSA in the last Congress, as well as concerns that the previous version would not pass a legal challenge and thus not protect a single child.

The KOSA we are considering today still holds platforms accountable while addressing these concerns. Further, when it comes to AI chatbots, we learned about this in committee a couple of weeks ago. The Safe Bots Act and AWARE Act will ensure users are appropriately informed when they're using AI and that there are educational resources for kids, parents, and educators on the safe use of AI chatbots. These exemplify our strategy that Chairman Bilirakis has highlighted. The online world is large and complex and there is no silver bullet solution to protect kids. Each bill we're looking at today could be a piece of a puzzle designed to work together to create the safest possible environment for children online. They empower parents through parental consent mechanisms, standards for parental tools, and educational resources. They are curated to withstand constitutional challenges. A law that gets struck down protects no one, and if that happened, we'd fail to protect the very children we're here to protect. We have a unique opportunity to work together to craft a multifaceted solution to protect children online. Thank you again to our witnesses for being here. We really appreciate the effort you made to be here. We greatly look forward to the discussions and I'll yield back.

Rep. Gus Bilirakis (R-FL):

The gentleman yields back. Now I recognize the Ranking Member of the full committee, Mr. Pallone, for his five minutes.

Rep. Frank Pallone (D-NJ):

Thank you, Chairman Bilirakis. Today we're discussing the important topic of youth online safety, and as more of our lives are lived online, there have been tremendous changes in how we communicate, socialize, and learn. And with these changes come new challenges to ensuring the health and wellbeing of all Americans, but particularly our youngest and most vulnerable. We can all agree that we want our kids and teens to be safe online. Congress, along with parents, educators, and states, can and should play an active role in keeping minors safe. And that role includes prioritizing strong comprehensive data privacy legislation, which unfortunately is not included in the 19 bills we're considering today. Comprehensive data privacy legislation is something I've cared about for many years, but in the absence of data privacy legislation, companies will continue to collect, process, and sell as much of our data and the data of our kids as possible.

And this data allows companies to exploit human psychology and individual preferences to fuel invasive ads and design features without regard to the harm suffered by those still developing critical thinking and judgment. Artificial intelligence is only accelerating existing incentives because, like social media, AI relies on the exploitation of our data. Without such a strong legislative solution for children, teens, and all Americans, we must recognize that the measures we take in Congress will not address the full scope of the problems perpetrated by an online ecosystem fundamentally built on reckless and abusive data practices. We can and should do more for our children and for all consumers, but if comprehensive privacy legislation was easy, it would already be law, and the urgency of addressing harms to children and teens presents an opportunity to make progress towards ensuring the internet is a safer place for all Americans. And this is why I'm pleased we'll be discussing my bill that will prevent shadowy data brokers from selling minors' data and allow parents and teens to request the deletion of any data already in the hands of brokers.

We simply should not allow nameless data harvesters to profit off of our kids' data. Our kids deserve the right to enter adulthood with a clean slate, not a detailed dossier that will follow them throughout their adult lives. They also deserve online safety legislation that will make the internet safer, not put their data and physical safety more at risk. And I'm concerned that mandating third-party access to children's data and requiring additional collection and sharing of sensitive data before accessing content, sending a message, or downloading an app would move us in the wrong direction in the fight for online privacy. Congress must also remember that unfortunately many kids find themselves in unsupportive or even abusive or neglectful households. There can be real-world harm from allowing parents complete access and control over their teen's existence online. Instead of shifting ever more burden onto parents and teens and putting ever more trust in tech companies, we can give everyone safer defaults and more control over their digital lives.

We can also require companies to evaluate their algorithms for bias and harms before making our kids the guinea pigs. And we can resist efforts to preempt existing protections and let states continue to respond to rapidly evolving technologies like AI chatbots and enforce their existing child safety and privacy laws, and we can all stand up for an independent Federal Trade Commission. Now, the FTC under both Republican and Democratic leadership has consistently been America's strongest champion against the abuses of all who seek to exploit our nation's children and adults for profit, both on and offline. When President Trump attempted to illegally fire the FTC's Democratic commissioners, he made our children less safe online. If my Republican colleagues want to further empower the FTC to protect kids and teens, they must join Democrats in standing up for a bipartisan and independent FTC. They should join us in demanding that the Democratic commissioners be reinstated. So I look forward to the discussion today on this important issue, and I thank you, Mr. Chairman. I yield back the balance of my time.

Rep. Gus Bilirakis (R-FL):

The gentleman yields back. We appreciate that. And now we are going to introduce our witnesses for today. Mr. Marc Berkman, CEO of the Organization for Social Media Safety. Welcome, sir. Mr. Paul Lekas, Executive Vice President, Software and Information Industry Association. Welcome. Ms. Kate Ruane, Director of the Free Expression Project, Center for Democracy and Technology. Welcome. And Mr. Joel Thayer, who is president of the Digital Progress Institute. Welcome to all our witnesses. So Mr. Berkman, you're recognized for your five minutes of testimony.

Marc Berkman:

Thank you, Mr. Chairman. Good afternoon, and thank you to the distinguished members of this committee for the opportunity to offer my organization's social media safety expertise at this historic legislative hearing to protect our children from online harms. My name is Marc Berkman and I am the CEO of the Organization for Social Media Safety, the first national non-partisan consumer protection organization focused exclusively on social media. The reason for this legislative hearing must be explicitly clear. Social media is harming children. It is harming millions of America's children. Up to 95% of youth ages 13 to 17 report using a social media platform, with more than a third saying they use social media almost constantly. Nearly 40% of children ages eight to 12 use social media. In our own study with UCLA School of Education, including over 16,000 teens, we have found that 50% self-report using social media for more than five hours daily.

The evidence, based on credible research and testimony in this very chamber, shows severe, pervasive harms related to adolescent social media use: cyberbullying, harassment, predation, human trafficking, drug trafficking, violence, fraud, extortion, suicide, depression, cognitive impairment, eating disorders, and more. Social media-related harm is far-reaching, impacting homes in every congressional district, families of every demographic. But to understand the true toll we need look no further than the many parents here with us today who have tragically lost a child to a social media-related threat. Sitting behind me is Rose Bronstein. Her son Nate Bronstein, forever 15, died by suicide after suffering severe cyberbullying over social media. And Samuel Chapman. His son Sammy Chapman, forever 16, died after ingesting fentanyl-poisoned drugs that he easily acquired from a drug dealer operating on social media. Rose and Sam and the many other angel parents who traveled from across the country to be here today have collectively spent years fiercely and bravely advocating for urgently needed legislative reform.

In the hope that Congress acts to ensure that other families do not have to suffer such tragedies, we owe them a debt of gratitude. The record on the extent and severity of the social media-related harm impacting our children is clear. Yet the social media industry continues to fail to protect our children. Thanks to the brave work of whistleblowers and attorneys general across the country, we know that the industry has an entrenched, durable conflict of interest when it comes to safety. Even in the face of mounting child fatalities, social media executives have prioritized growing the number of child users and engagement on their platforms over our children's safety, making a federal legislative response absolutely essential and critically urgent. The social media industry has been too slow, their solutions too ineffective, their apathy sadly too apparent, and so we are grateful that the committee today recognizes that a broad approach with multiple tactics is needed.

Resources for education, accountability and rules for platforms, research, privacy reforms, and critically, safety technology. I would like to highlight Sammy's Law. One of the most effective ways for parents to protect children is by using third-party safety software, which can provide alerts to parents when dangerous content is shared through children's social media accounts, enabling life-saving interventions at critical moments. For example, if a child is suffering severe cyberbullying via social media, then a parent who has received an alert through third-party safety software can immediately provide critical support. We know that this intervention is highly effective. Over the last several years, safety software companies have provided alerts to parents that have protected millions of children, saving numerous lives. Sammy's Law will finally provide families with this option on all major social media platforms while also substantially increasing data security and child privacy over the status quo. We urge this committee to pass this bipartisan, common-sense legislation that will save lives. American families have sat for years living in quiet despair, watching social media steal from their children's potential and wellbeing. We are hopeful that this year this committee can join together and deliver the solutions families so desperately need. Thank you and I look forward to your questions.

Rep. Gus Bilirakis (R-FL):

Thank you so very much. Now I recognize Mr. Lekas for his five minutes. You're recognized, sir.

Paul Lekas:

Chairman Bilirakis, Ranking Member Schakowsky, and members of the subcommittee, thank you for the opportunity to appear before you today. My name is Paul Lekas and I serve as Executive Vice President for the Software and Information Industry Association. SIIA represents nearly 400 organizations at the forefront of innovation, from startups to global leaders in AI, cloud computing, education technology, and more. Our mission is to support policies that foster a healthy information ecosystem. That cannot occur without responsible data use. We share the subcommittee's goal of making the internet a safer place for all. We appreciate that the subcommittee has taken a fresh look at how to achieve this. We are here to urge passage of bipartisan legislation that protects online youth privacy and safety. It's exactly for this reason that we worked closely with industry and policy makers to develop our child and teen privacy and safety principles in 2024.

Many of the themes in the principles are reflected in provisions of the bills under consideration today. I'd like to highlight a few of these. First is data minimization. Companies must be required to minimize the collection of personal data from youth and restrict how that data is used. This is widely recognized as a best practice by privacy experts and is even more important in the youth context. Second, we believe companies should not advertise to youth based on their online behavior, nor should they create profiles of youth for targeted advertising. Contextual advertising should remain permitted to ensure that youth receive age-appropriate content. Third is empowerment and transparency. Legislation should incentivize companies to provide easy-to-use tools that allow families control over their settings and data. Transparency is key to building trust and helping families make informed decisions. Finally, we need national consistency. The internet is not partitioned by state lines.

The current patchwork of state regulations creates confusion for both platforms and consumers. A federal law must be strong and preemptive to ensure all American children have the same high level of protection. As Congress considers new legislation, a threshold issue is to strike the right balance between the Constitution's protection of free expression and the governmental interest in youth safety. Thus far, many online safety laws have failed to strike that balance. Several states have proposed inherently content-based regulations requiring platforms to judge speech as harmful or detrimental. These regulations trigger strict scrutiny, and courts have historically struck them down for being overbroad and infringing on the free expression of adults and minors, including content reflective of their own religious or political speech. Recent case law provides a roadmap. The Supreme Court in 2025 confirmed that age verification is constitutional when targeting sites with unprotected sexually explicit material. On the other hand, the Ninth Circuit in 2024 warned that broadly requiring platforms to assess the risk of harm can transform a design regulation into an unconstitutional content regulation.

Legislating in this area is possible but requires precision. There is much that is permissible: securing data, restricting certain features, leveraging non-technical tools like digital literacy. Vague duty of care models like the Senate version of KOSA that require filtering content based on subjective harm will invite and fail constitutional scrutiny. The version of KOSA before this subcommittee reflects a serious attempt to grapple with this challenge. In addition to creating new tools, we support modernizing older ones like COPPA. We support codifying recent FTC regulations and, crucially, strengthening the privacy protections at the intersection of COPPA and FERPA. We must ensure that when schools contract with vendors, student data is used solely for educational purposes and we are not overburdening our families. We also urge Congress to avoid unintended consequences in two important but very different areas. The first is age assurance. Mandating age verification requires collecting sensitive data, often government IDs, from all users, not just kids.

We should incentivize age estimation and parental controls, which protect youth without creating high-value data repositories for cyber criminals. We must also recognize that everyone in the ecosystem has a role to play. The second involves the collection and use of third-party data. Many institutions rely on data about minors for essential services like extending auto insurance to teen drivers, helping youth to develop credit history, protecting minors from identity theft, college scholarships, financial aid, countering human trafficking. The list goes on. We should prevent actors from misusing youth data without disrupting vital, societally necessary practices. Online safety and privacy for children and teens requires a holistic approach. There is no silver bullet. There is room for legislation, and the subcommittee is considering an array of bills to identify the right mix. I welcome your questions and look forward to continuing to work with you to advance legislation to keep youth safe while preserving the internet as a resource for learning and connection. Thank you.

Rep. Gus Bilirakis (R-FL):

Thank you, Mr. Lekas. I'll now recognize Ms. Ruane for your five minutes. You're recognized.

Kate Ruane:

Thank you so much. Thank you, Chair Bilirakis, Ranking Member Schakowsky, Chair Guthrie, and Ranking Member Pallone for the opportunity to testify today. I am Kate Ruane, director of the Free Expression Project at the Center for Democracy and Technology. Children will use online services for their entire lives. The subcommittee is right to focus on their future. I'd like to raise five points essential to protecting children online. First, Congress should address root causes of online harms, including privacy. Comprehensive consumer data privacy legislation is the best way to protect everyone online. Currently, many online services' business models are based on advertising sales powered by platforms' collection, use, and sale of personal information. This is harmful because it is privacy invasive, increasing the risks of data breaches. It is also deficient because it ignores better signals of what users value about online services by conflating engagement with user preference. Congress could realign this business model to benefit everyone through comprehensive privacy legislation.

Enhancing children's privacy protections is also a laudable goal. Measures like COPPA 2.0 and the Don't Sell Kids' Data Act, crafted properly, would address some of the root causes of harm to kids online. But the bills under consideration today put the FTC in charge of enforcement at the same time the current administration is undermining its independence and ability to enforce the law fairly. As the committee considers how to protect kids, it must also ensure that the FTC executes its policies to protect all children and support the rule of law. Second, protecting children includes protecting their right to express themselves online. Minors use social media for everything, including to access news, communicate with family and friends, do their schoolwork, and create art. Children have First Amendment rights and, as Justice Scalia wrote, only in relatively narrow and well-defined circumstances may the government bar public dissemination of protected materials to them.

We are encouraged that today's Kids Online Safety Act narrows the overly broad duty of care in an attempt to grapple with the tension between ensuring safety and protecting free expression. This is a difficult balance to strike, but it's preferable to flatly banning minors from accessing critical speech services like social media. We hope to work with the committee as the bill moves forward. Third, age assurance creates significant privacy risks that should be mitigated in legislation. Age verification and assurance raise significant concerns for all users' rights. To name just two: age assurance techniques mean either more collection or more processing of sensitive data, leading to increased risks of data breaches, which could include people's IDs or biometric information. Age assurance also chills online engagement with sensitive topics that people want to keep private. These concerns are not theoretical. Researchers at Georgia Tech have a forthcoming study demonstrating that the privacy and security concerns related to the use of age assurance techniques are playing out in real time with increased risks for end users.

If Congress nevertheless feels obligated to impose age assurance requirements, it should mitigate the risks by requiring the highest levels of privacy guardrails, including data minimization, deletion, reliance on high quality data transparency and accountability. Fourth, creating good policy requires taking into account the perspectives of minors and their caregivers. CDT has conducted research asking a sample of teens and their parents about features of current child safety proposals. To give just two salient examples of their views, first, parents and teens expressed safety and privacy concerns with subjecting minors to age assurance methods preferring parent centered approaches that enable parents to declare their children's age. Second, we found that teens preferred algorithmic recommendations. Teens trust these feeds precisely because they feel that they are in control of that content. Knowing what parents and minors want and how they currently navigate their online lives will improve policymaking at both companies and in legislatures.

Finally, Congress must not unduly restrict a state's ability to act. Many of the bills at issue today would preempt state laws and regulations that simply relate to the provisions in the bills. We're concerned that the “relates to” standard will broadly preempt many existing state laws and emerging legislation that provides significant protections to children online even if state law would provide better protections for kids. To make matters worse, public reporting indicates that Congress may be negotiating a deal to pair a kid safety package with the controversial provision preempting state laws on artificial intelligence. It would be ill-advised to broadly preempt states’ ability to enact laws related to AI's impact without putting in place strong federal protections at least equal in scope to any such preemption. Thank you for the opportunity to testify and I look forward to your questions.

Rep. Gus Bilirakis (R-FL):

Thank you very much. Appreciate that. Now I recognize Mr. Thayer for your five minutes.

Joel Thayer:

Chairman Bilirakis, Ranking Member Schakowsky, and members of the esteemed committee, thank you for holding this incredibly important hearing to advance legislative solutions that ensure the health and safety of kids online. I'm Joel Thayer. I'm a practicing attorney and I sit as president of the Digital Progress Institute, a think tank dedicated to finding bipartisan solutions in the tech and telecom policy spaces. Finding political consensus on incremental solutions to today's acute concerns in tech policy is at the core of our advocacy. Indeed, some of the bills before you today are based off of frameworks we helped develop. This hearing, coupled with its bevy of bills, makes a few things abundantly clear. First, we care about our children and their wellbeing on and off their devices. Second, we are no longer satisfied with the status quo. In so many ways, today's youth are robbed of their innocence far more easily than ever before.

This is thanks to the ubiquity of mobile devices and the services they host. Large tech platforms are inundating kids with lewd and lascivious exhibitions and even connecting them to child predators. This is well-documented. Senator Marsha Blackburn even went as far as describing Instagram as the premier sex trafficking site in this country. Despite what the tech companies trumpet in their press releases, parents are left with almost no resources to combat their encroachments. Worse, tech companies are in fact perpetuating the problem. Herein lies the rub. Big Tech’s form of child exploitation pays very well. Our children are not only Big Tech's users but are also their product. Meta specifically targets young users and even places a monetary value of $270 on each child's head. But the issue is worse still. Children are not only feeding Big Tech algorithms to sell to advertisers but are also used to inform their respective AI programs.

AI programs like chatbots that have already resulted in some child deaths by encouraging kids to commit suicide. At DPI, we are all for winning the AI war, but we do not believe children should serve as its casualties. It is why measures you are considering today are so essential to both ensuring that we remain dominant in the AI race and we protect our most vulnerable, our children. However, we cannot ignore the long road ahead to get these passed. Big Tech lobby is not only fierce but also unrelenting. They admire the validities of these solutions by instilling fears of consumer forfeiting privacy and the stifling of speech. But this is all a farce. As to privacy, I say consider the source courts, regulators and consumers have found every one of these companies to have violated their user's personal privacy. Take Apple for instance, that proclaims your privacy is a fundamental human right.

Discovery from a class action lawsuit, however, reveals that since October 2011, Apple had routinely recorded users' private conversations without their consent and disclosed those conversations to third parties such as advertisers. Some of these disclosures included private conversations with their doctors. So much for privacy being a human right. One of Google's privacy violations was so egregious that the Federal Trade Commission created a first-of-its-kind settlement requiring Google to implement a comprehensive privacy program that it unbelievably didn't already have. Social media companies do not fare better. Again, an FTC report found that social media companies like Snap, Meta and TikTok have all engaged in vast surveillance of users with lax privacy controls and inadequate safeguards for kids and teens. Point being, these companies are hardly an authority on proper privacy hygienics. As to free speech, I stand on the shoulders of Third Circuit Judge Paul Matey, who poignantly stated that Big Tech smuggles constitutional conceptions of a free trade in ideas into a digital cauldron of illicit loves that leap and boil with no oversight, no accountability and no remedy.

And it's true. Big Tech has leveraged the admittedly messy First Amendment jurisprudence to turn our bulwark for free expression into a sword to cut down laws they frankly don't like. But here's the good news. The Supreme Court is creating a clear pathway for these measures. To start, in TikTok v. Garland, the Supreme Court categorically rejected TikTok's argument that the mere regulation of an algorithm raises First Amendment scrutiny. Even better, the court clarified that a law regulating a tech platform doesn't invite First Amendment review if the law's primary justification is not content-based, even if its ancillary justifications are. Some of the bills before us today, particularly the App Store Accountability Act, the SCREEN Act, and the Kids Online Safety Act, have taken these considerations into account and are poised to resolve many of the challenges parents are facing in today's digital age with respect to child safety. With that, I appreciate the committee's time for inviting me to testify and I look forward to all of your questions and working with you further.

Rep. Gus Bilirakis (R-FL):

Thank you so much. Now I'll begin the questioning and recognize myself for five minutes. Mr. Berkman, for too long, the narrative has been that online safety is solely a parent's responsibility, but as you know, 80% of parents say that harms now outweigh the benefits and they feel completely outmatched by these highly complex platforms designed to keep their kids hooked. My bill, the Kids Online Safety Act (again, it's a discussion draft), fundamentally flips this dynamic. We require platforms to enable the strongest safeguards by default rather than forcing parents to dig through multilayered menus to find these critical tools. Can you explain why shifting the burden of safety from the parent to the platform can change the trajectory of this dire safety crisis?

Marc Berkman:

Thank you for the question, Chairman, and I think it's a very apt one. You are correct that parents across the country are frustrated, they're saddened and they feel defeated. We work with hundreds of thousands of families, partnering with K through 12 schools teaching essential social media safety skills, and so we can hear from families on the ground nationwide. Children are using multiple platforms many hours a day with many different features and they are not set to be safe by design. And so we do believe that the provisions you've put forward in your legislation would materially move safety forward and ensure that parents are not battling for their children's safety alone. And I would note Sammy's Law as well would give parents one unified tool to help maximize safety and so that is why we support that bill.

Rep. Gus Bilirakis (R-FL):

Thank you very much. Mr. Thayer, in your testimony you state that KOSA, along with many of the other bills considered today, will resolve many of the challenges parents are facing in today's digital age with respect to child safety. KOSA includes numerous other protections such as parental controls, default settings, policy to address design features and mandatory audits. Can you explain how KOSA will empower parents to protect their kids online and will hold Big Tech accountable?

Joel Thayer:

Well, you took the word right out of my mouth. The key word there is accountability. And right now there is absolutely none at this point. We have to rely basically on their musings via press releases and other terms of service that we hope they will adhere to. And we have actual data to support that. At this time, TikTok, Meta and the like all have so-called parental protections and they all have failed miserably. The problem is, is that there are frankly no laws that actually articulate what their goals are and what they're responsible for. Things like KOSA, which I prefer over more general statutes, articulate clearly what these companies are responsible for doing and when they run afoul of those responsibilities and when we can enforce. At this point they have no sword of Damocles over their head, and even if they did, we wouldn't be able to cut it down to get the results that we need to protect kids and protect parents. So KOSA will go a long way in ensuring that the status quo is not going to be the status quo forever.

Rep. Gus Bilirakis (R-FL):

Thank you very much. Mr. Lekas, in your testimony you call for online tools that empower children, teens, and families. In your view, are KOSA requirements for platforms to implement new safeguards and parental controls workable?

Paul Lekas:

Thank you for that question. In our sense, and we're still reviewing this with our members, but the tools that would be required under KOSA are definitely workable. There's a number of companies that have implemented some of these tools already, but we need them to be implemented across the board. We need to protect all children on all platforms. The other thing I would say about KOSA is the requirements to establish procedures and processes and so forth seem really well designed to address the constitutional concerns that have come up with respect to earlier drafts of this legislation.

Rep. Gus Bilirakis (R-FL):

Very good. Thank you very much. I yield back and I'll recognize now the Ranking Member of the subcommittee, Ms. Schakowsky, for her five minutes of questioning.

Rep. Jan Schakowsky (D-IL):

The biggest guess. Okay. Ms. Ruane, I wanted to ask you, what is the biggest gap that you see right now that if we were to begin right now to fix it, what do you think is the biggest gap that we are encountering?

Kate Ruane:

Thank you, Representative Schakowsky, for the question. I see a number of gaps that would be left by the legislation. Even with the 19 bills, I see a number of gaps that would be left. The biggest one that I see is the preemption standard within all of the bills that are currently before the committee. We are concerned that the "relates to" preemption standard will preempt state laws that specifically protect kids and also state laws that have general protections that also apply to children. So state UDAP laws, state tort laws and also state comprehensive privacy laws that have specific protections for children, including enhanced protections for children's data as sensitive data. Texas, for example, protects all children's data as sensitive data at a higher standard than the current COPPA does. The current preemption standard under the bills that we're considering today would likely preempt that law as applied to kids.

If we finish this process with the “relates to” standard in place, we will likely wind up with children having less protections at the state level than they do today and we will have failed to do our jobs. So I think that's one of the biggest gaps that I see. I also see that there is not enough in any of the bills that require age verification. We do not see sufficient guardrails to protect and require privacy and security practices within the age assurance requirements and to ensure that any age assurance techniques that are used are fairly and equitably accessible to everyone that has to go through the age assurance process.

Rep. Jan Schakowsky (D-IL):

When I listen to you, I think there are so many things that need to be changed as soon as possible and the idea that the states cannot do anything in the legislation is quite shocking to me. And so I just really think that we have to go back to the drawing board and make sure that we know. The other thing I wanted to ask you is about LGBTQ kids and making sure that they have some authority to be there to be heard. And I wonder if you could comment on that?

Kate Ruane:

Absolutely. Thank you for the question, Ranking Member. We are significantly concerned that any censorship incentives within some of the bills that are before us today and within the Senate's version of KOSA could lead to the censorship of LGBTQ speech, access to that content by LGBTQ kids, and speech by LGBTQ kids, because oftentimes that speech can be miscategorized as sexual speech or as speech that falls into categories that platforms look to take down because they're concerned about enforcement and about harms to children related to accessing sexualized content. Our second concern with respect to that is that the bills at issue today delegate enforcement authority to this current FTC, which has demonstrated its willingness to target groups that it disfavors, including transgender people, their families, and their caregivers. If we do not have an independent FTC that is enforcing the law fairly as it applies to all children and protects all children, we have significant concerns about how these bills would operate in practice, even if the way that they are currently constituted is neutral and would protect everyone.

Rep. Jan Schakowsky (D-IL):

So appreciated. And with that, I yield back. Thank you.

Rep. Gus Bilirakis (R-FL):

Gentlelady yields back. Now recognize the chairman of the full committee, Mr. Guthrie, for his five minutes of questioning.

Rep. Brett Guthrie (R-KY):

Thank you. I appreciate that and thank you all for being here. So Mr. Berkman, there is no single solution for protecting kids online and that's why we're advancing a comprehensive strategy of nearly two dozen bills that build in layers of protection from age verification and COPPA 2.0, privacy protections to parental tools and default by design. Can you speak to the urgency of this moment and the need for Congress to protect kids and children and teens online?

Marc Berkman:

Yes, I appreciate the question. We're seeing still an alarming disconnect in terms of the awareness of how severe and pervasive the harms of social media are. We are seeing millions of children across the country harmed. We are on the ground working with families and the actual data is absolutely stunning and the stories are heartbreaking. And so I appreciate the approach taken by the committee. We need to find consensus and pass meaningful social media safety legislation this year to protect our children.

Rep. Brett Guthrie (R-KY):

Thank you. And to add to that, Mr. Lekas and Mr. Thayer, can you explain why we need a layered comprehensive strategy rather than just one single bill?

Joel Thayer:

Thank you for the question. And I think the question sort of beckons the answer, which is that individual layers require different policy solutions. The same thing that would apply to a social media company may not be apropos to maybe something on the app store layer or even the operating system layer or device layer. Each one of these folks has a role to play, or each actor has a role to play, and every policy solution should be attuned to what those particular roles are. So a comprehensive approach, whether it's privacy, competition or even child safety, has to be administered and thought through. So again, in full agreement with what I've heard today, and it seems to be the case that each individual layer requires a special sensitivity to what those particular areas do and needs a policy solution that meets them where they are.

Rep. Brett Guthrie (R-KY):

Thank you, Mr. Lekas.

Paul Lekas:

Thank you for that question. I think the overall goal that seems to be the consensus of everyone in this room is we need to protect youth online. We need to improve the privacy protections and the safety protections for youth. And I think stepping back and looking at all the different components here and trying to identify what's the best way to do each of these components is a really smart way to proceed. Congress has been debating legislation in this space for years and it's urgent to move forward now. So we appreciate the approach taken by the committee.

Rep. Brett Guthrie (R-KY):

Thank you. And also, Mr. Thayer, in my opening statement I emphasized that a law that gets struck down by courts won't protect a single child. From a legal and constitutional perspective, why is it so critical that Congress can step in to establish a single uniform national standard? And how does this package of bills learn from the defects of laws that have failed judicial review?

Joel Thayer:

Well, as someone that really does appreciate the structure of our constitution and especially the federalist structure that they've created, and again, leveraging the insights from different justices, much smarter legal minds than my own. Justice Brandeis, for instance, said that the states are the laboratories of our democracy. But at the same time, those laboratories yield results. And I think what we've seen in the past 10 years, absent federal insight as to what Congress actually wants or what the national standard is, has allowed for a lot of these experiments to go all the way through the courts. The courts have evaluated each one of these standards and have given, as I said in my opening testimony, a pathway for Congress to actually set those standards. Because part of that experiment is not just only seeing where Congress can step in. It's seeing the limitations that states have in fully protecting children. A child in Texas should be equally protected as a child in California or in Iowa and everywhere else. And that's where I think the federal standard is probably preferable in many cases. But again, that comes from a lot of the insights that we're getting from states.

Rep. Brett Guthrie (R-KY):

Okay, thank you. And that concludes my questions. I'll yield back.

Rep. Gus Bilirakis (R-FL):

Gentleman yields back. Now recognize the Ranking Member of the full committee, Mr. Pallone, for his five minutes of questioning.

Rep. Frank Pallone (D-NJ):

Thank you, Chairman Bilirakis. Data brokers collect and sell billions of data points on nearly every consumer in the United States, including children. And while I believe that comprehensive privacy is the preferred path to addressing the risks of data brokers, I also believe it's imperative to do what we can now to prevent data brokers from exploiting the personal data of our kids. So I have a series of questions for Ms. Ruane. If you could just answer them quickly, otherwise I won't get through them. First is why is it so important that kids and teens are able to enter adulthood without an extensive profile already built of their online activities and inferences about who they are?

Kate Ruane:

Thank you, Ranking Member. Well, kids are growing up online, right? They are sharing all of their information all of the time. They are engaging constantly and their data is also being collected constantly in ways that they might not understand or even be aware of or be developmentally capable of making decisions about. They don't know they're making a trail that's going to follow them forever. But what's most important is that children are not commodities and their lives and privacy have to be respected. Ensuring that their information cannot be bought and sold by shady data brokers will meaningfully protect their privacy in general and their ability to enter adulthood without a profile that will follow them for the rest of time.

Rep. Frank Pallone (D-NJ):

Well, thank you. Now, many of the bills being considered for this hearing today contain broad preemption clauses, in many cases preempting any state laws that relate to the legislation. So the next question is, what are some unintended consequences of such broad preemption? Could common law and product liability claims currently being used to hold tech companies accountable be impacted by that?

Kate Ruane:

Yes, absolutely. We are concerned that currently existing state protections that protect everyone will no longer be able to protect children across all 50 states. One other thing to note is a lot of the bills today focus on legacy platforms and services that have existed for a while, and they do not focus on emerging technologies like generative AI. If we institute a broad "relates to" preemption standard across all of these bills, we run the risk of preempting states’ ability to act on emerging technologies quickly.

Rep. Frank Pallone (D-NJ):

Okay. Many of the bills being discussed today also have provisions that expand access to the data of teens, both to third parties and to parents, in the name of increased safety. And while I encourage parents and teens to have conversations about teens' online activity, that also needs to be balanced against teens' rights to privacy. So as we consider the bills before us, how do we best balance privacy and safety? Are there any specific provisions in these bills that pose a threat to kids' privacy?

Kate Ruane:

Absolutely. Parents and kids' families need tools to help them navigate their online lives. And CDT fully supports giving parents those tools and children those tools. But we are concerned about any provisions that give parents access to and control over the content of kids' communications. One thing that the current version of KOSA does well is it ensures that parents' platforms do not have to disclose the contents of kids' communications. But today's new version of KOSA, of COPPA 2.0, for example, contains language that might allow parents to delete kids, to delete their kids' data and to delete content that they may have created. When we talk to parents and kids about whether they wanted to have those sorts of tools, parents told us that that seemed overly burdensome for parents and kids told us that seemed overly invasive to them. Moreover, as you noted in your opening statement, not all kids are growing up in families that are perfectly supportive and some kids are growing up in situations where they don't have families at all.

Rep. Frank Pallone (D-NJ):

Well, that's sort of my last question because I know we're going to run out of time, but unfortunately, the reality is that there are parents who abuse or neglect their children. And for these kids, access to safe spaces online can be critical. So the last question is how do we foster healthy connections online while ensuring that kids and teens are protected? Are there potential risks to children and teens in dangerous or hostile family environments caused by the measures in these bills?

Kate Ruane:

Well, when we talked to teenagers about how they use, for example, direct messaging services, they told us that what they really want are more controls over who gets to message them. So the ability to reduce their own visibility online. Congress could also incentivize more friction or speed bumps in interaction with unknown profiles or unknown adults. We also took note that one of the bills today would restrict access to ephemeral messaging. Now while it's understandable that in some circumstances ephemeral messaging could be linked to harm, we also want to note that it could also be used to protect kids' privacy. So for example, children might communicate with each other, teens might communicate with each other through racy text messages. Ephemeral messaging could reduce the likelihood that those messages be used for abusive purposes like non-consensual intimate image distribution at a later date. Ephemeral messages could also be helpful for children who have relationships with domestic violence victims or who are domestic violence victims themselves to ensure safety and to ensure their ability to communicate privately without risk to their physical selves.

Rep. Gus Bilirakis (R-FL):

Thank you. Thank you, Mr. Chairman. Thank you very much. And the gentleman yields back. We'll recognize the vice-chairman of the subcommittee, Mr. Fulcher for his five minutes of questioning.

Rep. Russ Fulcher (R-ID):

Thank you, Mr. Chairman. A question for Mr. Berkman. This has to do with recommendations you might have for young adults who are engaging with some self-harming behavior. In your testimony, you cite some statistics having to do with self-harm, cyberbullying, eating disorders and that particular topic. And we've also learned from various court filings that social media companies have known about this. They know this is going on. Not long ago we had some hearings with TikTok and we learned that they had sent 13-year-olds even more content on self-harm and eating disorders. And it came out also that Meta had pushed body image content when teens had expressed some dissatisfaction with their body. So these social media companies, they know about this. And I dealt with this in a previous life as a state legislator with some mental health issues. And I just want to ask you from your perspective, what recommendations or guidelines can you offer for this body here to address this growing problem?

Marc Berkman:

Thank you, Congressman for the question and I appreciate it. It is true, and something we really need to recognize across this country that these companies have a durable inherent conflict of interest between our children's safety and their profits. And so for the last decade, we have seen child harmed after child harmed. And so one of the bills that we really support here is a bill called Sammy's Law, and that would give parents families access to what we call third party safety software, software not connected to the social media platforms themselves that can provide families with critical lifesaving alerts like eating disorder related content, content involving suicidal ideation so that families can provide lifesaving support at the exact right critical moment, which right now they are not getting. And because of that, we are seeing fatalities.

Rep. Russ Fulcher (R-ID):

So engagement with the parents in that third party software is help.

Marc Berkman:

Third party software, and I would note it's significantly, it adds to privacy in a significant way over the status quo. This is technology that we now have that can limit alerts to parents on a list of harms that every member of this body agrees on and that children vitally need support to protect themselves from.

Rep. Russ Fulcher (R-ID):

Thank you for that. I'm going to come back to you here in just a second, but I want to get a question to Mr. Thayer here. We really need real consequences for bad actors and the enforcement that goes with it. All the bills we consider here today place regulatory obligations on tech platforms and include both the FTC's first fine authority and authorize state attorneys general to engage with this. First of all, do you see that as adequate, and can you discuss the benefits of these enforcement tools and how they will actually be used to hold some of these tech firms accountable?

Joel Thayer:

Yeah, DPI's position is the more cops on the beat, the better. State attorneys general have a very special role when it comes to consumer protection, as does the FTC. I think one value of these bills is that they are specific authority statutes as opposed to general authority statutes, which means you can target the specific harms on both the federal and the state level on exactly what they want. And in terms of combining the two, you have the FTC, which has a giant remit already. They have a national remit, whereas state attorneys general can react almost in real time or if not very close to the local issues that they can observe. So I've worked very closely with state attorney general's offices in California, Texas, and Louisiana. And I can tell you that they look at these statutes in a very comprehensive way, and they also look at it from the perspective of protecting their populations in particular. So again, in my view, you need both. You can't just put all the reliance on the FTC. You're going to need some state attorneys general looking at this as well.

Rep. Russ Fulcher (R-ID):

Great. Thank you. Mr. Berkman, I got 20 seconds left. Any input on that?

Marc Berkman:

Yeah, we agree. You need a robust enforcement regime. State attorneys general kind of added to that regime. We have leadership across the country from Mississippi, Illinois, Arkansas, and California attorneys general prosecuting in an effective way violations of law by the social media industry that are impacting children. They're doing it collaboratively, which means pooled resources. That means effective enforcement. That means saved lives. Great.

Rep. Russ Fulcher (R-ID):

Thank you, Mr. Berkman, and Mr. Chairman, I yield back.

Rep. Gus Bilirakis (R-FL):

Gentleman yields back. Now recognize Ms. Castor from the great state of Florida. You're recognized for five minutes of questioning.

Rep. Kathy Castor (D-FL):

Well, thank you, Mr. Chairman. Thank you for teeing up action to address the privacy intrusion, surveillance and targeting of kids online and the growing harms due to the malign design of online apps that lead to physical and mental harm to young people. I want to thank the witnesses, but the panel is missing a parent. There are many here today who could have testified. You're missing a young person. There are other young people here who could have testified. You're missing a psychologist or a pediatrician and one of the many whistleblowers, former tech company employees, to testify on the insidious schemes to addict and exploit children, even as tech company executives knew of the harm to kids. It was 2021 when Frances Haugen, the Facebook whistleblower, testified before Congress that Facebook, Meta, built a business model that prioritizes its profit over the safety of its users.

She said Facebook repeatedly encountered conflicts between its own profits and safety, and that Facebook consistently resolved those conflicts in favor of its own profits. She went on to testify that Facebook became a trillion dollar company by paying for its profits with our safety, including the safety of our children. Doesn't this remind you of the tobacco company propaganda of years ago? This committee knows too well that the Big Tech companies take advantage of young people online. That is why it is so disappointing that Republicans in the House are offering weak, ineffectual versions of COPPA and KOSA. These versions are a gift to the Big Tech companies, and they're a slap in the face to the parents, the experts and the advocates, to bipartisan members of Congress who have worked long and hard on strong child protection bills to protect them from what's happening online. So despite a broad agreement among House members and Senate members, we really need to address these watered-down bills.

And I'm hoping that the rank and file membership and the members of this committee will chart that course. For example, we should not put a ceiling on kids' protections at the state level or stifle the good work of the states or weaken knowledge standards that are critical to holding tech companies accountable. Ms. Ruane, you've already addressed preemption. You said a lot of the preemption language in the bills would provide less protection to kids. You've touched on weaker enforcement that's contained here. You talked about data minimization. There's now a difference in the knowledge standards too. The Senate versions are kind of viewed as the strong bipartisan versions. How does the Senate knowledge standard further protect young people compared to what's in the House versions?

Kate Ruane:

Thank you, Representative Castor. Yes, there is a difference in the knowledge standard in the bills at issue today, particularly in COPPA 2.0. We see a tiered knowledge standard that preserves actual knowledge for the vast majority of actors, and that's basically preserving or maintaining the status quo. The Senate version of COPPA 2.0 has an updated knowledge standard, which would apply to all actors. Honestly, this is a difficult balance to strike, but the hope is to land on a standard that does not permit a company to look the other way when they know there are kids on their service while avoiding incentivizing broad age assurance across the entire internet. While we think there need to be changes to House COPPA, we fully support enhancing protections for children's privacy and we hope that we can all work together to update COPPA 2.0 in a way that would do so.

Rep. Kathy Castor (D-FL):

Thank you for that. Do you want to say anything else about enforcement as well? You already talked a little bit about the FTC, but when we're talking about kids, isn't it appropriate to have a number of tools in the toolbox to make sure they're safe and tech is held accountable?

Kate Ruane:

Absolutely. It's not just appropriate. It is also how Congress has approached protecting children historically. Congress has generally not chosen to preempt states’ ability to act and has always ensured that there are more tools in the toolbox. It was good to hear and it is good to see that the FTC would have things like first fine authority and that state attorneys general would have the ability to act, but we also need to see things like private rights of action as a force multiplier for families and kids to be able to enforce their rights under any statute, any statute that comes to

Rep. Kathy Castor (D-FL):

Pass, and thank you for that. I also want to make sure that I get into the record a number of provisions. Mr. Chairman, at the end of the hearing, we will have a number of documents to submit for the record: a lot of letters from groups, the Federal Trade Commission's September 2024 report that I recommend to the committee, some of the whistleblower testimony from the United States Senate that is very illuminating, and some of the other reports from various medical societies. I think all of this would really inform our decision making as we move forward.

Rep. Gus Bilirakis (R-FL):

Thank you.

Rep. Kathy Castor (D-FL):

And I'll ask unanimous consent at the appropriate time.

Rep. Gus Bilirakis (R-FL):

Absolutely. Okay, thank you. Alright, now we'll recognize Dr. Dunn. He's the vice chair of the full committee. I'll recognize you for five minutes, sir.

Rep. Neal Dunn (R-FL):

Thank you very much, Mr. Chairman. I've been fortunate to wear a lot of hats in my life. I was a soldier. I served my community as a surgeon for 35 years, but the title that keeps me up at night, that drives me, is granddad. My sixth grandchild was born yesterday, as you know, Gus. Fortunately I made it look easy. But we're living through a crisis right now. We've handed our children devices that are more powerful than the most powerful computers that sent man to the moon, but we failed to install the digital equivalent of seat belts and smoke detectors on these things. As a doctor, when I see a patient bleeding, I apply a tourniquet. I don't call a committee meeting. Right now, our children are bleeding. Our children and our grandchildren are being targeted, groomed and exploited on social media platforms that are designed, intentionally designed, to hide the evidence of their exploitation.

That's why I introduced H.R. 6257, the Safe Messaging Act for Kids, or the SMK Act of 2025. This bill is a direct intervention to stop two specific mechanisms that predators use to hunt our children: ephemeral messaging and unsolicited contact. The problem is disappearing evidence. Let's talk about ephemeral messaging. This is a fancy term for a dangerous feature in which messages automatically delete themselves after they're viewed. Imagine if a drug dealer could sell fentanyl to a teenager in a school hallway, and then the moment the transaction is done, any security camera footage automatically erased itself. That's what's happening online. Predators love ephemeral messaging. It's their best friend. It destroys any evidence of grooming, cyberbullying, and illicit transactions before the parent ever sees it and before law enforcement can build a case. My bill would put an end to this. Under Section 3 of the SMK Act, social media platforms would be strictly prohibited from offering ephemeral messaging to any user they know or willfully disregard is a minor under 17.

If you're a tech company and you know your user is a 14-year-old child, you should not be handing them a tool to destroy evidence. It's that simple. The solution is, of course, parental authority. The second part of this bill is unsolicited contact. Right now in the digital world, strangers can walk up to our children and whisper in their ear. We would never allow that on a playground. Why do we allow it in direct messages? The SMK Act mandates parental direct messaging controls. We are putting parents back in the driver's seat. For children under 13, the bill requires that direct messaging features be disabled by default. A 10-year-old should not be fielding messages from strangers. If that feature is to be turned on, a parent must proactively give verifiable consent. For teenagers under 17, parents have to have the tools to see who's knocking on the door.

The bill requires platforms to notify parents of requests from unapproved contacts and gives the parents the power to approve or deny those requests before any messaging occurs. This isn't about hovering. This isn't helicopter parenting, it's just about parenting. It's about giving moms and dads the dashboard they need to keep kids safe. Mr. Chairman, in medicine, you know, we take an oath: do no harm. For too long, we have allowed social media platforms to violate that oath, and they've built features that harm our children and drive engagement by our children. The Safe Messaging Act for Kids is a common sense treatment plan for this. It preserves the evidence of crimes, it restores parental authority, and it protects our kids. And I urge my colleagues to support this legislation. I will submit questions for the panel. I'm sorry I've used up my time, but I wanted to make a case for this. Thank you so much, Mr. Chairman.

Rep. Russ Fulcher (R-ID):

Thank you. The gentleman from Florida, Mr. Soto, is recognized for five minutes.

Rep. Darren Soto (D-FL):

Thank you, Mr. Chairman. Today we're here to empower parents to protect kids online, and Congress needs to do its job and make rules of the road. It's been five years since the Children's Online Safety Act was filed, five years too long to address this critical issue. When we look at the internet and social media, we see education, entertainment, communication with friends, all great things for kids, but we also see a quagmire of issues that they could fall into, which is why we need guardrails to protect children's privacy and stop access to adult content, unbridled chatbots, even predators online. So today we have 19 bills, including our bipartisan Promoting a Safe Internet for Minors Act with Representative Laurel Lee, my fellow Floridian. H.R. 6289 directs the Federal Trade Commission to conduct nationwide education campaigns to promote safe internet use by minors, encouraging best practices for educators, parents, platforms and minors, sharing the latest trends that are negatively impacting minors online, and making online safety education publicly available.

The only issue is we have an FTC where President Trump fired both the Democrats, and now there are only three out of the full five-member strength that they need. And the courts have been taking their sweet time to address this issue. And so it's critical that we as a committee make sure we get the FTC up to strength and hold the president accountable for this unlawful action. We also need to limit kids' access to chatbots. We saw a tragic story from central Florida, Sewell Setzer III's story. He was a ninth grader from Orlando Christian Prep. He died by suicide last year at age 14 after being prompted by a chatbot that he was listening to. Sewell's mother, Megan Garcia, testified in the Senate Judiciary Committee in September of this year. In her testimony, she described how Sewell was manipulated by the chatbot and sent sexually explicit material.

She has filed a wrongful death lawsuit. This is why we see bills like the Safe Bots Act, which would develop AI policies to prevent harm, including AI disclosure and prohibiting chatbots from posing as licensed professionals. And then enforcement is key: the FTC, which we need to restore to its full strength, and state attorneys general. And then back in Florida we see a new law that restricts use under 14 years of age and requires parental consent at 14 and 15 years of age, and it was just recently upheld on appeal. And lastly, we're working with local sheriffs like the Osceola County Sheriff's Office to get them millions in funding to protect kids online with the Internet Crimes Against Children Task Force, absolutely critical for our local kids. Ms. Ruane, we saw President Trump illegally fire two of the Democratic members of the FTC. Even with all these bills that we have filed, even if they pass, how does the decimation of the FTC affect enforcement of laws meant to protect kids online?

Kate Ruane:

Thank you, Representative Soto. So the FTC, as an independent agency, has historically had a reputation of protecting consumers regardless of the party in office. The current administration is undermining that reputation and threatening the FTC's ability to enforce the law fairly. I say this in my testimony, but I'll say it here again. Laws are only as good as their enforcement mechanisms. They are only as good and fair as their enforcers. Laws without good enforcement are at best just words on the page. But at worst, they are weapons to be used by the powerful against the powerless. And the worry with the FTC becoming a politicized institution is that rather than enforcing the laws to protect everyone and uphold the rule of law, it will instead become a partisan tool that could be used and weaponized against those that the administration, whoever occupies the office, dislikes or, on the other hand, favors. If, for example, the president has a particular relationship with a particular company or a particular CEO, we are concerned that the politicization of an FTC could lead to favorable treatment and lack of enforcement of laws against those companies. And on the other side, targeting or retribution against those who would.

Rep. Darren Soto (D-FL):

Thank you, Ms. Ruane. Mr. Thayer, we talked a little bit about bots being out of control. What rules of the road do we need to help make sure we protect our kids?

Joel Thayer:

Well, thank you, Congressman. And look, we're going to need a lot, but I mean the rate at which this adoption is happening is unlike anything that we've ever seen, even with respect to social media and particularly when it comes to kids. Kids are now using chatbots for everything under the sun, whether it's

Rep. Russ Fulcher (R-ID):

So the time’s expired. If you could wrap really quick please.

Joel Thayer:

Sure. But in general, solutions that you guys have proposed today can go a long way. Also, what Senator Hawley has introduced is another interesting avenue, along with Senator Blumenthal. So I look forward to working with you and your office. Thank you for that.

Rep. Russ Fulcher (R-ID):

Time's expired. The chair recognizes the gentlelady from Florida, Ms. Cammack, please.

Rep. Kat Cammack (R-FL):

Well, thank you, Mr. Chairman. Thank you to our witnesses and to everyone in the audience here today. As a new mom, today's hearing certainly is hitting pretty close to home. Today we're reviewing 19 bills, but we are missing a critical one, the App Store Freedom Act. This bill would empower parents and consumers, foster innovation, and most importantly, protect kids online and their data. This bill has broad bipartisan support on this committee and is a critical step in keeping kids safe online. I want folks to imagine for a moment what it would look like if parents were allowed to build an online marketplace, an app marketplace where they could vet the apps and knew for sure that their kids were safe. Today that is not possible, and only our bill, the App Store Freedom Act, can do that. I would also like to point out for the record that last year alone, in 2024, the Apple App Store facilitated nearly $406 billion in sales.

So taking that into consideration, the 30% tax, no matter how you slice it, that Apple requires of these apps and their subscriptions is a multi-billion dollar industry. So it would stand to reason that Apple has a couple billion reasons why they don't want to protect kids online and they don't want parents creating their own marketplace. So I'm going to jump to our questions for the panel. We're going to go rapid fire to start. In 2024 in my home state of Florida, 13- and 14-year-old boys were using nudify apps to take pictures of their 12-year-old classmates and digitally unclothe them. The perpetrators, because that's what they are, then shared those images with their classmates and others. Another investigation into Apple showed that it allowed a nudify app in its app store and then rated it, Apple rated that app, as appropriate for four-year-olds. Four-year-olds. So for the panel, rapid fire, we'll start with you, Mr. Berkman. Do you think that app stores should be allowed to have children accessing nudify apps? Yes or no?

Marc Berkman:

No.

Rep. Kat Cammack (R-FL):

Mr. Lekas.

Paul Lekas:

No.

Rep. Kat Cammack (R-FL):

No.

Joel Thayer:

Hell no.

Rep. Kat Cammack (R-FL):

Excellent. I like this rapid fire. For the panel, yes or no. Today, Apple and Google profit by taking a 30% commission for sales of app subscriptions, apps that include the ability to nudify underage classmates. Is that acceptable? Yes or no?

Paul Lekas:

No. I have no awareness of those facts. I can't speak to the question.

Rep. Kat Cammack (R-FL):

Interesting. Yes or no?

Kate Ruane:

I'm not sure, but I think no, because...

Rep. Kat Cammack (R-FL):

God, I would hope no. Mr. Thayer?

Kate Ruane:

The question is whether they should profit off of nudify apps?

Rep. Kat Cammack (R-FL):

Should Apple and Google be profiting off of?

Kate Ruane:

No, no one should profit off of nudify apps.

Rep. Kat Cammack (R-FL):

I appreciate the clarity in your answer. Thank you. Mr. Thayer.

Joel Thayer:

Again. Hell no.

Rep. Kat Cammack (R-FL):

No, I appreciate that. Now, I think we're all aware that there have been investigations into nudify apps being used by minors against fellow minors and also adults using these apps to nudify minors. This has been covered extensively by the BBC, Fox News, CNN, CNBC, and others. So Mr. Thayer, Apple and Google have said that they're keeping kids safe online, but based on that track record that is well-documented, do you think that Apple and Google are doing everything that they should to keep kids safe in the App Store?

Joel Thayer:

Not even the bare minimum.

Rep. Kat Cammack (R-FL):

Perfect. Now, as mentioned before, we have a bipartisan bill with many co-sponsors on this committee that would stop Apple and Google from maintaining their app store monopoly, because that is in fact what they have. Often when we talk about monopolies, we focus on price gouging. But there are other good reasons why you would want to stop a monopoly from abusing its power, like speeding up innovation, improving quality, and protecting kids, for example. So Mr. Thayer, you recently signed a letter in support of our bill, the App Store Freedom Act. Thank you for that. It's not easy taking on the tech giants. Is it

Joel Thayer:

Someone who's taking them on in every state? No,

Rep. Kat Cammack (R-FL):