Transcript: House Hearing on AI: Advancing Innovation in the National Interest

Justin Hendrix / Jun 25, 2023

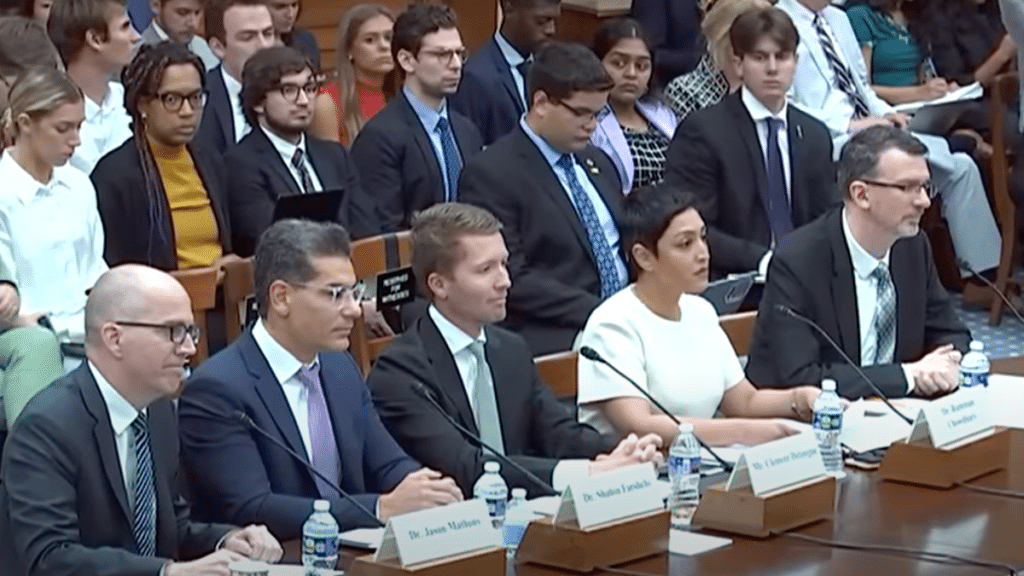

On Thursday, June 22, 2023, the US House of Representatives Committee on Science, Space and Technology hosted a hearing titled Artificial Intelligence: Advancing Innovation Towards the National Interest. Chaired by Rep. Frank Lucas (R-OK) with Ranking Member Zoe Lofgren (D-CA), the hearing featured testimony from:

- Dr. Jason Matheny, President & CEO, RAND Corporation (Testimony)

- Dr. Shahin Farshchi, General Partner, Lux Capital (Testimony)

- Mr. Clement Delangue, Co-founder & CEO, Hugging Face (Testimony)

- Dr. Rumman Chowdhury, Responsible AI Fellow, Harvard University (Testimony)

- Dr. Dewey Murdick, Executive Director, Center for Security and Emerging Technology (Testimony)

What follows is a flash transcript; compare to video for exact quotations.

Rep. Frank Lucas (R-OK):

The committee will come to order. Without objection, the chair is authorized to declare recess of the committee at any time. Welcome to today's hearing entitled Artificial Intelligence: Advancing Innovation in the National Interest. I recognize myself for five minutes for an opening statement. Good morning and welcome to what I anticipate will be one of the first of multiple hearings on artificial intelligence that the Science, Space and Technology Committee will hold this Congress. As we've all seen, AI applications like ChatGPT have taken the world by storm. The rapid pace of technological progress in this field, primarily driven by American researchers, technologists, and entrepreneurs, presents a generational opportunity for Congress. We must ensure the United States remains the leader in a technology that many experts believe is as transformative as the internet and electricity. The purpose of this hearing is to explore an important question, perhaps the most important question for Congress regarding AI.

How can we support innovation and development in AI so that it advances our national interest? For starters, most of us can agree that it is in our national interest to ensure cutting-edge AI research continues happening here in America and is based on our democratic values. Although the United States remains the country where the most sophisticated AI research is happening, this gap is narrowing. A recent study by Stanford University ranked universities by the number of AI papers they published. The study found that nine of the top 10 universities were based in China. Coming in at 10th was the only US institution, the Massachusetts Institute of Technology. Chinese-published papers received nearly the same percentage of citations as US researchers' papers, showing the gap in research quality is also diminishing. It is in our national interest to ensure the United States has a robust innovation pipeline that supports fundamental research all the way through to real-world applications.

The country that leads in commercial and military applications will have a decisive advantage in global economic and geopolitical competition. The front lines of the war in Ukraine are already demonstrating how AI is being applied to 21st century warfare. Autonomous drones, fake images and audio used for propaganda, and real-time satellite imagery analysis are all a small taste of how AI is shaping today's battlefields. However, while it's critical that the US support advances in AI, these advances do not have to come at the expense of safety, security, fairness, or transparency. In fact, embedding our values in AI technology development is central to our economic competitiveness and national security. As members of Congress, our job is never to lose sight of the fact that our national interest ultimately lies with what is best for the American people. The Science Committee has played and can continue to play a pivotal role in service of this mission.

For starters, we can continue supporting the application of AI to advance science and create new economic opportunities. AI is already being used to solve fundamental problems in biology, chemistry, and physics. These advances have helped us develop novel therapeutics, design advanced semiconductors, and forecast crop yields, saving countless amounts of time and money. The National Science Foundation's AI Research Institutes, the Department of Energy's world-class supercomputers, and the National Institute of Standards and Technology's Risk Management Framework and precision measurement expertise are all driving critical advances in this area. Pivotal to our national interest is ensuring these systems are safe and trustworthy. The committee understood that back in 2020 when it introduced the bipartisan National Artificial Intelligence Initiative Act of 2020. This legislation created a broad national strategy to accelerate investments in responsible AI research, development, and standards. It facilitated new public-private partnerships to ensure the US leads the world in the development and use of responsible AI systems.

Our committee will continue to build off of this work to establish and promote technical standards for trustworthy AI. We are also exploring ways to mitigate risks caused by AI systems through research and development of technical solutions, such as using automation to detect AI-generated media. As AI systems proliferate across the economy, we need to develop our workforce to meet changing skill requirements. Helping US workers augment their performance with AI will be a critical pillar in maintaining our economic competitiveness. And while the United States currently is the global leader in AI research, development, and technology, our adversaries are catching up. The Chinese Communist Party is implementing AI industrial policy at a national scale, investing billions through state-financed investment funds, designating national AI champions, and providing preferential tax treatment to grow AI startups. We cannot and should not try to copy China's playbook, but we can maintain our leadership role in AI and we can ensure its development reflects our values of trustworthiness, fairness, and transparency. To do so, Congress needs to make strategic investments, build our workforce, and establish proper safeguards without overregulation, but we cannot do it alone. We need the academic community, the private sector, and the open source community to help us figure out how to shape the future of this technology. I look forward to hearing the recommendations of our witnesses on how this committee can strengthen our nation's leadership in artificial intelligence and make it beneficial and safe for all American citizens. I now recognize the Ranking Member, the gentlewoman from California, for her statement.

Rep. Zoe Lofgren (D-CA):

Thank you. Thank you, Chairman Lucas, for holding today's hearing, and I'd also like to welcome a very distinguished panel of witnesses. Artificial intelligence opens the door to really untold benefits for society, and I'm truly excited about its potential. However, AI could create risks, including with respect to misinformation and discrimination. It will create risk to our nation's cybersecurity in the near term, and there may be medium- and long-term risks to economic and national security. Some have even posited existential risks to the very nature of our society. We're here today to learn more about the benefits and risks associated with artificial intelligence. This is a topic that has caught the attention of many lawmakers in both chambers across many committees. However, none of this is new to the Science Committee, as the Chairman has pointed out. In 2020, members of this committee developed and enacted the National AI Initiative Act to advance research, workforce development, and standards for trusted AI.

The federal science agencies have since taken significant steps to implement this law, including, notably, NIST's work on the AI Risk Management Framework. However, we're still in the early days of understanding how AI systems work and how to effectively govern them, even as the technology itself continues to rapidly advance in both capabilities and applications. I do believe regulation of AI may be necessary, but I'm also keenly aware that we must strike a balance that allows for innovation and ensures that the US maintains leadership. While the contours of a regulatory framework are still being debated, it's clear we will need a suite of tools. Some risks can be addressed by the laws and standards already on the books. It's possible others may need new rules and norms. Even as this debate continues, Congress can act now to improve trust in AI systems and assure America's continued leadership in AI.

At a minimum, we need to be investing in the research and workforce to help us develop the tools we need going forward. Let me just wrap up with a couple of concrete challenges I'd like to address in this hearing. One is the intersection of AI and intellectual property. Whether addressing AI-based inputs or outputs, it's my sincere hope that the content creation community and AI platforms can advance their dialogue and arrive at a mutually agreeable solution. If not, I think we need to have a discussion on how the Congress should address this. Finally, research infrastructure and workforce challenges are also top of mind. One of the major barriers to developing an AI-capable workforce and ensuring long-term US leadership is a lack of access to computing and training data for all but large companies and the most well-resourced institutions. There are good ideas already underway at our agencies to address this challenge, and I'd like to hear the panel's input on what's needed, in your view. It's my hope that Congress can quickly move beyond the fact-finding stage to focus on what this institution can realistically do to address the development and deployment of trustworthy AI. At this hearing, I hope we can discuss what the Science Committee should focus on. I look forward to today's very important discussion with stakeholders from industry, academia, and venture capital. And as a representative from Silicon Valley, I know how important private capital is today to the US R&D ecosystem. Thank you all for being with us, and I yield back.

Rep. Frank Lucas (R-OK):

The Ranking Member yields back. Let me introduce our witnesses for today's hearing. Our first witness today is Dr. Jason Matheny, president and CEO of the RAND Corporation. Prior to becoming CEO of RAND, Dr. Matheny led White House policy on technology and national security at the National Security Council and the Office of Science and Technology Policy. He also served as director of the Intelligence Advanced Research Projects Activity, and I'd also like to congratulate him for being selected to serve on the selection committee for the Board of Trustees for the National Semiconductor Technology Center. Our next witness is Dr. Shahin Farshchi, a general partner at Lux Capital, one of Silicon Valley's leading frontier science and technology investors. He invests at the intersection of artificial intelligence and science and has co-founded and invested in many companies that have gone on to raise billions of dollars.

Our third witness of the day is Dr. Clement Delangue, co-founder and CEO of Hugging Face, the leading platform for the open source AI community. It has raised over a hundred million dollars, with Lux Capital leading its last financing round, and counts over 10,000 companies and a hundred thousand developers as users. Next we turn to Dr. Rumman Chowdhury, Responsible AI Fellow at Harvard University. She is a pioneer in the field of applied algorithmic ethics, which investigates creating technical solutions for trustworthy AI. Previously, she served as director of machine learning accountability at Twitter and founder of Parity, an enterprise algorithmic auditing company.

And our final witness is Dr. Dewey Murdick, the executive director of Georgetown's Center for Security and Emerging Technology. He previously served as the chief analytics officer and deputy chief scientist within the Department of Homeland Security and also co-founded an office in predictive intelligence at IARPA. Thank you to all our witnesses for being here today. I recognize Dr. Matheny for the first five minutes to present your testimony, and please overlook my phonetic weaknesses.

Dr. Jason Matheny:

<laugh> No problem at all. Thanks so much, Chairman Lucas, Ranking Member Lofgren, and members of the committee. Good morning and thank you for the opportunity to testify. As mentioned, I'm the president and CEO of RAND, a nonprofit and nonpartisan research organization, and one of our priorities is to provide detailed policy analysis relevant to AI in the years ahead. We have many studies underway relevant to AI. I'll focus my comments today on how the federal government can advance AI in a beneficial and trustworthy manner for all Americans. Among a broad set of technologies, AI stands out both for its rate of progress and for its scope of applications. AI holds the potential to broadly transform entire industries, including ones that are critical to our future prosperity. As noted, the United States is currently the global leader in AI.

However, AI systems have security and safety vulnerabilities, and a major AI-related accident or misuse in the United States could dissolve our lead, much like nuclear accidents set back the acceptance of nuclear power in the United States. The United States can make safety a differentiator for our AI industry just as it was a differentiator for our early aviation and pharmaceutical industries. Government involvement in safety standards and testing led to safer products, which in turn led to consumer trust and market leadership. Today, government involvement can build consumer trust in AI that strengthens the US position as a market leader. And this is one reason why many AI firms are calling for government oversight to ensure that AI systems are safe and secure: it's good for their business. I'll highlight five actions that the federal government could take to advance trustworthy AI within the jurisdiction of this committee.

First is to invest in potential research moonshots for trustworthy AI, including, first, generalizable approaches to evaluate the safety and security of AI systems before they're deployed; second, fundamentals of designing agents that will persistently follow a set of values in all situations; and third, microelectronic controls embedded in AI chips to prevent the development of large models that lack safety and security safeguards. A second recommendation is to accelerate AI safety and security research and development through rapid, high-return-on-investment techniques such as prize challenges. Prizes pay only for results and remove the costly barrier to entry that writing applications poses for researchers, making them a cost-effective way to pursue ambitious research goals while opening the field to non-traditional performers such as small businesses. A third policy option is to ensure that US AI efforts conduct risk assessments prior to the training of very large models, as well as safety evaluations and red-team tests prior to the deployment of large models.

A fourth option is to ensure that the National Institute of Standards and Technology has the resources needed to continue applications of the NIST Risk Management Framework and fully participate in key international standards relevant to AI, such as ISO SC 42. A fifth option is to prevent intentional or accidental misuse of advanced AI systems by, first, requiring that companies report the development or distribution of very large AI clusters, training runs, and trained models, such as those involving over 10^26 operations; second, including in federal contracts with cloud computing providers requirements that they employ know-your-customer screening for all customers before training large AI models; and third, including in federal contracts with AI developers know-your-customer screening as well as security requirements to prevent the theft of large AI models. Thank you for the opportunity to testify, and I look forward to your questions later.

Rep. Frank Lucas (R-OK):

Thank you. And I recognize Dr. Farshchi for five minutes to present his testimony.

Dr. Shahin Farshchi:

Thank you, Mr. Chairman. Chairman Lucas, Ranking Member Lofgren, and members of the committee, my name is Dr. Shahin Farshchi and I'm a general partner at Lux Capital, a venture capital firm with $5 billion of assets under management. Lux specializes in building and funding tomorrow's generational companies that are leveraging breakthroughs in science and engineering. I have helped create and fund companies pushing the state of the art in semiconductors, rockets, satellites, driverless cars, robotics, and AI. From that perspective, there are two important considerations for the committee today: one, preserving competition in AI to ensure our country's position as a global leader in the field; and two, driving federal resources to our most promising AI investigators. Before addressing directly how America can reinforce its dominant position in AI, it is important to appreciate how some of Lux's portfolio companies are pushing the state of the art in the field. Hugging Face's founder, Clem Delangue, is a witness on this panel. MosaicML, a member of the Hugging Face community, is helping individuals and enterprises train, tune, and run the most advanced AI models. Mosaic's language models have exceeded the performance of OpenAI's GPT-3. Unlike OpenAI's, Mosaic's models are made available to their customers entirely, as opposed to through an application programming interface, thereby allowing customers to keep all of their data private. Mosaic built the most downloaded LLM in history, MPT-7B, which is a testament to the innovations coming into open source from startups and researchers. Runway ML is bringing the power of generative AI to consumers to generate videos from simple text and images. Runway is inventing the future of creative tools with AI, thereby re-imagining how we create so individuals can achieve the same expressive power as the most powerful Hollywood studios.

These are just a few examples of Lux companies advancing the state of the art in AI, in large part because of the vital work of this committee to ensure America is developing its diverse talent, providing the private sector with helpful guidance to manage risk, and democratizing access to the computing resources that fuel AI research and the next generation of tools to advance the US national interest.

To continue America's leadership, we need competitive markets to give entrepreneurs the opportunity to challenge even the largest dominant players. Unfortunately, there are steep barriers to entry for AI researchers and founders. The most advanced generative AI models cost more than $100 million to train. If we do not provide open, fair, and diverse access to computing resources, we could see a concentration of resources in a time of rapid change reminiscent of Standard Oil during the Industrial Revolution. I encourage this committee to continue its leadership in democratizing access to AI R&D by authorizing and funding the National AI Research Resource. This effort will help overcome the access divide and ensure that our country is benefiting from diverse perspectives that will build the future of AI technology and help guide its role in our society. I am particularly concerned that Google, Amazon, and Microsoft, which are already using vast amounts of personal data to train their models, have also attracted a vast majority of investments in AI startups because of the need to access their vast computing resources to train AI models, further entrenching their preexisting dominance in the market.

In fact, Google and Microsoft are investing heavily in AI startups under the condition that their invested dollars are spent solely on their own compute resources. One example is OpenAI's partnership with Microsoft. Through efforts such as the NAIRR, we hope that competitors to Google, Microsoft, and Amazon will be empowered to offer compute resources to fledgling AI startups, perhaps even endowing compute resources directly to startups and researchers as well. This will facilitate a more competitive environment that will be more conducive to our national dominance on the global stage. Furthermore, Congress must rebalance efforts toward providing resources through deep investment in top AI investigators. For example, the DOD has taken a unique approach by allocating funding to top investigators, as opposed to the National Science Foundation, which tends to spread funding across a larger number of investigators. When balanced appropriately, both approaches have value to the broader innovation ecosystem.

However, deep investment has driven discoveries at the frontier, leading to the creation of great companies like OpenAI, whose founders were initially funded by relatively large DOD grants. Building on these successes is key to America's continued success in AI innovation. Thank you for the opportunity to share how Lux Capital is working with founders to advance AI in our national interest, bolster our national defense and security, strengthen our economic competitiveness, and foster innovation right here in America. Lux is honored to play a role in this exciting technology at this pivotal moment. I look forward to your questions. Thank you.

Rep. Frank Lucas (R-OK):

And I recognize Mr. Delangue for five minutes for his testimony.

Clement Delangue:

Chairman Lucas, Ranking Member Lofgren, and members of the committee, thank you for the opportunity to discuss AI innovation with you. I deeply appreciate the work you are doing to advance and guide it in the US. My name is Clement Delangue and I'm the co-founder and CEO of Hugging Face. I'm French, as you can hear from my accent, and moved to the US 10 years ago, barely speaking English, with my co-founders, Julien and Thomas. We started this company from scratch here in the US as a US startup, and we are proud to employ team members in 10 different US states today. I believe we could not have created this company anywhere else. I am living proof that the openness and culture of innovation in the US allows for such a story to happen. The reason I'm testifying today is not so much the size of our organization or the cuteness of our emoji name, Hugging Face, and contrary to what you said, Chairman Lucas, I don't hold a PhD like all the other witnesses. The reason I'm here today is because we enable 15,000 small companies, startups, nonprofits, public organizations, and companies to build AI features and workflows collectively on our platform.

They have shared over 200,000 open models (5,000 new ones just last week), 50,000 open datasets, and a hundred thousand applications, ranging from data anonymization for self-driving cars, speech recognition from visual lip movement for people with hearing disabilities, and applications to detect gender and racial biases, to translation tools in low-resource languages to share information globally. This is not only with large language models and generative AI, but also with all sorts of machine learning algorithms, usually with smaller, customized, specialized models in domains as diverse as social productivity platforms, finance, biology, chemistry, and more. We are seeing firsthand that AI provides a unique opportunity for value creation, productivity boosting, and improving people's lives, potentially at a larger scale and higher velocity than the internet or software before. However, for this to happen across all companies and at a sufficient scale for the US to keep leading compared to other countries, I believe open science and open source are critical to incentivize, and they are extremely aligned with American values and interests.

First, it's good to remember that most of today's progress has been powered by open science and open source, like the "Attention Is All You Need" paper, the BERT paper, the latent diffusion paper, and so many others. In the same way, without open source PyTorch, Transformers, and Diffusers, all invented here in the US, the US might not be the leading country for AI. Now, when we look towards the future, open science and open source distribute economic gains by enabling hundreds of thousands of small companies and startups to build with AI. They foster innovation and fair competition between all thanks to ethical openness. They create a safer path for development of the technology by giving civil society, nonprofits, academia, and policymakers the capabilities they need to counterbalance the power of big private companies. Open science and open source prevent black-box systems, make companies more accountable, and help solve today's challenges like mitigating biases, reducing misinformation, promoting copyrights, and rewarding all stakeholders, including artists and content creators, in the value creation process.

Our approach to ethical openness combines institutional policies, such as documentation with model cards pioneered by our own Dr. Margaret Mitchell; technical safeguards, such as staged releases; and community incentives, like moderation and opt-in/opt-out datasets. There are many examples of safe AI thanks to openness, like BLOOM, an open model that has been assessed by Stanford as the most compliant model with the EU AI Act, or the research advancements in watermarking for AI content. Some of that you can only do with open models and open datasets. In conclusion, by embracing ethical AI development with a focus on open science and open source, I believe the US can start a new era of progress for all, amplify its worldwide leadership, and give more opportunities to all, like it gave to me. Thank you very much.

Rep. Frank Lucas (R-OK):

Absolutely, and thank you, Mr. Delangue. I would note that there are probably some of my colleagues who would say that your version of English might be more understandable than my Okie dialect, but setting that issue aside, I now recognize Dr. Chowdhury for five minutes for her testimony.

Dr. Rumman Chowdhury:

Thank you, Chairman Lucas, Ranking Member Lofgren, and esteemed members of the committee. My name is Dr. Rumman Chowdhury and I'm an AI developer, data scientist, and social scientist. For the past seven years, I've helped address some of the biggest problems in AI ethics, including holding leadership roles in responsible AI at Accenture, the largest tech consulting firm in the world, and at Twitter. Today, I'm a Responsible AI Fellow at Harvard University. I'm honored to provide testimony on trustworthy AI and innovation. Artificial intelligence is not inherently neutral, trustworthy, or beneficial; concerted and directed effort is needed to ensure this technology is used appropriately. My career in responsible AI can be described by my commitment to one word: governance. People often forget that governance is more than the law. Governance is a spectrum ranging from codes of conduct to standards, open research, and more. In order to remain competitive and innovative, the United States would benefit from a significant investment in all aspects of AI governance.

I would like to start by dispelling the myth that governance stifles innovation. Much to the contrary, I use the phrase "brakes help you drive faster" to explain this phenomenon. The ability to stop your car in dangerous situations is what enables us to feel comfortable driving at fast speeds. Governance is innovation. This holds true for the current wave of artificial intelligence. Recently, a leaked Google memo declared there is no moat. In other words, AI will be unstoppable as open source capabilities meet and surpass closed models. There is also a concern that the US will not remain globally competitive if we aren't investing in AI development at all costs. This is simply untrue. Building the most robust AI industry isn't just about processors and microchips. The real competitive advantage is trustworthiness. If there is one thing to take away from my testimony, it's that the US government should invest in public accountability and transparency of AI systems.

In this testimony, I make the following four recommendations to ensure the US advances innovation in the national interest. First, support for AI model access to enable independent research and audit. Second, investment in and legal protections for red teaming and third-party ethical hacking. Third, the development of a non-regulatory technology body to supplement existing US government oversight efforts. Fourth, participation in global AI oversight. CEOs of the most powerful AI companies will tell you that they spend significant resources to build trustworthy AI. This is true. I was one of those people. My team and I held ourselves to the highest ethical standards, and my colleagues who remain in these roles still do so today. However, a well-developed ecosystem of governance also empowers individuals whose organizational missions are to inform and protect society. Article 40 of the EU's Digital Services Act (DSA) creates this sort of access for Europeans.

Similarly, the UK government has announced that Google DeepMind and OpenAI will allow model access. My first recommendation is that the United States should match these efforts. Next, new laws are mandating third-party algorithmic auditing. However, there is currently a workforce challenge in identifying sufficiently trained third-party algorithmic investigators. Two things can fix this: first, funding for independent groups to conduct red teaming and adversarial auditing; and second, legal protection, so that these individuals operating in the public good are not silenced with litigation. With the support of the White House, I am part of a group designing the largest ever AI red teaming exercise. In collaboration with the largest open and closed source AI companies, we'll provide access to thousands of individuals who will compete to identify how these models may produce harmful content. Red teaming is a process by which invited third-party experts are given special permission and access by AI companies to find flaws in their models.

Traditionally, these practices happen behind closed doors, and public information sharing is at the company's discretion. We want to open those closed doors. Our goals are to educate, address vulnerabilities, and, importantly, grow a new profession. Finally, I recommend investment in domestic and global government institutions in alignment with this robust third-party ecosystem. A centralized body focused on responsible innovation could assist existing oversight by promoting interoperable licensing, conducting research to inform AI policy, and sharing best practices and resources. Parallels in other governments include the UK Centre for Data Ethics and Innovation, of which I'm a board member, and the EU's Centre for Algorithmic Transparency. There's also a sustained and increasing call for global governance of AI systems from experts like myself, OpenAI CEO Sam Altman, and former New Zealand Prime Minister Jacinda Ardern. A global governance effort should develop empirically driven, enforceable solutions for algorithmic accountability and promote the global benefits of AI systems. In sum, innovation in the national interest starts with good governance. By investing in and protecting this ecosystem, we will ensure AI technologies are beneficial to all. Thank you for your time.

Rep. Frank Lucas (R-OK):

Thank you, doctor. And I now recognize Dr. Murdick for five minutes to present his testimony.

Dr. Dewey Murdick:

Thank you, Chairman Lucas, Ranking Member Lofgren, and everyone on the committee, for this opportunity to talk about how we can make AI better for our country. There are many actions Congress can take to support AI innovation, protect key technology from misuse, and ensure consumers are safe. I'd like to highlight three today. First, we need to get used to working with AI. As a society and individually, we need to learn when we can trust our AI teammates and when to question or ignore them. I think this takes a lot of training and time. Two, we need skilled people to build future AI systems and to increase AI literacy. Three, we need to keep a close eye on the policies we do enact, make sure that every policy is being monitored and is actually doing what we think it's doing, and update it as needed.

This is especially true when we're facing peer innovators, especially in a rapidly changing area like artificial intelligence. China is such a peer innovator, but we need to remember they're not 10 feet tall and they have different priorities for their AI than we do. China's AI leadership is evident through aggressive use of state power and substantial research investments, making it a peer innovator for us and our allies: never far ahead, and never far behind either. China focuses on how AI can assist military decision making and mass surveillance to help maintain societal control. This is very unlike the United States. Thankfully, maintaining that control means they're not letting AI run around all willy-nilly. In fact, the deployment of large language models does not appear to be a priority for China's leadership. Precisely for that reason, we should not let the fear of China surpassing the US deter oversight of the AI industry and AI technology.

Instead, the focus should be on developing methods that allow enforcement of AI risk and harm management, and on guiding the advancement of AI technology. I'd like to return to my three points and expand on them a little bit. The first one was that we must get used to working with AI via effective human-machine teaming, which in my opinion is central to AI's evolution in the next decade. Understanding what an AI system can and cannot do, and should and shouldn't do, and when to rely on it and when to avoid using it, should guide our future innovation and also our training standards. One thing that keeps me up at night is when human partners trust machines when they shouldn't, and there are interesting examples of that; when they fail to trust AI when they should; or when they are manipulated by a system, and there are some instances of that.

Also, we've witnessed rapid AI advancements, and the convergence between AI and other sectors promises widespread innovation in areas from medical imaging to manufacturing. Therefore, fostering AI literacy across the population is critical for economic competitiveness, but also, and I think even more importantly, it is essential for democratic governance. We cannot engage in a meaningful societal debate about AI if we don't understand enough about it. This means an increasingly large fraction of US citizens will encounter AI daily. So that's the second point: we need skilled people working at all levels. We need innovators from technical and non-technical backgrounds. We need to attract and retain diverse talent from across our nation and internationally. And separately from those who are building current and future AI systems, we need comprehensive AI training for the general population: K through 12 curricula, certifications. There are a lot of good ideas there.

AI literacy is the central key, though. So what else can we do? I think we can promote better decision making by gathering the information now that we need to make decisions. For example, tracking AI harms via incident reporting is a good way to learn where things are breaking. Another is learning how to request key model and training data for oversight, to make sure models are being used correctly in important applications. We don't know how to do that. Encouraging and developing the third-party auditing ecosystem and the red-teaming ecosystem would be excellent. If we are going to license AI software, which is a common proposal we hear, we're probably going to need to update existing authorities for existing agencies, and we may need to create a new agency or organization. This new organization could check how AI is being used and overseen by existing agencies.

It could be the first to deal with problems, directing those in need to the right solutions, either in the government or the private sector, and fill gaps in sector-specific agencies. My last point, and I see I'm going too long: we need to make sure our policies are monitored and effectively implemented. There are really great ideas in the House and Senate on how to increase the analytic capacity to do that. I look forward to this discussion, because I think this is a persistent issue that's just not going to go away, and CSET and I have dedicated our professional lives to this. So thank you so much.

Rep. Frank Lucas (R-OK):

Thank you, doctor, and thank you to the entire panel for some very insightful opening comments. Continuing with you, Dr. Murdick: making AI systems safer is not only a matter of regulations, but also requires technical advances in making the systems more reliable, transparent, and trustworthy. It seems to me that the United States would be more likely than China to invest in these research areas, given our democratic values. So, Doctor, expanding on your comments earlier, can you compare how the Chinese Communist Party's and the United States' political values influence their research and development priorities for AI systems?

Dr. Dewey Murdick:

Sure. I think that large language models, which are the obsession right now of a lot of the AI field, provide a very interesting example of this. China's very concerned about how they could destabilize its societal structure by having a system that it can't control, which might say things that would be offensive, might bring up Tiananmen Square, or might associate the president with Winnie the Pooh or something, and that could be very destructive to their societal control. Because of this, they're really limiting control. They're passing regulations and laws that are very constraining of how that's done. So that is a difference between our societies and what we view as acceptable. I also think the military command-and-control emphasis, as well as the desire to maintain control through mass surveillance, is a difference: if you look at their research portfolio, most of where they're leading could be very well associated with those types of areas. So I think those are differences that are pretty significant. We can go on, but I'm gonna just pause there to make sure other people have opportunities.

Rep. Frank Lucas (R-OK):

Absolutely. Dr. Matheny, some have advocated for a broad approach to AI regulation, such as restricting entire categories of AI systems in the United States. Many agencies already have the existing authorities to regulate the use cases of AI in their jurisdiction. For example, the Department of Transportation can set performance benchmarks that autonomous vehicles must meet to drive on US roads. What are your opinions regarding the outcomes of a use-case-driven approach to AI regulation versus an approach that places broad restrictions on AI development or deployment?

Dr. Jason Matheny:

Thank you, Mr. Chairman. I think that in many of the cases that we're most concerned about related to risks of AI systems, especially these large foundation models, we may not know enough in advance to specify the use cases. And those are the ones where the kind of testing and red teaming that's been described here is really essential. So having terms and conditions in federal contracts with compute providers might actually be one of the highest-leverage points of governance: we could require that models trained on infrastructure that is currently federally contracted involve red teaming and other evaluation before models are trained or deployed.

Rep. Frank Lucas (R-OK):

Mr. Delangue, in my opening statement, I highlighted the importance of ensuring that we continue to lead in AI research and development, because American-built systems are more likely to reflect democratic values. Given how critical it is to ensure we maintain our leadership in AI, how do you recommend Congress ensure that we do not pass regulations that stifle innovation?

Clement Delangue:

I think a good point that you made earlier is that AI is so broad, and the impact of AI could be so widespread across use cases, domains, and sectors. That leads to the point that for regulation to be effective and not stifle innovation at scale, it needs to be, you know, customized and focused on the specific domains, use cases, and sectors where there are more risks, and then empower the whole ecosystem to keep growing and keep innovating. The parallel that I like to draw is with software, right? It's such a broadly applicable technology that the important thing is to regulate the final use cases and the specific domains of application of software, rather than software in general.

Rep. Frank Lucas (R-OK):

In my remaining moments, Dr. Farshchi: civilian R&D often helps progress defense technologies and advance national security. How have civilian R&D efforts in AI translated into advances in defense applications, and how do you anticipate that relationship evolving over the next few years?

Dr. Shahin Farshchi:

I expect that relationship to evolve in a positive way. I expect there to be a further strengthening of the relationship between the private sector and dual-use and government-targeted products. Thanks to open source, many technologies that otherwise would not have been available, or would have had to be reinvented, are now available to build on top of. The venture capital community is very excited about funding companies that have dual-use products and companies that sell to the US government. Palantir was an example where investors profited greatly from investing in a company that was targeting the US government as a customer. Same with SpaceX. And so it's my expectation that this trend will continue: that more private dollars will go into funding companies that are selling into the US government and leveraging technologies that come out of open source to build on top of, to continue innovating in this sector.

Rep. Frank Lucas (R-OK):

Thank you. My time's expired, and I recognize the Ranking Member, Ms. Lofgren.

Rep. Zoe Lofgren (D-CA):

Thank you, Mr. Chairman, and thanks to our panelists. This is wonderful testimony, and I'm glad this is the first of several hearings, because there's a lot to wrap our heads around. Dr. Chowdhury, as you know, large language models have basically vacuumed up all the information from the internet, and there's a dispute between copyright holders, who feel they ought to be compensated, and others, who feel it's a fair use of the information. You know, it's in court, and they'll probably decide it before Congress will. But here's a question I've had: what techniques are possible to identify copyrighted material in the training corpus of the large language models? Is it even possible to go back and identify protected material?

Dr. Rumman Chowdhury:

Thank you for the excellent question. It's not easy. It is quite difficult. What we really need is protections for the individuals generating this artwork, because they're at risk of having not only their work stolen, but their entire livelihood taken from them.

Rep. Zoe Lofgren (D-CA):

I understand, but the question is about doing it retroactively, right? Is it possible to identify it?

Dr. Rumman Chowdhury:

It's difficult, but yes, it can be done. One can use digital image matching, but it's also important to think through, you know, what we are doing with the data and what it is being used for.

Rep. Zoe Lofgren (D-CA):

No, I understand that as well. Absolutely. Thank you. One of the things I'm interested in: you mentioned the CFAA, and that it's a barrier to people trying to do third-party analysis. After Aaron Swartz's untimely and sad demise, I actually had a bill named after him to allow those who are making non-commercial use to do what Aaron was doing. Jim Sensenbrenner, since retired, was my co-sponsor, and we couldn't get anywhere. There were large vested interests who opposed that. If we approached it as non-commercial entities doing red teaming registering with the government, rather than being licensed by it, do you think that would be a viable model for what you're suggesting?

Dr. Rumman Chowdhury:

I think so. I think that would be a great start. I do think there would need to be follow up to ensure that people are indeed using it for non-commercial purposes.

Rep. Zoe Lofgren (D-CA):

Correct. I'm interested in the whole issue of licensing versus registration. I'm mindful that Congress really doesn't know enough in many cases to create a licensing regime, and the technology is moving so fast that I fear we might make some mistakes, or federal agencies might make some mistakes. But licensing, which is giving permission, would be different from registration, which would allow for the capacity to oversee and prevent harm. What's your thought on the two alternatives? Anyone who wants to speak?

Dr. Rumman Chowdhury:

I can speak to that. I think overly onerous licensing would actually prevent people from doing one-off experimental or fun exercises. What we have to understand is that there is a particular scale of impact, you know, a number of people using a product, that maybe would trigger licensing, rather than saying everybody needs to license. There are college students and high school kids who want to use these models and just do fun things, and we should allow them to do it.

Rep. Zoe Lofgren (D-CA):

I guess I have some qualms, and other members may too. We've got large-model AIs, and to some extent they are a black box; even the creators don't fully understand what they're doing. And the idea that we would have a licensing regime I think is very daunting, as opposed to a registration regime where we might have the capacity for third parties to do auditing and the like. I'll just lay that out there. I wanna ask anybody who can answer this question. When it comes to large language models and generative AI, the computing power necessary is so immense that we have ended up with basically three very large private sector entities who have the computing capacity to actually do that. Mr. Altman was here, I think last month, and opined, when he met with us at the Aspen Institute breakfast, that it might not even be possible to catch up in terms of pure computing power. We've had discussions here on whether the government should create the computing power to allow not only the private sector but also academics to be competitive. Is that even viable at this point? Whoever could answer that.

Dr. Shahin Farshchi:

I can take that real quick. I believe so; I think it is possible. Bear in mind that the compute resources that were created by these three entities were initially meant for internal consumption, correct? And they have now been repurposed for training AI, and semiconductor development is the core of this technology. There is ultimately a trade-off between breadth of functionality and performance at a single use case. And so if there were a decision made to build resources that were targeted at training large language models, for example, I think it would be possible to quickly catch up and build that resource, the same way we built specific resources during World War II for a certain type of warfare, and then again during the Persian Gulf War. So I think we as a nation are capable of doing that.

Rep. Zoe Lofgren (D-CA):

I see my time has expired. Thank you so much. We'll send you my additional questions after the hearing, and I yield back.

Rep. Jay Obernolte (R-CA):

The gentlewoman yields back. I will recognize myself for five minutes for my questions, and I wanna thank you for the really fascinating testimony. Dr. Matheny, I'd like to start with you. You brought up the concept of trustworthy AI, and I think that's an extremely important topic. I actually dislike the phrase trustworthy AI, because it imparts human qualities to something that doesn't have them. It's just a piece of software. I was interested, Dr. Murdick, when you said sometimes human partners trust AI when they shouldn't and fail to trust it when they should. And I think that is a better way of expressing what we mean when we talk about AI. But this is an important conversation to have, because as we in Congress contemplate establishing a regulatory framework for AI, we need to be explicit about what we mean when we say we want it to be trustworthy.

You know, we can talk about efficacy or robustness or repeatability, but we need to be very specific when we talk about what we mean by trustworthy. It's not helpful to use evocative terms like, well, AI has to share its values, which is something that's in the framework of other countries' approaches to AI. Well, that's great. You know, values, that's a human term. What does it mean for AI to have values? So the question for you, doctor, is: what do we mean when we say trustworthy AI? And in what context should we as lawmakers think about AI as trustworthy?

Dr. Jason Matheny:

Thank you. When we talk about trustworthiness of engineered systems, we usually mean: do they behave as predicted? Do they behave in a way that is safe, reliable, and robust given a variety of environments? So, for example, do we have trust in our seatbelt? Do we have trust in the anti-lock braking system of a car? Do we have trust in the accident avoidance system on an airplane? So those are the kinds of properties that we want in our engineered systems: are they safe, are they reliable, are they robust?

Rep. Jay Obernolte (R-CA):

I would agree. And I think that we can put metrics on those things. I just don't think that calling AI trustworthy is helpful, because we're already having this perceptual problem where people are thinking of it in an anthropomorphic way, and it isn't. It is just software. And, you know, we could talk about our intent as humans when we deploy it and when we create it, but to impart those qualities to the software, I think, is misleading to people. Dr. Chowdhury, I want to continue the Ranking Member's line of questioning, which I thought was excellent, on the intersection between copyright holders and content creators and the training of AI, because I think this is going to be a really critical issue for us to grapple with.

And here's the problem. If we say, as some content creators have suggested, that no copyrighted content can be used in the training of AI, which from their point of view is a completely reasonable thing to be saying, then we're gonna wind up with AI that is fundamentally useless in a lot of different domains. And let me give you a specific example, because I'd like your thoughts on it. Take one trademarked term, right? The NFL would not like someone using the words Super Bowl in a commercial sense. If you own a bar, you have to talk about, you know, the party to watch the big game, nudge, nudge, wink, wink, right? Which is, from their point of view, completely reasonable. But if you prohibited the use of the words Super Bowl in training AI, you'd come up with a large language model that, if you asked it what time the Super Bowl is, would have no idea what you were talking about. It would lack the context to be able to answer questions like that. So how do we navigate that space?

Dr. Rumman Chowdhury:

I think you're bringing up an excellent point. I think these technologies are gonna push the upper limits of many of the laws we have, including protections for copyright. I don't think there's a good answer. I think this is what we are negotiating today. The answer will lie somewhere on the spectrum. There will be certain terms. I think a similar conversation happened about the term Taco Tuesday and the ability to use it widely, and it was actually decided you could use it widely. I think some of these will be addressed on a case-by-case basis, but more broadly, I think the thing to keep an eye on is whether or not somebody's livelihood is being impacted. It's not really about a word or picture. It is actually about whether someone is taken out of a job because of a model that's being built.

Rep. Jay Obernolte (R-CA):

Hmm. I partially agree. You know, I think it gets into a very dicey area when we talk about whether someone's job is being impacted, because AI is gonna be extremely economically disruptive. And our job as lawmakers is to make sure that disruption is largely positive for the median person in our society. But, you know, jobs will be impacted. We hope that most impacts will be positive and not negative. I'm gonna run outta time here, but I actually think we already have a large body of legal knowledge on this topic, around the concept of fair use. And I think that really is the solution to this problem. There'll be fair use of intellectual property in AI, and there'll be things that clearly are not fair use, or are infringing, and I think that we can use that as a foundation. But I'd love to continue the discussion later.

Dr. Rumman Chowdhury:

Absolutely.

Rep. Jay Obernolte (R-CA):

Next we'll recognize the gentlewoman from Oregon. Ms. Bonamici, you are recognized for five minutes.

Rep. Suzanne Bonamici (D-OR):

Thank you, Mr. Chairman and Ranking Member, and thank you to the witnesses for your expertise. I acknowledge the tremendous potential of AI, but also the significant risks and concerns that I've heard about, including what we just talked about: potential job displacement, privacy concerns, ethical considerations, bias, which we've been talking about in this committee for years, market dominance by large firms, and, in the hands of scammers and fraudsters, a whole range of nefarious possibilities to mislead and deceive voters and consumers. Also, the data sets take an enormous amount of energy to run; we acknowledge that. So we need responsible development with ethical guidelines to maximize the benefits and minimize the risks, of course. So, Dr. Chowdhury, as AI systems move forward, I remain concerned about the lack of diversity in the workforce. Could you mention how increasing diversity will help address bias?

Dr. Rumman Chowdhury:

What an excellent point. Thank you so much, Congresswoman. So first of all, we need a wide range of perspectives in order to understand the impact of artificial intelligence systems. I'll give you a specific example. During my time at Twitter, we held the first algorithmic bias bounty. That meant we opened up a Twitter model for public scrutiny, and we learned things that my team of highly educated PhDs wouldn't think of. For example, did you know that if you put a single dot on a photo, you could change how the algorithm decided where to crop the photo? We didn't know that; somebody told us. Did you know that algorithmic cropping tended to crop out people in camouflage because they blended in with their backgrounds? It did what camouflage was supposed to do. We didn't know that. So we learn more when we bring more people in. So, you know, more open access, independent researcher funding, red teaming, et cetera: opening doors to people will be what makes our systems more robust.

Rep. Suzanne Bonamici (D-OR):

Absolutely. I appreciate that so much. And I wanna continue with you, Dr. Chowdhury; I wanna talk about ethics. I expect that those in this room will all agree that ethical AI is important to align systems with values, respect fundamental rights, and contribute positively to society while minimizing potential harms, and this gets to the trustworthiness issue that you mentioned and that we've been talking about. So who defines what ethical is? Is there a universal definition? Does Congress have a role? Is this being defined by industry? I know there's a bipartisan proposal for a blue ribbon commission to develop a strategy for regulating AI. Would this be something that they would handle, or would NIST be involved? And I'm gonna tell you the second part of this question and then let you respond. Your testimony talks about ethical hackers and explains the role that they play, but how can they help design and implement ethical systems? And how can policy differentiate between bad hackers and ethical hackers?

Dr. Rumman Chowdhury:

Both great questions. So first I wanna address the first part of what you brought up, which is who defines ethics. Fortunately, this is not a new problem with technology; we have grappled with this in the law for many, many years. I recognize that previously someone mentioned that we seem to think a lot of problems are new with technology. This is not a new problem. And usually what we do is get at this through a diversity of opinions and input, and also by ensuring that our AI is reflective of our values. And we've articulated our democratic values, right? For the US, we have the Blueprint for an AI Bill of Rights. We have the NIST AI Risk Management Framework. So we've actually, as a nation, sat down and done this. So, to your second question, on ethical hackers.

Ethical hackers are operating in the public good, and there is a very clear difference. What an ethical hacker will do is, for example, identify a vulnerability in some sort of a system. And often they actually go to the company first to say, hey, can you fix this? But often these individuals are silenced with threats of litigation. So what we need to do is actually increase protections for these individuals who are operating in the public good, have repositories where people can share this information with each other, and also allow companies to be part of this process. For example, what might responsible disclosure look like? How can we make this not an adversarial environment where it's the public versus companies, but one where the public is a resource for companies to improve what they're doing above and beyond what they're able to do themselves?

Rep. Suzanne Bonamici (D-OR):

I appreciate that. And I wanna follow up on the earlier point. Yes, I'm aware of the work that's been done so far on ethical standards. However, I'm just questioning whether this is something that needs to be put into law or regulation. Does everyone agree? And is there hope that there could be some sort of universal standard?

Dr. Rumman Chowdhury:

I do not think a universal ethical standard is possible. We live in a society that reflects a diversity of opinions and thought, and we need to respect that and encourage that. But how do we create the safeguards and identify what is harmful? In social media, we would think a lot about what is harmful content, toxic content, and all of this lives on a spectrum. And I think any governance that's created has to respect that. Society changes, people change, the words and terms we use to reflect things change, and our ethics will change as well. So creating a flexible framework by which we can create ethical guidelines to help people make smart decisions is a better way to go.

Rep. Suzanne Bonamici (D-OR):

And just to confirm, that would be voluntary, not mandatory? Oh, I see my time has expired. I must yield back. Could you answer that question, just yes or no?

Rep. Frank Lucas (R-OK):

Certainly.

Dr. Rumman Chowdhury:

Yes.

Rep. Suzanne Bonamici (D-OR):

Thank you. <laugh>

Rep. Jay Obernolte (R-CA):

The gentlewoman yields back. We'll go next to my colleague from California. Congressman Issa, you're recognized for five minutes.

Rep. Darrell Issa (R-CA):

Thank you. I'll continue right where she left off. Dr. Chowdhury, the last answer got me just perfect, because it's gonna be voluntary. So who decides who puts that dot in a place that affects the cropping?

Dr. Rumman Chowdhury:

I'm gonna continue my answer <laugh>. So it can be voluntary, but in certain cases, in high-risk and high-impact cases...

Rep. Darrell Issa (R-CA):

We don't do voluntary here at Congress. You know that <laugh>?

Dr. Rumman Chowdhury:

I do. But I also recognize that the way we have structured regulation in the United States is context-specific. You know, financial authorities regulate...

Rep. Darrell Issa (R-CA):

Well, let's get a context, because, and it's for a couple of you, if you've got a PhD, you're eligible to answer this question.

And if you've published a book, you're eligible to answer this question. Now, the most knowledgeable person on AI up here at the dais that I know of is sitting in the chair right now, and he happened to say "fair use." And of course, that gets my hackles up as the Chairman of the subcommittee that determines what fair use is. Now, how many of you went to college and studied from books that were published for the pure purpose of your reading them, usually by the professor who wrote them? Raise your hand. Okay. Nothing has changed in academia. So is it fair use to not pay for that book, absorb the entire content, and add it to your learning experience?

So then the question, and I'll start with you because of both your Twitter time and your academia time: today, everyone assumes that the learning process of AI is fair use. Is there any basis for it being fair use, rather than a standard essential copyright that must be licensed? If you're going to absorb every one of your published books, and every one of the published books that gave you the education you have, and call it fair use, are we in fact turning upside down the history of learning and fair use just because it's a computer?

Dr. Rumman Chowdhury:

I think the difference here is there's a difference between me borrowing a book from my friend, learning and my individual impact on society.

Rep. Darrell Issa (R-CA):

Oh, because it's cumulative. Because you've borrowed everyone's book and read everyone's book.

Dr. Rumman Chowdhury:

Well, and because it's at scale. I cannot impact hundreds of millions of people around the world the way these models can. The things I say will not change the shape of democracy.

Rep. Darrell Issa (R-CA):

So I'll accept that. Anyone want to have a slightly different opinion on stealing 100% of all the works of all time, both copyrighted and not copyrighted, calling it fair use, and saying that because you took so much, because you stole so big, you're in fact fine? Anyone wanna defend that?

Dr. Rumman Chowdhury:

I'm sorry. I don't think that at all. I think it's wrong to do that. I think it's wrong...

Rep. Darrell Issa (R-CA):

So is one of the essential items that we must determine the rights to the information that goes in, so that it can be properly compensated, assuming it's under copyright?

Dr. Rumman Chowdhury:

Yes.

Rep. Darrell Issa (R-CA):

You all agree with that? Okay. Put 'em in your computers and give us an idea of how we do it, because obviously this is something we've never grappled with. We've never grappled with universal copyright absorption. And hopefully, since all of you do publish and do think about it, and the RAND Corporation has the dollars to help us, please begin the process, because it's one of the areas that is going to emerge incredibly quickly. Now, obviously we could switch from that to job losses and so on. One of my questions, of course, for anyone who wants to address this: if we put all of the information in, turn on a computer, give it the funding of the Chinese Communist Party, and say patent everything, do we in fact eliminate the ability to independently patent something without being bracketed by a massive series of patents that are all in part produced by artificial intelligence? I'm not looking at 2001: A Space Odyssey or Terminator. I'm looking at the destruction of the building blocks of intellectual property that have allowed for innovation for hundreds of years.

Most courageous, please.

Dr. Dewey Murdick:

Yeah. I don't know why I'm turning the mic on at this moment, but I think there's a common core to both questions you asked. I think money actually matters. The reason we haven't fully litigated fair use is that there hasn't been much money made by these models that have been ingesting all the copyrighted material.

Rep. Darrell Issa (R-CA):

But trust me, Google was sued when they were losing money too. Yeah.

Dr. Dewey Murdick:

<laugh>. But I do think the perception that there's a market will change the dynamics, because people will start saying, wait, you're profiting off my content. So I do think money changes this a little bit. And it goes to the second question too: I think the pragmatics of money will change the dynamics. Anyway, it's a fairly simple observation on this point.

Rep. Darrell Issa (R-CA):

Thank you. And I apologize for dominating on this, but I'm going to Nashville this weekend to meet with a whole bunch of songwriters who are scared stiff. So, does anyone want to follow up? Yes, if the Chairman doesn't mind.

Clement Delangue:

Yes. An interesting example: some people have been describing Hugging Face as a kind of giant library. And in a sense, we accept that people can borrow books at the library because it contributes to public and global progress. I think we can see the same thing here: if we give access to this content for open source and open science, I think it should be accepted in our society because it contributes to the public good. But when it's for private commercial interest, it should be approached differently. And in that sense, open science and open source are a kind of solution to this problem, because they give transparency. There's no way to know what copyrighted content is used in black-box systems, like most of the systems we have today, in order to make a decision.

Rep. Jay Obernolte (R-CA):

That that, that knocking says the rest. For the record, he's been very kind. Thank you.

Clement Delangue:

Thank you.

Rep. Jay Obernolte (R-CA):

Gentleman yields back. We'll go next to the gentlewoman from Michigan. Ms. Stevens, you're recognized for five minutes.

Rep. Haley Stevens (D-MI):

And thank you, Mr. Chair. I'm hoping I can also get the extra minute, given that I am so excited about what we're talking about here today. I want to thank you all for your individual expertise and leadership on a topic that is transforming the very dialogue of society and the way we are going to function and do business, yet again, at the quarter mark of the 21st century, after we've already lived through many technological revolutions. And to my colleague who mentioned some very interesting points, I'll just say we're in a race right now. I'm also on the Select Committee on Competitiveness with the Chinese Communist Party. And I come from Michigan, if you can't tell from my accent, and artificial intelligence is proliferating with autonomous vehicle technology, and we're either gonna have it in this country or we're not.

That's the deal, right? We can ask ourselves what happened to battery manufacturing, and why we're overly reliant on the 85% of battery manufacturing that takes place in China, when we would like it to be Michigan manufacturers, when we'd like it to be United States manufacturers. And we're catching up. So invest in the technology. Certainly our witness here from the venture capital company with $5 billion is gonna be making those decisions. But our federal government has gotta serve as a good steward and partner of technology proliferation through the guardrails that Dr. Chowdhury is talking about, or our lunch will be eaten. This is a reality. We've got one AV manufacturer that is wholly owned in the United States, Cruise, a subsidiary of General Motors, and they've gotta succeed, and we've gotta pass the guardrails to enable them to make the cars, because right now they can only do 2,500. So, the question I wanted to ask: yesterday I sent a letter to Secretary of State Blinken asking about a point that has come up multiple times in the testimony, namely how we are going to engage in dialogue at the international level to put up the proper AI guardrails. Dr. Chowdhury, you've brought this up in your testimony. What do you think are the biggest cross-national problems in need of global oversight with regard to artificial intelligence right now?

Dr. Rumman Chowdhury:

Thank you. What a wonderful question, Congresswoman. I don't think every problem is a global problem. The important thing to ask ourselves is simply: what is the climate change of AI? What are the problems that are so big that a given country or a given company can't solve them on its own? These are things like information integrity, preserving democratic values and democratic institutions, CSAM (child sexual abuse material), and radicalization. And we do have extragovernmental organizations that have been set up to address these kinds of problems, but there's more to do. What we need is a rubric, a way of thinking through the biggest problems and how we're gonna work on them in a multi-stakeholder fashion.

Rep. Haley Stevens (D-MI):

Right. And Dr. Murdick, could you share which existing authorities you believe would be best suited to convene the global community to abide by responsible AI measures?

Dr. Dewey Murdick:

Yeah. Well, I'm not sure I can name treaties chapter and verse. So I'll make just one point about the core goal: the US plus its allies is stronger than China by a lot of different measures, if you look at everything from research production to technology to innovation to talent base, to size, to companies, to investment levels. I think it's important that we're stronger together. If we're ever going to implement any kind of export controls effectively, we have to do them together as opposed to individually. There are a lot of venues, international multi-party bodies that work on a variety of things. There are a lot of treaties. There are plurilateral and multilateral agreements. And I think we do have to work together to be able to implement these. So yeah, I think any and all of them are relevant.

Rep. Haley Stevens (D-MI):

Well, allow me to say, Mr. Delangue: we have a great French American presence in my home state of Michigan, and we'd love to see you look at expanding to my state. We've got a lot of exciting technology happening. And as I mentioned with autonomous vehicles, just to level set this, we've got CCP-owned autonomous vehicle companies testing in San Francisco and all along the West Coast. But we cannot test our technology in China. We cannot sell our autonomous vehicle technology in China. That's an example of a guardrail. I love what you just said, Dr. Murdick, about working with our allies, working with open democratic societies to make, produce, innovate, and win the future. Thank you so much, Mr. Chair. I yield back.

Rep. Jay Obernolte (R-CA):

Gentlewoman yields back. We'll go next to the gentleman from Ohio, Mr. Miller, you're recognized for five minutes.

Rep. Max Miller (R-OH):

Thank you, Mr. Chairman and Ranking Member Lofgren, for holding this hearing today. Dr. Murdick, as you highlighted in your written testimony, AI is not just a field to be left to PhDs and engineers. We need many types of workers across the AI supply chain, from PhD computer scientists to semiconductor engineers to lab technicians. Can you describe the workforce requirements needed to support the entire AI ecosystem? And what role will individuals with technical expertise play?

Dr. Dewey Murdick:

No, this is a great question, and I will try to be brief because it's such a fascinating conversation. When you look at the AI workforce, it's super easy to get fixated on your PhD folks, and I think that's a big mistake. One of the first applications I started seeing discussed was a landscaping business whose proprietor had trouble writing. They started using large language models to help communicate with customers and give them proposals when they couldn't do that before. That proliferation, no, I don't mean that in a proliferation way, sorry. That expansion of capabilities to end users far beyond your standard users is really the space we are living in today. So in the deployment of any AI capability, you have your core tech team, and you have the team that surrounds them and implements it, software engineers and others like that.

You have your product managers. When I worked in Silicon Valley, the product managers were my favorite human beings, because they could take a technical concept and connect it to reality in a way that a lot of people couldn't. And then you have your commercial and marketing people, and you have a variety of different technologies showing up at the intersection of energy and manufacturing. So I think AI literacy is actually relevant, because all of us are going to be part of this economy in one way or another. The size of this community is growing, and we just need to make sure that people are trained well and have the expertise to implement these capabilities in ways that are respectful of the values we've been discussing.

Rep. Max Miller (R-OH):

Thank you for that answer. I'm a big believer that technical education and CTE should be taught at the K through 12 level, so that every American can pursue an alternative career pathway. And I believe that, for younger generations, the American dream has been distorted from what it was. It used to be, you know, a nice house, a white picket fence, and the ability to provide for your family and future generations. Now it's a little bit different, and distorted in my view. So thank you very much for that answer. Another question: what role will community and technical colleges play in AI workforce development in the short term, sooner than we might think? And what two-year programs would you recommend as well suited to recruit and train students in AI-related fields?

Dr. Shahin Farshchi:

I'm a product of the California Community College system, so I can comment on that. I feel like the emphasis in the community college system right now, or at least what I experienced back in the late nineties, was on two things: preparation for transfer into a four-year institution, and vocational skills to enter an existing technical workforce. And I feel like there's an opportunity in the community college system, which is excellent, by the way, and is the reason I'm sitting here today, to also focus on preparing the existing workforce not just for existing jobs but for jobs that are right over the horizon. So in between getting ready to become a mechanic and getting ready to transfer to UC Berkeley, there are jobs that are going to evolve as a result of AI, so there is value in upskilling existing workers for the new jobs that will be emerging in the next couple of years. I think that in-between service, delivered through the community college system, would be extremely valuable to the community.

Rep. Max Miller (R-OH):

Dewey.

Dr. Dewey Murdick:

Just to add to that: after high school I spent 12 years in school. That's a lot of time to grind away at a particular technology and concept as the world accelerates. I don't think that kind of investment is for everyone, and it never really was for everyone. But I think training needs to be more and more focused, and community colleges provide that opportunity to pick up skills. Maybe you graduated as an English major and now you're like, wait, I'd like to do data science. That kind of environment, quick training, picking up skills, stacking up certifications so that you can actually use them, is a perfect venue for community colleges to execute the rapid training and adaptation that's necessary in a rapidly moving economy.

Dr. Rumman Chowdhury:

If I may add quickly: for our generative AI red teaming event, which is funded by the Knight Foundation, we're working with SeedAI to bring hundreds of community college students from around America to hack these models. So I absolutely support your endeavors.

Rep. Max Miller (R-OH):

Nice. Well, thank you all very much. Thank you, Mr. Chairman. And I have a few more questions I'm gonna enter into the record. But thank you very much. This is a joy. I yield back.

Rep. Jay Obernolte (R-CA):

Gentleman yields back. We'll hear next from the gentleman from New York. Mr. Bowman, you're recognized for five minutes.

Rep. Jamaal Bowman (D-NY):