Tracking the Weaponization of America's Political System in Favor of Disinformation—And Its Export Abroad

Nina Jankowicz, Benjamin Shultz / Oct 9, 2024

Nina Jankowicz is the CEO of The American Sunlight Project and the author of two books on online harms. Benjamin Shultz is a researcher at The American Sunlight Project and serves as an expert on artificial intelligence for the Council of Europe.

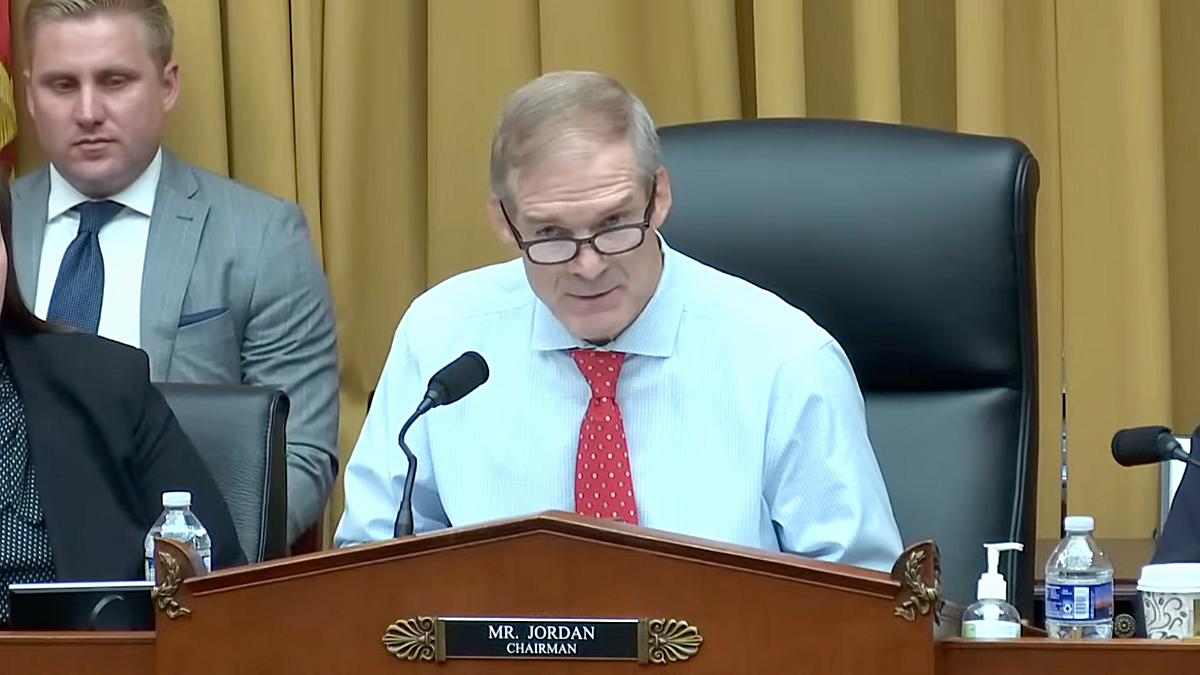

September 25, 2024: Rep. Jim Jordan (R-OH) chairs a hearing of the House Select Subcommittee on the Weaponization of the Federal Government in the Rayburn House Office Building in Washington, DC.

On August 25, French authorities detained Telegram co-founder and CEO Pavel Durov for “complicity in offenses including distribution of pornographic images of minors,” and his “refusal to communicate” with investigators. Two days later, Meta CEO Mark Zuckerberg wrote to Congress, claiming that Meta felt pressured by the White House to remove false and misleading content around COVID-19, despite a lack of publicly available evidence demonstrating any coercion. And, capping off the week, X was banned in Brazil after its owner, Elon Musk, refused to comply with court orders to remove content on the platform that violated Brazilian law. X was in the crosshairs after shuttering its local office in violation of Brazil’s legal requirement to maintain a physical presence in the country.

Supporters of Durov, Zuckerberg, and Musk all defended them and their platforms with a similar line: enforcement against harmful (and, in Durov’s and Musk’s cases, illegal) content violates the principle of free speech. While reactions to these events may seem disconnected, they have been shaped by a coordinated campaign to crack down on efforts to address online harms, including the proliferation of hate speech across social media and foreign influence in the US information environment. That campaign involves conservative media, allied advocacy organizations, and Congressional investigations led by Rep. Jim Jordan (R-OH) and the House Judiciary Committee he chairs. It has created a hostile environment for anyone standing up for the truth, including researchers working to make the Internet safer, and its backers are now exporting those efforts to other countries.

We explored this effort in a new report, which demonstrates how political operatives like Rep. Jordan are contributors to an information laundering cycle, using allegations of censorship to silence counter-disinformation researchers, trust and safety professionals, and government employees working to protect democracy ahead of the November election. Rep. Jordan and his supporters are buoyed by Substack bloggers who make hundreds of thousands of dollars amplifying these claims and "just asking questions," as well as powerful individuals like Musk and Zuckerberg who are rolling back efforts to address harmful online content in order to curry political favor and protect their bottom line.

One of the better-known examples of the information laundering cycle was the relentless onslaught of depositions, records requests, and lawsuits against the Stanford Internet Observatory, which put that institution’s future in doubt. However, the same cycle has been employed in a number of other cases.

In November 2023, Rep. Jordan’s Select Subcommittee on the Weaponization of the Federal Government and the House Homeland Security Committee began promoting false claims that the Center for Internet Security (CIS), a nonprofit organization specializing in countering cyber threats, was engaged in censorship around the 2020 election. CIS was involved in several efforts in 2020 to monitor and report disinformation around the presidential election, including false polling place locations and threats against election officials. Two weeks later, Twitter Files blogger Matt Taibbi conflated CIS with the Cybersecurity and Infrastructure Security Agency (CISA), falsely claiming that CIS sought the “elimination of millions of tweets” in partnership with the State Department Global Engagement Center, something neither CIS nor CISA ever sought.

In February 2024, America First Legal (AFL), a conservative legal action group headed by former Trump Administration advisor Stephen Miller, filed a series of records requests against CIS for alleged censorship of election-related content in Arizona, arguing that CIS acted as a “government agent” and therefore should be subject to Arizona public records laws. This radical legal rationale threatens private actors that, acting in good faith, engage government authorities and social media platforms about harmful, dangerous, and illegal content online, as CIS did in reporting fake polling locations and threats against officials. Subjecting them to public records requests not only opens them to overwhelming, coordinated inquiries that distract from their work; it also exposes them to doxxing and further legal, or even physical, harassment. This is precisely what happened to Kate Starbird, a University of Washington professor who was interviewed by the House Judiciary Committee for her work on election rumors.

Following AFL’s records filings in Arizona, the Weaponization Subcommittee delivered the second half of a one-two punch to keep this narrative circulating, releasing staff reports that echoed these claims. This back-and-forth between Rep. Jordan and his allies, bogging down their targets with frivolous, resource-intensive legal action, appears to be cyclical by design. Material cherry-picked from records requests, legal discovery, and leaked documents is used to justify Congressional hearings and subpoenas. That Congressional activity generates news cycles, which lead to further legal action. Pressure on researchers mounts as the spiral accelerates, until institutions and individuals begin, ironically, to self-censor to avoid accusations of censorship. This information laundering cycle has had a very real impact on government officials and researchers, even those who have never made, and will never make, any content moderation decisions.

The same networks were activated around Durov’s arrest and Musk’s game of chicken in Brazil. When news of Pavel Durov's arrest broke, Telegram said in a statement, “It is absurd to claim that a platform or its owner are responsible for abuse of that platform.” Elon Musk framed Durov’s arrest as an attack on free speech, not child sexual abuse material. Musk himself is also embroiled in spats beyond Brazil. He has bitterly criticized Australia’s eSafety Commissioner for simply enforcing the country’s laws and framed the UK's response to anti-immigrant riots spurred by disinformation as the road to an "inevitable" civil war. His comments have been readily echoed by other parts of the "censorship" information laundering cycle, including AFL, which lamented Durov’s arrest and claimed it was part of a supposed plan to stifle free speech across the West.

Ironically, Musk and Rep. Jordan’s “free speech absolutist” positions don’t always hold firm when they’re financially or politically inconvenient. Musk and X have had no problem censoring content critical of increasingly illiberal leaders around the world in the last year. In April 2023, X removed a BBC documentary that criticized Prime Minister Narendra Modi of India, and less than a month later during the 2023 Turkish presidential election campaign, Musk strongly defended removing content critical of the sitting Turkish government.

For Rep. Jordan’s part, despite claims of good-faith oversight from Republican leadership on the House Judiciary Committee around alleged censorship, his committee continues to overlook the fact that the Trump Administration asked X (then Twitter) to remove tweets critical of then-President Trump, none of which contained any illicit or illegal material.

Meanwhile, Meta CEO Mark Zuckerberg has lent credibility to Musk and Jordan’s position by capitulating to Rep. Jordan in a letter claiming that Meta was pressured by the White House to remove false and misleading content around COVID-19. In the past, Zuckerberg has repeatedly stated that it was ultimately Meta’s own decisions and policies that determined which content was actioned. This was a key point that the US Supreme Court highlighted when ruling on Murthy v. Missouri in June. During oral arguments in the case, the original plaintiffs could not trace a single piece of removed social media content to pressure from government officials. But Zuckerberg, finger in the wind, decided to legitimize Musk and Jordan’s crusade out of political expediency.

The next few months in the US will be a harbinger of the fight for truth around the world, and so far, the signs are chilling: trust and safety teams have been reduced at most major tech platforms in the last year, allowing hate speech, conspiracies, and other harmful content to run rampant. Academic access to social media APIs has been axed in favor of costly subscriptions or inferior products, leaving the public in the dark about online harms. The recent shutdown of Meta’s social listening tool CrowdTangle, for example, has yielded a replacement, the Meta Content Library, that is widely viewed as less transparent and less accessible. Beyond this, researchers and tech policy professionals have been hauled before Congress, interrogated for simply doing their jobs, and left subject to death threats and online harassment from the political fringe-gone-mainstream. Put simply, protection of the American information environment is in a worse place today than it was four or even eight years ago. By weaponizing baseless allegations of censorship, fringe political operatives and conservative media outlets have silenced critical voices and hindered efforts to make the Internet a safer place.

Now, in exporting their efforts abroad, they are attempting to shape the global political stage to their benefit. Their actions have the potential to erode trust in democratic institutions around the world, just as they’ve done at home. Regardless of the outcome of the November election, there is a clear and present danger facing anyone seeking to make the Internet a safer place, both inside and outside US borders.
