The Whiteness of Mastodon

Justin Hendrix / Nov 23, 2022

Audio of this conversation is available via your favorite podcast service.

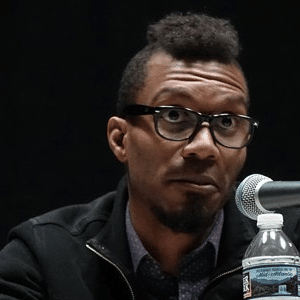

By all accounts, Elon Musk’s acquisition of Twitter is not going well. And yet many have a real sense that something important may be lost if the platform collapses, or if there is a substantial migration away from it to alternatives like Mastodon, the open source, decentralized platform that has grown from three hundred thousand monthly active users to nearly two million since Musk bought Twitter. In this episode, I had the chance to discuss Musk’s takeover with Dr. Johnathan Flowers, who studies the philosophy of technology, and to learn more about some of the exclusionary norms he’s observed that may create obstacles for communities of color contemplating the switch to Mastodon, or even threaten their safety in the 'Fediverse.'

What follows is a lightly edited transcript of the discussion.

Justin Hendrix:

Dr. Flowers, can you tell me a little bit about your academic work, your research? What do you get up to? What are your curiosities?

Dr. Johnathan Flowers:

My primary areas of research are East Asian philosophy, philosophy of technology, American pragmatism, and philosophy of race, disability, and gender, as well as aesthetics. Broadly, I think about the ways that identity can be understood as affective, or felt. I think about the ways that race, gender, and disability intersect with technology such that not every user has the same experience through technology. "Through technology" is important here because it's not simply that users engage with technology; they engage with other people through technology, and so not every user has the same experience through technology. I think through the ways that our lived experience is primarily grounded in affect, the felt sense of the world, through American pragmatism and Japanese aesthetics, as opposed to a primarily cognitive experience of the world. So it's less, "I think, therefore I am," and more, "I feel, therefore I know."

Justin Hendrix:

That seems to offer an insight into our modern social media environment.

Dr. Johnathan Flowers:

Yeah, because if we think about, say, users connecting with other people through technology, then we start thinking about the ways in which identity is made possible online by means of technology. Here, when I say by means of technology, I'm drawing on John Dewey, who says that communities, individuals, and organisms emerge by means of their environment, that is, in transaction with their environment, such that both the individual and the environment are changed. Moreover, again drawing on Dewey, when I say individuals emerge by means of the environment, I mean that they incorporate some element of the environment into how they make their personhood and their individuality present within a variety of spaces.

So when we're talking about something like social media, which I take to be an environment in the truest sense, a digital environment, people make their identities present by means of the affordances, or the various tools and resources present within a digital space. This is something that pairs with some of the work that André Brock has done in Distributed Blackness. It pairs with some of the work that Catherine Knight Steele has done in Digital Black Feminism, and it pairs with some of the work done in Hashtag Activism.

Justin Hendrix:

I've been observing how you are putting this set of ideas and perhaps framework to use in your critique of Elon Musk's takeover of Twitter and how that is impacting that particular environment. I suppose I'll give you just an open-ended opportunity to say what's on your mind with regard to the changes that Musk is making and the general vibe in this transition.

Dr. Johnathan Flowers:

As you note, I have been fairly critical of both Musk's takeover and the response of Twitter users to Musk's takeover, as well as the emergence of Mastodon as an alternative to Twitter.

So, Musk's takeover: I'd like to start there because that's probably the thing that I have said the least about. In general, my broad philosophical approach to Musk's takeover is that it reveals the ways in which our commons, our gathering places, are generally under the control of corporate interests. In considering the ways that our gathering places, our commons, are under the control of corporate interests, we can think through the ways that not everyone has equal access to participation in the commons.

Given the fact that we live in a society broadly structured by white supremacy, broadly structured by patriarchy, broadly structured by homophobia, transphobia, and anti-LGBTQ sentiment in general, to have our commons under the control of corporate interests, of capitalist interests, means that those folks most marginalized in our society, whether through legislation, through social perspective, or through deprivation of resources, will have the least amount of access to these commons. So far as these populations have the least amount of access to their commons, these commons are never truly the open fora that they are argued to be, which gets us into some ironies about our current discourse about social media, because one of the ongoing claims that drives right-wing critiques of big tech is that they stifle participation by right-wing adherents. They engage in selective censoring to minimize right-wing voices.

This critique is generally something that we've heard for the past four or five years, given the ways that social media has been used by right-wing individuals, both politicians and their adherents, to circulate particular kinds of disinformation and misinformation. Now, there are two kinds of things that I want to say about this. One, given the nature of the private ownership of commons such as Twitter and Facebook, these complaints are not something that one could lodge or level against a government. These are private platforms. It is not as though the government is engaging in censorship. Nor is it as though there is censorship of a public commons where everyone has an equal expectation of participation. They are literally complaining about a private platform engaging in its own internal moderation. I could just as soon complain about the rules of the library.

If I were to make too much noise in the library, the library has the responsibility, or the option, to throw me out. But that's an imprecise metaphor insofar as libraries often receive funding from public or governmental sources, so they can't necessarily engage in the kind of censorship that right-wing adherents are complaining about. A better analogy would be a private home. If I invite people into my home for a meeting and one of the participants gets out of hand, or begins saying things that I don't like as a homeowner, I can throw them out of my home and not risk reprisal. The private nature of our digital commons means that the corporate interests that control them can do similar things. They can evict me, they can silence me, they can stifle my voice on the basis of their own value systems, and I generally have limited recourse against it.

Now, having said all of that, this is why many of the right-wing critiques make very little sense, because marginalized folks have been subject to this for years. We need only look at the history of sex workers on Twitter or on Facebook or on Reddit or on any of the other social media fora that we take to be valuable. Sex workers have experienced multiple instances of their voices being suppressed on Twitter, of being shadow banned, wherein one's account still exists but one cannot use all of the affordances of the space to engage with other people, and so on. All these things are typically grounded in anti-sex work sentiment or, as in the case of the Tumblr ban on sex workers, in alignment with the ideals of a given corporation. So I say all this to say that Elon Musk's takeover of Twitter and the promise to, say, civilize the digital commons is a misnomer, because all he's doing is instituting his own vision of what a corporate-controlled digital commons should look like, and the metaphor of civilizing a digital commons is particularly problematic given Musk's enduring history.

He is a white man bringing “civilization” to a platform that is heavily used by marginalized populations. Our history tells us that white people trying to bring “civilization,” and I'm putting civilization in scare quotes here, to marginalized people has historically not worked out well for the marginalized subject. That's generally my broader critique of Musk. Now, the response of Twitter users to Musk's takeover is problematic in a couple of senses. The first way that I think about this is in terms of the damage done to existing networks by Twitter users migrating to other platforms. Some of these networks are more fragile than others because of the ways they use the affordances of the website. Here again, by affordances I mean the resources, the tools, the very structure of the website: things like hashtags, retweets, quote tweets, and so on. The ways that a given community forms by means of the affordances of a digital platform structure some of the nature of that community.

As André Brock notes in his book, Distributed Blackness, Black Twitter as a phenomenon emerged by means of the affordances of Twitter, through how things like quote tweets, retweets, and hashtags allowed Black users to engage in Black digital practices, which are the performances of Black offline culture by means of the affordances of the digital platform of Twitter. So things like call and response and playing the dozens, which are culturally mediated practices within the Black community used for information sharing and community building, were all enabled by the features of Twitter, such that Black folks could engage in digital practices that made present their identities as members of a community. This, coupled with the ability of the hashtag and the quote tweet to let users participate in and add onto a conversation through call and response, enabled Black Twitter to form as this broad, interconnected network of users who made themselves present to one another through their digital practices.

So, to explain this: the affordances, the very structure of Twitter, are what enabled Black Twitter to emerge. The same is true of disability Twitter, insofar as many disabled persons are, especially in the time of COVID, unable to fully participate in their offline lives. One of the things that Twitter enabled was the flourishing of a meeting space, a common resource for disabled persons to come together and talk about their experiences, such that they could find community in a world that had become immediately hostile to them. By immediately hostile to them, I don't simply mean the fact of the ongoing pandemic and the multiple other kinds of viral outbreaks that it spawned; I mean immediately hostile by revealing the ways in which resistance to the pandemic meant condemning many disabled people to death or social isolation. Insofar as this is the case, Twitter's affordances, again, the hashtag functionality and the quote tweet functionality, enabled the emergence of a community among a group of people whose identity could be made salient through the affordances of the platform.

Now, disability Twitter uses the affordances a little bit differently than Black Twitter, insofar as call and response isn't necessarily a fomenting element of the disability community in the same way that it is for the Black community. But the ability to align a conversation with a hashtag, things like #NEISVoid, things like #CripTheVote, serves to enable members of the community, first, to find each other through narrative and, second, to participate in the narrative through the quote tweet functionality, such that they became visible through how they participated in certain conversations. It also enabled the sharing of information and the organization of communities around particular kinds of knowledge production, such that disabled users became legible to one another and legible to other users within the broader space of the platform. So these are two different ways that two different communities use the same affordances to give rise to a community that sustained them within digital space.

For Black Twitter, it was the extension of offline cultural practices into the online space as digital practices. For disability Twitter, it was the creation of a space that enabled the formation of networks of support and community in the face of a hostile offline environment, such that resources could be shared and community could be formed. To this end, a Twitter migration, meaning the migration off the platform (and I'm not speaking specifically about migrating to Mastodon or any site in particular; we'll get to Mastodon in a moment), damages these networks in different ways. For Black Twitter, a Twitter migration is simply unlikely because other sites don't have the affordances that enable the formation of a community like Black Twitter. As I said, Black users make their identities legible by means of the affordances, the resources, of the digital environment.

A different platform, then, would not allow Black Twitter users to make themselves legible in the same way. So we would have to engage in a process of transaction with the new platform to determine how best to make our identities legible, which, as I'll explain when I get to Mastodon, is a key point for why Mastodon is problematic. But for disability Twitter, a migration irreparably damages these networks because on disability Twitter each user functions as a specific kind of node, not just through their experience with disability, but through the ways that they use the retweet and quote tweet functions to circulate information and narratives about disability. So insofar as each user functions as a unique node, and some nodes are larger or smaller given their follower count and the ways they engage in both online and offline activism, the loss of a node means the loss of a point within the community through which information circulates.

That loss means that other individuals connected to that node no longer have access to the information and experiences that were being circulated through it. When those members lose access to that node, the information does not spread through the network. Think about it in terms of the map of a city. If you look at a city from orbit, you see a whole bunch of interconnected lights. When a power station goes down, you see the city go dark in a ripple effect that stops at the boundaries of, say, one power transmission station or another. This visual analogy, I think, will help us understand what happens in a space like disability Twitter when a person migrates: all of the connections that they have go dark, there's a void in the network where information is no longer circulating, and so the network contracts.

It's not simply that this hole remains there; it's that the network contracts and gets smaller. The more people leave, the smaller the network becomes and the less connected users are to one another through the circulation of information and experiences. Insofar as this is the case, even the act of migrating away from Twitter just to see what's out there, and going silent, damages these networks. Part of this is due to the algorithmic nature of Twitter, but part of this is due to the fact that these people are simply no longer circulating the information through their communities and no longer acting as nodes within the broader network. One of the hazards of a migration, at least in my view, where disability Twitter is concerned, is the loss of the ability to circulate information, particularly insofar as many disabled persons rely on Twitter to maintain their sense of community.

To be clear, I'm not the only disabled person who's been talking about this. Imani Barbarin has a blog post that outlines some of this, not in terms of the network effect that I'm describing, but in terms of the overall cultural and communal effect that the, I guess, ‘demise’ of Twitter is having on the disability community. I think ‘demise’ is a little bit hyperbolic, but let's put it in those terms. Because disabled people rely on Twitter for community, for sharing information, for navigating a world that is hostile to us, the loss of even one person within our broader network has an irreparable effect on the network itself. Those are generally my two major perspectives on Elon Musk's effects on Twitter. One of the things that I want to be clear about is that Musk hasn't really done too much to the moderation, reporting, and content management systems of Twitter.

Aside from firing a whole bunch of people, which imperils the infrastructure of the platform, one of the things that I have noted is that there hasn't really been a failure of the reporting and moderation systems, the systems that are designed to prevent abuse. There have been individual failures of, say, the capacity to lock an account, to prevent retweets, to add comments, and of other microsystems on Twitter, but the overall reporting structure seems to be intact. Whether or not there are personnel still available to review these reports is a separate question, but the systems are intact. One of the interesting things is that most migrations are driven by the fear that Elon Musk will open the floodgates to trolls and general bad actors, which would accompany, say, a weakening of the moderation technologies available on Twitter. And by moderation technologies I don't simply mean the reporting functionality; I mean the ways in which moderation is accomplished as a human enterprise, a technique of sorting and filtering and understanding the harm caused by a given reported object.

So these moderation technologies are still intact. What has happened in response to Elon Musk's takeover, however, is the revelation of what happens when bad actors are no longer restrained by the fear of, say, consequences. The initial thing that many folks were worried about was that Donald Trump and his ilk would be allowed back on Twitter. Against that backdrop, one of the things that happened was that a whole bunch of existing users, existing right-wing, belligerent users, took Elon Musk's takeover of Twitter as a sign that the rules no longer applied to them. So we saw a 500% jump, I believe it was, in the use of racial slurs. We saw a massive increase in anti-Semitism, in anti-LGBTQ sentiment. I want to be clear about this. It wasn't that there were new people coming in and doing this; it was that existing accounts believed they were no longer subject to the threat, or the potential threat, of being banned, of being moderated, of being reported, which was not the case.

You could still report somebody for using racial slurs; you could still report somebody for intimidation or impersonation or other kinds of online violence. These functions didn't go away. What happened was that people were simply not afraid of being punished for their rule violations because they felt that the new boss would have their back. They felt that Elon Musk would overturn such reports or prevent them from being permanently banned if they engaged in problematic behavior. So far, at least to my understanding, this is not the case. But one of the things that I would like to point out is that Musk merely stating that he would, say, revise the moderation or other content functions on Twitter enabled these belligerent actors to take the mask off and reveal what their intentions actually were.

Musk didn't actually have to change anything to produce this influx of violence against marginalized subjects and drive people off the platform. This is the other thing that I'm observing about the Twitter migration. It is in response to something that has not happened yet. It is in response to a perceived fear, and that perceived fear has created an opening for these belligerent actors to drive people off of the website, to drive people out of what is increasingly treated as a digital public commons, and to force them to cede that commons to these kinds of belligerent actors. In doing so, at least in my view, it emboldens those actors in other ways offline.

If they can force you out of a digital commons online, that gives them good reason to assume that they can force you out of a commons in public using the same tactics. For my money, one of the scariest things about Musk's takeover is the way it demonstrates that one need not change the rules of the commons, one need not do anything to the broader, forward-facing moderation policies, to embolden people to force other people out of the commons, and I think that's massive. That is incredibly scary because my general thesis is that what happens online is continuous with what happens offline. If folks can simply force you out of a commons online, then they're more likely to engage in similar activities offline, and that spells disaster for any kind of public discourse.

Justin Hendrix:

I just want to maybe try to summarize what I hear you saying, which is firstly, there are obviously significant concerns about the general social media ecosystem, the private nature of these platforms, and of course, the ability of one individual to come in and either purposefully or tacitly essentially change the culture of a platform with simply their own presence or their own ownership. But at the same time, I hear you almost mourning something that you do see as legitimate and worth preserving inside of this flawed environment, which is that, of course, these networks that have been built up sometimes in– well, I don't want to use the word spite– but sometimes in opposition to the dynamics of that platform. Is that a fair assessment of your argument?

Dr. Johnathan Flowers:

Yeah, that's a fair assessment of my argument, and I would say that ‘in spite of the platform’ is actually a pretty good way to put it. When we start talking about Mastodon, one of the things that I'm going to try to make clear is how this ‘in spite of’ nature functions as a result of the ways that platforms inherit whiteness, or inherit structures of oppression. But ‘in spite of’ is actually a fairly good way to put it, because it is in spite of the privately owned nature of the platform that it has become a digital commons. It is in spite of the ways that private interests, at least in a white supremacist society, are often opposed to the public or private interests of the Black community. I say public or private interests because there's a public interest in having good things like equal rights or the capacity to participate in public discourse, and there's a private interest in being in community. So ‘in spite of’ is actually a pretty good way of putting it.

The same is true of other marginalized communities: it is in spite of the longstanding attempts to exclude disabled persons from participating in public spaces. You can read Foucault's history of the asylum. You can read Shelley Tremain's Foucault and the Government of Disability. You can read a number of histories that point to the ways that disabled persons have been sequestered away from participation in public space. You can even look at the ableist backlash against Fetterman's campaign in Pennsylvania, the argument that he is unfit to serve due to his disability, as another example of the ways that disabled persons are forced out of participation in public spaces. So ‘in spite of’ is actually a very good way of putting it: disabled persons participate in public spaces, and the #CripTheVote hashtag is a pretty good example, in spite of the rampant ableism of the society around us and in spite of the attempts to hide us away from public engagement.

Justin Hendrix:

So let's talk about Mastodon, because one of the things that you've been, well, tweeting about quite a lot is some of the problematic aspects of that platform and some of the norms that are at least present on it at the moment. It's probably fair to say that just as the Musk ownership of Twitter is a new circumstance, this migration is a new circumstance, and so the conditions may very much change, and they may change somewhat in response to criticisms like the ones that you are leveling.

Dr. Johnathan Flowers:

So, Mastodon. There are a couple of things that we can say about Mastodon, right? One, Mastodon is a fundamentally different platform than Twitter. It has different affordances, and those affordances result in different communities forming by means of the platform. Mastodon's federated nature, its disconnected nature, means that it is possible for communities to form their own instances within the federated network. These instances can have different rules for moderation, for content, for how users are expected to use the affordances of the platform within the space. These different rules about using the affordances of the platform mean that different identities will be made present differently across different instances. Unlike on Twitter, where one can use the affordances of the platform to make present a consistent identity across the entire website, on Mastodon, if a user signs up for multiple instances, that user will appear differently within those different instances, based on how a given instance structures the use of its affordances.

Now, given the federated nature of Mastodon, when a user follows another user on a different instance, some of the posts from that user will appear in the timelines of other users on the follower's instance. So if a user on one instance has a different standard for how to use the affordances than a user on another instance, then you run into some friction in how users interact. Now, having laid all of that out, there are actually some very good explainers for how Mastodon functions. To be clear, I'm using Mastodon as a metonym for the Fediverse, in line with the documentation provided by Mastodon, which itself acknowledges the conflation of Mastodon with the Fediverse. While Fediverse is technically probably the more accurate way to talk about this, Mastodon as a shorthand has leapt into the cultural consciousness, so much so that even Mastodon's own documentation has a line that acknowledges this is happening. So that's how I'm using Mastodon for the purposes of this conversation.

Now, when we're talking about Mastodon, first we need to acknowledge that there is a history on Mastodon of instances of color being marginalized, being discriminated against. There is a history of users of color being subject to racist, anti-Semitic, and homophobic kinds of abuse. There is a history on Mastodon of the same kinds of violence against users of color, against disabled users, against marginalized users that there is on Twitter. However, when I say similar, I mean similar at least in the sense of doing oppressive violence to these users, but not similar structurally, because the affordances of Mastodon mean that just as any identity must be made present differently, since the resources available to make present that identity are different, any kind of harassment must also be articulated differently on Mastodon.

So the first thing that I want to make clear is that Mastodon has a history of being inhospitable to marginalized users. This history is borne out, as I've learned, through the marginalization and eventual shuttering of instances of color, of instances that were dedicated to hosting and supporting sex workers, through harassment of disabled users, and so on. Mastodon's federated model was premised on, well, the ActivityPub protocol, if I understand the history correctly, and it was built in some ways to produce affordances that would avoid the kinds of harassment on Twitter: things like quote tweet pile-ons, and other uses of the quote tweet or the comment or the reply feature to do violence. What that hasn't done is prevent the violence. In fact, it's given the kinds of bad actors who would do violence an opportunity to, say, adjust to the new affordances, because it's not simply identities that are enabled by means of a digital environment; it is oppression that is enabled by means of a digital environment. So the oppression that one experiences on a platform like Mastodon will necessarily be different than the oppression that one experiences on a platform like Twitter, because of the different affordances of the platforms.

Now, having said all of that, right, Mastodon is a very white space. It draws upon some of the values and some of the interests of indie web producers, of the DIY tech community, wherein there's this sense of rugged individualism. The open source nature means that you can make your own stuff, and this motivates some of the kinds of responses that Mastodonians will make to users who say that there are certain features that aren't available, that there are certain content guidelines and moderation policies that tend to act as social norms on Mastodon itself. The argument would be, make your own instance. Now, as I have said on Mastodon, and less so on Twitter, the argument that you can make your own instance ignores particular resource costs of doing so.

Even putting aside the monetary cost of starting up and maintaining one's own instance, which, again, is an additional financial burden on yourself if you're a marginalized subject living in a white supremacist society, we need to think about the moderation and personal costs of running your own instance. An instance of color, for example, would have to have a robust moderation team merely to catch the racists. This moderation team, be it members of color or other folks, would have to subject themselves to racist violence over and over and over again to filter out the racists and protect their community. You would essentially need, and I'm going to put this in scare quotes, "a warrior caste" to police the boundaries of your instance so that your community is not subject to the worst kinds of racist abuse. To be clear, there are users who are interested in visiting the worst kinds of abuse on users of color, as I have experienced myself.

It took me eight hours to get a pile of racist vitriol in response to some critiques of Mastodon, something that had taken an entire week of critiquing science, technology, engineering, and mathematics in higher education to produce a similar result. So it is not the case that there aren't users on Mastodon who engage in this behavior, but this gets me back to my point about moderation. The reason why I didn't just pack up and leave Mastodon, or the server that I'd moved to, is that the moderation team on my server responded incredibly fast. They responded quickly both to ban the instance and to ban the individual users engaging in targeted harassment, and they had done so almost as soon as I got there. As I looked through my DMs, there were a couple of messages letting me know that they had banned or blocked some users who had already been engaging in this kind of harassment, and I didn't even know it. The only way I recognized it was by several posts on my timeline being filtered when I had set up no filters. So this brings us back to moderation.

For instances of color, you would need a robust moderation team to do exactly that. That involves asking people to take on the burden of filtering through, sifting through, engaging with the worst kinds of racist vitriol for the sake of having a space where they can be themselves. The alternative, as many Mastodonians seem to be suggesting, is that you need to leave parts of yourself at the door when you get onto a given instance. So when people tell marginalized subjects to make their own instances, they grossly underestimate the resource intensity of doing so. It is not simply the case that we can make an instance and suddenly be free of all of the things that we're trying to get away from. Rather, when we make an instance, we have to police it thoroughly, given the broader context of white supremacy in which these platforms grow up. I wanted to get that out of the way first because many of the replies or responses of Mastodonians to my critiques of Mastodon amount to “make your own instance,” and it's simply not that easy.

That's not simply a thing that marginalized subjects can do and expect to be able to build a safe community; you have to make the community safe, which gets me into the nature of Mastodon as a platform. As I said, Mastodon is a very white platform, and insofar as I'm saying it's a very white platform, here I'm drawing on the work of Sara Ahmed in her piece “A Phenomenology of Whiteness,” where she argues that spaces inherit the orientations of the people within them. So if you are in a space primarily populated by white persons, regardless of their other identities, the norms, the habits, the very structure of that space will take on a likeness to whiteness by virtue of how the majority of people participate in that space. As I said, Mastodon is a very white space. It is not unlike other tech spaces where whiteness is predominant. Insofar as this is the case, the norms, the habits, the affordances of the platform will inherit whiteness.

So we can think about the ongoing argument over the use of content warnings on Mastodon for certain topics as an example of this whiteness. To be clear, I say ongoing because as I have learned in speaking with other Mastodonians of color and actually doing some research on the platform, this is not a new thing. Mastodonians of color have been fighting about the use of content warnings specifically for racism for a very long time. Part of the conflict over content warnings has motivated some of the responses of “move to your own instance where you can use content warnings however you see fit,” which as I have said, is not necessarily the solution that many think it is. But to get back to the use of content warnings, some of the arguments for content warnings, or at least the majority of them, at least in my view, come down to an attempt to preserve a particular white entitlement to comfort or freedom from racialized stress through the ways in which whiteness is held center as an inheritance of the platform.

Again, when you have a majority of the individuals in a space being white, that space will take up the habits, the norms, the perspectives, the orientations of the users or bodies within it. Insofar as the majority of the users on Mastodon are white, then they take up the kinds of ways that whiteness organizes space, including an entitlement to freedom from, say, understanding one's complicity in racism or freedom from engaging with experiences of racism as made present by users of color. The conflict over content warnings is symptomatic of a broader orientation or organization of the platform around whiteness. In my view, the arguments concern conversations about race, and I say conversations about race because this conflict is not simply about whether you should content warn obviously racist things. So one of the things that I think is fairly valuable about Mastodon is the ability to, say, content warn something that graphically depicts the death of a Black person. I'm not talking about that. I'm talking about the ways that the whiteness of the space conflates conversations about race and racism itself under demands to use the content warning functionality.

So this conflation results in a theoretical discussion about racism, such as the conversation we're having right now, having to be flagged with a content warning on some instances, or a discussion about the role that race plays in a variety of experiences of Black folks and people of color having to go under a content warning. On more scholastically-focused instances, research on race might also be subject to content warnings or similarly selected out of or moderated away from the instance because it's not taken to be scholastic or part of the, say, mission of the scientifically-oriented or scholastically-oriented instance. So all of these things are the ways in which instances inherit whiteness. Insofar as instances inherit whiteness, the content warning conversation, at least in my view, is an argument over whether or not Mastodon should continue to maintain its inheritance of whiteness because as Ahmed says, "One of the challenges for white people is rejecting this inheritance."

One of the challenges of Mastodon, at least as I see it, is rejecting its inheritance or is rejecting the inheritance of whiteness through how the norms of Mastodon demand the use of its affordances. Insofar as most Mastodonians refuse to do this, and their default is “make your own instance,” one of the things they're implicitly saying is, “you can only make present your identity in the ways that we approve of. You can only use these affordances or you can only be Black by means of the affordances of the platform in the ways that we approve of.”

To be clear, while Mastodonians of color have been fighting this fight for a long time, and this fight has recently been reinvigorated by the influx of folks from Twitter, this is not just an issue for Black folks or Black Twitter expatriates; this is an issue for folks in the disability community, folks in the Jewish community, folks in the queer community, particularly queer folks of color, because whiteness functions within queer spaces, which is interesting given one of the arguments against the whiteness of Mastodon is the presentation of the history of the protocol as being developed by predominantly queer developers.

That is fine. I grant that history, but it is also the case that whiteness is a large problem within the LGBTQ community. Being queer does not insulate one from the inheritance of whiteness, although one can use one's queerness as a shield from critique, as many Mastodonians have done by presenting the history of Mastodon as grounded in queer folks' attempts to avoid the harassment on Twitter. That's generally my overarching thesis here. Because of the norms around how the platform's affordances are used, Mastodon, unless particular kinds of changes are made, will never be able to support a robust community of color like Black Twitter. It might do so by virtue of creating, say, a Black instance. But that brings with it its own particular kinds of harms. One, it risks ghettoizing the Black community in a federated space. All of the Black people go here and instances have the option of engaging with them or defederating from them.

You could block the entire Black instance and then see nothing, which is a problem for a variety of reasons, but would also mirror the social dynamics of racism in America. So white flight, for example, this would be a kind of inversion of white flight where Black users go to an area where they feel comfortable, white users view that area as dangerous and then defederate from it or put up digital fire breaks around it such that the conversations within that instance do not escape from it and disturb the white suburbia of the other instances. That's a problem. That is a problem that many Mastodonians don't seem to think of as a problem when suggesting “make your own instance.” There's also the case that the whiteness of Mastodon is felt affectively. It is recognized as a sense of a space, and I described it on Twitter like going into a predominantly white work environment and recognizing that there are some elements of you that you have to leave at the door. That feeling, that sense of whiteness as a felt space is off-putting to many users.

We don't get online to deal with the same kinds of things that we do offline. The very process of navigating, of swimming through the sea of whiteness that is Mastodon, makes any potential migration more difficult. That, and to be honest, if we look at everything that Elon Musk is doing with Twitter, there is no reason for Black Twitter to migrate to Mastodon. We endure things like Elon Musk all the time, and Black Twitter can endure on Twitter regardless of many of the changes, although some may affect the ways that we build community, the verification thing, for example. Although as an aside, one of the things that I predicted with the use of the verification feature would be folks taking up the verification feature to impersonate individuals and then parody them through impersonation. My prediction would be that had the verification feature remained what it was beyond the initial test phase, you might have seen some users from Black Twitter taking up the verification feature to parody known racists in the similar ways that Black Twitter chews them up in the quote tweets, for example.

You would've seen the use of that affordance in a culturally-specific comedic way that I probably would've found amusing, but that's one of the ways in which communities use these affordances differently. The parody would've become yet another way that folks take up the affordances of Twitter to engage in community, which, to get back to Mastodon, these affordances aren't available on Mastodon, which will make community building that much more difficult. My last point about Mastodon here is, given everything I've said about the overwhelming whiteness of the platform, the lack of affordances, one of the things that Mastodon makes more difficult is actually forming a community. So if your space is organized around norms of whiteness that determine how you use the affordances, and if these norms police users who don't use the affordances "appropriately," and I'm putting appropriately in quotations, then users will be disincentivized from exploring unique ways to use these affordances.

So one of the things that the history of Black Twitter teaches us is that Black users, when they encounter a new platform, play with the affordances. They use them creatively to create community, to engage in unique digital practices, to develop a habitus in a phenomenological sense, which is one's disposition towards action within an environment by means of the affordances of the platforms. This requires experimentation, this requires play, this requires interacting with the community. Mastodon has entrenched norms that, as I have said, inherit whiteness, which inhibit the ability for users of color to play with and experiment with the affordances such that their unique identities can be made present. If you're on an instance that has specific rules about content warnings, about the ways that you can make yourself present, then you are limited in how you can experiment with the affordances of the platform such that your identity can become salient. In fact, your identity becomes pre-determined upon entry into the space, so you come to recognize how you can and cannot be Black on Mastodon.

As one of the Mastodonians that I have come to rely on for the history of users of color on Mastodon has said, trying to do “this you”-- that is the Black Twitter call and response methodology where we point out a problem, the contradictions in a person's statement by bringing up another statement that they've made and saying “this you,” right– that's a narrow way of defining it. But doing something like “this you” on Mastodon invites a torrent of racist and sexist kinds of violence from other Mastodonians. Your mentions become what Twitter users call a block party, where you have to go through and report every instance of the kinds of racist vitriol for engaging in a Black digital practice. If that is the case, if doing something like that risks massive reprisal in the form of racist vitriol, then you're going to disincentivize users from experimenting with the affordances of the platform and figuring out unique ways to be themselves.

This, I think, is one of the biggest things that is overlooked by Mastodonians who argue that the influx of new users will bring changes to the platforms. If you have an influx of new users and then you punish them for being themselves or trying to figure out ways to be themselves on the platform, then you will disincentivize users from recommending transformation or changes in the platform, even in open source platforms. They simply will not want to engage. This disincentivization is in contradiction to the assumption of long-time Mastodonians that the influx of new users will prompt a growth and transformation of the server. As you know, many scholars have noted, from George Yancy to Angela Davis, to Audre Lorde, to Cornel West, to Sara Ahmed, to even W.E.B. Du Bois and Ida B. Wells-Barnett, that whiteness is resilient, it is adaptive, and it will fight to keep itself center in ways that at least on Mastodon will deny users of color the opportunity to enact the changes that many Mastodonians argue for.

Justin Hendrix:

On some level you're trying to balance in your mind right now, of course, the challenge that Elon Musk's change in culture and potentially change to platform and affordances represents on Twitter with the challenge of getting the operators of all of these instances within the architecture of Mastodon to perhaps recognize some of the problems that you're describing. If there were some congress of instance operators (I think there are now thousands of instances; I saw the number 3,000 the other day), is there one thing that you'd say to them that might ameliorate some of your concern if they were to follow your advice?

Dr. Johnathan Flowers:

This is not a technical problem, and it doesn't have a technical solution. So when I say this is not a technical problem, I mean to say that this isn't a problem that can be solved by simply adding new features or building new instances and so on. This is a problem at the intersection of technology and culture; that is, even if you were to resolve the technical issues, you still have the problem of the culture of Mastodon as inheriting whiteness and inhibiting the very kinds of experimentation, the very kinds of play that drive the development of open source platforms. So when Mastodonians suggest technical solutions like, "Move to another server, or how about this variation of the quote tweet," my response is, "You are missing the fact that technology enables identity in a digital space, and insofar as technology enables identity in the digital space, the very norms of that space constrict how we can use the technology to make our identities present."

What I would say to this hypothetical congress of Mastodon is you need to take a moment and reflect on the ways in which your space reenacts the oppressive structures that you are trying or that you claim that Mastodon was created to escape, right? You need to think about the ways that the norms around the affordances, the very tools of your structure, are organized in such a way as to inhibit the ability of marginalized users to find a home on the platform. You need to think about the very real dangers of a federated structure in a society organized around white supremacy. You could easily have an entire instance of right-wing white supremacists pop up that would engage in targeted harassment of users of color.

If the Fediverse or this hypothetical congress doesn't decide en masse to ban that instance for whatever reason, they open up their users of color to racist violence. This is one of the weaknesses of the federated model, and this is one of the things that I think Mastodonians need to take seriously, right? In as much as something like the quote tweet enabled pile-ons and violence, so too does the federated nature of Mastodon. There are ways that the affordances of Mastodon can be weaponized to maintain structures of oppression. Again, if there's one thing that I would say to this hypothetical congress, they need to be sensitive to the ways that oppression online emerges at the intersection of technology and society. It's not simply something you can resolve by offering a technical solution. You have to think about the norms that organize how you expect people to use your technical solution.

Justin Hendrix:

I think that's a good place for us to end. Dr. Johnathan Flowers, thank you so much for speaking to me today.

Dr. Johnathan Flowers:

Yeah, no, it was my pleasure. Thank you.
