The US Must Prioritize Use-Case AI Regulation

Kadijatou (Kadija) Diallo / Nov 30, 2023

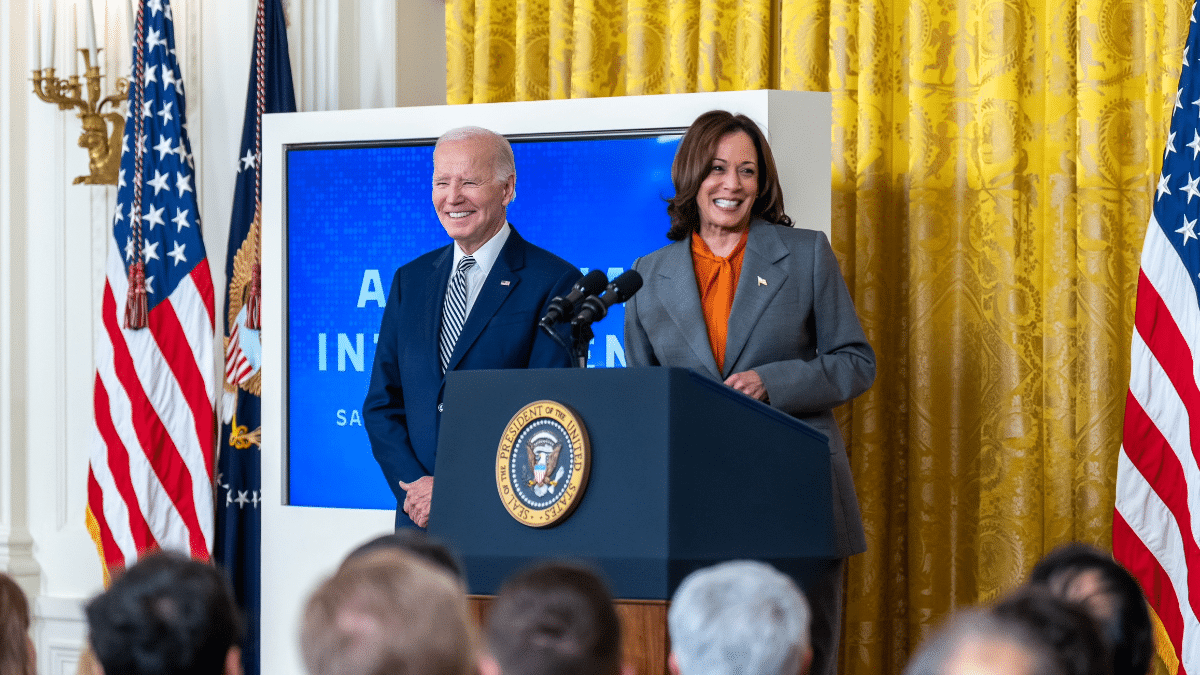

Vice President Kamala Harris joins President Joe Biden at the signing of an Executive Order on artificial intelligence, October 30, 2023.

At the end of October, President Joe Biden signed a sweeping Executive Order addressing the safe deployment and use of artificial intelligence (AI), marking a pivotal moment in the evolution of US technology regulation and laying the foundation for future federal oversight of AI development and deployment. The Executive Order joins a growing list of policies that countries around the world are adopting in an effort to regulate this rapidly advancing sector.

While the Executive Order and similar proposals for the general regulation of AI are crucial and necessary, it is imperative for the US not to lose sight of the importance of improving use-case regulation for AI and other emerging technologies. General regulation efforts, such as those underway at the White House and in Congress, play a vital role in establishing baseline protections and terms of development. However, the dynamic nature of AI necessitates legislation that is not only flexible enough to adapt to technological changes but also stringent enough to maintain consistent and robust protections for users.

Misrepresentations of AI threats often obscure the immediate risks the technology poses. Contrary to the idea of superintelligent agents eroding individual agency or enslaving humanity, the real and more immediate threat lies in the mundane capacity of AI as a tool. AI has the potential to exacerbate existing harmful practices in industry and society, and in some cases has undergirded dubious claims by companies, as illustrated by notable business failures such as Theranos, FTX, and WeWork. Despite marketing themselves as sophisticated tech services or as “AI-powered,” companies such as these engaged in typical business misbehavior under the veneer of revolutionary technology, revealing the need for close scrutiny by the relevant regulatory authorities.

Consider the case of recommendation algorithms, which suggest items to users based on shared preferences or past indications. The widespread use of these algorithms across industries and platforms makes it challenging to enact blanket oversight of their deployment. A recommendation system in an online investment tool differs from those on social media platforms, which, in turn, differ from those on marketplaces. Creating oversight for recommendation systems used by investment firms falls within the purview of the SEC, while the FTC possesses greater expertise in mitigating discrimination and privacy violations by recommendation systems deployed on social media platforms, as seen in the agency’s successful 2019 lawsuit against Facebook for data privacy violations.

Taking a use-case approach to AI won’t be an entirely new strategy for federal agencies; instead, it aligns with a pragmatic evolution of their mandates. For example, in 2023, the Consumer Financial Protection Bureau (CFPB) updated its guidance within the framework of the decades-old Equal Credit Opportunity Act (ECOA), requiring lenders that use AI algorithms to determine applicants’ eligibility for credit to ensure their models do not discriminate against protected classes and to provide applicants with detailed explanations for their credit denials. The move added a layer of transparency to the algorithmic decision-making process simply by expanding the interpretation of an existing rule.

Another instance illustrating effective use-case strategy is found in the context of the Health Insurance Portability and Accountability Act (HIPAA). Although HIPAA was established before the widespread use of algorithms in healthcare, it has consistently played a pivotal role in shaping how AI tools handle patient data and maintain health privacy. Serving as a formidable guardrail, HIPAA compels healthcare technologies to prioritize the protection of patient data, ensuring that the integration of AI aligns with stringent standards of privacy and security, without restricting innovation in the sector.

In these cases, the regulatory focus is not on the technology itself, but on the impact and outcome of the technology. This focus on the outcomes from the use of AI, rather than the function of any specific tool, is crucial in the discussion on effective regulation. Legislation will always lag behind technology, so focusing efforts on legislating technological tools, especially ones we don’t have a full understanding of and that are constantly evolving, is wasted effort. Rather, focus should be on ensuring that processes and outcomes align with legal protections and sectoral best practices, an approach that is more future-proof and realistically achievable with our existing legislative capabilities.

If Europe’s General Data Protection Regulation (GDPR) is any example, more targeted oversight of technology impact not only better protects users but also pushes firms to be more innovative in designing products that adhere to legislative requirements. The US market is too powerful and lucrative for most firms not to engage with it; firms would be well incentivized to create products that align with sensible sector-specific US regulations rather than forgo access to American consumers.

As with general AI regulation, there are normative and functional challenges to deploying effective use-case regulation. At its core, use-case regulation requires strengthening consumer and civil rights protections across the board, a challenging endeavor in the current US political climate. Furthermore, coordinating and maintaining consistency across regulations can be difficult, especially if a platform or technology falls under multiple agency jurisdictions. The resulting fragmentation could lead to more harm and weaker protections than are currently available.

However, these barriers are not insurmountable. It’s promising that both Congress and the White House acknowledge the profound societal and economic implications of AI in the US, demonstrating a sense of urgency in comprehending and mitigating potential harms through bipartisan efforts. Directing this momentum to increasing funding and supporting the recruitment of subject matter and industry experts to regulatory agencies would position the US as a leader in safeguarding against AI risk, while promoting technological innovation.

Too narrow a focus on comprehensive AI regulation risks overlooking more achievable and immediate safeguards. The US is uniquely positioned to leverage its existing ecosystem of regulatory agencies, each equipped with sector-specific expertise and historical context, to contend with changing industry capabilities and societal concerns. The US must capitalize on this strength, strategically deploying use-case regulatory measures that are both targeted and responsive to the nuanced challenges presented by AI.
