The US Government's AI Safety Gambit: A Step Forward or Just Another Voluntary Commitment?

Stephanie Haven / Sep 20, 2024

Vice President Kamala Harris, pictured speaking with AI company executives in May 2023, announced the White House’s policy on uses of AI across government in a speech on March 28, 2024. (Lawrence Jackson / The White House)

Last month, the nearly year-old US AI Safety Institute (US AISI) took a significant step by signing an agreement with two AI giants, OpenAI and Anthropic. The companies committed to sharing their models with the government for testing both before and after deployment, a move that could mark a leap toward safeguarding society from AI risks. However, the effectiveness of this voluntary commitment remains to be seen, as a comparable 2023 agreement with the UK AI Safety Institute (UK AISI) has produced mixed results.

The Agreement: A Closer Look

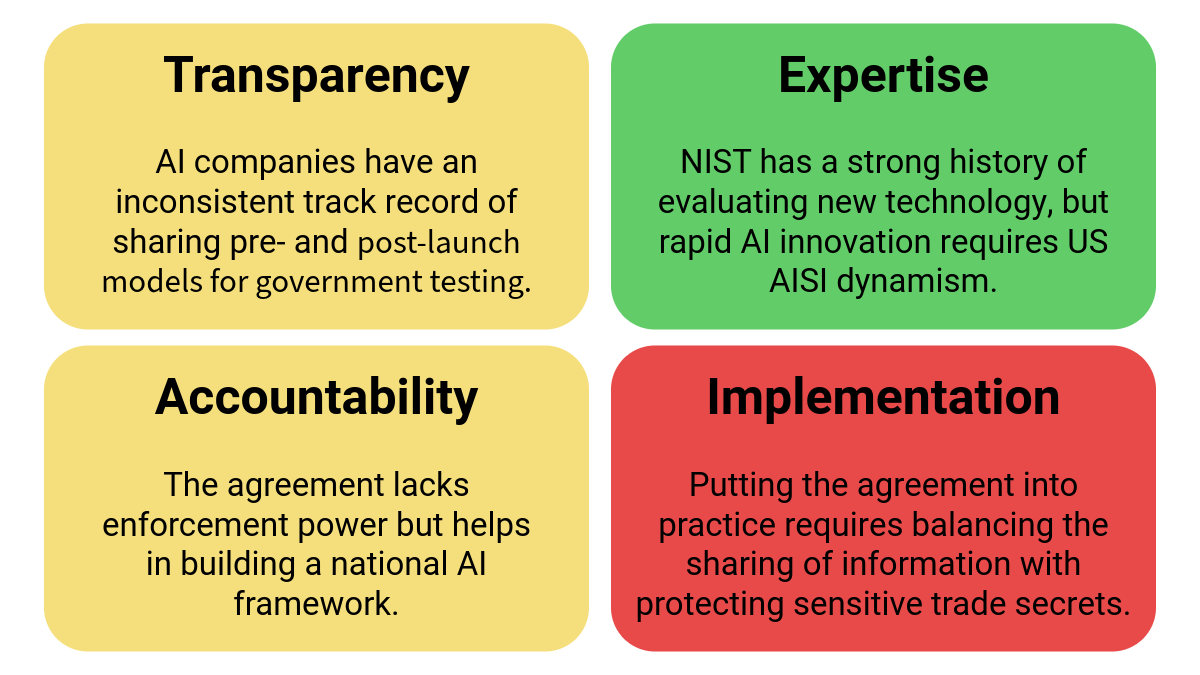

To assess whether this agreement marks a substantive step toward safe AI development, we need to examine it from four perspectives:

- Transparency: How much information will the companies actually share?

- Expertise: Does the government agency have the necessary capabilities to evaluate these complex systems?

- Accountability: What happens if safety issues are identified?

- Implementation: Can this agreement be effectively operationalized in practice?

These criteria draw on lessons from the history of US safety testing in industries such as transportation and aviation, where enforceable standards have built trust and improved safety outcomes. They also draw on my professional experience developing governance strategies for a technology company engaging with external regulators.

Overview: The US AISI Agreement with OpenAI and Anthropic

Issues pertaining to the US AISI agreement with OpenAI and Anthropic.

Transparency: A Promising Start

OpenAI and Anthropic's agreement to submit their AI models for government testing represents a significant step towards transparency in AI development. However, the practical implications of this commitment remain to be seen.

This isn't the first such agreement for these AI companies: in 2023, they joined other leading labs in a similar pledge to the UK AISI. By June 2024, Anthropic and Google DeepMind had followed through. However, while OpenAI has shared models after launch, it is unclear whether it has given the UK AISI pre-deployment access. Nevertheless, OpenAI CEO Sam Altman expressed his support for the US AISI conducting “pre-release testing” in an August 2024 post on X.

The UK AISI shared testing results with the US AISI this summer, exemplifying deepening international cooperation on AI safety. Repeated commitments on both sides of the Atlantic underscore a growing consensus on the importance of external oversight in AI development.

Still, the devil is in the details: it is not yet clear whether the US AISI can meaningfully interpret the models once they are shared. Anthropic co-founder Jack Clark acknowledged to Politico in April that "pre-deployment testing is a nice idea, but very difficult to implement."

Expertise: Building Capacity

Led by Elizabeth Kelly, one of Time’s 100 Most Influential People in AI, the US AISI brings together a multidisciplinary team of technologists, economists, and policy experts. Operating within the (reportedly underfunded) National Institute of Standards and Technology (NIST), the US AISI appears well-positioned to develop industry standards for AI safety.

NIST’s work ranges from biometric recognition to intelligent systems. While this history provides a solid foundation, rapid advances in large language models (LLMs) present new challenges. NIST’s new Assessing Risks and Impacts of AI (ARIA) program and its AI Risk Management Framework are particularly relevant to evaluating LLMs, but the fast-moving field demands continuous adaptation.

The US AISI also faces the challenge of attracting top talent in a competitive market. While leading AI researchers in the private sector can earn salaries approaching $1 million, government agencies typically offer more modest compensation, which may limit their ability to recruit cutting-edge expertise.

The US AISI's ability to develop robust industry standards for AI safety will depend not only on leveraging NIST's historical expertise, but also on successfully bridging the talent gap between public and private sectors.

Accountability: The Missing Link

While the US AISI agreement with OpenAI and Anthropic promises "collaboration on AI safety research, testing and evaluation," critical details remain unclear. The full agreement is not publicly available, and the press release did not specify enforcement mechanisms or consequences for disregarding evaluation results; nor did it define what constitutes an actionable finding.

Despite the ambiguity surrounding how OpenAI and Anthropic will incorporate US AISI's test results, this agreement fulfills a commitment made by the US at the 2023 UK AI Safety Summit. There, 28 countries and the European Union affirmed their "responsibility for the overall framework for AI in their countries" and agreed that testing should address AI models' potentially harmful capabilities.

While it remains to be seen whether the US AISI will add enforcement power to its agreement with OpenAI and Anthropic, the national agreement, together with its complementary international commitments, at least creates a pathway for the US AISI to scrutinize AI development in the public interest.

Implementation: The Real Challenge

Operationalizing this agreement faces several hurdles:

Developing an interface for sharing sensitive model information. There are trade-offs between protecting the AI companies’ proprietary technology, in the interest of both business competition and national security, and incentivizing multiple AI companies to share model access with a growing list of national AI Safety Institutes. Scaling a secure, interoperable application programming interface (API) that enables different AI companies' systems to communicate with multiple governments’ AI Safety Institute programs may be a cost-effective mechanism for both sets of stakeholders to adapt as the landscape of international oversight and regulation evolves.

Adapting internal operations to integrate testing. For AI companies, this means building consistent model submission intervals into their existing product development cycles to minimize disruptions to business operations and product launches. Understanding the US AISI's estimated testing timelines will be crucial for standardizing this new step in their processes. Meanwhile, the nascent US AISI faces the challenge of rapidly building its own capabilities. Staffing the institute, which is not yet a year old, with AI and testing experts is critical for developing robust third-party evaluations of frontier AI models.

Establishing a feedback process between AI labs and the US AISI. If the only requirement is for AI labs to submit their models for testing, disruptions to the labs' development cycles may be minimal, but evaluations will likely be less comprehensive. Conversely, if AI labs are expected to engage in ongoing dialogue throughout testing and evaluation, assessments would be more thorough, but at a higher cost in time and resources for both the labs and the US AISI. The chosen approach will affect the depth of evaluations, the speed of the process, and the potential for real-time adjustments to AI systems. Clearly defining expectations at the outset will be fundamental to building trust, ensuring transparency, and maintaining the agreement's long-term legitimacy.

Balancing transparency with the protection of trade secrets. While OpenAI and Anthropic are not required to publicly disclose AI safety issues, their approach has been proactive: Anthropic's Responsible Scaling Policy commits to publishing safety guardrail updates, and OpenAI issues System Cards detailing safety testing for each model launch. The US AISI, following NIST's long-standing tradition of public reporting, is likely to share its evaluation tools and results. However, all parties must take care not to expose sensitive information that could compromise market competition or national security.

The Road Ahead: From Voluntary to Mandatory?

While the agreement may not be fully operational right away, given that third-party evaluations are still in their early stages, the government's investment in this capability demonstrates foresight. The agreement positions the US AISI to implement evaluations effectively once industry standards solidify, leaving the agency ready for a future in which AI safety testing could become a routine part of development cycles.

As AI capabilities continue to advance at a breakneck pace, pressure for mandatory compliance and regulation has grown. In Silicon Valley’s home state of California, where both OpenAI and Anthropic are headquartered, Governor Gavin Newsom recently signed several AI bills into law addressing deepfakes and watermarking. While the prospects for US federal regulation remain uncertain, the recently elected UK Labour government has signaled its intent to introduce "binding regulation on the handful of companies developing the most powerful AI models."

Conclusion: A Foundation to Build On

The US AISI's agreement with OpenAI and Anthropic represents an important step in the US government's efforts to ensure AI safety. While it falls short on accountability and clear enforcement mechanisms, it establishes a framework for collaboration that can be built upon in the future.

The true test will be in the implementation. Can the US AISI effectively evaluate these AI models? Will the companies act on any safety concerns raised? And perhaps most importantly, will this voluntary agreement pave the way for more robust, legally binding regulations in the future?

The success or failure of initiatives like this will play a crucial role in shaping the future of AI governance – and potentially, the future of humanity itself.
