Taylor Swift, Kamala Harris, AI Falsehoods and the Limits of Counterspeech

Belle Torek / Sep 12, 2024

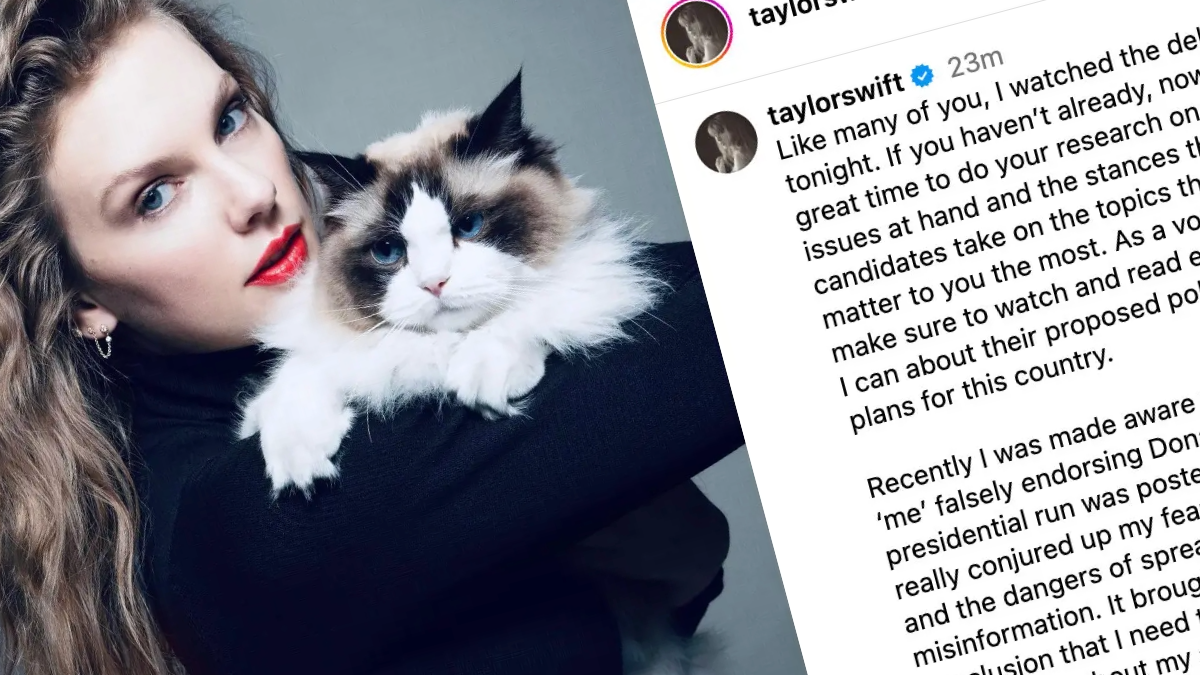

Taylor Swift, Instagram.

Artificial intelligence and misinformation were a factor in Taylor Swift’s Tuesday night endorsement of Vice President Kamala Harris in the 2024 presidential election. In addition to marking Swift’s first public mention of AI and its role in contributing to the creation and spread of misinformation, Swift’s statement illustrates the significance of AI in conversations about democracy. While many have applauded Swift for drawing attention to this critical issue, Swift stops short of making express calls for AI regulation, instead advocating that “[t]he simplest way to combat misinformation is with the truth.” While Swift clearly sees AI as an issue worth addressing, her comments raise a question for those of us in the tech policy world: is counterspeech really enough to address the rapidly evolving threats AI poses?

Counterspeech, the idea that harmful or reckless speech can be corrected by presenting more and better information, has centuries-old origins. Its most notable roots lie in opinions by Supreme Court Justices Brandeis and Holmes and in the advocacy of free speech bastions like the ACLU, all of which hold that the “marketplace of ideas” will ultimately allow truth to triumph. However, these theories long predate the rise of social media, algorithmic amplification, and AI deepfakes, all of which complicate the landscape of misinformation, and they are being increasingly challenged.

Given Taylor Swift’s own history with AI abuse and misuse, her reliance on counterspeech as the solution may come as a surprise to many readers. Earlier this year, bad actors created and disseminated AI-generated nude images of Swift across social media. Last month, former President and 2024 Republican nominee Donald Trump shared AI-generated images of Swift and her supporters on Truth Social, paired with text falsely claiming that Swift had endorsed him. Taylor Swift is well known for being a careful custodian of her image, and is no stranger to pursuing legal action, sometimes even on principle for nominal damages as small as one dollar. And yet, Swift’s proposed solution to the rise of AI deepfakes was not lawsuits or government regulation, but simply more and better information.

So, is counterspeech indeed the best way to address AI deepfakes? Perhaps this is the case for Taylor Swift, one of the richest and most powerful women in the world, who is known for her anomalous political impact. Her massive platform has consistently driven voter registrations and turnout, and her fanbase of Swifties is recognized as a key voting bloc in electoral politics. In a late 2023 NBC News poll, Swift topped a list of celebrities in terms of net favorability. All of this is to say that if anyone’s counterspeech could truly be an antidote to misinformation, it is likely Taylor Swift’s. But even so, does this actually afford her the recourse she deserves?

The regulation of AI-driven deepfakes may seem novel, and of course there are cases where it is, but legal avenues already exist to help address some of these harms. We must resist the impulse to treat AI issues as if they always require entirely new legal frameworks. Often, existing law can be adapted to fit these emerging challenges. In the case of the fake Trump endorsement, Swift could potentially recover damages using existing law, without relying on developing AI regulation: tort law affords remedies to victims of false light, or publicity that creates an untrue or misleading impression about them, and a court may very well determine that Swift’s claim meets the actual malice standard required of public figures in such cases.

Swift’s reluctance to embrace the full range of legal solutions available to her may stem from her strategic positioning as a bipartisan figure. Rather than making a summary endorsement based on partisanship, Swift frames her endorsement as the product of having listened to both sides, and she encourages her followers to do the same. For someone trying to galvanize voters across the political spectrum, an outright call for stronger AI regulation might alienate more conservative supporters. Still, tech policy, much like speech jurisprudence, often creates strange bedfellows that don’t fall neatly along party lines, and legislators on both sides of the political aisle increasingly recognize the need for effective AI governance and protections.

Of course, it is also possible that Swift is wholly supportive of AI regulation, but is choosing to let her endorsement speak for itself. Harris’ platform, which was released last month, represents a continuation of work that began within the Biden-Harris Administration, perhaps most notably President Joe Biden’s Executive Order on the “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” Under the Biden-Harris Administration, the White House also established a task force to address online harassment and abuse. Most recently, it issued a call to action to combat image-based sexual abuse, which resulted in a partnership between tech companies and civil society leaders to develop a set of principles and voluntary private sector commitments for prevention and mitigation. By lending support to a campaign that has so clearly demonstrated its commitment to addressing AI safety, perhaps Swift has given her imprimatur to Harris’ platform on these issues.

Still, the question remains: if Swift, with her massive platform and resources, feels that her only option is to out-speak AI-driven misinformation and abuse, where does that leave the rest of us? Most of us don’t have Swift’s 284-million-strong Instagram following, and for every person who can rely on counterspeech, there are thousands more who can’t. For the average person, counterspeech is unlikely to be a viable solution for addressing malicious AI deepfakes.

This brings us to the broader tech policy implications of Swift’s endorsement. Is her invocation of counterspeech a step forward for AI governance? On the one hand, raising broad public awareness about AI misuse is undoubtedly a positive development, and Swift’s backing of Harris could boost support for a candidate who takes AI harms seriously. But by perpetuating the idea that truth alone can outweigh AI misinformation, Swift risks sidelining the legal remedies that could protect those who lack her power and influence from tech-facilitated defamation and abuse.

At its heart, Swift’s endorsement of Kamala Harris reflects a deeply personal concern about AI’s potential to perpetuate harm, a concern that so many of us across tech and civil society share. But as Ariana Aboulafia and I wrote in an earlier Tech Policy Press piece, relying solely on counterspeech in lieu of other protections leaves far too many people vulnerable. While Swift’s influence is extraordinary, the rest of us, without her platform, require more than truth and an Instagram megaphone as a shield against AI-generated misinformation.

Belle Torek is an attorney who works at the intersection of free speech, cyber civil rights, and online safety. The opinions expressed in this piece are solely her own and do not reflect the views of her current or former employers.
