Shadowbanning: Sorting Fact from Fiction

Gabriel Nicholas / Jul 13, 2022

Gabriel Nicholas is a Research Fellow at the Center for Democracy & Technology.

Shadowbanning is a never-ending source of outrage on the internet. Users from groups that feel marginalized online — sex workers, conservatives, Black Lives Matter, the list goes on — perennially accuse platforms of making their content less visible without telling them. Platforms roundly deny these claims in judicious yet vaguely worded blog posts. The users who believe they've been shadowbanned cry "gaslighting", requiring more conciliatory blog posts. The discourse is cyclical, futile, and exhausting.

It also fails to answer even the simplest questions. What exactly is shadowbanning? Do platforms really do it? Who gets shadowbanned, and how do beliefs around shadowbanning affect speech online?

At the Center for Democracy & Technology, we tried to answer these questions in our report Shedding Light on Shadowbanning. To do this, we surveyed more than one thousand US social media users to understand their attitudes and beliefs about shadowbanning. We also completed three dozen interviews, both with people who believe they have been shadowbanned and with platform workers who design content moderation policies. Our report found that nearly one in ten US social media users believe that they have at some point been shadowbanned. Those users are disproportionately male, conservative, Latino, or non-cisgender. In our interviews, we found that beliefs about shadowbanning have led to feelings of isolation, conspiracy, and deep distrust in social media content moderation practices writ large.

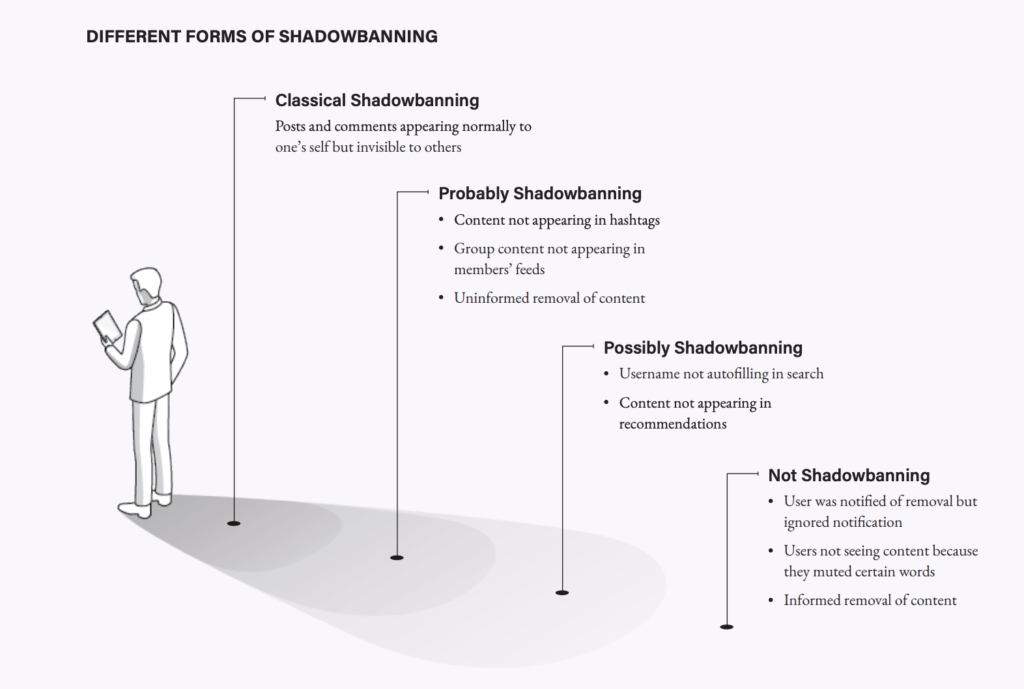

Whether shadowbanning is “real” or not depends on your definition. When claiming they do not shadowban, social media companies often give the word a narrow definition. Reddit and Twitter, for example, have both defined shadowbanning as a platform making a user’s posts invisible to everybody but the user. Platforms claim to do this rarely or never, and that is likely true. However, in our interviews, we found that people use the word “shadowban” to describe other methods of undisclosed content moderation, such as not having posts appear under certain hashtags, hiding a user’s handle from a search suggestion box, or downranking a user’s content in a recommendation algorithm. In our report, we suggest that shadowbanning has come to mean any time a social media platform hides or reduces the visibility of a user’s content without informing them.


There have been a handful of public shadowbanning controversies in the past few years. Conservatives accused Twitter of shadowbanning when its search box stopped auto-filling the usernames of prominent Republicans, including Republican Party chair Ronna McDaniel and Representatives Jim Jordan (R-OH) and Matt Gaetz (R-FL). (In a post-mortem, Twitter said that this was a bug that affected hundreds of thousands of accounts across the political spectrum.) Black Lives Matter activists accused TikTok of shadowbanning when videos with the #BlackLivesMatter and #GeorgeFloyd hashtags dropped sharply in views. (TikTok also said this was a technical glitch.) Cardi B, Ice Cube, and Donald Trump say they have been shadowbanned, as do 30% of sex workers, according to a survey by Hacking // Hustling. According to our survey, people most commonly believe they are shadowbanned for their political views (39%) or social beliefs (29%).

With shadowbanning, it is hard to separate what is real from what is rumor. Still, shadowbanning is harmful both as a practice and as a rhetorical device. Systems that automatically shadowban have no way of correcting for errors, and it is hard for the public to evaluate whether certain voices are being systematically excluded from conversations. A user who believes they have been shadowbanned can feel isolated, paranoid, and marginalized. Those feelings of marginalization can also be weaponized politically to stir up anti-tech sentiment in ways that threaten free speech online. Politicians inside and outside the US have used alleged shadowbanning and other conspiratorial rhetoric to justify overbroad laws limiting companies’ ability to moderate content, such as Texas’ and Florida’s unconstitutional social media laws.

Despite all its harms, shadowbanning can sometimes do good. Sophisticated bad actors can, in some situations, use the knowledge that their content has been moderated to find structural weaknesses in moderation systems. Take, for example, the troll trying every misspelling of a sexist slur to see which gets past automated filters, or a foreign disinformation network trying to learn how to like its own posts so that they get picked up by recommendation algorithms. In cases like these, shadowbanning can help mitigate harmful content without perpetuating the content moderation arms race between social media companies and the bad actors they are trying to stop.

How, then, can platforms address the harmful individual and societal effects of shadowbanning without completely removing it as a tool from their toolbelt? Our report at CDT makes three recommendations. First, platforms should admit to shadowbanning (though perhaps come up with a less contentious term) and publicly disclose the cases in which they do it. This could increase users’ trust in platforms and help shift debates from whether platforms should shadowban to more productive debates about when platforms should shadowban. Second, platforms should only shadowban actors who are trying to circumvent moderation systems. Platforms should never shadowban in order to hide moderation strategies the public may find objectionable. Third, platforms should share data about the content they shadowban with select, vetted, independent third-party researchers. Trust in social media platforms has eroded to the point where disclosure is not enough. Platforms need outside researchers both to uncover as-yet-unknown effects of content moderation and to validate that they are doing what they claim to be doing.
