September 2023 U.S. Tech Policy Roundup

Kennedy Patlan, Rachel Lau, J.J. Tolentino / Sep 29, 2023

Rachel Lau, Kennedy Patlan, and J.J. Tolentino work with leading public interest foundations and nonprofits on technology policy issues at Freedman Consulting, LLC.

While a government shutdown looms in the month ahead, the White House and U.S. Congress were back in action in September, with a central focus on artificial intelligence (AI). A highlight of September’s congressional happenings was Senate Majority Leader Chuck Schumer’s private convening of major tech company leadership at the first of several planned AI Insight Forums in Washington, DC. The same week, the White House announced additional voluntary AI risk management commitments from eight companies, including Adobe, IBM, Nvidia, and Salesforce. This month, President Joe Biden spoke to the President's Council of Advisors on Science and Technology, emphasizing the administration’s focus on AI and America’s leadership on the technology.

After a federal appeals court in Louisiana ruled that the White House and other government officials were violating users’ First Amendment rights by encouraging technology companies to remove posts that included disinformation, the White House filed an emergency application asking the Supreme Court to halt the order. At the end of the month, Supreme Court Justice Samuel Alito extended a delay on the ruling, though the process has been muddled.

At the agency level, the Justice Department made headlines this month as it opened a three-month trial against Google, marking the first monopoly-related case to make it to trial in decades. The Federal Trade Commission (FTC) filed an antitrust lawsuit against Amazon alongside 17 states that allege that the company is engaging in a slew of monopolistic practices. Separately, the agency released a report on the potential harms of blurred advertising for children. The Senate confirmed Biden nominee Anna Gomez as a commissioner of the Federal Communications Commission (FCC), breaking a long deadlock. Shortly after, FCC Chair Jessica Rosenworcel proposed reinstating net neutrality rules. Finally, the Privacy and Civil Liberties Oversight Board, an independent agency in the executive branch, called for a “warrant requirement” in upcoming Section 702 reforms as the contentious data collection tool’s end-of-year expiration approaches.

In corporate news, Apple announced it will adopt the EU’s universal standards through its transition to USB-C charging. Online shoe seller Hey Dude Inc. was fined $1.95 million by the FTC for suppressing negative reviews. Finally, the end of the month revealed that iPhone designer Jony Ive and OpenAI CEO Sam Altman may be discussing the development of a new AI hardware device.

In international news, the UK announced a transatlantic data transfer deal with the United States through the “UK Extension to the EU-US Data Privacy Framework,” which will allow US companies certified under the EU framework to receive UK citizens’ data.

The tech policy tracking database is available under a Creative Commons license, so please feel free to build upon it. If you have questions about the Tech Policy Tracker, please don’t hesitate to reach out to Alex Hart and Kennedy Patlan.

Read on to learn more about September developments across general and generative AI policy news.

A New Congressional Convening Series for AI

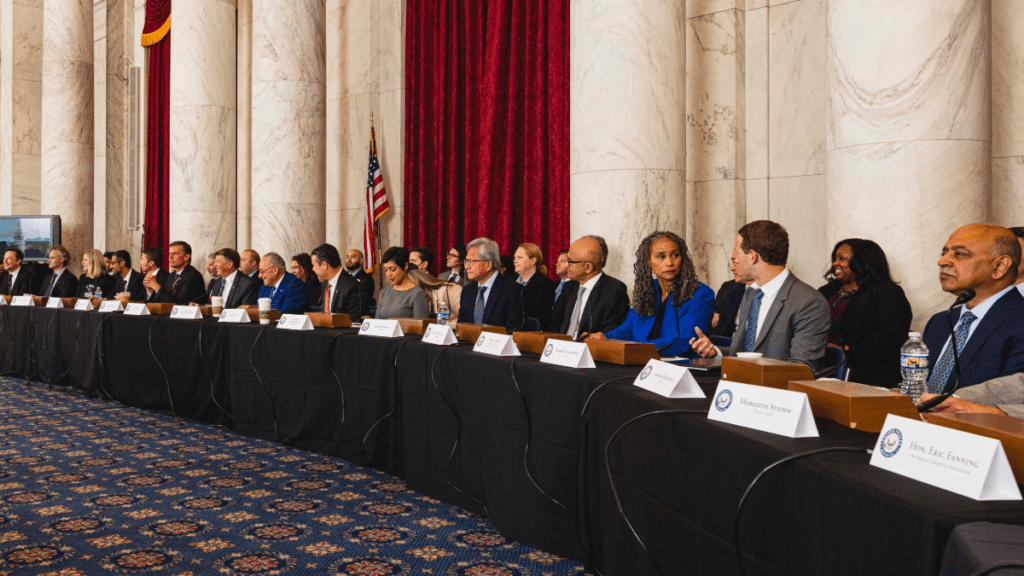

- Summary: This month, Senate Majority Leader Chuck Schumer (D-NY) held the first AI Insight Forum, a closed-door convening that brought together tech industry leaders, representatives from labor and civil rights advocacy groups, and more than sixty-five senators to discuss major AI issues. Prominent names in attendance included Google CEO Sundar Pichai, Tesla CEO and X owner Elon Musk, and OpenAI CEO Sam Altman. Civil sector representatives included the Leadership Conference on Civil and Human Rights President and CEO Maya Wiley, AFL-CIO President Liz Shuler, and Mozilla Foundation Fellow Deb Raji. The meeting covered a wide range of AI-related topics such as national security, electoral politics, and workforce implications. Attendees also debated the creation of a new federal AI agency and how to best use existing agencies to regulate the technology. This month’s meeting was just the first in a nine-part series of AI-related forums that will continue throughout the fall. The cadence of the convenings has not been announced.

- Stakeholder Response: Immediate reactions from attendees at the AI Forum reflected a general desire for more government action. However, there continues to be a lack of consensus on what regulation should look like. Sen. Maria Cantwell (D-WA) said that AI legislation can get done in the next year, but Sen. Mike Rounds (R-SD) warned that Congress is not yet ready to regulate the industry. Despite the mixed messages, Sen. Schumer told reporters that he envisions AI regulation will follow a similar path as the “CHIPS and Science Act, where legislation will be pursued in a bipartisan way and committees rely on scientific and technical information from the forums.”

- Industry leaders voiced general support for Congress taking a larger role in regulating AI technology. Elon Musk acknowledged that it is important for the tech industry to “have a referee,” while Mark Zuckerberg stated that the standard on AI regulation should be set by American companies in collaboration with the government. Similarly, OpenAI CEO Sam Altman agreed that AI development is “something that we need the government’s leadership on.”

- Sen. Schumer faced criticism about the format of the closed-door meeting. Janet Murguia, President of Hispanic civil rights organization UnidosUS, reflected on her desire to see “more segments of society” in the next forum. Mozilla Foundation Fellow Deb Raji argued that the most reliable experts on the harms of AI come from outside of corporations and voiced her support to include a wider range of issue experts. Both Sens. Elizabeth Warren (D-MA) and Josh Hawley (R-MO) argued that these forums should be open to the public. Sen. Warren expressed that a closed-door session “for tech giants to come in and talk to senators and answer no tough questions is a terrible precedent for trying to develop any kind of legislation.”

Senators and DHS Take Action on Artificial Intelligence

- Summary: In addition to Schumer’s AI forum, the Senate Judiciary Subcommittee on Privacy, Technology and the Law also hosted a hearing on AI following the release of a bipartisan legislative AI framework by Sens. Richard Blumenthal (D-CT) and Josh Hawley (R-MO). Additionally, the Department of Homeland Security (DHS) announced two new AI policies relating to the agency’s use of AI, including a policy on facial recognition tools.

- In Congress, Sens. Richard Blumenthal (D-CT) and Josh Hawley (R-MO) released a bipartisan legislative framework on artificial intelligence. The framework includes recommendations regarding: protections for consumers and kids, transparency regulations, establishing AI companies’ liability for harms, the creation of a licensing regime by an independent oversight body, and a proposal for a national security and trade structure for AI models and hardware. Following the framework’s release, the senators led a hearing on AI in the Senate Judiciary Subcommittee on Privacy, Technology and the Law. The subcommittee’s hearings were attended by top tech executives, including Microsoft’s Brad Smith, OpenAI’s Sam Altman, and Elon Musk.

- Stakeholder Response: At the subcommittee hearing following Sen. Blumenthal and Sen. Hawley’s joint AI framework announcement, corporate leaders including Microsoft’s president Brad Smith and Nvidia’s chief scientist and SVP William Dally celebrated the blueprint, with Dally arguing for “keeping a human in the loop” to ensure that AI systems are kept in check. Some policymakers, however, have expressed concerns about the specificity and speed of the Blumenthal-Hawley framework, arguing that lawmakers need to learn more about AI before writing legislation. In an interview with Bloomberg, Sen. Ted Cruz (R-TX) expressed that he would not support a “heavy-handed [federal] regulatory regime” governing AI, citing members of Congress not fully understanding the technology and concerns over global competitiveness.

- The Department of Homeland Security (DHS) announced two new AI policies developed by the DHS Artificial Intelligence Task Force (AITF) this month. The first policy establishes a set of principles for AI acquisition and use in DHS while the second mandates that “all uses of face recognition and face capture technologies will be thoroughly tested to ensure there is no unintended bias or disparate impact in accordance with national standards.” DHS also announced the appointment of the agency’s first chief AI officer, Eric Hysen, who will also continue to serve as DHS’s Chief Information Officer. These announcements followed a report released by the U.S. Government Accountability Office that revealed that seven law enforcement agencies in the Departments of Homeland Security and Justice use facial recognition services without appropriate policies to train staff on the use of the technology or to protect people’s civil rights and liberties.

- Stakeholder Response: Executive Director Paromita Shah of Justice Futures Law, a legal nonprofit, responded to the DHS announcement, saying that the organization “remain[s] skeptical that DHS will be able to follow basic civil rights standards and transparency measures, given their troubling record with existing technologies.”

Other AI Happenings in September:

- Following the AI Insight Forum, the U.S. Chamber of Commerce sent a letter to members of Congress urging them to advance ethical policies for the development and deployment of responsible AI technologies. The letter recommended the creation of a new Assistant Secretary of Commerce for Emerging Technology office within the U.S. Department of Commerce that would coordinate AI policies, convene agencies, and identify and eliminate regulatory overlap or other gaps. The letter also recommended that Congress authorize and fund the National Artificial Intelligence Research Resource (NAIRR) and suggested that the National Science Foundation provide Congress with essential guidelines to better prepare K-12 students for the use of AI.

- A group of tech accountability and civil society organizations introduced the Democracy By Design framework that addresses the use of AI in election manipulation on social media. The framework recommended that social media platforms prohibit the use of generative AI to create deep fakes of public figures and advocated for companies to implement clear disclosures of AI-generated content relating to political advertising.

- This month, Congress also received a letter from the National Association of Attorneys General on behalf of 54 attorneys general nationwide. The letter asks Congress to study AI’s effect on children and pass legislation expanding current restrictions on child sexual abuse material (CSAM) to explicitly cover AI-generated CSAM.

- In late September, Hollywood saw the close of a deal between the Writers Guild of America and major entertainment companies, ending a five-month strike. However, questions remain about what AI may mean for the industry, as the new agreement allows studios the right to train AI models based on writers’ work, even as it enacts new AI-related protections.

- This month also saw continued AI efforts from industry and civil rights groups. Google announced that it is investing $20 million in a new Digital Futures Project to “support researchers, organize convenings and foster debate on public policy solutions to encourage the responsible development of AI.” The Leadership Conference on Civil and Human Rights’ Education Fund announced the launch of The Center for Civil Rights and Technology to serve as a hub for information, research, and collaboration to address artificial intelligence.

What We’re Reading on AI:

- In Tech Policy Press, Gabby Miller is tracking key takeaways and comments arising from Sen. Schumer’s AI Insight Forum.

- Time published its TIME100 Most Influential People in AI, highlighting individuals who are shaping the direction of AI technology.

- Gabby Miller published a transcript of the Senate Judiciary Subcommittee on Privacy, Technology, and the Law’s hearing on AI in Tech Policy Press.

- Bloomberg published a piece on AI regulations in the states, with increasing state-level legislation to study AI and its potential impacts on civil rights, the job market, and more.

- Politico reported that starting in November, Google will require all political advertisements to disclose the use of AI tools in any videos, images, and audio.

- The Associated Press discussed how Amazon has begun to require e-book authors to disclose use of AI in their works to the company, but has yet to require public disclosure.

- In Tech Policy Press, Kalie Mayberry warned that AI has the potential to replace online forums as a source of information, a process that may reduce collaboration and sense of community online.

- Axios’ Alison Snyder examined how English is becoming the de facto language used to train AI models, causing concern for the technology's inclusivity, biases, and usability on a global scale.

- The Atlantic talked about what AI might mean for computer-science degrees.

New Legislation and Policy Updates

- Protect Elections from Deceptive AI Act (S.2770, sponsored by Sens. Amy Klobuchar (D-MN), Josh Hawley (R-MO), Christopher Coons (D-DE), and Susan Collins (R-ME)): This bill would amend the Federal Election Campaign Act of 1971 to prohibit the use of artificial intelligence to generate deceptive content that falsely depicts federal election candidates in political ads.

- Advisory for AI-Generated Content Act (S.2765, sponsored by Sen. Pete Ricketts (R-NE)): This bill would require AI-generating entities to watermark their AI-generated materials. It also instructs the Federal Trade Commission to consult with the Federal Communications Commission, the U.S. Attorney General, and the Secretary of Homeland Security and to issue regulations for the watermarks within 180 days of passage, with the regulations taking effect one year after publication.

Public Opinion Spotlight

The Harris Poll and the MITRE Corporation released a poll of 2,063 U.S. adults, conducted July 13-17, 2023, about public trust in AI. Key findings include:

- Only 39 percent of American adults believe today's AI technology is safe and secure to use, down from 48 percent in November 2022.

- 51 percent of men and 40 percent of women are more excited than concerned about AI.

- 57 percent of Gen Z and 62 percent of millennials say they are more excited than concerned about AI, compared to 42 percent of Gen X and 30 percent of Baby Boomers.

- Many Americans are worried about the potential criminal and political uses of AI. 80 percent worry about AI being used for cyber attacks, 78 percent worry about it being used for identity theft, and 74 percent worry about it being used to create deceptive political ads.

- 52 percent of employed U.S. adults are concerned about AI replacing them in their job.

- However, 85 percent support a nationwide effort across government, industry, and academia to make AI safe and secure. This support is strong across Republicans (82 percent), Democrats (87 percent), and Independents (85 percent).

- 85 percent want industry to transparently share AI assurance practices before bringing products equipped with AI technology to market.

- 81 percent believe industry should invest more in AI assurance measures, up 11 points from November 2022.

From July 14-August 6, 2023, the Axios-Generation Lab-Syracuse University AI Experts Poll surveyed 213 professors of computer science and engineering from 65 of the top 100 computer science programs in the country. Respondents were asked about their perspectives on AI-related issues. Key findings include:

- 37 percent of experts expressed that a federal AI agency would be the best entity to regulate AI, while 22 percent of experts reported that they would favor a global organization or treaty. Only 16 percent of experts voiced support for congressional regulation.

- About 1 in 5 experts predicted AI will definitely stay within human control. Remaining experts’ opinions were split on whether or not AI would get out of human control.

- 42 percent of experts voiced concern about discrimination and bias, while only 22 percent were concerned with the risk of mass unemployment.

Gallup polled 1,014 U.S. adults from August 1-23, 2023 on workers' fear of becoming obsolete.

- 22 percent of workers said they worry about technology making their job obsolete, up seven percentage points from 2021.

- The rise in concern was concentrated in college-educated workers, 20 percent of whom are now worried about their job's obsolescence, compared to 8 percent in 2021.

A recent Axios-Morning Consult AI Poll of 2,203 U.S. adults from August 10-13, 2023 examined public opinion on AI in the upcoming elections. Key findings include:

- Supporters of former President Trump (47 percent) were nearly twice as likely to say the spread of misinformation by AI would decrease their trust in election results compared to Biden supporters (27 percent).

- 53 percent of respondents said it is likely “misinformation spread by artificial intelligence will have an impact on who wins the upcoming 2024 U.S. presidential election.”

- 35 percent of respondents reported that AI will decrease their trust in election advertising, with Trump supporters (42 percent) more likely to distrust ads than Biden supporters (33 percent).

A poll by the American Economic Liberties Project and Fight Corporate Monopolies of 1,227 likely voters from September 13-14, 2023 found that:

- 60 percent of Americans believe companies like Google have too much market power, disadvantaging competitors and harming consumers and small businesses.

- 60 percent of respondents express concern about Google's handling of privacy and data.

- 38 percent of respondents believe that Google uses too many ads.

- 43 percent of people believe that Google ads are “mostly useless.”

- 46 percent believe the government should do more to regulate Big Tech companies (like Google, Amazon, and Meta).

Gallup released a survey about AI's impact on the job market, polling 5,458 American adults from May 8-15, 2023. They found that:

- 75 percent of Americans believe AI will replace jobs over the next ten years, while 19 percent believe it will not impact the job market.

- 68 percent of adults with a bachelor's degree say AI will decrease the number of jobs, compared to 80 percent of those with less than a bachelor's degree.

- 67 percent of adults aged 18 to 29 say AI will decrease the number of jobs, compared to 72 percent of adults aged 30 to 44, 79 percent of adults aged 45 to 59, and 80 percent of those aged 60 and older.

Between spring and early summer of 2023, S&P Global issued a survey to 4,000 consumers in 42 countries about the metaverse, defined as “virtual spaces built for socializing, gaming and shopping.” They reported that:

- 70 percent of respondents said they were concerned about privacy and data collection regimes, standards of conduct, anonymity, and payment security in online spaces.

- 71.3 percent indicated they were either very concerned or somewhat concerned about their privacy and personal data.

- 73.8 percent said they were either very concerned or somewhat concerned about establishing community rules and standards.

- 74.8 percent said they were either concerned or very concerned about payment and transaction safety.

- - -

We welcome feedback on how this roundup could be most helpful in your work – please contact Alex Hart and Kennedy Patlan with your thoughts.
