Responding to Climate Disinformation: A Conversation with Jennie King & Michael Khoo

Justin Hendrix / Jun 11, 2022

The latest reports from the Intergovernmental Panel on Climate Change (IPCC) do not mince words. They say that “climate change is causing dangerous and widespread disruption in nature and affecting the lives of billions of people.”

The quality of the public discourse on climate issues plays a role. A report released by the IPCC in February says that the “[r]hetoric and misinformation on climate change and the deliberate undermining of science have contributed to misperceptions of the scientific consensus, uncertainty, disregarded risk and urgency, and dissent.” An April installment describes how “opposition from status quo interests” and “the propagation of scientifically misleading information” are “barriers” to climate action and have “negative implications for climate policy.”

This week, a coalition of groups published a report titled Deny, Deceive, Delay: Documenting and Responding to Climate Disinformation at COP26 & Beyond that outlines prominent discourses that seek to pervert and prevent efforts to address climate change, and makes recommendations for governments, social media platforms and the media on what to do to address the issue.

I spoke to two individuals involved in the effort to produce the report to learn more:

- Jennie King, the head of civic action and education at the Institute for Strategic Dialogue

- Michael Khoo, the Climate Change Coalition co-chair at Friends of the Earth

What follows is a lightly edited transcript.

Justin Hendrix:

So Michael, Jennie, you have just published this report, Deny, Deceive, Delay: Documenting and Responding to Climate Disinformation at COP26 and Beyond. But this is a work of multiple organizations. Can you just explain the organization behind this and the partnership that you've put together?

Jennie King:

Absolutely. This coalition, which is called the Climate Action Against Disinformation Coalition and works with the US-based alliance that Michael mentioned, the Climate Disinformation Coalition, has formed somewhat organically over the past 12 to 18 months. It really combines organizations that have precedent and expertise in researching disinformation and malign influence operations with those who are much more engaged in the climate science, climate advocacy and climate policy spaces, who realized that there was a real and present danger being posed by mis- and disinformation to the ability to pass meaningful legislation.

And there were kind of ad hoc pieces of research that were coming from organizations like Avaaz or Global Witness or Friends of the Earth, or my organization ISD. And we realized that there could be huge economies of scale and better impact if we leveraged our collective knowledge on this issue and became a more formalized coalition. And that really came into fruition most acutely last year at COP26, the climate summit in Glasgow, when we formed what we were calling a war room but in practice was a real time 24 hour monitoring unit that was looking at information threats which attempted to undermine the negotiations and the outcomes of the summit. And off the back of that we had a huge body of evidence that we decided to build on and turn into the report that came out on Thursday, June 9th.

Justin Hendrix:

So one of the things I wanted to kind of ask just upfront, one of the things you point out in the report is that there is a mandate, a public mandate at least, across the world. That climate change is a problem, is an issue and is something that most people want to address one way or the other. That seems to be even true in the United States, where perhaps we're a little bit behind Europe in thinking about these matters. What do you think the impact of disinformation is in this context where we have achieved a kind of, I suppose, consensus that we should act, but perhaps we're still not doing it?

Jennie King:

I sometimes refer to this as the enthusiasm gap. And I think it really shows how sophisticated and nuanced the evolution of anti-climate rhetoric and lines of attack is. There is clearly recognition amongst adversary groups that they lost the first battleground, which was trying to deny the reality of climate change as a problem writ large, or human impact on it and the need for urgent action. And as you said, there is now widespread evidence, including polls by UNDP that looked at 50 countries and millions of people, which says there is consensus. This is a real issue. It needs to be tackled by government led mandates and we want that to happen now. However, there is a huge gulf between winning that particular battle and the actual implementation of meaningful policies in line with the goals of the Paris Agreement and IPCC scientific consensus.

And that is where you see a lot of the information warfare taking place now. Is yes, you might have a wellspring of support and the basis for a public mandate in most countries around the world. But that doesn't mean that there is a public mandate for the exact legislative agenda that needs to be passed at both the domestic and the multilateral level, or that people have any understanding of what the viable solutions are, going forward. And in reality, if you can coordinate campaigns or put enough information into the public commons, which stops policies from ever being passed, in a way that's just as bad as never recognizing the problem in the first place. Because you end up in the same place, which is, well, we now know that it's happening but we're not doing anything about it.

Michael Khoo:

I think I'd also add, if you look at how climate disinformation has played out, especially over the last year, we've seen some really great and terrible examples of how climate disinformation has flooded the zone, so to speak, of any discussions, rational discussions of what the alternatives should be. And so we documented last year this case study in Texas, where there was a giant power outage, many people died, and the forces of the other side, to put it broadly, but driven by the fossil fuel industry, blamed that all on renewable power and on, quote, frozen windmills. And they did it all based on this one image that was taken from 2015 from Sweden to falsely say that that was the cause of the power outage, which was absolutely not the case. But it didn't stop that from completely flooding the conversation online.

And we did a lot to document how that went from one small corner of the internet, to getting amplified through other platforms on the internet, to making it to mainstream news, to Governor Abbott's lips. In just four days, it became a talking point. And it's been a talking point for the GOP ever since, this idea of this liberal, weak-kneed alternative energy path that's going to make America weak. It's been a talking point they've wanted to create for a long time, as we think to create solutions. To Jennie's point, it's attacking the pathway out, since they can't just say the earth is flat anymore.

Jennie King:

And if you think about the end goal here as maintenance of the status quo, that those who oppose climate action, that have vested interests or financial incentives for maintaining reliance on existing technologies or on fossil fuels or on oil and gas, that the playbook has had to change. And it's no longer as palatable to say publicly, "Well this isn't a problem and it doesn't require action." But it's extremely effective to say, "Well of course we believe in climate change, we're not cranks, but all of the solutions on the table are either overly expensive, too disruptive to our lifestyles, they're going to cede geopolitical power to bad actors like China." Insert whichever excuse you want to use. And you end up with the same end results.

Justin Hendrix:

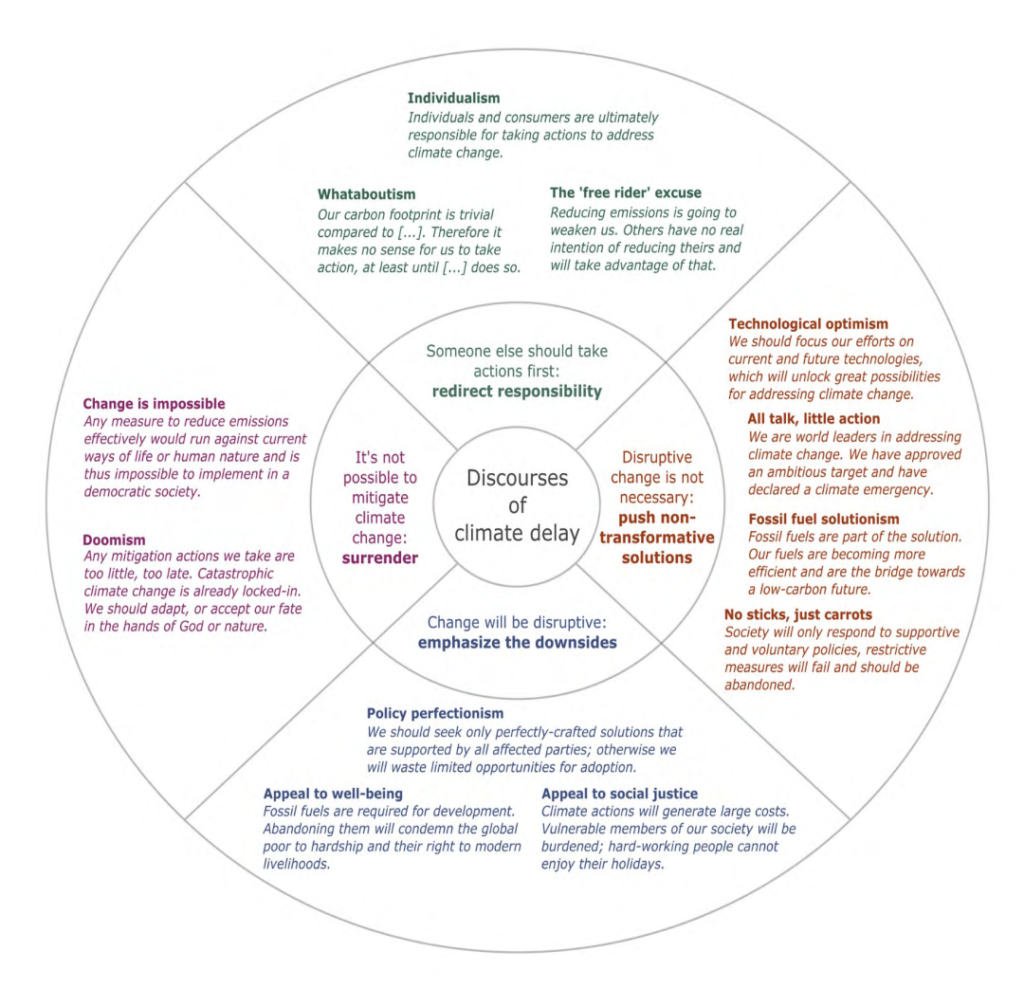

And I would recommend people to the report where there is a great graphic on the discourses of climate delay. Take us through those just a bit. You just mentioned one in particular, this sort of argument about the idea that disruptive change is not necessary. What are some of the others?

Jennie King:

I mean, it's a beautiful and terrifying taxonomy because it's so simple in its effectiveness. One column of this really is to subsume climate policy and climate change as an issue into the culture wars, and therefore situate it in themes around power and agency and state-citizen relations. So one of the major lines of attack that you see, and this was where most of the volume of content in and around the COP26 summit last year was focused, never really talked about the substance of climate at all. It wasn't interested in carbon markets versus other forms of mitigation and adaptation strategies. Instead, the argument being made was that climate negotiations in and of themselves are illegitimate because the people involved are elites or liberals or members of the new world order, or are committed to conspiracy theories like the great replacement and the great reset. And their entire goal is to use the pretext of climate change to enact a form of green tyranny that infringes on your civil liberties and fundamentally changes the parameters of your life.

Source: S., Creutzig, F., . . . Steinberger, J. (2020). Discourses of climate delay. Global Sustainability, 3, E17.

So that is one major body of content that is circulating across social media. Another key discourse of delay is what I would call absolutionism, which is a term that we potentially coined in this document. It's not that common. I guess it's similar in a way to whataboutism, which is the idea that, "Well we, insert country, don't need to act because actually, insert other country, is a worse perpetrator in this space." And in the US particularly you will see that argument being framed around China and India. So the GOP or Republican congressmen at the big oil hearings that took place last year, a number of them said, "Well, why is the US taking these severely punitive actions to introduce net zero targets that are going to destroy livelihoods and are going to raise cost of living for the American people and are going to punish our historic oil and gas sector, when China are actually bigger polluters than us, and they're not doing enough?"

And so it creates this false either/or paradigm, zero sum paradigm, which is totally counter to the Paris Agreement that says everybody needs to be acting. It is not mutually exclusive. Every single country needs to have strong, nationally determined contributions. And instead it just pushes the responsibility and the accountability onto some villain elsewhere in the globe. That's another key discourse of delay.

And there may be one other that I'd mentioned, because I think it's so relevant to the greenwashing issue, is what we sometimes call technological utopianism. So it's the idea that, "Look, don't worry about climate change. We've got it sorted. We, usually the fossil fuel industries, are going to introduce a silver bullet solution such as carbon capture and storage, that means we don't really need to have any substantive efforts taking place elsewhere. We don't really need to have an energy transition. We don't need to stop emitting carbon and methane and other dangerous gases. We don't need to change consumer behavior. Because actually we're just going to suck all of our emissions out of the atmosphere." Using a technology which isn't really substantiated by the science at present, and certainly doesn't exist at nearly the scale or the sophistication to solve the problem in this present moment. But it creates a good news story, which is really compelling for citizens who are terrified about climate change and want to be told that this is a solvable issue.

Justin Hendrix:

So you move on to kind of documenting the social media ecosystem, the role of the social media ecosystem. This is the problem of disinformation, of course we've been talking about it now for years, ISD's built up an incredible expertise around it as have a number of the other firms that you reference in here. What do you regard as now the sort of social media platform's responsibility to this problem? And how have you sort of... I guess I'll ask, and how do their policies differ?

Michael Khoo:

Well, I think this is the problem, is it's one thing to have a level playing field for a debate. It's another thing when one very small group of people get an outsize footprint in that debate. And that's what you have, the traditional 80/20 rule. It seems much more online and on social media platforms like a 95/5. Where you have, again, a dedicated group of climate denialists who are now getting married together with the culture wars. And certainly our documentation saw the marriage of QAnon and climate denialism as a group that were... A Venn diagram that became a circle. And you've seen that really increase over the last four years from being a bit of a disparate issue to it really glomming onto every other hot topic for the conservative social space.

And so then you think about what have the platforms been doing about that to reduce the virality? And in fact, what they do is increase the virality. They give an artificial megaphone to those groups so as to really, I think, fundamentally threaten freedom of speech. Because you talk about this debate in America where people are talking about it in an individual sense, but if you talk about it in the collective sense of freedom of speech the broad majority who believe in climate change, who believe in many solutions, are completely getting drowned out by this idea of a false windmill, or the idea of planes stacked up at Glasgow. All these false images that just get flooded.

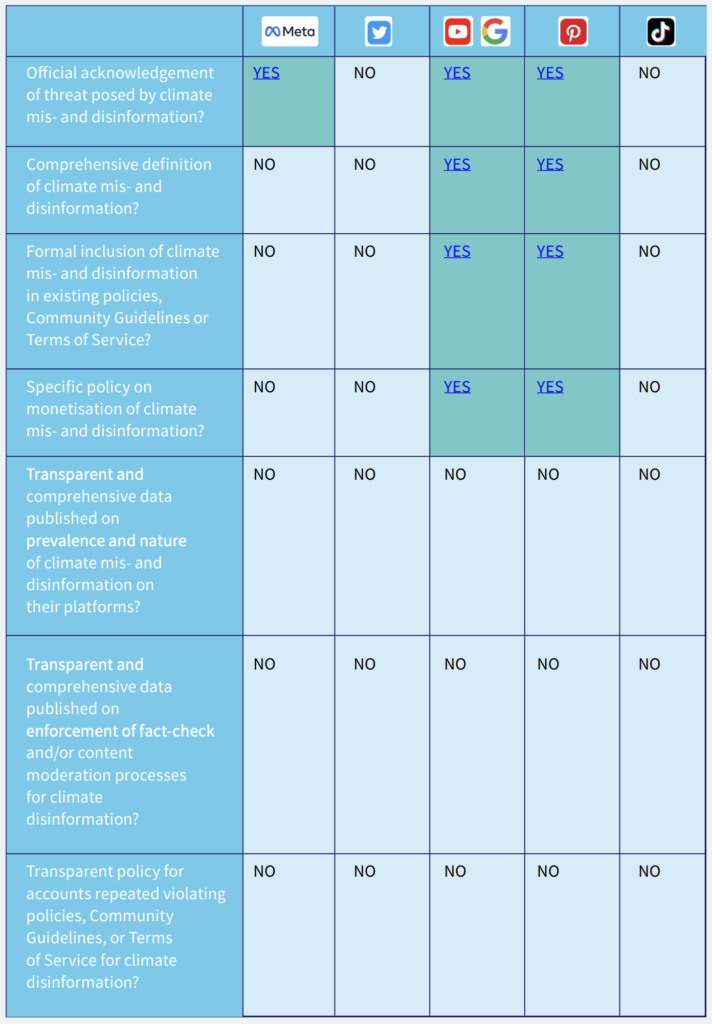

So you look at what the platforms have done over the last year, and I will say there's been a bright light in the whole movement, which generally is a bit of a downward trend. But there's been a bright light in some of the corporate movements in that they have all taken some actions over the last year. So in just 12 months we've had Twitter, Google, Facebook, and most recently Pinterest, coming up with probably the gold standard of the different social media platforms, all taking certain steps towards limiting climate disinformation.

And the one great thing I think that we can feel positive about is what we have done is remove the social license to spread climate disinformation from the platforms. I think they all would agree to that publicly. The question now is the details, and boy are there a lot of details. But we've seen these smaller actions, like Twitter came up with a topic area, which is a moderated discussion area. And it really is a fantastic unsung story of success in the social media realm, that they created a reasonable conversation that also has a really high engagement. So it belies some of this, "We can't do moderation because people only want a crazy toxic conversation." People don't, even outside of advertisers who don't want to be a part of that. There've been really great successes from some of the companies that we're hoping adds up to a momentum that we build this evidence base to show all the examples, and ideally are pushing them to go further over the course of the next year.

Jennie King:

If I could add a couple of quite stark statistics that I think really evidence the problem of what's taking place on social media. For us, one of the biggest recommendations for companies is that they enforce their preexisting or introduce robust policies around repeat offenders. And the argument that we are making is that this would actually have a force multiplier effect, because the people who we found were gaining the highest amount of engagement and interactions on climate skeptic and climate denial content were more often than not also perpetrators in other harms. And that could be everything from anti-vaccination rhetoric or denial of the COVID-19 pandemic as a phenomenon, all the way through to very extreme movements that I've mentioned previously, like the great replacement or QAnon. And even in some cases totally unacceptable discourse like Holocaust denial, which is completely restricted under existing terms of service and community guidelines.

To give you one example, we looked at 16 super spreader accounts on Twitter who have great organic reach, very high follower bases and the ability to galvanize a large audience, in just the month before, during and after COP26. And they had something like 500,000 active interactions with their climate related posts during that time. Now those 16 accounts outperformed 148 of the other prominent climate skeptic accounts and actors in this space. So it shows just how disproportionate the influence of this tiny group of people can be on the overall discussion.

And then equally on Facebook, they often tout their climate science center as being the flagship initiative that's come out of the platform. And every time you produce a piece of research they'll say, "Well we're doing loads. We've created this bespoke content hub that steers people towards authoritative content. And it has X number of visitors per day." But we compared the seven or eight pages that are in that hub with seven or eight pages that have a track record and a history of spreading climate skeptic and delay-ist narratives. And we found that during COP the disinformers outperformed the climate science center by an order of 12. So they're 12 times more popular, even when the platform is pushing verified and credible sources. So it just shows that the fundamental architecture of these systems is still rewarding the misleading content, the sensational content, the incendiary and polarizing content, rather than the nuanced and trusted science.

Justin Hendrix:

One of the things that you point out in the report, of course, is that there's still an enormous amount of money in this problem. There's huge sums of money being spent on paid advertising by the fossil fuel industry, and all manner of other forms of influence I'm sure. How do you disentangle the role of political elites who are in hock to fossil fuel interests, the fossil fuel interests themselves, and the role of the platforms? 'Cause clearly there's some separation.

Jennie King:

There is part of this that is about monetizing an industry, that kind of connects at least two of the groups that you talked about there, which would be the tech companies and the carbon majors as we call them. So the 100 companies who've been responsible for 70% of emissions historically. And there was a study done by our partner Eco-Bot.Net, which found that 16 of those companies spread 1700 adverts in the first nine months of 2021, just on Facebook. And those got 150 million impressions. But most importantly for me, it made $5 million of revenue for Meta as a parent company.

Now that system has to change. We need to remove the permission structure for those who are actively trying to greenwash this debate, or create an impression of false solutions that they're able to use these products and services to reach the maximum audience.

The other part of the ad tech industry that I really don't think gets enough attention and is quite opaque, is the fact that misinformation sites... So take something like Breitbart, whose editorial line is absolutely unequivocal. They don't believe that climate change exists. They don't believe that we should take action on it. That there are brands who are continuing to give them, unwittingly or not, advertising revenue by having their content shown next to these articles. And there was a study that was done last year which estimated that $2.6 billion a year are spent advertising on mis and disinformation outlets.

Now brands in the past have claimed that they didn't know that was happening, but now I think they're either woefully or willfully ignorant of the problem. Because there is enough evidence happening and people like Sleeping Giants or Check My Ads or the Conscious Advertising Network that are doing advocacy in this space to say, "You need to use your leverage as major investors in the online ecosystem to say, "This is not acceptable and we are no longer willing to fund it."" So I think those are two key parts of the architecture that need to change immediately.

Michael Khoo:

And we'll just add another study that another one of our coalition partners did, InfluenceMap, which was leading into the Build Back Better debates in the US last fall. And they tracked a huge uptick in spending directly from the fossil fuel industry in August, a million dollars that they spent in just that month leading into the debate to influence it. And this is just more direct evidence of the sort of multi-layered way that they're approaching their campaigns. And all of this to us, you asked about like, "How do you separate the layers?" On one hand you don't even really need to, or want to, from a platform perspective. You want to say, "Are you allowing a reasonable debate between your ad policies and your organic policies?" They all really need to fall under the same policy to say you're not going to allow the spread of climate denial on your site. And it's just only more egregious when they're profiting from it.

Justin Hendrix:

You have recommendations for government bodies, multilateral bodies, also for tech companies. I suppose, having listened to you, I might expect more almost sort of censorious representations or more representations about removal of specific content. There's not so much of that. You seem to focus more on coming to terms about what a definition should be, criteria that could permit the tech firms to perhaps arrive at better implementation of their standards, terms of service. I guess I want to ask about the sort of tension between some of these recommendations and free expression. How did you sort of think about that as you put the report together?

Jennie King:

I know that Michael is much better placed to talk about the free speech landscape, but just to give a comment on why we don't advocate for active removal of content in every space is that I do think it's really important to say that there are plenty of mechanisms at your disposal to reduce the prominence and the influence of disinformation that doesn't require removing people's ability to say things. Because we do live in liberal democracies and people do have a prerogative to hold opinions, but there is a separation between freedom of speech and absolute freedom of reach.

And that's where we've really consolidated these recommendations: in essence, mis- and disinformation don't matter if they don't have the ability to influence public opinion, and ultimately to hinder the passing of climate legislation that is based on good faith debate. But we don't want to get wrapped around the axle in this kind of false dichotomy about censorship, or who is or isn't allowed to use social media. Unless people are saying things that are active incitement to violence, or really do violate terms of service and therefore should be deplatformed. That's a separate matter around violent and extremist content.

But in this space I don't think that removal is a useful framing for this conversation, because as we were talking about earlier, this is actually about algorithmic amplification. It's about repeat offenders and the punitive actions that can be taken to downrank content or to add labels and interstitials. And it's about reducing the amount of oxygen that those accounts and those narratives are taking up in the online space.

Michael Khoo:

And to build on Jennie's point, all of these companies have already got policies that say to some degree that this spread of disinformation is not supposed to happen. So on first level, enforcing the community standards that not only they came up with, they have told advertisers they're going to have on their platform. There's a reason why there's not a lot of advertising on 4chan, right? People want to go to a place that has a reasonable conversation and has private companies. They've declared they want to have that space. What we're seeing is that we're lacking the transparency to see whether you're telling the truth. And in fact, what you find out from every whistleblower from Sophie Zhang to Frances Haugen is every time you actually lift up the lid it's far worse than you ever thought it was with your anecdotal evidence.

All these studies that we did, which really take a ton of nonprofit time, are just documenting what exists at the turn of a switch, internally. They have all the numbers that we want right there, ready for display, but they're hiding them. So I think, to step back and think, what do we expect out of companies in America? And we have a long history of basic transparency. And if you take something like the airline industry, the worst case scenario, a plane goes down, what do we do? We look for the, strangely termed, black box. And the black box is anything but a black box. It's actually a transparent device that tells people the worst thing that could ever happen. It gets sent directly to regulators. It gets shared with the public. It gets shared with all other industries.

I mean, that is the exact opposite of what we have. When we find a problem on social media platforms they deny that it ever happened. And then when you say it happened they say it's much less, and we know how much better it was. Or any amount of lies. And then as you see, they go before Congress like Mark Zuckerberg has, and directly lie about all of these things. And they're held to no account. I mean, the idea of how far above the law these people think they are. And you put Elon Musk on that same list of people who just openly lie to flout the process of trying to regulate any piece of the tech industry.

What we're talking about is act just like your standard food processor or baby seat manufacturer. If you have a harm on the platform of the product you produce you have to report that transparently. And then we can have a discussion about how much in the margins we need to be, recalling that baby seat or tweaking the algorithm. Again, all of these things, these algorithms of amplification, they're designed by humans, right? They're not just something that happened to us. Every one of those lines of code is a value choice. And they're saying that they have certain values, and every time we poke slightly inside we can see that it represents none of that. So transparency, it becomes your first waypost to then have a reasonable discussion to Jennie's point of, repeat offenders who have over and over gone against the community standards that they want, that everybody wants. Why are those people on a special VIP list like they are at Facebook?

Jennie King:

This really does align with what's happening in the European context with the Digital Services Act and the requirement for there to be risk audits and regulatory oversight that is independent from the companies, that tries to reconcile some of the tensions that platforms have claimed in the past about providing access to data without compromising user privacy, and also without compromising proprietary intellectual property within those companies. But that there is precedent for this. Facebook tried to launch something called the Social Science One initiative a few years ago, and it ended up being unfortunately a bit of a damp squib because of the pace of the disclosures, the type of the disclosures. But the idea is that you have tiers of vetted institutions, either within the political architecture or from the research and the academic space, that have different levels of access, including to sort of what we call dummy data.

So anonymized data that allows you to get platform level insight into trends, without knowing anything about the specific individuals involved or being able to identify certain perpetrators, but that you can see the signals of what the disinformation playbook looks like. And I would also say that there is a document, I haven't been able to read the full 180 page epic yet, but the European Digital Media Observatory, EDMO, which came out of the tech regulation process in the EU, just released a big guide on how to do data disclosure from companies without compromising GDPR. And I think that that is a comprehensive effort to say that this is a doable process, that we can't have the same level of excuses that have been used previously and solely rely on voluntary disclosures and efforts, which was the case under things like the Code of Practice on Disinformation, where we were saying, "All right, well we'll just allow the platforms to release whatever they're happy to release in quarterly reports." And saying, "No, we need something that is a lot more routine and systemic, and that involves third party partners."

Justin Hendrix:

Of course I've covered the EDMO report at Tech Policy Press, as well as the fight more broadly for transparency legislation, not just in the EU but also here in the US where we have a couple of pending bills around that prospect. And I did find it interesting that one of the things you're after, not just general transparency, but you edge in what I think is probably a disinformation researcher's dream, which is around enabling API image based searches. Which I'm sure if you're wanting to study Instagram or YouTube or some of the more image heavy or video heavy sites is important to you, Jennie.

Jennie King:

So important. Michael has mentioned a couple of times the Texas blackout phenomenon. That was a viral campaign that was built on one or two meme-ified images. An image that we managed to attribute back to 2015 that claimed to show a wind turbine being deiced by a diesel helicopter, and was therefore making the claim that renewable energies aren't even renewable. Not only are they unreliable but you need fossil fuels to maintain them. It was entirely misattributed and decontextualized. But it was almost impossible for us to quantify and therefore respond in real time to those kinds of threats, because the API access does not allow you to do image based searches. So the amount of labor-intensive activity that's happening on the researcher's side in order to put a marker in the sand, and more importantly to have crisis mechanisms in place to actually respond to these things and make sure that they don't become the defining narratives of key events, is so important.

And I think that that will become more and more the case, because many of these disinformation campaigns are transnational and therefore they cross language barriers and they cross geographic contexts. And an image-based effort is far more effective in that international space, because it doesn't rely on text. It also doesn't rely on critical thinking. It kind of bypasses the necessity to engage with substance, and instead it hardwires straight into the more emotive clickbait-y forms of online content. So it has the potential to cross a critical mass of virality and also of concern much faster, in a way, than a traditional tweet or a traditional Facebook post. And at the moment we don't really have the tools at our disposal to actually track those trends in real time.

Michael Khoo:

And I think while it'd be great to have this evolution in our side's monitoring technology, I do want to say we went to all the companies and said, "This is a group of people who are going to do something like this." We went to them literally four weeks before that happened and we said, "Watch out for that, watch out for that within this group of people." And indeed it was exactly those people who spread that, were allowed to have the virality. So even before we get those sorts of innovations, the vast majority of that problem could have been stopped. You had, in Texas, the local mainstream media did a fantastic job actually of quickly, within 24 hours debunking those images. But they were allowed to be populated, even though we told the platforms, "Watch, it's happening right now, stop it." They have the easy power to stop that. And they completely ignored it at the time. And continue to as we have future events.

Justin Hendrix:

I'll ask just one last question. Has producing this report, looking at this problem so deeply, has it left you more or less optimistic we'll be able to get the, I guess, dialogue under control in a way that perhaps can move even these, I suppose, slightly ambivalent democracies towards doing something about climate change?

Michael Khoo:

Well, I think I actually remain optimistic about the social media side, despite all this conversation. Because if you look broadly, solving climate change itself is really, really hard. Comparatively, solving climate change disinformation is actually quite simple. We know where it starts. We know how it's being artificially created and amplified. We know the platforms where it's worst. And in particular, the actors who have a vested interest in pushing it forward. Climate change is really a much harder problem to solve. So when we're faced with something so existential for our planet, for all the people, for future, let's take the low hanging fruit where it is, which is to stop making the already difficult problem much, much worse.

Jennie King:

Yeah. I mean, working at an organization like ISD you're in a constant battle between optimism and a sense that everything is kind of intractable. But I have to say that I agree with Michael, because it feels like the roadmap is there. And the seven recommendations in the report that we published are part of this equation. And we really feel that combined they would do a huge amount to reducing the impact and the prominence of this kind of content. And that we are starting on the front foot because we know that there is that public consensus. What it requires is the buy-in and the attention on this issue.

But something that's really given me a sense of positivity is that we've had institutions like the UNFCCC, which is the UN agency that runs the climate summit's delegations at the COP, and a number of other actors say to us, "12 months ago this was not on the agenda. We had never heard a single person talk about climate disinformation in the public debate." And now it's everywhere. Around COP26 alone our coalition in the war room got 150 pieces of media in the mainstream press globally. And I'm talking everything from India to Sub-Saharan Africa to Europe, to North America, to Asia Pacific. And that level of momentum and the kind of meteoric picking up of pace to me is a sign that we've already closed the gap between trying to socialize people to this issue and raise awareness about it, and actually instituting some sort of meaningful response.

Justin Hendrix:

That is an optimistic place for us to end, with a major problem, major challenge, maybe major global challenge. But it sounds like some progress towards a specific piece of it. So I thank the both of you for your effort on this report and for talking to me today.

Michael Khoo:

Thank you so much for having us, great to speak with you.

Jennie King:

Thank you.
