Researchers Query Misinformation and Interventions to Address It

Justin Hendrix / May 10, 2022

Disinformation! Misinformation! What to do about it? Academic researchers continue to chip away at the question. Three new studies look at issues around the credibility of news sources and the impact of factual corrections in social media news feeds.

Not All Misinformation Is Created Equal

A paper published this week in the Nature Portfolio journal Humanities and Social Sciences Communications by Carlos Carrasco-Farré of ESADE Business School (Universitat Ramon Llull) in Barcelona is titled “The fingerprints of misinformation: how deceptive content differs from reliable sources in terms of cognitive effort and appeal to emotions.” Carrasco-Farré analyzed more than 92,000 news articles to “uncover the features that differentiate factual news and six types of misinformation categories” in order to then “assess the readability, perplexity, evocation to emotions, and appeal to morality” in them.

Carrasco-Farré’s analysis turns up some interesting results. For instance, according to his math “fake news” is “on average, 18 times more negative than factual news.” False news is less “lexically diverse” than factual news, and rumors are even less so. False news appeals to “moral values 37% more than factual content,” and it is easier to process cognitively, a finding consistent with past research.
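To make “lexically diverse” concrete, here is a minimal sketch of one common proxy, the type-token ratio: the number of distinct words divided by the total number of words. This is an illustrative assumption, not necessarily the measure used in the paper, and the function name and example sentences are made up for demonstration.

```python
import re


def type_token_ratio(text: str) -> float:
    """Share of distinct words among all words; 0.0 for empty text.

    A simple, assumed proxy for lexical diversity. It is sensitive to
    text length, so it is only meaningful for texts of similar size.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 0.0
    return len(set(tokens)) / len(tokens)


# Made-up sentences, not drawn from the study's corpus.
repetitive = "shocking shocking news they lie they lie they lie"
varied = "officials released updated figures after reviewing regional hospital records"

print(type_token_ratio(repetitive))  # 4 distinct words out of 9
print(type_token_ratio(varied))      # every word is distinct
```

A lower ratio means the same words recur more often, which is the sense in which a text is less lexically diverse.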

Carrasco-Farré says understanding such quantitatively discernible features of news items may “help technology companies, media outlets and fact-checking organizations to prioritize the content to be checked,” and that the findings may suggest “promising interventions for early detection and the identification of check-worthy content,” among other benefits.

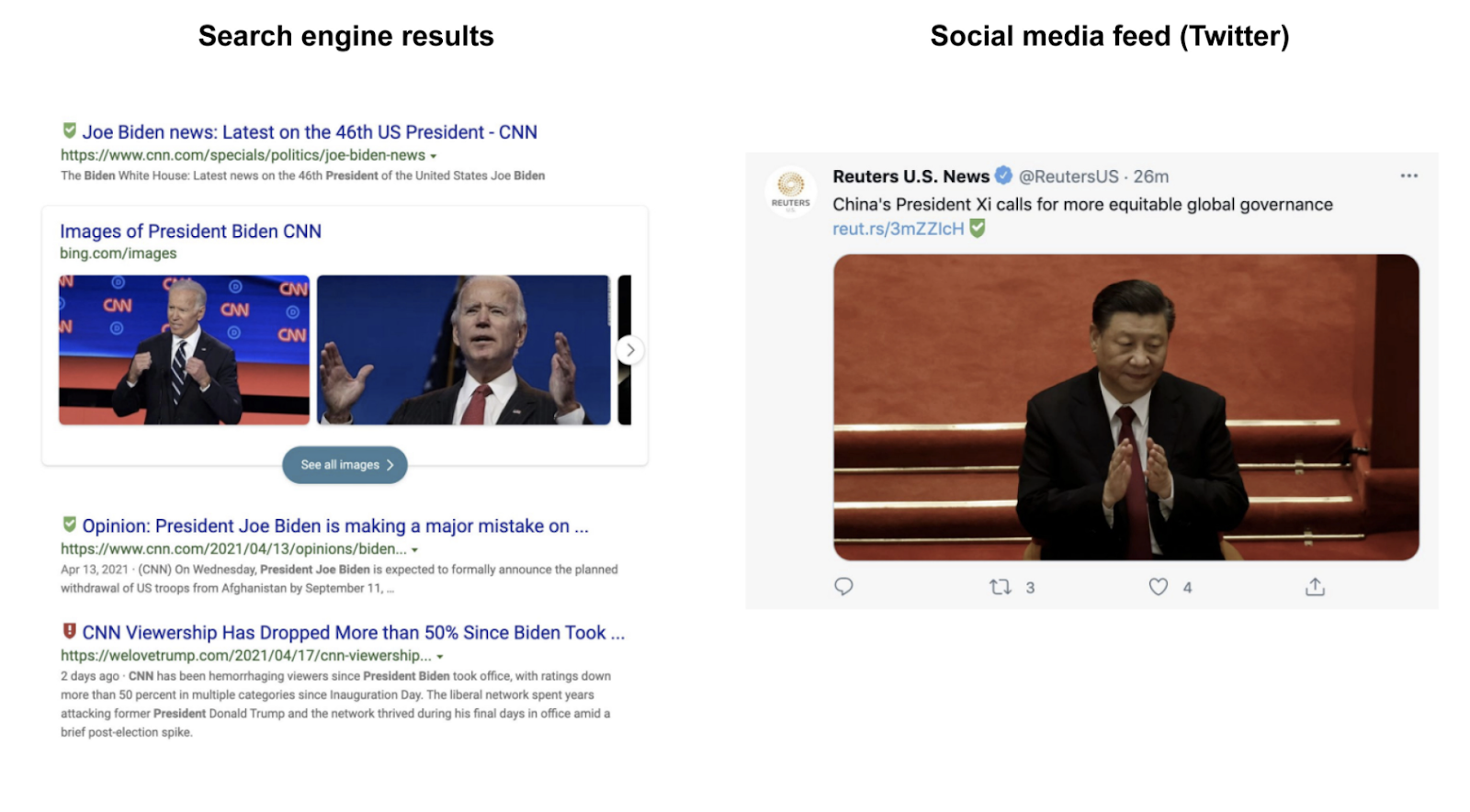

Labeling News Credibility May Help Heaviest Consumers of Misinformation, At Least

One idea to address the problem of misinformation and disinformation is to append credibility labels to news sources to send a signal to readers about the quality of the content. In 2018, media entrepreneurs Steve Brill and Gordon Crovitz launched NewsGuard, which aims to provide “transparent, accountable trust ratings for thousands of news outlets.” NewsGuard’s browser extension inserts shield symbols on search results and in social feeds to indicate source quality. For instance, a green shield indicates a reliable source, a red shield indicates an unreliable source, and a gray shield indicates a source with user-generated content. But do such labels work?

Last week, researchers at NYU’s Center for Social Media and Politics (CSMaP) published a paper in Science Advances titled “News credibility labels have limited average effects on news diet quality and fail to reduce misperceptions” that assessed “the impact of source credibility labels embedded in users’ social feeds and search results pages” using NewsGuard’s product.

The CSMaP researchers “randomly encouraged” two waves of more than 3,000 survey participants to install the NewsGuard browser extension, and then tracked their behavior. The goal was to determine whether the labels shifted “downstream news and information consumption,” and whether such a shift might “increase trust in mainstream media and reliable sources” or “mitigate phenomena associated with democratic dysfunction,” such as affective polarization and political cynicism.

According to the researchers, credibility labels had no impact on average: they did not change consumption patterns and did not affect trust in sources more generally. But for those who already binge on low-quality information, the labels did improve consumption patterns, leading them to visit more credible sources.

Unfortunately, the researchers find “no support” for the proposition that exposure to labels “could help to alleviate pathologies such as affective polarization and political cynicism associated with consuming, believing, and sharing news from unreliable sources,” after all. The researchers conclude that “subtle contextual information” like the NewsGuard badges may simply be insufficient to “shift perceptions of source credibility” in a more broadly substantial way.

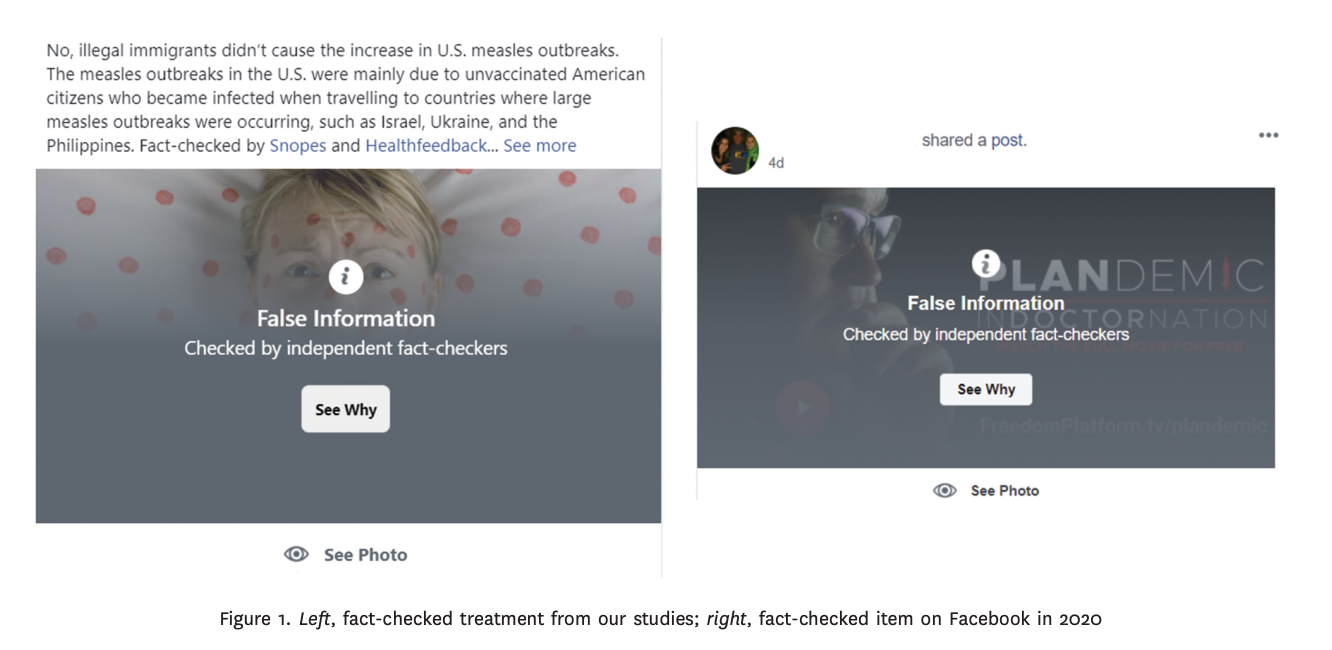

Corrections Effective in Rebutting Misinformation on Experimental News Feed

In a preprint published last week titled “Political Misinformation and Factual Corrections on the Facebook News Feed: Experimental Evidence,” researchers from George Washington University and Ohio State set out to “advance our understanding of the effectiveness of factual corrections on social media” by fielding experiments on a research platform “engineered to resemble Facebook’s news feed.” This test platform was designed by Avaaz, a civil society advocacy organization that has fielded campaigns related to the harms of social media platforms. Naturally, it allowed the researchers more flexibility than Facebook itself.

On the test platform, users were first exposed to a mock social feed that included “fake stories” that “had actually circulated on Facebook,” and then to a second feed in which factual corrections were shown at random to users who had seen the fake stories. The participants were then quizzed to measure their factual beliefs about the items. A second experiment “investigated whether results from the first would be robust to changes in correction design and outcome measurement,” changing the visual appearance of the correction and the wording of the evaluation scale.

The researchers find that “even on a platform that approximates Facebook’s news feed, in which subjects were presented with politically incongruent fact-checks, corrections increased accuracy” on evaluations. How well do these results apply to Facebook itself? The researchers urge caution: while the simulated environment may look like Facebook, “[c]onspicuously absent, for example, is the appearance of real world friends and connections.” That social context may in fact lead to different results, but nevertheless, the research suggests corrections can gain some ground against misinformation in social feeds.

- - -

Taken together, these studies reinforce the idea that addressing the problem of misinformation is a massively complex undertaking. Current and proposed interventions need nearly as much study as the problem itself, both to prove efficacy and to help govern the allocation of resources by platforms, media, civil society and governments. Watch this space.
