Researchers Identify False Twitter Personas Likely Powered by ChatGPT

Justin Hendrix / Aug 3, 2023

Justin Hendrix is CEO and Editor of Tech Policy Press.

When it comes to imagining the harms of generative AI systems, the potential of such technologies to be used for disinformation and political manipulation is perhaps the most obvious. At a Senate Judiciary subcommittee hearing in May, OpenAI CEO Sam Altman called the risk that systems such as ChatGPT may be used to manipulate elections "one of my areas of greatest concern." In a paper released in January, researchers at OpenAI, Stanford and Georgetown pointed to "the prospect of highly scalable—and perhaps even highly persuasive—campaigns by those seeking to covertly influence public opinion."

Now, researchers Kai-Cheng Yang and Filippo Menczer at the Indiana University Observatory on Social Media have shared a preprint paper describing "a Twitter botnet that appears to employ ChatGPT to generate human-like content." The cluster of 1,140 accounts, which the researchers dubbed "fox8", appears to promote crypto/blockchain/NFT-related content. The accounts were not discovered with fancy forensic tools (detection of machine-generated text is still difficult for machines) but by searching for "self revealing" text that may have been "posted accidentally by LLM-powered bots in the absence of a suitable filtering mechanism."

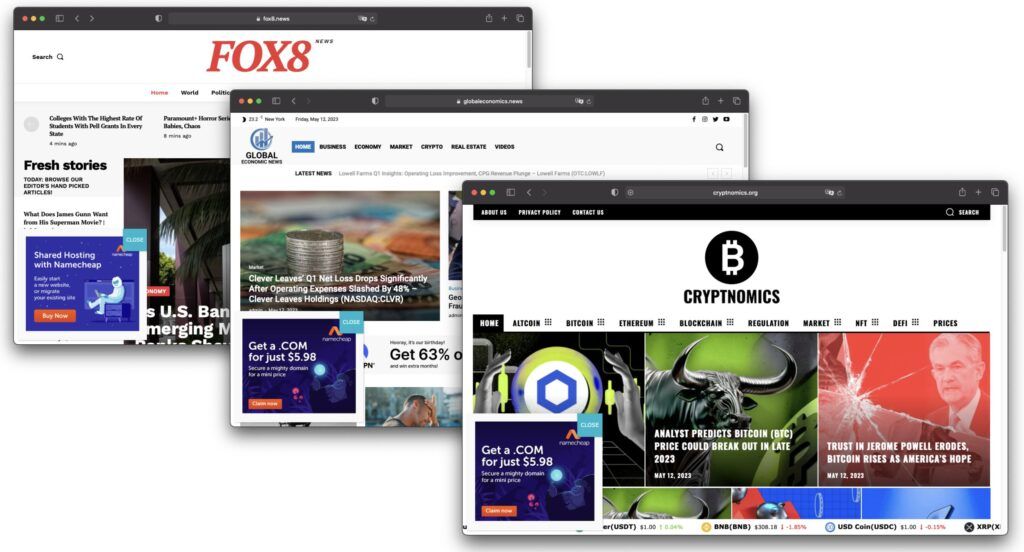

Using Twitter's API, the researchers searched for tweets containing the phrase 'as an ai language model' over the six-month period between October 2022 and April 2023. This phrase is a common response generated by ChatGPT when it receives a prompt that violates its usage policies. The search produced a volume of tweets and accounts, from which the researchers were able to discern relationships. The fox8 network appeared to promote three "news" websites, likely all controlled by the same anonymous owner.
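To make the idea of searching for "self revealing" text concrete, here is a minimal sketch in Python. It is not the researchers' code and does not call Twitter's API; it assumes the tweets have already been collected into a list of dictionaries with hypothetical `user` and `text` keys, and simply groups tweets containing the phrase by the account that posted them.

```python
from collections import defaultdict

# The phrase the article says the researchers searched for.
SELF_REVEALING_PHRASE = "as an ai language model"


def find_self_revealing_accounts(tweets, phrase=SELF_REVEALING_PHRASE):
    """Return {account: [matching tweet texts]} for tweets containing the phrase.

    `tweets` is assumed to be an iterable of dicts with "user" and "text"
    keys; that record shape is an illustration, not Twitter's API format.
    """
    needle = phrase.casefold()
    matches = defaultdict(list)
    for tweet in tweets:
        # Case-insensitive substring match on the tweet text.
        if needle in tweet["text"].casefold():
            matches[tweet["user"]].append(tweet["text"])
    return dict(matches)


# Made-up sample data for illustration only.
sample_tweets = [
    {"user": "bot_a", "text": "As an AI language model, I cannot comply with that request."},
    {"user": "bot_a", "text": "Crypto is the future!"},
    {"user": "bot_b", "text": "I'm sorry, but as an AI language model I can't do that."},
    {"user": "human_c", "text": "Just had a great coffee."},
]

flagged = find_self_revealing_accounts(sample_tweets)
for account, texts in flagged.items():
    print(account, len(texts))
```

A simple match like this will also flag people who quote or joke about the phrase, so its output is best treated as a list of candidates for closer inspection rather than a list of confirmed bots.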

When the researchers applied tools to detect language generated by a large language model to the corpus of tweets from the fox8 botnet, the results were disappointing. OpenAI's detector sent some useful signals, but it classified tweets from the botnet as human-generated. Another tool, GPTZero, also failed to predict they were the product of a machine. The researchers see some promise in OpenAI's tool, but it's not yet up to the challenge of identifying text generated by OpenAI systems in the wild.

There is some hope. The researchers find that the behavior of the accounts in the botnet, likely due to their automation, follows a "single probabilistic model that determines their activity types and frequencies." So, whether they post a new tweet, reply or share a tweet, and when they engage in such activity, fits an observable pattern. Looking for such patterns, which also apply to other automated accounts, may help identify accounts powered by LLMs.
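One way to picture what a shared activity pattern might look like is to compare how each account splits its activity among original tweets, replies, and retweets. The Python sketch below is an illustration under stated assumptions, not the method used in the paper: the activity labels, the sample data, and the choice of total variation distance as the comparison measure are all hypothetical.

```python
from collections import Counter

# Hypothetical activity labels; the paper's own categories may differ.
ACTIVITY_TYPES = ("original", "reply", "retweet")


def activity_distribution(actions):
    """Return the share of each activity type in a list of action labels."""
    counts = Counter(actions)
    total = sum(counts[t] for t in ACTIVITY_TYPES)
    if total == 0:
        raise ValueError("no recognised activity for this account")
    return {t: counts[t] / total for t in ACTIVITY_TYPES}


def total_variation_distance(p, q):
    """Half the sum of absolute differences: 0 means identical, 1 means disjoint."""
    return 0.5 * sum(abs(p[t] - q[t]) for t in ACTIVITY_TYPES)


# Made-up timelines: two accounts with the same activity mix, one without.
bot_a = ["original"] * 5 + ["reply"] * 3 + ["retweet"] * 2
bot_b = ["original"] * 10 + ["reply"] * 6 + ["retweet"] * 4
human = ["original"] * 1 + ["reply"] * 9

dist_a = activity_distribution(bot_a)
dist_b = activity_distribution(bot_b)
dist_h = activity_distribution(human)

bot_gap = total_variation_distance(dist_a, dist_b)
human_gap = total_variation_distance(dist_a, dist_h)
print(f"bot_a vs bot_b: {bot_gap:.2f}")
print(f"bot_a vs human: {human_gap:.2f}")
```

Accounts whose distributions sit unusually close to one another would be candidates for closer inspection. A fuller analysis would also need to account for timing and posting frequency, which this sketch ignores.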

But such hope should be tempered by the reality that propagandists are just getting started using these now widely available tools. The researchers warn that "fox8 is likely the tip of the iceberg: the operators of other LLM-powered bots may not be as careless." As they point out, "emerging research indicates that LLMs can facilitate the development of autonomous agents capable of independently processing exposed information, making autonomous decisions, and utilizing tools such as APIs and search engines." No wonder Sam Altman is "a little bit scared" of what he's building.

"The future many have been worried about is here already," said one of the paper's authors, Kai-Cheng Yang, in a tweet. With elections looming in multiple democracies around the world in 2024, time is running out to put policies in place to mitigate the use of such systems to manipulate elections.
