Researchers find evidence Facebook policy to prevent vaccine misinformation produced only temporary progress

Justin Hendrix / Feb 9, 2022

Last July, U.S. Surgeon General Vivek Murthy took the stage to announce a warning about health misinformation.

“As Surgeon General, my job is to help people stay safe and healthy, and without limiting the spread of health misinformation, American lives are at risk,” he said. His advisory included multiple recommendations to the social media companies, asserting that “product features built into technology platforms have contributed to the spread of misinformation” and that the platforms “reward engagement rather than accuracy.”

Later that same week, President Joe Biden was asked what his message was to social media platforms with regard to COVID-19 disinformation.

“They’re killing people,” the President said. “Look, the only pandemic we have is among the unvaccinated, and that — and they’re killing people.”

In October 2021, documents revealed by Facebook whistleblower Frances Haugen showed the extent to which Facebook was engaged in efforts to understand the COVID-19 misinformation problem on its platforms, and a high degree of internal concern about its role in the propagation of anti-vaccine sentiment. The documents indicate the company knew COVID-19 misinformation was a much bigger and more intractable problem than it had publicly acknowledged.

Now, researchers from The George Washington University and Johns Hopkins have released a preprint study, Evaluating the Efficacy of Facebook’s Vaccine Misinformation Content Removal Policies, that would appear to support that conclusion, indicating the possibility that Facebook’s policy against vaccine misinformation has already been overwhelmed.

The overall goal of the study, which is not yet peer-reviewed, was to examine “whether Facebook’s vaccine misinformation policies were effective.” The researchers put forward four research questions:

- Did Facebook’s new policy lead to a successful reduction in the number of anti-vaccine pages, groups, posts, and engagements?

- Did its efforts improve the credibility of vaccine-related content and address the limitations of its prior policies?

- Was the company successful at reducing links between anti-vaccine Facebook pages?

- Did the company successfully mitigate coordinated efforts to spread anti-vaccine content?

Using CrowdTangle, the researchers gathered samples of hundreds of thousands of Facebook posts and engagements on a set of publicly available pro-vaccine and anti-vaccine pages and groups for comparison between two periods before and after “Facebook announced its anti-vaccine content ban” on February 8, 2021.

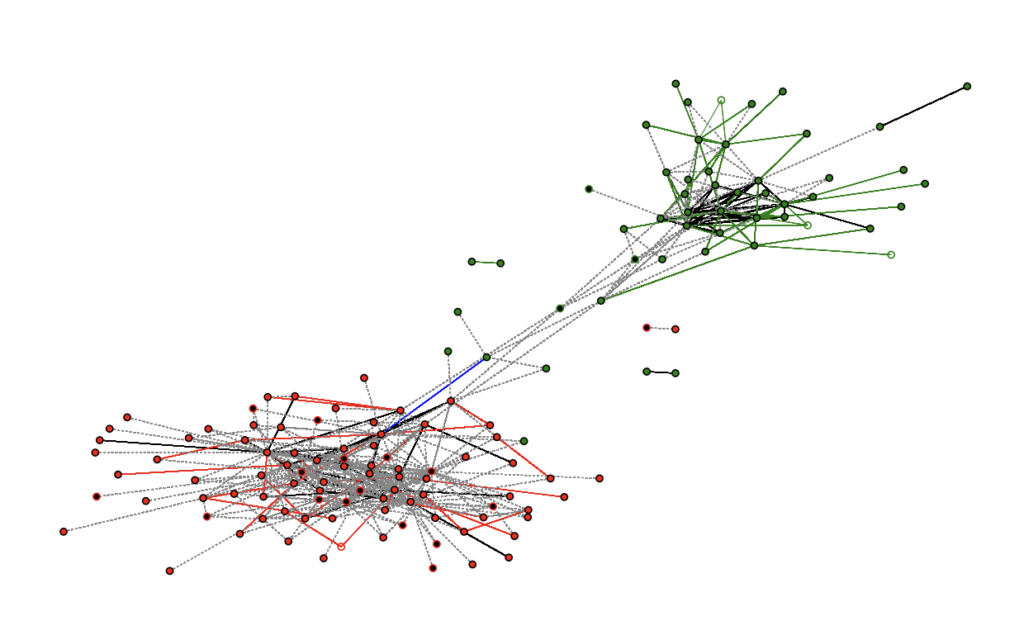

Analyzing links to URLs, the researchers compared the credibility of information sources posted in these pages and groups. Links between pages were assessed to understand the relationships on the platform, as were pages and groups that showed signs of posts being “routinely coordinated.”

Comparing the samples, the researchers were able to observe the impact of Facebook’s policy change and express it in mathematical terms, unveiling changes in the proportion and nature of posts and engagements and in the density and structure of the relationships between pages and groups. For instance:

- While anti-vaccine posts “still made up most of the posts in both pages and groups” in the sample set, the proportion of anti-vaccine posts in pages and groups was smaller after the policy was implemented, due to “the removal of anti-vaccine groups and pages.”

- Anti-vaccine pages were significantly more likely to have been removed than anti-vaccine groups when compared to the pro-vaccine sample.

- While posts and engagements in both anti-vaccine and pro-vaccine pages and groups decreased in the post-policy period, there is now evidence of recovery. “There are now more engagements in anti-vaccine groups than would be expected prior to the policy’s implementation” when considering the final sample.

- Contrary to the researchers’ expectations, the study found that the “fraction of posts with links to low-credibility sources -- and engagement with these posts -- has increased for anti-vaccine groups” across its samples.

- Results on the effect of the policy change on links between pages were mixed.

- The policy did appear to reduce coordinated link sharing between the pre- and post-policy periods. Most coordination takes place in groups.

Perhaps the most significant observation is that while Facebook “appears to have had success in curtailing some anti-vaccine content” after the implementation of its policy, “anti-vaccine content recovered during our period of observation.” The researchers project that the “gains made by these policies may be both costly” (there was collateral damage in the form of imprecise removal of non-violating information) “and temporary.”

What’s more, the researchers conclude that the way Facebook implemented its policy may have gone against its intent:

“Furthermore, it appears that Facebook’s efforts to remove anti-vaccine content may not have targeted the most egregious sources of information. Contrary to the intent of the policy, the proportion of links to high-credibility sources in pro-vaccine pages decreased, and proportions of low-credibility content in anti-vaccine Facebook assets increased. Thus, Facebook’s efforts to remove misinformative content may have had the unintended consequence of driving more attention to websites that routinely promote misinformation.”

These results appear to confirm what Facebook’s own researchers were seeing internally: the ability of the platform’s algorithms to identify and distinguish between “harmful and helpful content” seems limited at best. The researchers suggest that given these limitations, and the limitations of self-regulation, “external, transparent evaluations are therefore crucial to ensure success.”

Access to platform data would be necessary to get a full handle on the impact of the policy and Facebook’s mitigation efforts, said Dr. Mor Naaman, a professor of Information Science at Cornell Tech.

"It would be hard to tell how much of this activity moved to private groups or to other channels without access to data from the platform, which makes overall evaluation of policy impact difficult," said Naaman.

It is indeed a moving target, said Renée DiResta, technical research manager at Stanford Internet Observatory and an expert on anti-vaccine mis- and disinformation.

"We've observed anti-vaccine discourse moving out of groups that were traditionally focused on the issue and into the broader discourse in groups on the right. So even as platforms have taken action against anti-vaccine groups, pages and personalities, the overall volume of vaccine misinformation is hard to quantify, and its spread has been hard to contain."
