Regulators, Industry Ponder How to Integrate Online Safety Laws

Gabby Miller / Nov 17, 2023

Gabby Miller is a staff writer at Tech Policy Press.

On Monday, Nov. 13, hundreds of “parents and professionals” gathered at the InterContinental Hotel in Washington, DC for the annual conference of the Family Online Safety Institute (FOSI). This year’s theme was “New Frontiers in Online Safety,” covering content moderation, privacy policies and practices, and related issues such as AI and parenting.

The conference took place during a busy time for global online safety and digital rights legislation. Just a few weeks ago, the UK passed its Online Safety Act, which covers a wide range of illegal content that platforms must address and sets obligations around what their users, particularly children, see online. Then, less than two weeks ago, services designated as Very Large Online Platforms and Very Large Online Search Engines were required to publish their first transparency reports under the European Union’s Digital Services Act (DSA). And while there was renewed hope at the start of this week that the Kids Online Safety Act (KOSA) might get a Senate floor vote, Congress is no closer to moving the bill forward than it was last week.

While the US currently lacks both federal online safety legislation and federal privacy protection laws, what’s happening elsewhere will undoubtedly shape how platforms behave globally.

This was the backdrop for the one-day FOSI event. Sponsored by tech giants like Amazon, Google, and TikTok, it drew high-profile guests including keynote speaker Michelle Donelan, the UK Secretary of State for Science, Innovation and Technology; Federal Trade Commission (FTC) Commissioner Rebecca Slaughter; and Ofcom Group Director of Online Safety Gill Whitehead. Industry vendors and consultants, like Crisp and GoBubble, also spoke alongside public policy representatives from YouTube, Epic Games, and others.

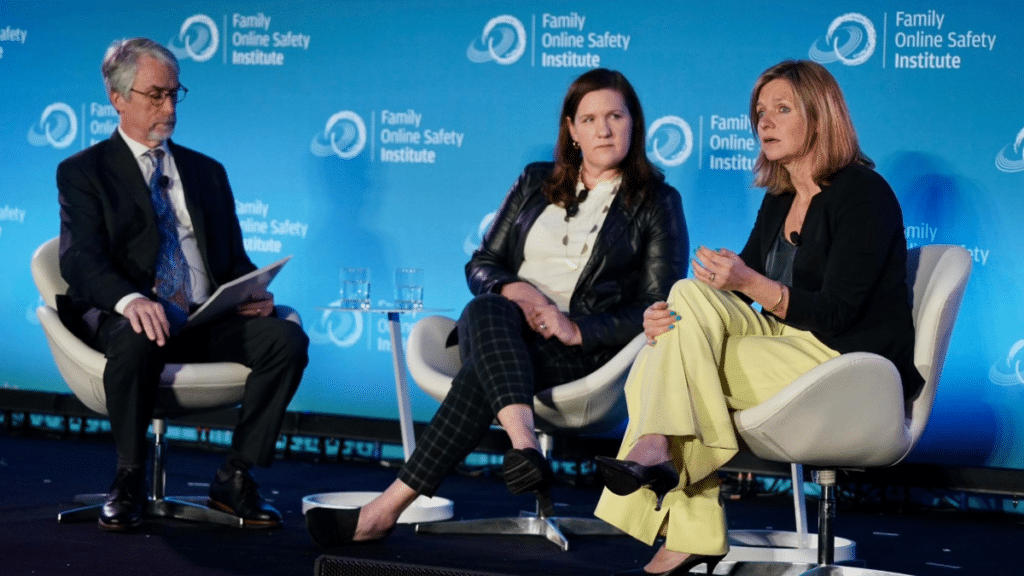

FOSI founder and CEO Stephen Balkam sat down with Ofcom’s Gill Whitehead and the FTC’s Rebecca Slaughter for a fireside chat about the path forward for online safety and privacy in the US and UK. Notable topics included coordinating the demands of existing legislation across jurisdictions, communicating with industry about compliance, and comprehensive data privacy legislation.

Ofcom’s Whitehead spoke about the importance of ‘harmonizing’ legislation, not just across different laws, like the DSA and the UK’s Online Safety Act (OSA), but also between government agencies and industry. “We say [the DSA and OSA] are regulatory cousins, they’re not twins. But they do share some things in common,” Whitehead said. “We don’t have powers to instruct folks to take content down, we’re not there to surveil and censor, we're there to improve governance, improve standards, improve safety by design,” she noted. “I think the onus is on us to explain what they have in common and also what the differences are.”

Whitehead also emphasized several times that Ofcom, which currently has a team of 350 people, is ready to “work with tech firms at large to really be effective” at compliance and risk mitigation, while also learning from industry about best practices for innovation and where firms have “different ways of achieving the same goals in terms of making children's lives safer.” Ofcom released a consultation earlier this month, the first step in a lengthy process to define how the law will be applied.

The FTC’s Slaughter picked up on this thread, explaining that harmony and directional convergence among international regulators are good not only for regulators but for industry, too. “It is how you foster coherent innovation, development, business growth, and opportunity, and we really want to see that,” she said. “The US hasn't had new legislation in this area recently, but there have been really important developments, many of which are consistent with some of the things that we're seeing across the ocean and around the country,” Slaughter added.

The two panelists also spoke about the importance of different jurisdictions being as aligned as possible on privacy and safety regulations, and about how to help industry navigate areas where they differ. While Slaughter acknowledged that it is at times complicated for industry to operate across multiple jurisdictions, she reminded the room that companies are not required to do business in any particular market. “It’s good for business and good for markets for companies to be able to operate in many places and grow innovation and access, but I also don’t think it’s an entitlement,” Slaughter said. She also reminded government leaders that their foremost concern should be their primary constituencies: the citizens they represent and work for. “And in this topic, it's the children, which is the most important thing as adults, as parents, and as caregivers,” she added.

One of Slaughter’s frustrations is that there’s still no comprehensive federal privacy bill in the US. “We’re all very attuned to children’s online safety here,” she said. “And I’m really seeing firsthand with my nine- and eleven-year-olds, I’m afraid of the things that they will have access to despite all my best efforts to impose digital literacy and have conversations with them.” While Slaughter plans to keep urging Congress to act, she believes it is incumbent on governments to apply current law better than they have in the past, while paying attention to global privacy and safety developments.

A series of eight ‘breakout sessions’ was held midday, including “A Worldwide Tour of Online Safety Policy” and “Online Safety Across State Capitals,” which featured panelists like the Australian eSafety Commissioner and an Illinois State Senator, respectively. One particularly timely, and at times heated, panel discussion was “Speech, Censorship, and Scale: Content Moderation in 2023.”

“I know we’re here in DC, where everyone rushes to regulate and control everything,” said NetChoice Vice President & General Counsel Carl Szabo. What NetChoice, a tech trade association that represents social media companies like Meta, Google, and TikTok, wants instead, according to Szabo, is to identify the gaps in existing law that need to be filled. He gave the example of Child Sexual Abuse Material (CSAM), where bad actors are now putting pictures of children on AI-generated bodies to evade the law, which as it stands applies only to real photos of children. “We can’t lay all the blame at platforms,” Szabo later noted, saying they “do a ton of” CSAM reporting, which goes “hand in hand with putting the criminals in prison.”

The panel's moderator, Unbossed Creative founder Bridget Todd, asked what role the government should play in transparency, content moderation, and more. Kate Ruane, director of the Center for Democracy and Technology’s Free Expression Project, prefaced her response by explaining that, as a US First Amendment lawyer, she has a “very deep skepticism of government involvement” and is aware that transparency legislation “can actually be a Trojan Horse” for governments to pressure platforms into moderating content in their favor. “That being said, the DSA exists. And it is going to have profound effects on online content,” Ruane said.

“The DSA and other types of regulation are not about ‘what is allowed’ and what is not, it’s more about how you do things and the procedures,” said ActiveFence CEO and Co-founder Noam Schwartz. This means looking under the hood of how shadowbanning works, and allowing users to flag and appeal issues they have around content moderation. “The DSA is going to be a major thing. The GDPR [General Data Protection Regulation] changed everything for the internet, and the DSA will also change everything. It is this thing that cannot be stopped,” he added.

“There’s another thing too, there is a governance asymmetry. And eventually platforms will be so much more powerful than any government,” Schwartz added. “The government can decide whatever it wants, but eventually, the head of policy in any given company, no matter how small it is, their decision goes in Singapore, or in India, or maybe another decision goes in the US.” This means the responsibility falls on platforms to do the right thing, if not from a legal perspective, then from a moral perspective, according to Schwartz.

While Ruane thinks much of the DSA, such as its requirements to audit speech moderation decisions or measure systemic risk, wouldn’t pass constitutional muster in the US, the platform policies and decisions made for the European Union could soon be applied in the US, too. “To some extent, what I think the government's involvement in content moderation should or shouldn't be, doesn't matter, even though I wish it did. It's already happening. We are already seeing governments involving themselves in content moderation in the EU. We’ve got some good safeguards, but there are also some significant concerns,” Ruane said.

Mike Pappas, CEO of Modulate, which provides studios like Activision with AI-driven voice chat moderation software, believes the best way to address regulatory fragmentation is likely through industry-informed government entities. “Given that we have the DSA, the UK Online Safety Act, the eSafety Commission out in Australia, the Singapore Online Safety Bill, we have other bills in India and other places, something needs to bring all those things together into a consistent story for industry and platforms,” Pappas said. He hopes increased engagement with government players will help give industry clarity on how to straddle those different lines, and “find some way to bridge that together into something more consistent and less fractured.”

Immediately following other panelists’ thoughts on global legislation, Szabo wrapped up the panel with a parenting metaphor for how the US government should approach online regulation. “I worry that just by saying, ‘Well, all of my friends are doing something silly, therefore I need to do something silly, too,’ is the right response. As a parent, I would tell my kids, ‘I did not raise you well, we need to have a serious conversation,’” said Szabo. “I feel like I have to say the same thing to our own existing government. Just because the rest of the world is making decisions that curtail freedom and individual liberty and free speech does not mean we need to catch up to them. I would argue we are miles ahead of them,” he added.

Few panelists at the conference made the case for staving off safety and privacy legislation entirely, but many struggled to offer concrete solutions for coordinating compliance across jurisdictions and industry, even in places where no such laws exist, like the US. The vendors in attendance, however, seemed more than willing to help provide the answers.
