Reading the Systemic Risk Assessments for Major Speech Platforms: Notes and Observations

Tim Bernard / Dec 20, 2024

Between December 17 and 20, Tim Bernard read a selection of the Very Large Online Platform (VLOP) systemic risk assessments released in late November 2024 in compliance with the Digital Services Act (DSA). Below, he shares high-level takeaways and interesting details from the Facebook, Instagram, YouTube, TikTok, and X reports.

1. Facebook

Structuring the Assessment and Report

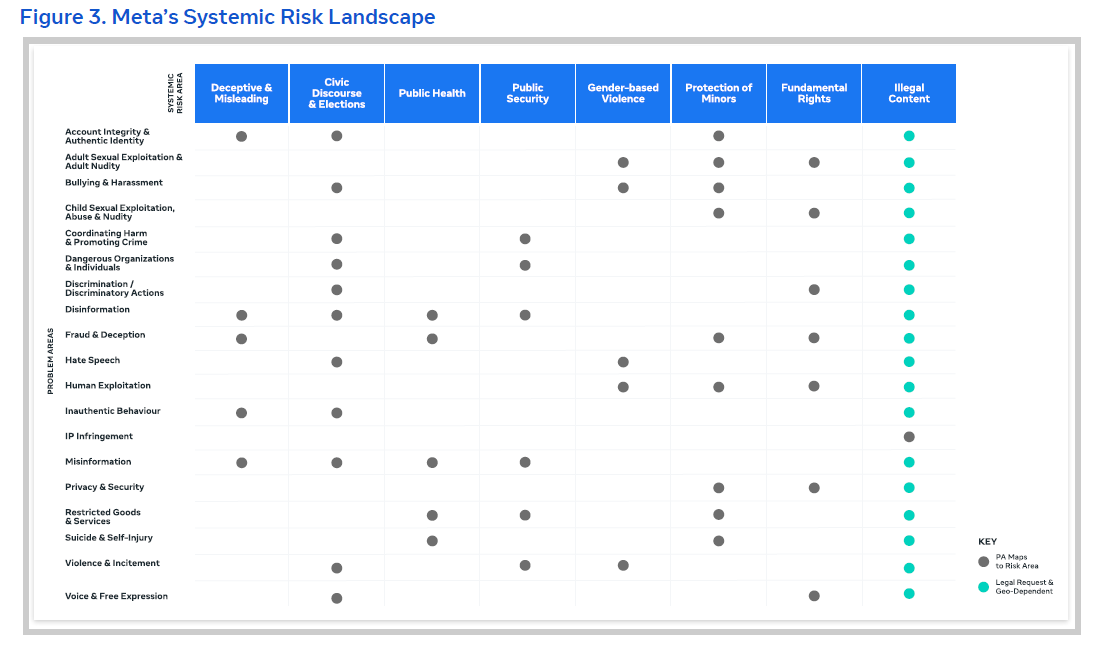

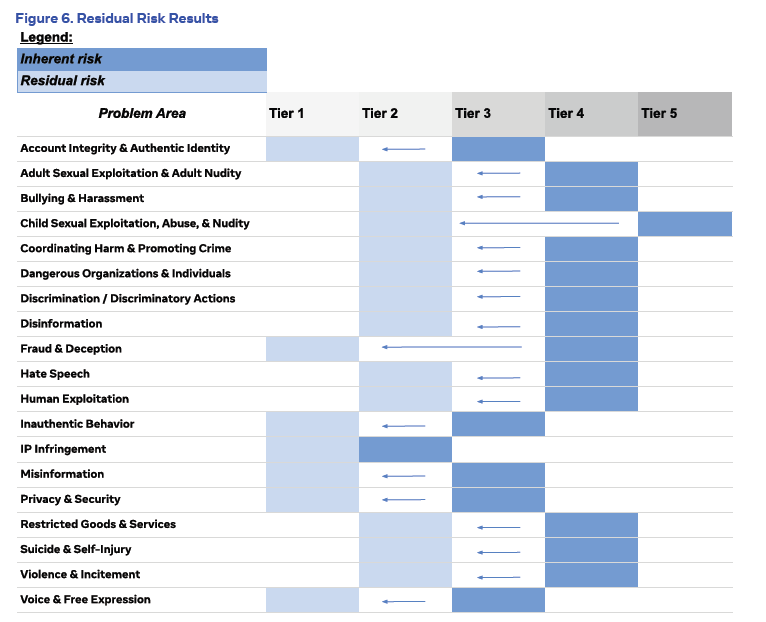

Article 34 of the DSA lays out a non-exhaustive list of the systemic risks that VLOPs must include in their assessments. Meta has divided these into 7 specific systemic risks and added one additional category (“deceptive and misleading,” perhaps informed by the risk management framework example on Russian disinformation published by the European Commission). Relating to these risks, 19 “problem areas” are identified and explained, most of which correspond to one or a small group of Meta’s own Community Standards (the exceptions are “disinformation,” “misinformation,” and “voice and free expression”). From these problem areas, 122 individual risks were identified and evaluated quantitatively for both inherent risk and residual risk (i.e., the risk that remains once current controls are taken into account). As required by DSA Article 34.2, five “influencing factors” are also considered.

Source: Meta Systemic Risk Report

Source: Meta Systemic Risk Report

This is followed by a survey of the many systems and tools Meta’s Integrity organization uses to mitigate the risks. The narrative heart of the report is section 6.2.2, which presents the following for each problem area:

- Facebook’s policies prohibiting relevant content and behavior

- The related systemic risk area

- Foreseen 2024 trends

- Overview of mitigation efforts

- A discussion of inherent limitations to mitigation and identified areas for improvement.

Lastly, improvements to mitigation efforts over the last year are outlined.

What I Learned

The report makes clear that a great deal of work went into the systemic risk assessment, including the involvement of numerous internal stakeholders in assessing and measuring each of the 122 risks. It also reveals the complexity of the mitigation efforts, though exactly how much new mitigation will take place as a direct result of the assessment is unclear.

In particular, a plethora of tools, lists, frameworks, and operating procedures are mentioned, with varying levels of detail. These provide important fodder for regulators around the world with information-gathering powers, as well as potentially journalists, academics, and members of civil society. Not knowing what questions to ask or data to request or demand has been an obstacle in social media regulation and research, and this report may make strides toward correcting that when it comes to Facebook.

These details of how Meta measures and mitigates risks can also be useful to less mature platforms that are still scaling up. While the Digital Trust and Safety Partnership’s Safe Framework, no doubt informed by Meta’s input, gives fairly detailed guidance, the examples presented in this report add plenty of specificity.

The category of risk-raising trends mentioned in the report, though not particularly extensive or granular in this version, should be of interest to other platforms and to any parties interested in how external factors exacerbate online harms.

Snippets of interest:

- Attackers have been deliberately sharing known child sexual abuse material (CSAM) to disable target accounts and take over their linked accounts.

- When discussing discriminatory content, Meta reports adjusting its controversial engagement-based algorithm: “shifting away from weighting based on certain signals like the number of comments and shares when ranking content for Recommendations.”

- Because identifying mis- and disinformation is incredibly challenging from both logistical and policy perspectives, Meta relies more on other signals to identify problematic material, including “behaviour of the accounts,” “how people are responding and how fast the content is spreading,” and “comments on posts that express disbelief.”

- In the section on “Suicide and Self-Injury,” Meta reported that “due to legal restrictions, we cannot use our classifiers to proactively detect [problematic content] in Facebook groups.”

What Was Missing

The approach taken by Meta is very much oriented around risks resulting from user content and behavior that already violate its policies. The introduction to the assessment declares: “[r]isks can arise on our service when users share policy violating content or engage in policy violating behaviour” and the conclusion states, “policy violating content and behaviour risks can occur on Facebook, which may also have wider impacts.” Product or design risks are mentioned mostly when they may be facilitating or exacerbating the problems caused by other users (or by external attackers, in the case of privacy and security risks). Some well-known risk topics that should fall under the systemic risk areas are therefore omitted or barely mentioned. These include excessive usage (time limits for minors are mentioned), sleep disruption, social comparison, beauty filters, and amplification of outrage-provoking and polarizing content that may not be deliberately deceptive or engagement-farming. While the impact of these is certainly up for debate, it is hard to argue that they are not risks worthy of consideration and mitigation.

By translating the risks into problem areas that already coincide with its policies, Meta may be missing some aspects of those risks and, in effect, putting its thumb on the scale in favor of the conclusion that its existing mitigation efforts are already successful. (Similarly, it is unclear how much of the risk measurement was a process already in practice at Facebook.) Even if the assessment is well-grounded in risk management standards and was performed and reported in good faith, it reflects Meta’s internal priorities rather than those of the DSA. To be clear, given the lack of direction from the European Commission on this, it is no surprise that Meta relied on its current practices and policies. It will be interesting to see what future guidance emerges in these areas.

Finally, the report spends far more time discussing mitigation measures than working through the specific risks and how they truly relate to the systemic risk areas. The assessment is rather formalistic, simply matching up the problem areas to the systemic risk areas and then jumping to how Meta approaches and mitigates the categories of abuse that fall under each problem area. While operations and systems aficionados may find many useful nuggets in this report, those seeking a more philosophical treatment of how Meta understands the ways specific user-based and design-based harms can translate into societal impact are bound to be disappointed.

Response from Auditors

Because Meta is the target of a current DSA investigation related to systemic risks, the auditor declined to assess Meta’s compliance with most of the measures related to Articles 34 (Risk assessment) and 35 (Mitigation of risks).

2. Instagram

The risk assessment report for Instagram is essentially word-for-word identical to Facebook’s. Aside from superficial terminological differences and changes to statistics, many of the differences between the reports stem from the “Groups” feature existing only on Facebook. On the one hand, this is not entirely unexpected, as the two platforms share both the policy suite that forms the basis of Meta’s assessment structure and most of the control measures. On the other hand, Instagram is a very different platform in terms of, for example, the age of its user base, its degree of focus on creators, and its norms of privacy. These differences suggest a significantly different risk profile, but this is not reflected in the report.

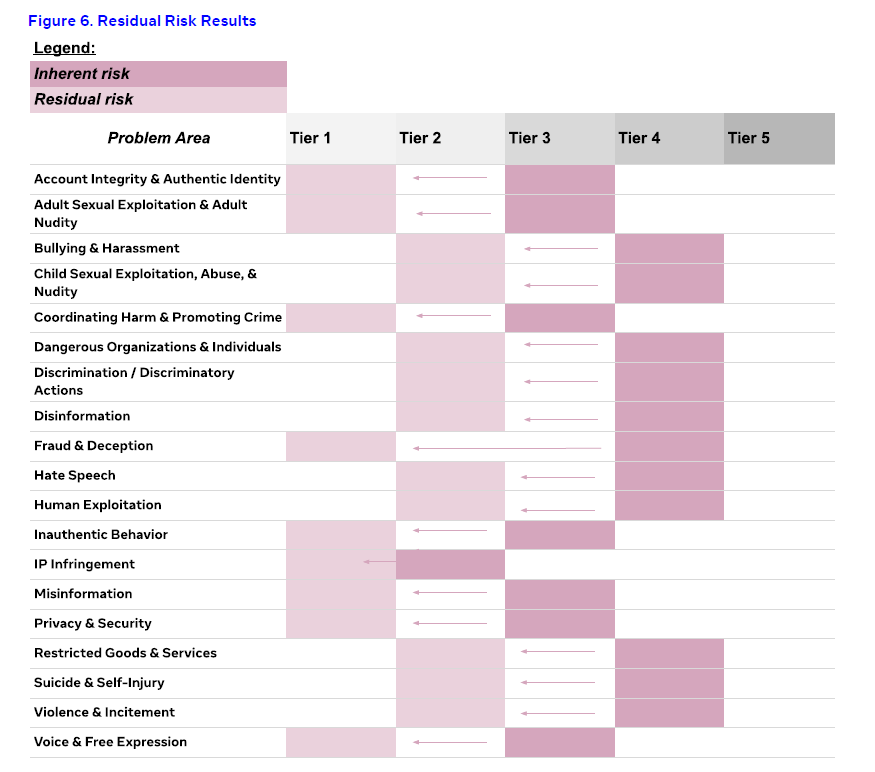

Despite this, quantitative risk calculations must have been conducted separately, as there is some divergence in the inherent and/or residual risk ratings between the two platforms for 3 of the 19 problem areas.

Source: Meta Systemic Risk Report

A side-by-side comparison of the two documents does reveal a few different operational specifics. In some cases, a mitigation measure is listed for one platform and not the other. For example, Instagram has a “Limits Function” anti-harassment feature, allowing users to temporarily block groups of other users meeting certain criteria. While Instagram may be a more obvious platform for this tool, it may be worth investigating why it has not been rolled out for Meta’s other platforms.

One other notable addition in the Instagram report is that the demotion of accounts focused on suicide and self-injury in search results (as opposed to just specific content) is listed as an area for improvement. This is claimed as a weakness that Meta is already working on correcting—again raising the question of whether these reports merely discuss risks that Meta was already focused on rather than illuminating previously overlooked areas.

3. YouTube

Structuring the Assessment and Report

Google owns five different VLOPs and one Very Large Online Search Engine (VLOSE). It chose to submit one report with company-wide introductory and concluding material, dividing out the risk assessment results for each product within the assessment results chapter. This review only looked at product-specific material for YouTube.

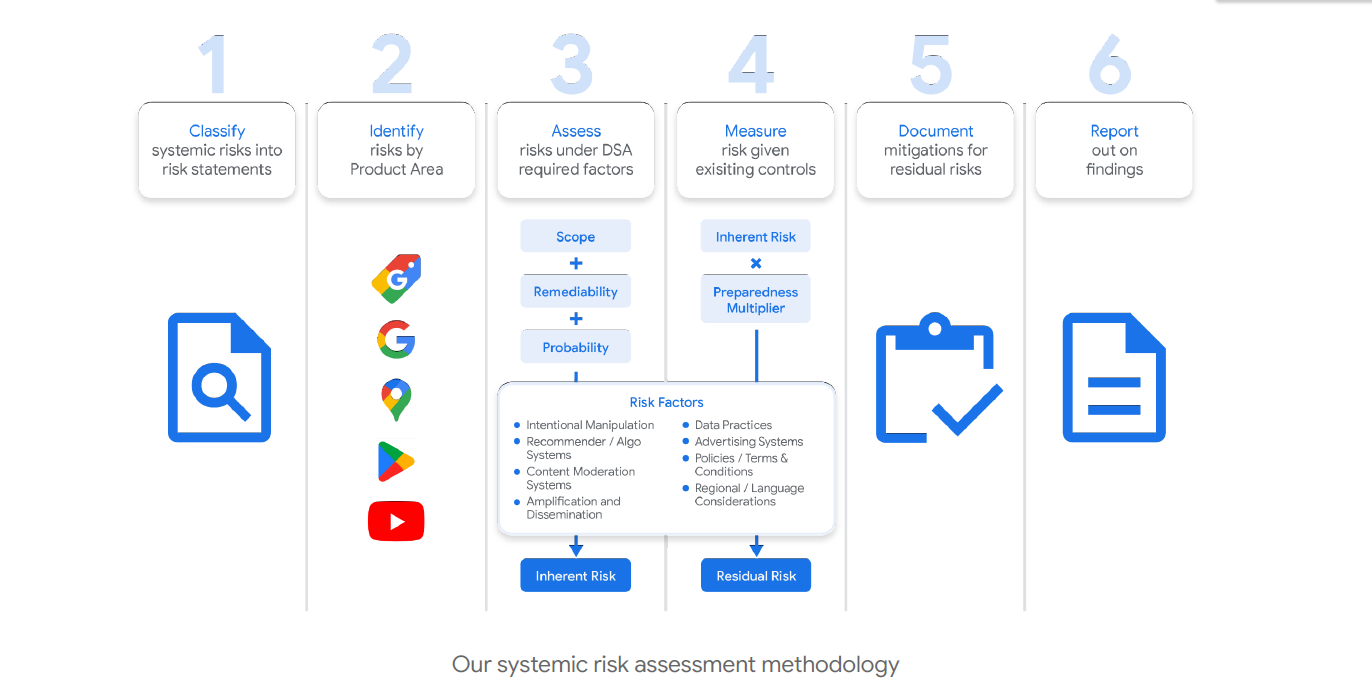

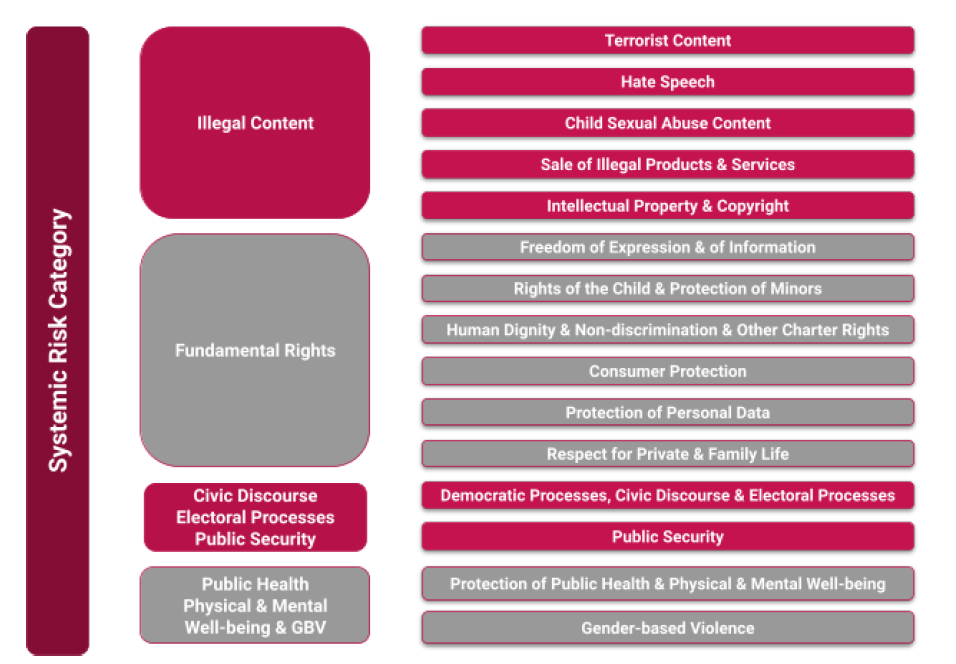

For its assessment, Google divided the four categories of systemic risk in DSA Article 34.1 into 40 specific risks. For each VLOP or VLOSE, it estimated inherent risk for each of the relevant risks (3 of the 40 apply only to Google Play), then estimated residual risk after current mitigations, and finally identified additional future mitigations.

Google, "Report of Systemic Risk Assessments"

The report’s introductory sections detail the teams across the company that are involved in risk mitigation, and then dive into the company’s approach to the topic, organized according to three safety pillars. These discussions are fairly detailed and touch on the DSA’s “influencing factors” and on how tradeoffs differ between products. The assessment results chapter for YouTube is divided into two sections: risks from user content and risks from “design and functioning” (the latter being terms found in DSA Article 34.1). In each section, examples of risks and their mitigations are discussed.

After a brief report conclusion, annexes give the full list of 40 risks, a list of mitigations organized according to DSA Article 35.1, and brief details of relevant stakeholder consultations. Outside of the annexes, the report is heavy on narrative, with few lists, charts, or tables. The reader is regularly directed to hyperlinked external documents and pages to learn more about particular topics.

What I Learned

Google consulted broadly within and outside the company to develop its list of risks. It also reviewed the EU Charter of Fundamental Rights and international human rights texts. Google had previously developed risk statements for human rights assessments, and that work informed the risks identified for this DSA compliance exercise. This may have contributed to the “fundamental rights” section of the risk register being by far the longest, with 27 of the 40 total risks.

Google also noted an important weakness of the assessment methodology: that inherent risk is not a reliable metric, as it relates to risk before any controls are implemented. Hence, “[t]his step is necessarily theoretical, imprecise, and abstract because we have long been dedicated to mitigating all the systemic risks identified in the DSA.”

In contrast to Meta, which framed almost all risk as emerging from abuse by users, Google’s assessment clarified that “systemic risks ... could stem from the design, functioning, and use of VLOSE and VLOP services, as well as from potential misuses by others.” To wit, almost half of the risks that the company developed were not about violative user-generated content or behavior, but about the “design and functioning” of the platforms. Though more space was likely given to the mitigation of user-originating risks, there was also consideration given to topics like AI systems that do not work as expected, policies that reproduce bias, privacy, and addiction-like harms.

The narrative style of the report allows the inclusion of a number of interesting examples and discussions. Among them:

- research into combating gender bias in machine translation (p. 24)

- how Google experienced and mitigated medical misinformation in the early stages of the COVID-19 pandemic (p. 26)

- the differences between YouTube video content and comments in the context of harassment (pp. 105-106)

- the challenge of child endangerment-related comments on non-violative videos (pp. 109-110).

A common theme in the report is misinformation, even though this category is notoriously challenging for platforms on many levels. This emphasis may have emerged organically from the systemic risks set out in the DSA’s text or from the risk management framework example on Russian disinformation published by the European Commission. Google presents its approach as following its safety commitment, “[d]elivering reliable information.” As a company whose first product was Search, it also applies this principle to YouTube:

“Our systems are trained to elevate authoritative sources higher in search results, particularly in sensitive contexts. We raise high-quality information from authoritative sources in search results, recommendations, and information panels, in turn helping people find accurate and useful Information.”

Snippets of interest:

- Consultants from both BSR (human rights assessment experts) and KPMG (a Big Four auditor) contributed to the design of the assessment methodology.

- Two broad mitigation categories are taken into account: “(1) the existence and coverage of design decisions, features, policies, processes, metrics, accountability, and formal controls, and (2) other relevant measures, such as participation in industry and multi-stakeholder efforts to address risks.”

- “[G]eneric user flags typically have low actionability rates. ... Participants in the Priority Flagger program receive training in enforcing YouTube’s Community Guidelines, and because their flags have a significantly higher action rate than the average user, we prioritize them for review. However the size of the program compared to YouTube’s scale meant that in Q1 2023 Priority Flaggers accounted for only 0.6% of videos removed from the service.”

- “Every quarter between Q3 2022 and Q1 2023, we have terminated more than 5M channels for spam.”

What Was Missing

Despite a set of specific risks that was somewhat independent of existing internal policy and the inclusion of design issues, the report still dwelt much more on mitigations than on fleshing out how the risks could or do play out on the platform and what their consequences might be for society. Considering that volunteering more detail on these risks may open the company up to increased regulatory scrutiny, civil liability, and bad public relations, very specific guidance from the DSA’s enforcers might be required in order to obtain these reflections.

Although the report mentioned identifying future mitigations as part of the risk assessment process and flagged that these would appear in the introduction to the YouTube assessment results, few if any of these were clearly articulated in the body of the report. Annex B gives a list of “new or enhanced mitigations being put in place pursuant to Article 35(1) to address the salient residual systemic risks identified in the Article 34 assessment,” though these make copious use of terms like “continuously improving” and “updated regularly,” raising questions about the newness, or the degree of increased investment, of many of them.

If Meta’s approach was overly formalist, Google/YouTube’s report could be critiqued for not being quite formalist enough. Although the report made for a much more satisfying read (for this reader, at any rate) with a rich exploration of values and examples, some basic questions remained unanswered. There was no tabulation of the results of the assessment, laying out the levels of inherent and residual risk for each of the specific risks on YouTube. Even for the discussed examples, it was unclear how they measured up, with sometimes only residual or inherent risk described, and then only with vague terms (“one of the higher ... ratings,” “significant inherent risk [turning] into a much lower residual risk”). For the many risks not given a narrative explanation, we know almost nothing.

Response from Auditors

The auditor, EY, found that Google had complied “in all material respects” with the requirements of DSA Articles 34 and 35. Regarding Article 34, however, the consultancy made 3 recommendations, two of which pertain to the risk assessment discussed here.

- Google should “enhance documentation of the relevant considerations that support its scoring rationale and include sufficient information to support reperformance of risk rating as part of audit testing.” (This suggests that not only did Google not share the details of how they arrived at their assessment results in the public report, but also that the internal documentation that the auditors had access to was insufficient for them to replicate the scoring process.)

- Google should better explain how the Article 34.2 “influencing factors” affect each of the identified risks.

In its response, Google committed to improve its explanations of both scoring and impact of influencing factors on specific risks in the 2025 assessment cycle.

4. TikTok

Structuring the Assessment and Report

TikTok’s assessment and report represent the most straightforward interpretation of the letter of the law so far (and the report is also the least polished as a public-facing document, with no graphic design to speak of). As we shall see, this approach has both advantages and disadvantages.

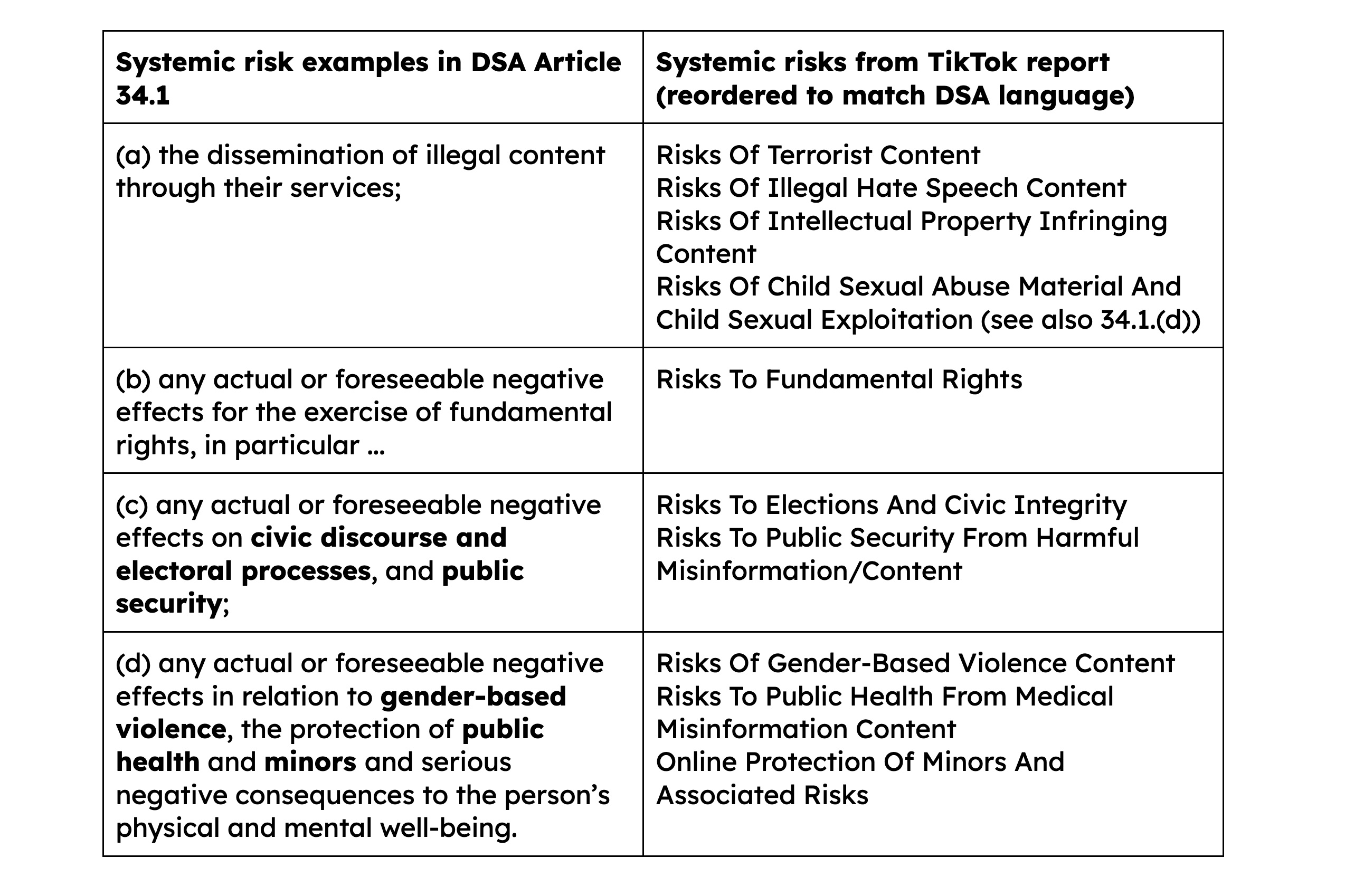

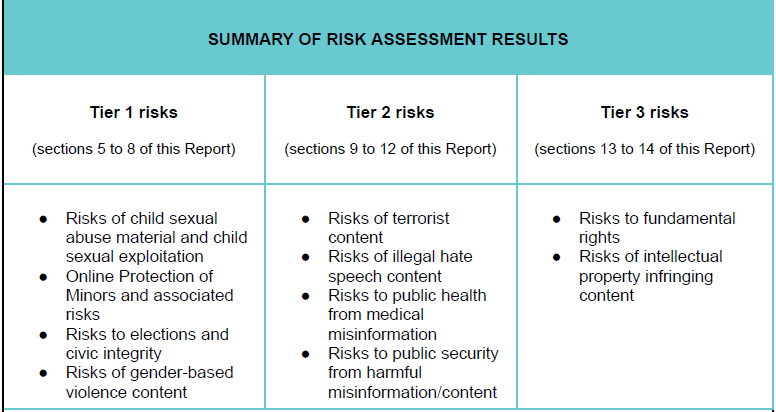

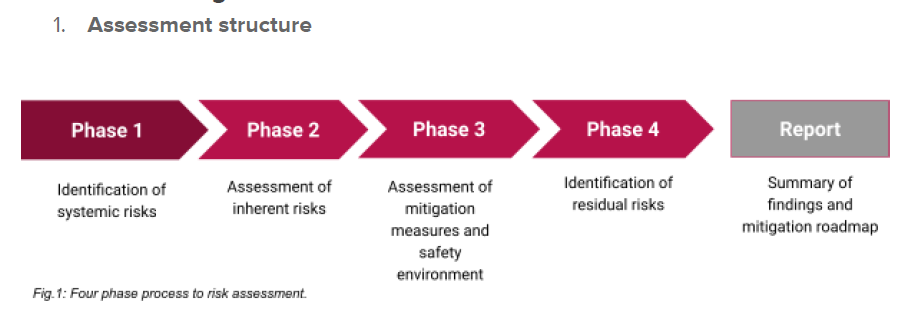

The assessment methodology TikTok used is covered in a one-page annex. The specific risks are taken very directly from the language of DSA Article 34.1, and only illegal content is subdivided into multiple risks.

Like Google and Meta, TikTok considered severity and probability for each risk category; unlike them, it assessed only residual risk, incorporating the effect of mitigations before assigning a score. The assessments resulted in tiers, which TikTok interprets as its order of priority for mitigation efforts.

Source: TikTok, DSA Risk Assessment Report 2023

The report’s introductory section is very concise, including some details about TikTok’s functioning, with emphasis on content moderation and other relevant areas of operations, and a summary of the assessment results. Thereafter, the report dives into the details of the assessment by risk, ordered by tier. With no conclusory section, the vast majority of the report consists of actual risk assessment and mitigation details as opposed to peripheral discussion.

For each risk, a description is given, often including reference to other EU laws and tradeoffs with various rights, and then key mitigations, stakeholder engagements, and data points are listed. The severity and probability for the risk are discussed, along with the final prioritization tier and a few “further mitigation effectiveness improvements.” Relevant mitigation measures are listed, closely following the rubric in DSA Article 35.1. These categories include all of the DSA Article 34.2 influencing factors, with the notable exception of “data related practices of the provider.”

While there is some substantive information here, everything is presented very systematically in tables and in bullet-point format. Additional color and depth are provided by six interspersed case studies and deep dives, though most of these are also kept rather concise.

What I Learned

Despite the format not lending itself to in-depth treatments, for every risk there were a few sentences directly explaining how the risk can materialize on TikTok, and what the impact of that risk could be for users and the broader society, as well as discussing the factors that make it more and less likely to be prevalent. One example stood out in light of recent developments in Romania:

The spread of Election Misinformation in the context of a European election could potentially have a societal impact in a relevant member state, region or at the EU level, such as by influencing the environment surrounding an election or other civic event, which could have a undermine [sic] or erode trust in election processes and institutions.

While these were not extended explorations, they gave useful background for understanding the stakes and meaningfully considering how these risks balanced against mitigating factors.

TikTok’s For You Feed (FYF), famously essential to the platform’s success, also featured prominently in the report. According to TikTok, the FYF helps reduce the spread of risk-related content in several key ways:

- TikTok’s community guidelines categorize some content as FYF ineligible, a stricter category for more risky material that is nonetheless not prohibited on the platform.

- When a video gets a certain number of views, it is enqueued for manual review, providing a check before getting recommended even more widely.

- Extreme content typically lacks the broad appeal a video needs before the FYF algorithm will amplify it heavily.

As TikTok relies on the FYF rather than deliberate user-directed search for content discovery, if content does not get shared in the FYF, it is unlikely to be spread to many users.

The case studies and deep dives provide evidence that the platform is proactively working to prevent harm, including the use of both TikTok-commissioned and externally produced research, carefully constructed policy systems, and planning for upcoming situations. Of particular interest was a simulation that TikTok ran to explore how its systems would or should handle a live-streamed mass shooting, an event that had taken place on other platforms but not yet on TikTok.

Snippets of interest:

- TikTok employs 4 different third-party detection systems to identify child sexual abuse material (CSAM).

- TikTok takes measures that appear to be designed to avoid the “rabbit hole” effect of leading users to increasingly extreme content: “TikTok employs mitigation measures to diversify content so that Younger Users are not exposed to repetitive content, which is especially important if they are exploring content related to more complex themes but which is not in violation of TikTok’s terms or Community Guidelines.”

- “TikTok updated its Community Guidelines in March 2023 to recognise GBV [gender-based violence] as a separate focus for its moderation and mitigation efforts. While TikTok already prohibited male supremacy ideology as a form of hate speech, TikTok made this decision to bring more transparency to its policies following the high profile incident in 2022 where GBV content was repeatedly uploaded to the Platform promoting the male supremacy ideologies of Andrew Tate.”

- TikTok has in-app functionality to issue a counter-notice for users whose content is reported for takedown due to copyright.

What Was Missing

TikTok gives the overall tier for each of the specific risks but does not consistently and usefully break down the scoring for severity and probability. The methodology describes a scale for severity from “very low” to “very high,” and some of the risks are stated to be of “moderate” severity (and in the case of Fundamental Rights, “low to moderate”), which does appear on the scale, but the remainder is described as “material risk,” which is not on the scale. The probabilities are evaluated on a scale from “very unlikely” to “highly likely,” but they are sometimes offered with additional language that makes them difficult to compare (e.g., “unlikely that there is widespread dissemination” versus “possible that there will remain some level of ... content”).

The choice to cleave so closely to the risks laid out in the language of the DSA, which is not necessarily intended to be exhaustive, is a missed opportunity to reflect more broadly on the concept of systemic risk and to explain how TikTok understands its responsibilities to society. Furthermore, even within this narrow interpretation, it is not clear that TikTok’s risk statements fully represent all of the risks in the DSA’s text. In particular, the risk of “serious negative consequences to the person’s physical and mental well-being” (DSA language) is not fully reflected in any of TikTok’s risk statements, and it may be no coincidence that this is the category most likely to include predominantly design-based risks, which are also almost entirely absent from the assessment.

Possibly the least substantive section of the report was the risk assessment for “Fundamental rights,” which is rather disappointing given the apparent weight the DSA gives this area, judging merely by the length of its Article 34 subclause. Following the general approach of the assessment, the description of the risk statement says: “TikTok has determined the following Fundamental Rights as most relevant to its Platform,” and then simply rearranges the rights mentioned explicitly in the DSA, adding none and omitting only the rights of the child, which are presumably covered in other categories. The next bullet states, “[w]ithin the context of this Report, it is not possible to provide a detailed analysis of each Fundamental Right described above.” Aside from a couple of anti-bias measures in the full list of mitigations, the only topic covered with any substance in this section (including in the subsequent “Deep Dive”) is balancing expression against other concerns, which is worthy enough but is also, not inappropriately, discussed at intervals throughout the report.

Lastly, this report does contain a few redactions, so it is impossible not to be curious about what was omitted from the public report. Most of these, however, appear to be details about the signals that TikTok uses to detect abuse in adversarial contexts and, therefore, seem reasonable to redact. TikTok also should be credited with sharing some sensitive information with the European regulators, rather than presenting a squeaky-clean, public-ready document.

Response from Auditors

Because TikTok is the target of a current DSA investigation related to systemic risks, the auditor declined to assess TikTok’s compliance with most of the measures related to Articles 34 (Risk assessment) and 35 (Mitigation of risks).

5. X

Structuring the Assessment and Report

X’s determination of risks is very similar to TikTok’s, barely diverging from the text of DSA Article 34, though it adds “sale of illegal products and services” to TikTok’s breakdown of illegal content risks. The report regularly references the DSA’s Recitals to interpret these risks.

Source: X, “Report Setting Out The Results of Twitter International Unlimited Company Risk Assessment Pursuant to Article 34 EU Digital Services Act”

X’s assessment structure, on the other hand, is more similar to Meta’s and Google’s, with a discrete evaluation of inherent risks preceding consideration of controls and residual risk. It uses the familiar formula of calculating risk from severity and probability, but further breaks severity down into components of scope, scale, and remediability. These lowest-level scores are included in the body of the assessment results in the report, and all of the higher-level scores are tabulated in Annex B.

Source: X, “Report Setting Out The Results of Twitter International Unlimited Company Risk Assessment Pursuant to Article 34 EU Digital Services Act”
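To make the general pattern concrete (severity built from scope, scale, and remediability, combined with probability into an inherent risk score, then reduced by controls to a residual score), here is a minimal Python sketch. It is illustrative only: the 1-5 scales, averaging the severity components, multiplying severity by probability, the "high/medium/low" thresholds, and modeling controls as a single fractional reduction are all assumptions, not X’s actual rubric, which is set out in the report’s Annex A scorecards.

```python
from dataclasses import dataclass


@dataclass
class Severity:
    """Hypothetical severity components, each on an assumed 1-5 ordinal scale."""
    scope: int
    scale: int
    remediability: int

    def score(self) -> float:
        # Assumption: severity is the simple mean of its three components.
        return (self.scope + self.scale + self.remediability) / 3


def risk_score(severity: Severity, probability: int) -> float:
    """Common risk-matrix convention: risk = severity x probability (1-25 here)."""
    return severity.score() * probability


def residual_risk(inherent: float, control_effectiveness: float) -> float:
    """Assumption: controls remove a fraction (0.0-1.0) of the inherent risk."""
    if not 0.0 <= control_effectiveness <= 1.0:
        raise ValueError("control_effectiveness must be between 0 and 1")
    return inherent * (1.0 - control_effectiveness)


def rating(score: float) -> str:
    """Hypothetical banding thresholds for turning a score into a label."""
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


if __name__ == "__main__":
    sev = Severity(scope=4, scale=5, remediability=3)
    inherent = risk_score(sev, probability=4)
    residual = residual_risk(inherent, control_effectiveness=0.5)
    print(inherent, rating(inherent))  # 16.0 high
    print(residual, rating(residual))  # 8.0 medium
```

Keeping inherent and residual scores side by side, as X’s tables do, is what allows a reader to ask how much each control is assumed to reduce a risk; the sketch makes that assumption explicit as a single `control_effectiveness` parameter.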

The report begins with an introduction to the service, its risks, and its approach to mitigation. The assessment methodology is then discussed in some depth, including the rubric “scorecards” (the full collection of these can be found in Annex A), and details of engagement with stakeholders are given.

The full summary of risk assessment results follows. It is divided into sections that map onto the four subclauses of DSA Article 34.1. In each section, a narrative introduction discusses the inherent risk, controls, and residual risk for all of the section’s risks together (special subsections are included for the controls relating to CSAM and terrorist content), and then a table for each risk summarizes these three elements (inherent risk, controls, and residual risk) and includes the scoring. No separate treatment is given to the Article 34.2 “influencing factors,” but the copy above each table states: “This section provides a summarised assessment of the risk that the design or functioning of X services and its related systems, including algorithmic systems...” and some of the inherent risk bullets in the tables reference several of the factors.

The last element of the body of the report is the “Mitigation Roadmap,” which catalogs recent and planned mitigation measures classified by the DSA Article 35.1 categories. The first section gives platform-wide measures, and the second lists those specific to the individual risks.

What I Learned

X included a discussion of considerations that seemed characteristic of its platform. While all the reports reviewed here discussed freedom of expression or speech and how it factors as a tradeoff in content policy determinations, X did so far more extensively, displaying a thoroughgoing (though not necessarily highly thoughtful) concern with over-moderation and, at times, suggesting suspicion of content moderation itself. This manifested in several statements of subsidiary risk, such as:

- “that X policies place restrictions on the type of content that users may post on the platform.”

- “that X’s policy enforcement may lead to increased alienation and radicalization of users who are suspended from the platform for posting violent or harmful content. They may alternatively find less popular platforms where there are decreased chances of conversations with opposing views. There is thus a risk of pushing harmful conversations off-platform to more obscure and less regulated spaces.”

- “that our platform policies could be applied unequally or in a subjective manner, for example due to moderator bias, or language specialisation (or lack thereof).”

Additionally, the report briefly raised the factors of financial constraints and the multi-platform nature of abuse, two concerns that were not highly visible elsewhere.

Full charts of the risk and control scores (as well as the inclusion of the component scores for severity) were a welcome inclusion, as were the rubrics for what each score was meant to indicate. X also admitted to some residual high risks and a preponderance of medium risks despite having determined, as all the platforms did, its own methodology for calculating them.

X did note the existence of a number of design-based or design-exacerbated risks, including the potential for mentions and messaging to be used for harassment, recommendation algorithms to result in information bubbles, and the possibility of negative mental health impacts on minors due to engagement metrics and infinite scrolling feeds. The report also gave substantial focus to the discussion of fundamental rights, listing out immediate risks and controls for each of those specified in the text of the DSA.

Snippets of interest:

- “There remains a residual risk that despite our controls, certain users may still feel unsafe and unwelcome on our platform due to attempts of abuse and harassment by other users.”

- “Previous research has also shown that in certain circumstances our recommender systems could lead to accounts from specific ideological leanings to be amplified over others. However, while there is a risk of bias in these systems, the research highlighted that there are no clear, singular factors in this effect and that in different circumstances the same algorithm produced different impacts on political content.”

- “Currently, we do not have conclusive research on whether our proactive models have bias such that it could materially impact a DSA systemic risk, or whether they disproportionately limit speech across different communities. We aim to support further research on bias in recommender systems and content moderation algorithms. This will allow us to train our models better and mitigate against any risk of disproportionate and/or biased enforcement.”

- “We will scale the option for X Premium users to verify their accounts through identification with a trusted third-party partner.”

- Between 40% and 50% of X’s users are logged out when they access the platform.

What Was Missing

Although X discussed and listed risks in all of the DSA’s categories, it did not go beyond those explicitly named in the text of the regulation. Furthermore, in laying out the risks, X focused almost entirely on immediate impacts on individual users and did not reflect expansively on potential broader societal consequences.

It was not always clear how the stated controls in each section reduced the inherent risks (as broken down in the tables) to leave only the stated residual risks. It should be said that X’s decision to lay out these elements side-by-side highlighted this issue, which would have been significantly harder to detect in the other reports considered above.

As with the other reports, it was difficult to ascertain which “new” mitigations were prompted by this risk assessment. These mitigations were, as seen elsewhere, generously sprinkled with statements of continuity, leaving the degree of additional work, features, and investments uncertain.

At times, the report did not appear to have been prepared with the utmost care. Although typos and infelicitous language are almost unavoidable in any lengthy document, a few passages lacked coherence. There was also a mysteriously blank cell on page 28, presumably an artifact of the final preparation of the public document.

The redactions in X’s report were quite extensive. While some likely concealed signals or techniques used to combat adversarial abuse, this was by no means the case for all of them. A great many data points were redacted for unclear reasons. X did not report content moderation figures for the period covered by the report, yet many of these numbers would typically be made public in voluntary (and mandated) platform transparency reports.

In several places, entire bullets or series of bullets were omitted, and it is impossible to know why. One redaction obscures a list of occasions on which X employed crisis measures, which is information of clear public interest. A particularly frustrating passage hides the crucial figures measuring the effectiveness of X’s policy of restricting the visibility of some content (“Freedom of Speech not Reach” or FoSnR):

Since its launch, we have seen how this type of restricted content receives ▮▮▮▮▮▮ less reach or impressions than unrestricted content globally. We have also observed that ▮▮▮▮▮▮ of authors proactively choose to delete the content after they are informed that its reach has been restricted. Between ▮▮▮▮▮▮ of FoSnR labels were appealed globally, with between ▮▮▮▮▮▮ of decisions being overturned.

Response from Auditors

FTI’s DSA audit affirmed that the initial assessment of (presumably inherent) risk was compliant with the DSA, albeit a little late in delivery. However, in the remaining two measures relating to the content of the report itself, the auditors gave a negative evaluation with extensive specifics. These are some of their key points:

- The influencing factors listed in Article 34.2 were not considered in “a robust and effective way,” and for each factor there was evidence that it had not been considered at all (perhaps meaning: for at least one of the risks).

- Particular weaknesses lay in assessing the impact of recommender systems on each risk and the risks of the FoSnR policy and practice.

- X’s only data points to measure control effectiveness appeared to be the number of valid user reports of the targeted content and false positives. This was not sufficient for evaluating their impact (and therefore, presumably, calculating the residual risk).

- FTI had requested evidence that was not provided, and the elements of a robust approach to evaluating control effectiveness proposed by the auditors “did not seem to be understood or regarded as relevant” by X.

In response, X cited a European Commission investigation into its compliance with the risk assessment requirements. X stated that, in the meantime, “there remains significant regulatory uncertainty” regarding proper compliance, so it is waiting until that is resolved before implementing the auditors’ recommendations.

Final thoughts

X’s report and audit raise central issues regarding the risk assessments and the reports. Judged by the report alone, X’s approach appeared to have significant strengths. It revealed all the scores from the evaluation and gave far more specificity about their derivation and meaning than the reports from its peers considered here. The audit determined, however, that its actual assessment process may have been deeply flawed.

Despite some useful information here and there, the real value of this process depends on how rigorously the assessment is conducted. This, in turn, relies on reliable audits or investigations. We should recall that, of the five platforms considered here, only one, Google/YouTube, received a positive auditor evaluation for its whole risk assessment. Even that evaluation contained comments and recommendations calling for better articulation of some scoring rationales. The auditors for Meta and TikTok declined to give conclusions on account of ongoing investigations.

Even with rigorous processes guaranteed by auditors, the risk assessments necessarily contain subjective elements, especially when the platforms themselves decide on the methodology. And the platforms, of course, want outcomes that make them look like they have systemic risks under control. Clearly, more specificity from the European Commission about how the risk assessments are to be performed would be welcome, whether through guidance documents or investigation outcomes. Because the platforms differ in a range of respects, too much specificity in universal guidance may be undesirable. Alternatively, although it may invite allegations of unfair treatment, platform-by-platform guidance may be feasible given the relatively small number of VLOPs. Such guidance could lead to more substantive and revelatory systemic risk assessments and reports.

This piece was originally published on Tuesday, December 17, 2024.

Authors