Public Participation is Essential to Decide the Future of AI

Baobao Zhang / Feb 5, 2024

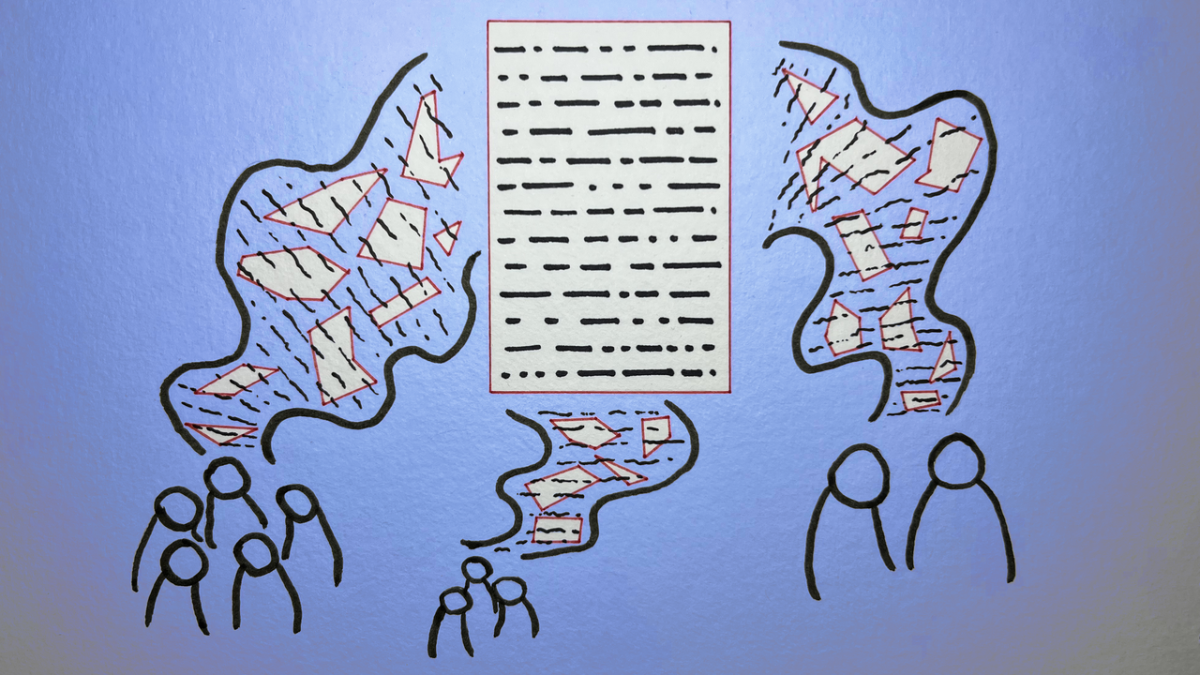

Yasmine Boudiaf & LOTI / Better Images of AI / Data Processing / CC-BY 4.0

Key decisions about the future of AI are almost exclusively made behind closed doors by powerful individuals. The rehiring of Sam Altman as the CEO of OpenAI after his ouster by the organization’s independent board showed that Silicon Valley’s tech elite and corporate executives have the final say in decisions that affect billions of everyday people.

What if the general public had a say in how AI systems are developed and deployed? Our research team tried just that in a project called the US Public Assembly on High Risk Artificial Intelligence. The project is the first national public assembly on AI governance in the United States.

Over eight days, a diverse group of forty US residents engaged in rigorous discussions and deliberations, exploring the risks, benefits, and responsibilities associated with AI. These non-expert participants were randomly selected from the US adult population and convened by videoconference. They came from 21 states, ranged from college students to retirees, and spanned the political spectrum. They listened to eight computer scientists and AI ethicists present expert testimony about how AI systems work, the current state of AI regulation, and AI systems built using browser/search history, health records, images of faces, and administrative data.

The first thing we learned from the public assembly is that the participants recognized tradeoffs between the benefits and risks of AI systems. For instance, in the domain of health care, participants acknowledged the benefits of AI systems in diagnosing and providing medical recommendations. But they also highlighted the importance of ensuring accuracy and accountability in AI algorithms to avoid incorrect diagnoses or discriminating against marginalized groups. Likewise, the participants recognized the potential benefits of face recognition in areas such as public safety and identity verification. However, they also expressed concerns regarding privacy infringement and gender and racial discrimination in face recognition algorithms.

Second, the question of responsibility emerged as a central theme throughout the assembly. Participants explored the various stakeholders involved in AI governance and deliberated on who should bear the responsibility for the outcomes of AI systems. Although participants held individual users responsible for misusing AI systems, a greater percentage of them thought that the developers and organizations that deploy AI systems should be held accountable when those systems cause harm. The majority of participants wanted governmental agencies and the court system – not the tech developers – to determine who should be held accountable when an AI system causes harm.

Finally, this public assembly demonstrated that everyday people in the US can learn about how AI systems work and how they impact individuals and society. At the end of the public assembly, nearly all of the participants indicated that they were informed enough to tell someone what AI is and what it does. Furthermore, despite the political, age, and cultural differences of the participants, they were able to deliberate and debate respectfully. One consensus that organically formed amongst the participants is that an AI system violating people’s human rights and civil rights constitutes a serious harm, even when the AI system is “technically correct.”

“I am still not an expert. I will not allow this to stop me from realizing that technology is constantly evolving. The unknown does not equal something bad. It just means we need to open our minds and constantly be seeking knowledge. We came together as a group of strangers and formed a community to work together for the better of society,” one participant reflected.

To be sure, public assemblies require resources: the participants, expert witnesses, and facilitators need compensation and other accessibility support. Nevertheless, as our project demonstrated, public assemblies can be meaningfully conducted virtually with skilled facilitators. Virtual public assemblies are not only cost-effective but also more accessible to those who are unable to travel.

The US government has released guidelines and principles for human-centered AI development, which is an important first step. Future regulation will likely be necessary to protect the public from possible risks and harms from AI, such as racial and gender discrimination, vulnerability to malicious use, and threats to physical safety. Governmental agencies, including the ones tasked by the Biden administration to regulate AI, should explore the use of public assemblies to meaningfully inform future policy-making and rule-making on AI governance. We also recommend that the National Science Foundation and the National Endowment for the Humanities fund public assemblies and their evaluation by academic researchers, so that these participatory projects are independent of corporate interests.

As AI systems become more prevalent, it is crucial for policymakers, industry leaders, and researchers to take into account the findings of public assemblies like the one my team conducted. Meaningful public engagement can help develop responsible AI frameworks that mitigate risks, ensure transparency, and prioritize the well-being of individuals and communities.
