Project Demonstrates Potential of New Transparency Standard for Synthetic Media

Justin Hendrix / Apr 5, 2023

Justin Hendrix is CEO and Editor of Tech Policy Press. The views expressed here are his own.

With the proliferation of tools to generate synthetic media, including images and video, there is a great deal of interest in how to mark content artifacts to prove their provenance and disclose other information about how they were generated and edited.

This week, Truepic, a firm that aims to provide authenticity infrastructure for the Internet, and Revel.ai, a creative studio that bills itself as a leader in the ethical production of synthetic content, released a “deepfake” video “signed” with such a marking to disclose its origin and source. The experiment could signal how standards adopted by content creators, publishers and platforms might permit the more responsible use of synthetic media by providing viewers with signals that demonstrate transparency.

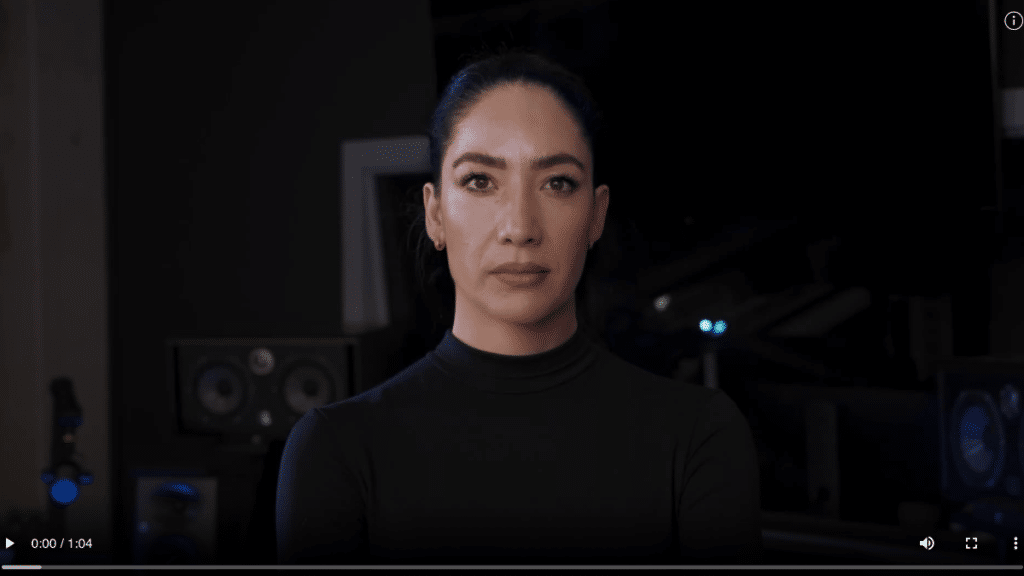

The video features a message delivered by a synthetic representation of Nina Schick, the creator of ‘The Era of Generative AI’ online community and author of the book ‘DEEPFAKES.’

The project follows years of effort by a wide variety of actors, including tech and media firms as well as nonprofit organizations and NGOs, to create the conditions for such signals to meet an interoperable standard. The video is compliant with the open content provenance standard developed by the Coalition for Content Provenance and Authenticity (C2PA), an alliance between Adobe, Intel, Microsoft, Truepic, and a British semiconductor and software design company called Arm. A joint development foundation intended to produce such a standard, the C2PA itself emerged from the Content Authenticity Initiative (CAI), a project led by Adobe, and Project Origin, an initiative to address disinformation in the digital news ecosystem led by Microsoft and the BBC.

Whether such standards will become widely adopted across the internet is an open question, even with the proliferation of so-called generative AI technologies. I spoke to Jeff McGregor, CEO of Truepic and Mounir Ibrahim, its Vice President of Public Affairs and Impact, to learn more about the prototype project, and their views on what might drive the adoption of standards. What follows is an edited transcript of our discussion.

Justin Hendrix:

Can you tell me about the origin of your company, Truepic?

Jeff McGregor:

Our focus is really exclusively on building authenticity infrastructure for the internet. We've been around for six years now. Things have dramatically accelerated with the Gen AI boom that we're all living through right now. I think actually, even the past week or two is indicative of where we stand and where we'll be in a few years. There's the Pope in the puffer jacket. You've got the paparazzi Elon Musk photos. You've got the Trump arrest photos. All this stuff has become very topical in the conversation online, outside of our ecosystem. That's the first time we're really seeing this happen in a meaningful way.

So we're seeing this conversation play out. I think the tipping point we've reached in terms of generative AI is accessibility. Really, these platforms are available to anyone. Anyone can go and start generating images on Midjourney or Dall-E or Stability. Then you have a level of quality that's being reached that is truly deceptive in terms of understanding what's computer versus human generated.

The big call to action here is that all of the technology now exists to be transparent about where content comes from, what the history of that content is and to make that very easily consumable to the end viewer of photos and videos. A lot of this stuff has been conceptual for a long time and being built in labs or tested in academia.

The announcement with Revel and Nina and Truepic is really trying to plant a flag in saying, "Hey, this technology's here. Anyone can integrate it and be transparent around where content is coming from. And consumers can now stop guessing whether something is a deepfake or authentic or computer or human generated."

So our goal over the coming week, as we put this news out there, is really to start to beat the drum and to drive further adoption of the C2PA spec and transparency as a concept.

Justin Hendrix:

Can you give me a little more background on the technical implementation, and what the user would experience?

Jeff McGregor:

So in the video, you'll see this dropdown here. The consumer experience is that when this video is played, you have that eye button in the top right corner, and as you hover over it, a modal appears that describes a little bit more about what you're viewing. So you see, it very explicitly calls out that this contains AI-generated content. It was created by Revel AI, which was the studio that produced this, and you see when it was created. And that's, in a nutshell, the transparency details that we're looking to surface to the consumer as part of this technology in general.

Now, what's behind that technology is essentially a digital signature. We have cryptographically signed that information into an MP4, which is just the file type of that video. It conforms to the C2PA spec. And we're using essentially a public key infrastructure (PKI) very similar to what you would have behind an SSL certificate, but we're doing it on a per-media basis. So every time a photo or video is generated, we're using a unique certificate to sign that data into the file in a tamper-evident way. And if anything changes on that photo or video downstream, it breaks that digital signature and you no longer get the eye. So it's a way to basically establish the origin and the history of that content.
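To make the tamper-evidence idea concrete, here is a minimal Python sketch of hash-then-sign, written under loudly stated assumptions. It is not Truepic's implementation and not the C2PA manifest format, which defines its own structures and certificate handling. It uses textbook RSA with a hard-coded toy key built from two public Mersenne primes, which is insecure and for illustration only. The functions `sign_media` and `verify_media` and the claim fields (`creator`, `ai_generated`) are hypothetical names chosen for this example.

```python
import hashlib
import json

# Toy RSA key pair built from two well-known Mersenne primes.
# ILLUSTRATION ONLY: the primes are public, so anyone could derive the
# private exponent. Real systems use securely generated keys whose
# public halves are vouched for by certificates from a trusted authority.
P = 2**89 - 1
Q = 2**107 - 1
N = P * Q                           # public modulus
E = 65537                           # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (needs Python 3.8+)


def digest(media: bytes, claim: dict) -> int:
    """Hash the media bytes together with the provenance claim."""
    h = hashlib.sha256()
    # Length prefix so media/claim boundaries cannot be shifted.
    h.update(len(media).to_bytes(8, "big"))
    h.update(media)
    # Canonical JSON so the same claim always hashes identically.
    h.update(json.dumps(claim, sort_keys=True).encode("utf-8"))
    return int.from_bytes(h.digest(), "big") % N


def sign_media(media: bytes, claim: dict) -> int:
    """Signer side: producing this value requires the private exponent D."""
    return pow(digest(media, claim), D, N)


def verify_media(media: bytes, claim: dict, signature: int) -> bool:
    """Viewer side: anyone holding the public key (N, E) can check it."""
    return pow(signature, E, N) == digest(media, claim)


if __name__ == "__main__":
    video = b"...stand-in for MP4 bytes..."   # hypothetical placeholder data
    claim = {"creator": "Revel.ai", "ai_generated": True}
    sig = sign_media(video, claim)
    print(verify_media(video, claim, sig))            # True: show the badge
    print(verify_media(video + b"\x00", claim, sig))  # False: file was altered
```

Because the claim is hashed together with the media, editing either one (appending a byte to the video, or flipping `ai_generated` to `False`) makes verification fail, which corresponds to the eye badge disappearing. The sketch deliberately leaves out per-media certificates and the certificate authority; it only shows why any downstream change breaks the signature.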

Justin Hendrix:

If I encounter the asset in the wild on any player, as long as the asset hasn't been manipulated, will it still carry the mark?

Jeff McGregor:

That's a great question. The player has to be C2PA compliant, but the way that we've architected the system, that video can be shared using a simple embed code, which carries C2PA compliance with it. Now, we do expect that the C2PA as a standard will continue to gain adoption across the ecosystem, and more and more publishers will start to show that eye as more content has those details associated with it.

Justin Hendrix:

There are social platforms like YouTube, but then there are also players like JW Player or others across the internet that serve video. Have any of them adopted the C2PA standard yet?

Jeff McGregor:

Not yet. I know there are several C2PA members that are working on getting these updates into the players. So if that hasn't happened now, it probably will happen in the near future. In terms of the platforms or even browser companies, no, those are not involved with C2PA.

That said, I think this is where the policy piece comes in. What I think we show with this video is that all the pieces are there. And by Revel's participation in this, they are saying we, like many other Gen AI contributors, want to be transparent. In fact, it's attribution for us, which actually begins to address other problems with Gen AI on ownership. So a lot of Gen AI providers don't have a problem with this.

So if Gen AI creators are marking content, the tools exist and an open standard exists, there's one major piece of the puzzle that's missing at scale. And that's for platforms to accept and display the standard. And I think that's the really interesting policy piece here. It's almost as if people online now not only want to be transparent, have the tools to be transparent, but they're being blocked from transparency by those that are either slow or unwilling to adopt standards. And there may be other standards that help transparency too, but this is the only one we know for digital content, and it exists and it works.

The big call to action here is this is an open standard. Everyone can comply with it. It is beneficial to society to have more transparency in digital media. We all see where this thing is going. We are not far off from being completely flooded with synthetic media, and it probably will require some legislative pressure for certain companies to act.

I think Microsoft is very vocal about being involved in C2PA. They may be an early mover, but then you have social media networks that may be later adopters, or may need legislative pressure to actually make the move. But the big tipping point here is, "Hey, this stuff is out there and it's ready to go." So everybody actually can act today if they choose to.

Justin Hendrix:

And are there any policy makers you've talked to about it? Have you shared the demo with anybody?

Mounir Ibrahim:

I just came back from Washington last night. I was at the Summit for Democracy. In terms of lawmakers and legislators, we had a variety of meetings with a variety of organizations at the federal level and in Congress for two purposes. One was previewing this video. Also, last week we announced a project we're working on with Microsoft in Ukraine using the same technology essentially, but from an authenticated space using our camera SDK to document cultural heritage destruction throughout the country. So we were there presenting that at the State Department, USAID, and NSC/White House teams.

We previewed this video, especially for those that are really thinking about generative AI. And one of the interesting things that appears to be happening in Washington is that they're going to be starting up, it appears, an IPC process: interagency discussions that may eventually lead to policy at the federal level on generative AI.

And I do believe that these ideas of transparency in content are going to be discussed and be part of whatever best practices and/or policies might come out. At least, that's what I'm hearing. It's still a longer process; it'll probably take months. I also know of several congressional offices on the Senate side that are considering legislation calling for transparency on content in high-risk areas, particularly areas that may touch on national or global security, but also addressing the name, image and likeness of known persons.

Justin Hendrix:

As far as your corporate sales pitch around this, what are people buying from you if they buy the ability to implement this standard? Are they buying a protocol? Is it a piece of software that does the encoding?

Jeff McGregor:

It's a piece of software that has a bunch of infrastructure that sits behind it, and we manage a trusted certificate authority where we can issue a per-media certificate, digitally sign the content and have it conform to emerging standards, basically in a turnkey fashion. So it cuts all of the technical complexity out and turns it into an infrastructure service.

Justin Hendrix:

So in that way, it's like an SSL certificate or something that I buy for my website.

Jeff McGregor:

Yep, that's exactly right.

Justin Hendrix:

Okay. Do you have any idea what it'll cost?

Jeff McGregor:

Fractions and fractions of a penny per certificate. The cost is nominal on a per-media basis, and Truepic as a company is looking to drive scale. We look at it this way: every piece of content generated on the internet should be signed, and it should not be prohibitively expensive for a company to sign its own content.

Justin Hendrix:

I’m speaking to you during the week that a bunch of AI experts penned a letter calling for a pause in training models more advanced than GPT-4. That letter contains some pretty dire scenarios, end of the species type stuff. But along with the information ecosystem being polluted, knowing what you're working on, knowing where we're at in the process of the adoption of these things, how worried are you in the near term?

Mounir Ibrahim:

I am worried. I am. Where my concern really lies is at the local level. Think about the Vice article about the high school in Carmel, New York. That to me is really the concern. Bad actors and state sponsors, they're going to weaponize this. But my sense is the weaponization of it won't be around these big macro-level events. It's a lot easier to know and debunk in a matter of seconds that Trump isn't in handcuffs than it is to debunk a fake of your local principal letting loose a string of racist remarks.

And on those local levels, particularly in areas that have a history of violence or sectarian or racial tension (think parts of India and Pakistan), it could really lead to chaos. That's really my concern. So I am concerned, and I think the idea of transparency in content is the best option we have, aside from major media literacy efforts, which will take a generation. I think this can help mitigate a lot of the issues and at least force people to kick in some media literacy skills when they see the modal. But the big question to me is at what point these larger platforms are going to begin engaging on it, because their incentives are just not aligned right now.

Jeff McGregor:

My concerns mirror Mo's. The other concern that I think we both share is that as we continue to see more inauthentic content spread across the internet, it's also going to discredit real content. That creates a really challenging dynamic, I think, for the whole information ecosystem.

And if we all start to lose trust collectively in this shared sense of reality, it's going to be very hard to operate online and make anything from dating decisions to political decisions and everything in between. I would underscore the media literacy point as well. It's not a fast change, but Mo's point about the one-to-one exploitative use of the technology stands: I think it will, unfortunately, continue to harm people who have been harmed in the past. You have non-consensual deepfake pornography. With that being used one-to-one on a local level and spreading, it's just going to be a mess. So I think the media literacy part also has to accelerate very rapidly.

Justin Hendrix:

Thanks for your time.
