Pro-Trump Telegram channel preserved by researchers studying hate speech captures planning for January 6

Justin Hendrix / Jul 13, 2021

A group of researchers in Germany who explore computational linguistics, hateful and oppressive speech, and how social media exacerbates it studied and preserved a public Telegram channel chronicling nearly five years of discussion among supporters of former President Donald Trump. In the days before the insurrection at the US Capitol, some members of the channel discussed traveling to the Capitol and preparing for violence.

The team, including Tatjana Scheffler, an assistant professor of digital forensic linguistics at the Ruhr-Universität Bochum, Germany; Mihaela Popa-Wyatt, a Marie Curie Fellow at ZAS in Berlin; and Veronika Solopova, a Ph.D. candidate at Freie Universität Berlin, published a repository of the channel and a paper evaluating it earlier this month. Observing the dialogue over time, they describe how the channel’s members “gradually went from discussing governmental overthrow as a theoretical possibility to planning the January 6, 2021 Capitol riot by sharing information on hotels and transportation in Washington, DC, and finally discussing the aftermath of the event.”

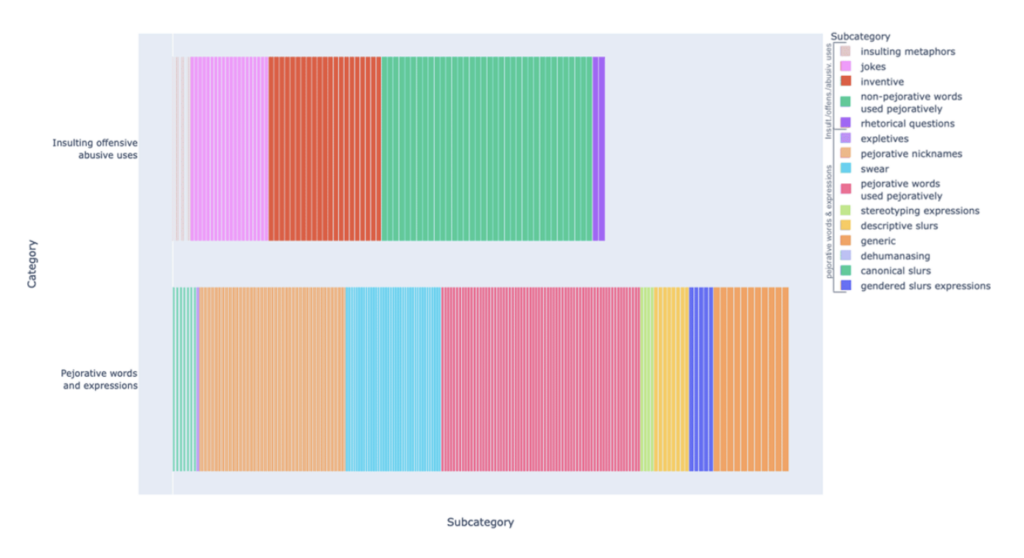

The goals of studying the Telegram channel included defining a taxonomy of harmful speech in it, studying mechanisms to detect that speech, and ultimately informing a broader investigation of the relationship between hate speech and harm. For instance, Popa-Wyatt hopes to understand “how oppressive speech shifts norms of society, retrenching social hierarchies and in particular how social media contributes to that trend and exacerbates it.”

“We're very broadly interested in how language can cause harm on the Internet. I'm a computational linguist and in computational linguistics the view is very practical,” said Scheffler. “We try to find hate speech- really very direct hate speech- and then find some ways to automatically identify that. But there are a lot more subtle ways that language can shift norms and cause harm- you don’t have to use explicit slurs for this to happen. So we thought, where can we find some data for these more implicit, problematic types of language?”

That led the team to Telegram, where they discovered a pro-Trump public channel active since December 2016. Telegram, a messaging app developed by a Russian entrepreneur in 2013 that boasts more than 500 million users, supports public and private channels where individuals engage in dialogue on a range of issues. The channel the researchers preserved contains 26,431 messages through January 2021 that represent a “continuously evolving isolated ‘echo-chamber’ discussion, produced by 521 distinct users,” they say in the Journal of Open Humanities Data, where the full dataset is now available for other researchers to study.

“The interesting thing is that we can see how they go from far-right discussions of many topics like abortion, or immigration, or many things into the discussion of what was going on with the election and the planning- like exact planning- of how they will go to Washington, how they will join their efforts to get there, and then the aftermath of what was happening,” said Solopova, who manually annotated more than 4,500 messages exchanged in the channel in the lead-up to the insurrection at the Capitol and its aftermath.

While she saw no evidence any of the individuals in the channel entered the Capitol, she believes some of the participants were at the site of the insurrection and prepared for violence. “One guy proposed to the others to go with him in a car to Washington. They were discussing which hotels were the closest to the Capitol. The most striking message which I remember was, ‘in the morning session with Donald Trump, just take food. In the second session, near the Capitol, take everything- guns, food, everything you have.'”

The researchers are somewhat pessimistic about the near-term capacity of computational systems to adequately recognize hate speech and other problematic language that may lead to harm, beyond recognizing the most obviously problematic terms. Current technologies miss language and patterns of language that are problematic, and conversely, mistakenly flag language that is not in fact problematic. “These systems are still not diverse enough,” said Scheffler.

There are also basic questions to be answered about how language leads to harm. It is very difficult, the researchers say, to locate the potential for harm in the language itself. That requires a much more sophisticated understanding of the motivations of the individuals involved. “It’s part of an entire package, and language is just one part- the ideologies, the narrative, the structural inequality that is in the background” also have to be considered, said Popa-Wyatt. This presents a challenge for content moderation systems that rely largely on text.

But Solopova is optimistic that future systems for content moderation may get better at understanding context and provide human moderators with an advantage. “We can use hybrid systems to help the moderators not to directly read the messages, but probably read only some linguistic features” to which they can react, she said.
