Poll Indicates Broad Support for Regulating AI-Generated Media Related to Elections

Tim Bernard / Sep 26, 2024

There is overwhelming support among US registered voters for regulating the use of generative AI in political advertising, an issue that appears to be growing in salience, according to a new Tech Policy Press/YouGov poll. The poll was fielded to an online sample of 1,118 voters from August 26 to August 28, 2024.

Identifying AI-Generated Political Content

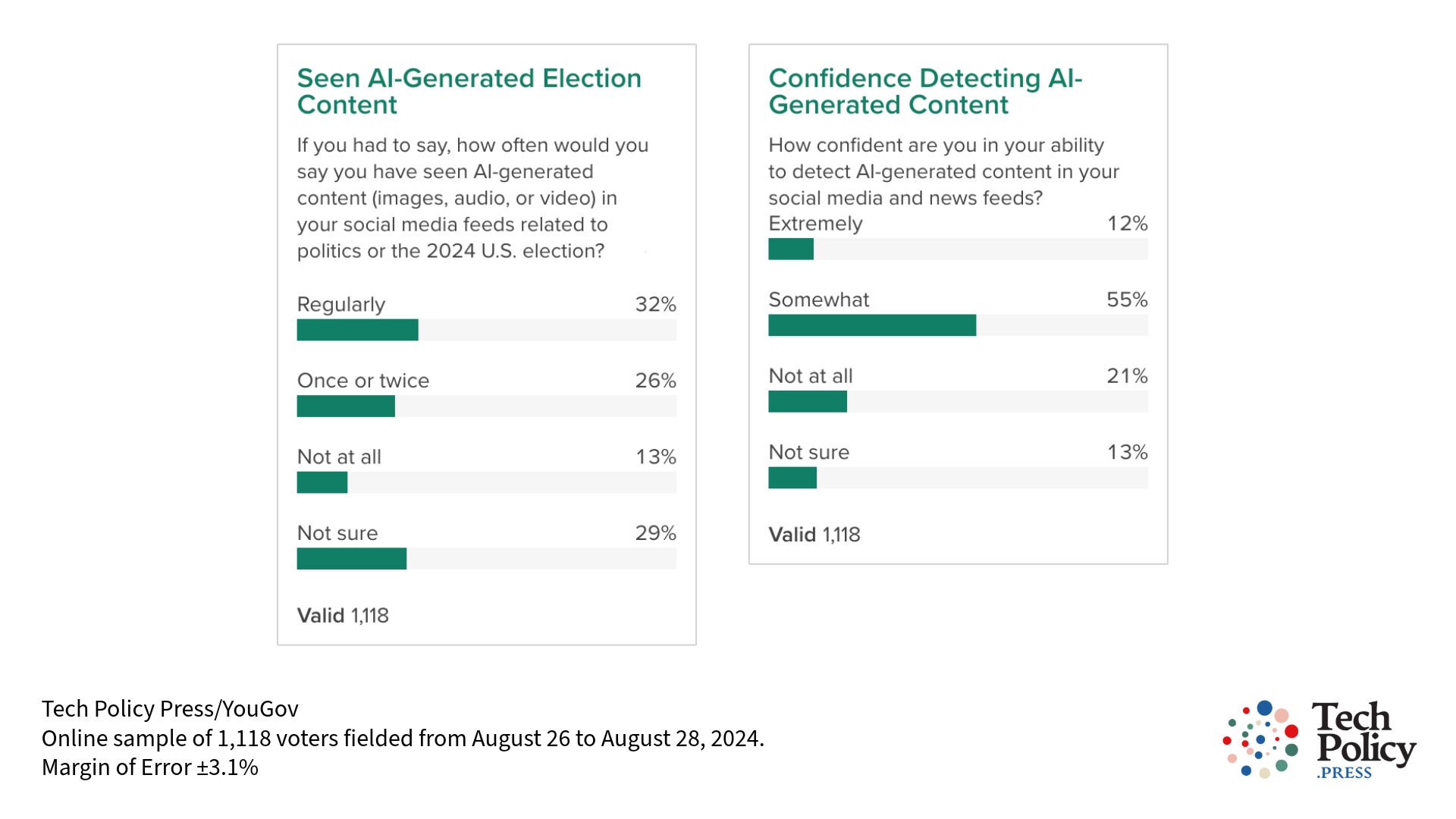

58% of respondents reported seeing political content in their social media feeds that they believed was the product of generative AI, a substantial increase from 47% in the June poll. Given high-profile examples such as the AI-generated image of Vice President Harris presiding over a Soviet-style convention that former President Donald Trump shared on Truth Social in August, it is unsurprising that more people have encountered such media in their feeds. At the same time, public debate over the propriety of this conduct raises two other possibilities: respondents may now be more likely to notice this type of content, or they may assume they have seen it even if they did not recognize it at the time.

The poll also asked respondents how confident they were in their ability to identify AI-generated content. While only 12% described themselves as “extremely confident,” 55% judged themselves “somewhat” confident. Recent studies of people’s ability to distinguish genuine from AI-generated media have found average accuracy of around 50% to 60%, only marginally better than a random guess, suggesting that many respondents overestimate their ability to tell real from fake.

At the same time, some current political uses of AI-generated imagery, including the image of Vice President Harris mentioned above, are most likely not intended to be taken as genuine. Rather, they are akin to political cartoons with unflattering caricatures (some have argued that the false meme about J.D. Vance’s interest in furniture served a similar role, with a fabricated citation in place of a fabricated image). If this type of media was foremost in the minds of some respondents, it is understandable that they would believe they are well equipped to, in effect, distinguish jokes from facts.

The responses also show a clear trend: younger respondents were more confident in their ability to identify AI-generated media, and older respondents much less so. The studies linked above found directionally similar trends in actual detection ability (albeit not always at the level of statistical significance), which may reflect perceptual abilities that are known to decline with age, especially with respect to audio content. Younger people are also likely to be more familiar and comfortable with the technology.

Support for Regulation

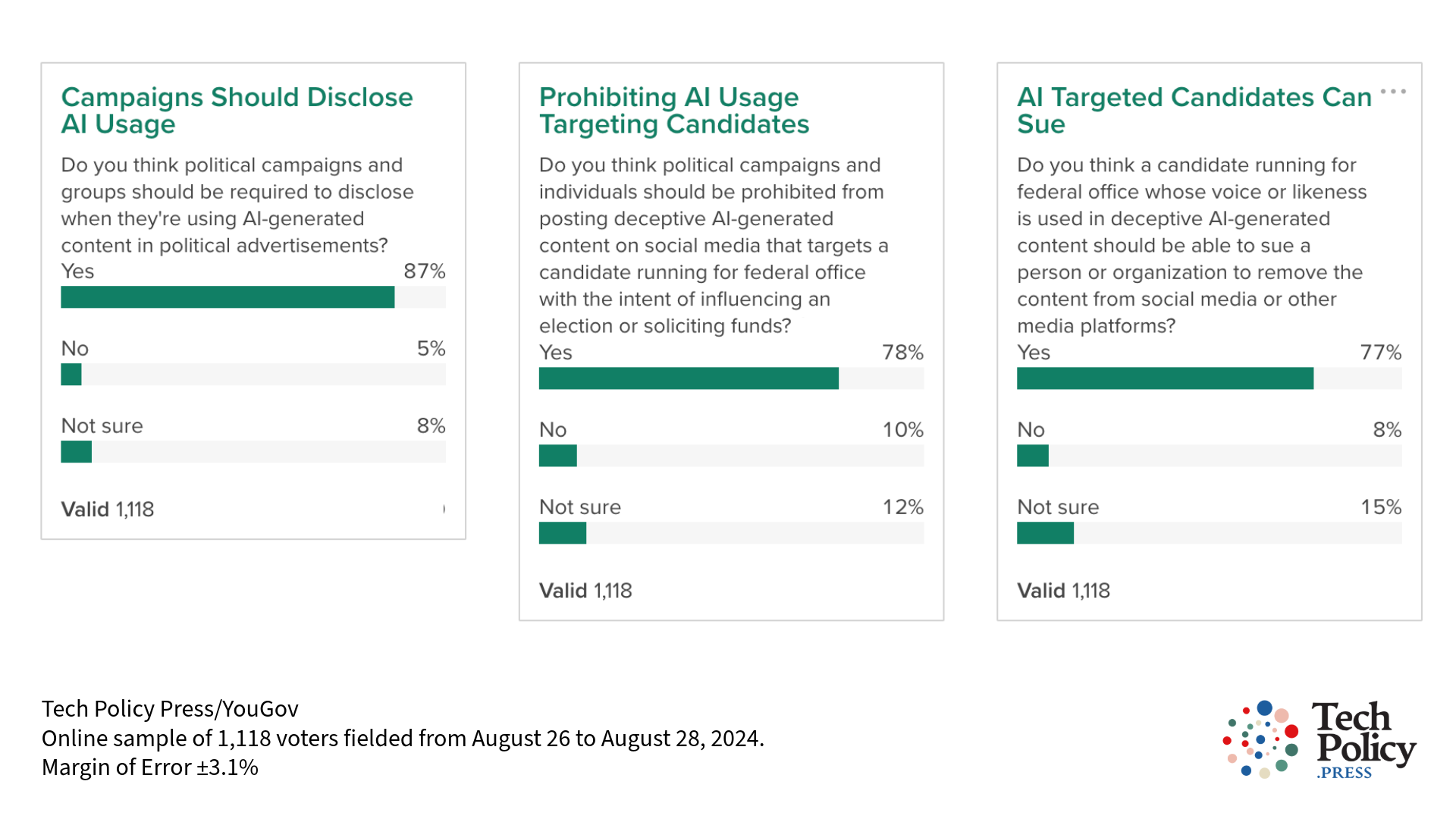

The poll also probed respondents’ support for three proposed measures for regulating the use of AI-generated media:

- Requiring a disclosure for the use of generative AI in political advertisements.

- Prohibiting the posting of deceptive AI-generated content on social media that targets a candidate for federal office with the intent of influencing an election or soliciting funds.

- Enabling candidates for federal office whose voice or likeness is used in deceptive AI-generated content to sue to have the content taken down.

The first of these measures is contained within a Senate bill (S. 3875) that was introduced by Sen. Amy Klobuchar (D-MN) and co-sponsored by Sen. Lisa Murkowski (R-AK). The poll results indicated very high levels of support for this proposal, with 87% in favor and only 5% opposed, and support was consistent across the political spectrum.

The second and third measures, which drew only slightly lower levels of support (78% and 77% in favor, 10% and 8% opposed, respectively), can be found in another bipartisan bill sponsored by Sen. Klobuchar (S. 2770). (This bill additionally allows candidates for federal office to sue the party responsible for deceptive media featuring them for damages, as well as to have the offending content removed.) These measures appeared to be somewhat more popular among liberals and Democrats than among those further right on the political spectrum, though clear majorities in all population segments still supported them.

For all three measures, there was an apparent trend of older respondents favoring regulation to a greater extent than younger respondents. This aligns with the finding above that the younger segments indicated a greater confidence in their ability to identify AI-generated content, suggesting a higher level of general comfort with this media.

California Gets There First

California’s recent torrent of AI-related legislation included a bill requiring disclosure of AI-generated content in political advertising (AB-2355) and another prohibiting deceptive AI-generated media targeting election candidates (AB-2839). Both of these bills were signed into law by Gov. Gavin Newsom on September 17, along with a third (AB-2655) that makes platforms liable for not promptly removing deceptive AI-generated elections content. The latter two bills are already the target of a lawsuit claiming that they are incompatible with the First Amendment.

Perhaps Congress will be able to draw lessons from any weaknesses that are revealed in California’s new laws, and create federal legislation that meets popular demand, is defensible in court, and promotes the integrity of information related to elections.

The poll results can be downloaded here.
