Online “Wormholes”: How Scientific Publishing Is Weaponized to Fuel COVID-19 Disinformation

Adi Cohen / Sep 1, 2021

Earlier this year at a school board meeting in Indiana, Dan Stock, who calls himself “a private practice family medicine physician”, argued that mask wearing causes more harm than catching COVID-19 and that vaccines are dangerous. Videos of his speech went viral and gained millions of views before many were taken down by YouTube and Facebook. Alongside posts about his speech, viewers shared links to 22 articles Dr. Stock referenced in support of his arguments. Ten links on the list referred to scientific papers on the National Institutes of Health (NIH) website, and two at the New England Journal of Medicine.

Multiple fact checkers subsequently debunked Dr. Stock’s arguments, providing more scientific context and corrections to rebut his statements. It turns out that one of the sources Dr. Stock referred to is a retracted paper on the NIH website. The paper, “Facemasks in the COVID-19 era: A health hypothesis”, is the most shared link on Facebook and Twitter in 2021 to date from the U.S. National Center for Biotechnology Information (NCBI) and its PubMed papers database. Despite the retraction note (and reports on the study’s falsehoods by fact checkers), thousands continue to share it as a proof point that even the NIH website shows mask wearing is damaging.

Unfortunately, this is not a unique case. Even before the COVID-19 era, linking to scientific studies that confirm certain viewpoints was common practice for vaccine skeptics and proponents of health misinformation. But the pandemic further exposed how decentralized, fragmented, open-access online scientific literature systems provide opportunities to manipulate perceptions of scientific evidence and conclusions. Sharing a selection of hyperlinks to scientific papers, along with a request that the user “do your own research”, plays a role similar to that of “wormholes” in science fiction. The links transport the user between Facebook, Twitter and other messaging and social media apps, jumbling science and facts in transit.

Just as the supposed physics of wormholes includes traversing time, these behaviors also exploit a temporal dimension of scientific information online: sharing anachronistic, unrelated, or simply cherry-picked studies in a new context. This tactic has proven effective in driving conversations that revolve around “alternative facts” about COVID-19 treatments, most recently about Ivermectin and mRNA vaccine risks. Mitigating this challenge might require more effort from scientific publishers, rather than just tech platforms and fact checkers.

Weaponization of PubMed: not just preprints and retractions

Recently, greater attention has been given to the challenges presented by retracted studies, and by preprint versions of studies that have not been peer-reviewed, which have been shared widely online during the COVID-19 pandemic. Retracted studies gain eternal life online if they can serve antivax, anti-mask, or conspiracy theorist positions (Wakefield’s retracted studies linking MMR vaccines to autism are perhaps the most prominent example). Retraction Watch, a blog dedicated to monitoring retractions of scientific studies, lists over 130 retracted papers about COVID-19, including a paper about the connection between 5G technology and the coronavirus, and a paper about COVID-19 vaccine hazards that gained over 600k views and was shared widely even after its retraction.

University of Washington researchers Jevin West and Carl Bergstrom noted this phenomenon in an article, “Misinformation in and about Science”, documenting how a preprint of a study about the origins of COVID-19 was shared widely through the BioRxiv paper repository and covered by dozens of media outlets, only to be withdrawn. A similar incident occurred in June 2021 with a study about Ivermectin, the anti-parasitic drug touted by some as a cheap alternative treatment for COVID-19 despite explicit recommendations against it from the U.S. Food and Drug Administration (FDA) and the World Health Organization (WHO).

But the problem does not lie only with retracted and preprint studies. The majority of the most popular links from PubMed and NCBI on Facebook and Twitter are peer-reviewed studies and scientific articles. Most of them are shared by disinformation propagators to persuade the public that health authorities are hiding facts in an effort to push vaccinations and mask mandates, and to deny people access to supposedly cheap and effective therapies and cures. In today’s decentralized information environment, as papers migrate from a scientific server to a social media platform, they are easily decontextualized. Posters will often present a link, or a list of links, to make the case that the “evidence” and “truth” of their positions are being ignored, while leaving out the full scope or actual results of the studies they reference.

[Table omitted. Source: BuzzSumo]

This process bears similarities to the phenomenon of “Platform Filtering” as defined by P.M. Krafft and Joan Donovan, where “deliberate information filtering” is done on one platform before a re-contextualized “fact” is claimed on another platform. The difference is that the links shared from scientific repositories are not necessarily untrue or discredited (for instance, a study based on one clinical trial showing high efficacy of Ivermectin in treating COVID-19). Yet when antivax actors share these studies on social media, the re-contextualization often works by exploiting the typical reader’s lack of scientific expertise and literacy. The reader is sent through the wormhole, where facts themselves are “reborn” by divorcing study content from essential context such as time (e.g. a study long debunked) and space (e.g. an academic website where scientific review norms apply). In the absence of key context, an alternative truth and body of knowledge can be reclaimed, and even presented as “backed by science”.

In August 2021, Del Bigtree, a prominent antivax online influencer, tweeted a link to a paper published in October 2020, with the title “Informed consent disclosure to vaccine trial subjects of risk of COVID-19 vaccines worsening clinical disease”.

The paper appeared before any COVID-19 vaccine was approved. Its authors wished to highlight the possibility that the vaccines then being tested against COVID-19 “may worsen COVID-19 disease via antibody-dependent enhancement (ADE)”, and argued that participants in the vaccine trials should be warned. ADE is a condition in which the antibodies present in the body (from a vaccine or a previous infection) fail to neutralize the virus, so that an infection could result in more severe symptoms of the disease.

But as health authorities started to approve COVID-19 vaccines, PubMed’s link to the paper began to circulate online, with posts “informing” readers that the vaccines may cause ADE. Viral videos warning about ADE referenced this paper, and blog posts from antivax publications cited it. Fact checkers and science communicators weighed in as the ADE theory went viral, explaining that the popular paper does not prove ADE in the case of COVID-19 vaccines, and that results on the ground actually show the opposite. Yet circulation of the paper continued, and even increased over the summer of 2021 alongside the arrival of the new Delta variant. Now, CrowdTangle and BuzzSumo data suggest it has reached over 40k shares on Facebook and Twitter, as antivax activists claim even the NIH knows the hidden truth.

Clickbait papers, fanning the flames

Theories that COVID-19 vaccines cause ADE have been pushed online throughout 2021. One of the main voices pushing the conversation is Dr. Robert Malone, recently named by The Atlantic as “The Vaccine Scientist Spreading Vaccine Misinformation”. As an infectious disease scientist, Malone promotes what he says are potential harms that mRNA vaccines could cause. He became a popular talking head and social media influencer, warning about the cytotoxicity of the spike protein in the mRNA vaccines. To his more than 270k followers on Twitter, Malone often shares scientific papers and articles that supposedly support his theory.

When the Delta variant caused a new wave of COVID-19 spread in the United States, Malone latched on to a new article, an August 2021 “Letter to The Editor” published in Elsevier’s “Journal of Infection”: “Infection-enhancing anti-SARS-CoV-2 antibodies recognize both the original Wuhan/D614G strain and Delta variants. A potential risk for mass vaccination?” The letter warns about the possibility of ADE complications due to the new variant. In less than a month, it was shared over 10k times on Twitter, and thousands of times on Facebook. Many of the shares that describe the article as “peer-reviewed” trace back to Malone’s tweet, which has been retweeted over 1.4k times. But, once again, the “Letter to The Editor”, though peer-reviewed, is not based on an experimental study, and is noted to be speculative.

While the theory about COVID-19 vaccines and ADE exists in the public discourse independently, scientific papers are nevertheless used to build the credibility of the argument, even if they are speculative, or anachronistic. This is not to suggest that the studies should not have been published. But once they are published, propagators of disinformation find it very easy to ignore the date of publication, the depth of the study and reactions within the scientific community, while emphasizing elements such as their existence on the NIH server, or the fact that those papers were peer-reviewed.

Cherry picked science has never been easier: the case of Ivermectin

Similar trends can be seen in references to clinical trials and reviews that position Ivermectin and Hydroxychloroquine as effective therapies for COVID-19, research papers claiming that herbal remedies and vitamins showed positive results in treatment, and articles suggesting a lack of efficacy in COVID-19 vaccines. Only some of the papers referenced are preprints (not yet peer-reviewed) that originate on the preprint servers MedRxiv and BioRxiv, or papers that were retracted or withdrawn shortly after publication. It appears that the current structure of online scientific literature enables quick “cherry-picking” by actors who seek to persuade others to their point of view, even when the broader evidence runs counter to their argument.

For instance, the third most shared link from PubMed in 2021 (with 9k shares on Facebook and Twitter) is a review supporting the use of Ivermectin in treating patients with COVID-19. The paper was published in the July-Aug 2021 issue of the American Journal of Therapeutics. In addition to the shares of the PubMed link, the link from the journal was shared almost 33k times on Facebook and Twitter. This study, alongside several other clinical trials, is touted by proponents of the use of the drug to treat COVID-19.

Like other Ivermectin studies, it has been highlighted by several right-wing news outlets, including The Epoch Times. “It’s time to follow the evidence-based science”, wrote Derek Sloan, a Canadian conservative politician, to his 90k followers on Facebook, linking to the paper. The only problem is that this review is just one study out of dozens that have addressed the use of Ivermectin for COVID-19. Citing medical research experts, Politifact noted that the review analyzed a series of low-quality, non-peer-reviewed clinical trials to reach a conclusion that contradicts the current scientific consensus, which is based on other clinical trials: that for now there is not enough evidence to justify using the drug against COVID-19. Further, the study was authored by members of the UK-based BIRD group (British Ivermectin Recommendation Development), who advocate for the use of the drug. A review reaching the opposite conclusion was published several days later. Its URLs on PubMed and in Oxford University Press’ journal Clinical Infectious Diseases received less than 30% of the pro-Ivermectin review’s shares on Facebook and Twitter.

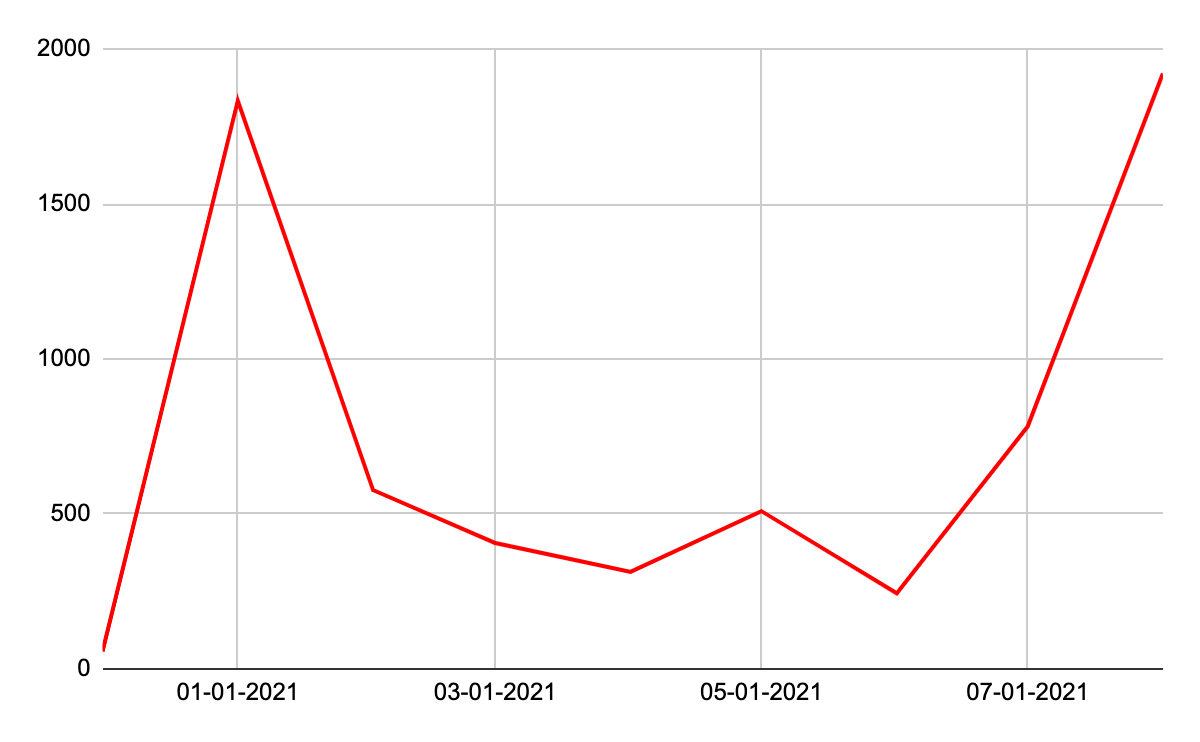

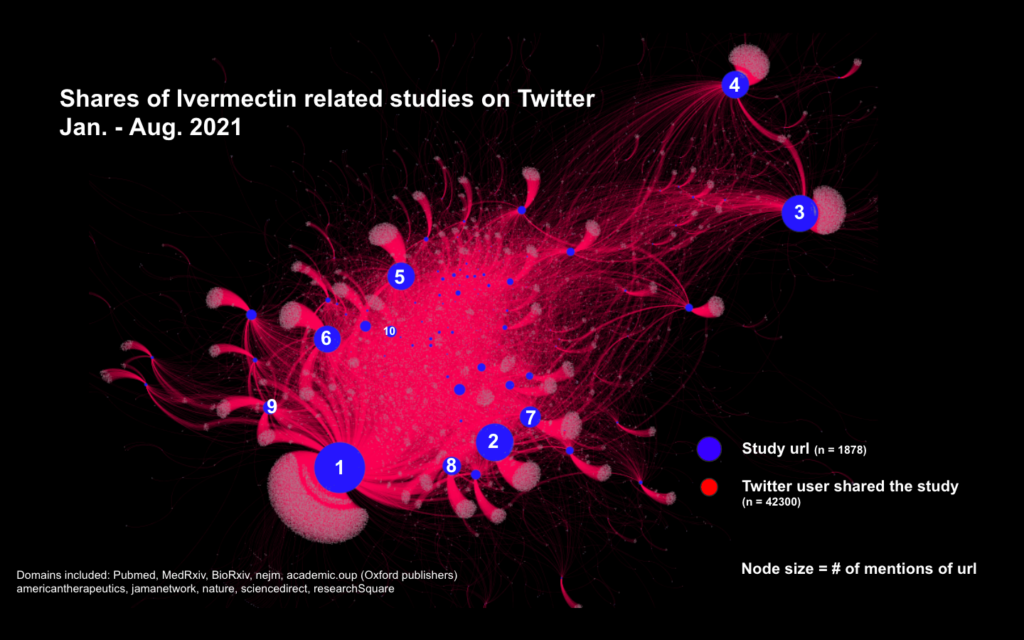

The map below shows shares of 1,878 URLs linking to papers on online publication portals and servers including PubMed, MedRxiv, BioRxiv, Oxford Academic and others. Of those links, 14 were tweeted more than 1,000 times since January 2021. Out of those, 11 studies supported the use of Ivermectin. The review mentioned above, which supports Ivermectin use, was by far the most popular paper, tweeted more than 20k times according to the data. Altmetric data suggests it was tweeted by 36k users, with exposure to over 12.5 million users (the discrepancy might be due to different methods of collecting URL data from Twitter). Another review supporting the use of Ivermectin, published on July 6, 2021, is the second most shared paper on this map. The “Expression of Concern” published by its authors on Aug. 9, 2021, which notes that “[the] authors have learned that one of the studies on which this analysis was based has been withdrawn due to fraudulent data”, was shared by only around 300 users, and mostly not from the same circles, according to the data.

But more importantly, as the map shows, it’s not just a numbers game. Oxford Academic’s Open Forum Infectious Diseases journal published the review and its authors’ Expression of Concern at two separate URLs. These links continue to circulate in two separate circles: those who support the use of Ivermectin continue to share the study as proof that the drug is effective, while other users, most notably the first author of the paper, share the Expression of Concern.

As with the arguments about ADE, medical experts are constantly weighing the existing body of research on Ivermectin, and serious journalists have published explainers about the evolving knowledge related to the drug. But if your agenda is to advocate for using the drug, you only need to cherry-pick the links to the studies that support your agenda, and send your followers there. Some of those studies might be “low quality,” or later retracted, but to the layperson who is unfamiliar with the whole body of research they seem valid, especially if they are hosted on the NIH website or on a distinguished publisher’s server.

From rabbit holes to wormholes: abuse of credible sources produces effective disinformation

While media outlets, social media and online search platforms are rightfully the immediate target of critics concerned about the circulation of online misinformation, mitigating the wormhole challenge puts more of the burden on other content platforms: in this case, scientific journal publishers and the operators of scientific study repositories. Those platforms should take into account that many of their visitors are there to check specific URLs, provided to them with misleading context. Some repositories, mainly the preprint servers, have already taken several steps to curb abuse by disinformation propagators. Those steps include limiting the publication of preprints on heavily politicized topics such as the use of Ivermectin. But the challenge is not just with preprints. Dissenting views and evolving knowledge are essential, and the challenge is not to eliminate an irrelevant or obsolete study, but rather to contextualize it.

Here are three ideas that may help mitigate the phenomena described above, and therefore reduce scientific and medical disinformation:

1. Scientific publishers need to invest more in defensive measures. To avoid having their products employed in campaigns that disinform the public, the publishers and operators of science and medical information publishing platforms need to do more to monitor traffic on their sites, provide more transparency for fact checkers, and flag to the news media and platforms when scientific studies are being abused to propagate disinformation. For example, if NCBI detected that a specific paper was suddenly gaining popularity online, that could trigger a quick contextualization.

2. Consider design changes to discourage abuse. In addition to adaptations that have already been made to their user interfaces, science and medical information publishers should consider highlighting the date of publication (similar to what The Guardian has done with older articles), and adding more context at the specific URL, such as recent studies published on the same topic.

3. Double efforts to work with the news media, platforms and governments to curb disinformation. Science and medical publishers need to substantially resource their efforts to work with other stakeholders charged with mitigating disinformation, including social media platforms, the news media, and where appropriate, governments. This may be simple, including such interventions as collaborating with fact checkers to highlight when a study has been included in an article or post debunking or fact-checking claims made about its subject. This would allow a repository to give more context around an “abused” study, even if it’s not retracted. Or, such collaboration may involve more complex and strategic efforts, such as crafting better content moderation policies or even regulations that could thwart the spread of deadly disinformation.

- - -

Overall, the interventions mentioned above could help, but will not provide an ultimate solution to the problem. Platforms must still take more substantial action to stop the spread of disinformation, as the US Surgeon General recently recommended. But to understand the serious challenges to science communications, and to our information environment in general, we should consider more research to theorize and understand these “wormholes”: the links that are dragging users from one platform to another, permitting them to be easily deceived even on the basis of what is often, by itself, valid information.
