Online Safety Depends on a Growing Trust and Safety Vendor Ecosystem

Toby Shulruff, Lucas Wright, Jeff Lazarus / Jul 25, 2025

Toby Shulruff is a research assistant on the History of Trust & Safety Project at the Trust and Safety Foundation (TSF), a 501(c)(3) non-partisan, charitable organization and the sibling organization of the Trust and Safety Professional Association (TSPA), which organizes TrustCon. Lucas Wright is a Ph.D. candidate in the Department of Communication at Cornell University. Jeff Lazarus is a UC Berkeley Tech Policy Fellow and a trust and safety professional at Meta; the views expressed here do not reflect those of his employer.

This year’s TrustCon, the annual gathering of trust and safety professionals, wrapped this week in San Francisco. One component of the event is an exhibition that features vendors of services such as AI solutions for content moderation, forensic tools, and other online safety products and services.

Since the 2000s, online platforms have come to rely more and more on third party vendors to do trust and safety work. This “third party ecosystem” does much more than outsourced content moderation; it now plays a vital role in core trust and safety work. Third parties can be found at every level of the trust and safety “stack,” from content and conduct moderation to policy-making and community standards development, tooling for content moderation, and, more recently, compliance with new regulations.

While this slow, necessity-driven evolution is well known to trust and safety professionals working inside platforms, there has been little attention outside the field to how reliant platforms have become on this third party ecosystem. This dependence has crucial implications for the trust and safety field as a whole and for the end users who expect platforms to prevent, detect, and mitigate abuse, especially as regulatory compliance becomes a priority.

To reflect on how we got here, where things stand now, and the implications of vendorization, we draw on findings from in-depth interviews conducted by the Trust and Safety Foundation as part of the History of T&S Project (Toby), research on the T&S vendor ecosystem (Lucas), and direct experience in the T&S field (Jeff).

How we got here

The origins of the trust and safety field reach back to the 1990s. Third party vendors, contractors, and consultants emerged with the commercialization of the internet in the 2000s. According to interviews conducted for the History of Trust & Safety project between 2023 and 2024 with experts in civil society, government, and academia, as platforms grappled with exponential increases in the volume of user-generated content, in speed (e.g., livestreaming), and in the number of geographies and languages they served, human content moderation at the largest companies shifted from in-house teams to business process outsourcing firms (BPOs).

Systematization—the development of rules, processes, and tooling—was necessary as work was automated or outsourced to BPOs. The kinds of work that could be systematized as “mature flows” or “scaled decisions” grew over time. For example, earlier on it was spam and pornography, but by the mid-2000s copyright violations and child sexual abuse material (CSAM) were also included. By the mid-2010s, terrorism and extremism were added to the list. As one trust and safety professional explained, “There's been a tendency to move some of the frontline jobs from being internal at companies to increasingly being at vendors.”

Early vendors primarily offered labor outsourcing for piecework that companies lacked the ability (or desire) to do in-house: data labeling for classifier development and content review. As trust and safety became more formalized in the mid-2010s, new start-ups offered automated tools for detecting violative content. The largest platforms had already developed some of this capacity internally, but these start-ups, often founded by former employees of the largest platforms, offered automated detection as a service to the rest of the industry.

Of the mid-2010s vendor start-ups, most of those that survived were acquired by platforms (or by the next wave of vendors). The challenge they faced was that many customers needed content classifiers tuned to their specific rules and content formats. But budgets were limited and the cost of building new, custom classifiers was high, which made it difficult to reach the scale needed to sustain a venture-backed start-up.

New developments in the early 2020s altered this equilibrium. First, rapid advances in large language models (LLMs), in which a foundation model can learn new rules through plain-language prompting, unlocked a new kind of automated moderation. Advocates of LLM moderation argue that rather than training a new model on a large set of examples for each policy, a single model can handle the vast majority of cases without retraining. Second, the proliferation of new online safety laws around the world has begun to create a “compliance floor” for trust and safety. Formerly ad hoc operations need to be standardized and systematized so that outcomes can be reported to regulators and features like appeals can be offered to users.

The wave of vendors that coincided with these changes (roughly post-2018, but especially post-2021) took a different approach from the previous wave. Many of these companies still build classifiers that detect specific kinds of violations, like CSAM or hate speech, but they also build infrastructure. Rather than simply selling access to an individual model through an API, vendors sell access to “marketplaces” or “operating systems”: enterprise-grade, modular suites of tools that enable classification but also structure the entire trust and safety workflow. Typically, this includes tools for policy teams to set and refine rules, an interface in which content moderators review content, and centralized dashboards from which managers can monitor workflows and generate reports. Rather than competing directly with more established BPOs and AI developers in the market for content classification, this new wave of vendors is attempting to build and own an emerging complementary market that serves as infrastructure for the classification market.

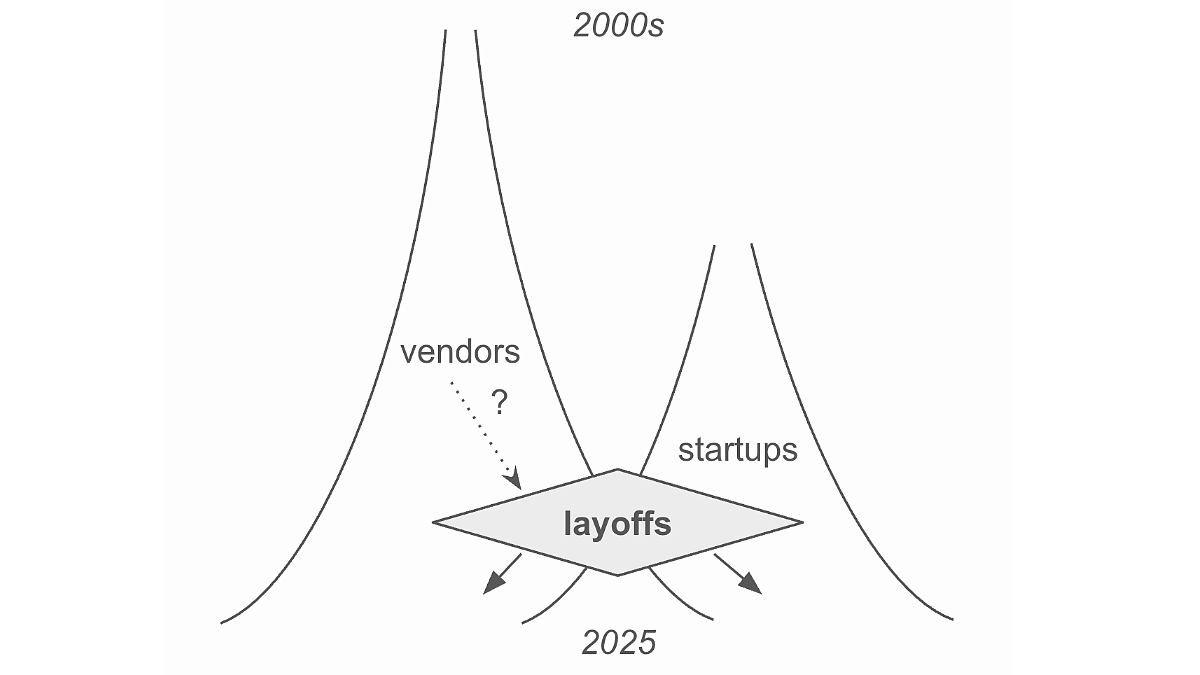

History of Trust & Safety project interviewees connected the layoffs of 2022-2023 to the rise of vendorization. Even before the layoffs, some trust and safety professionals had started leaving larger companies, moving either to vendors or, in more recent years, to startups that were recognizing the need for trust and safety earlier in their growth, a key market for vendors. For instance, an analysis of job postings between August 2021 and May 2023 shows that trust and safety hiring shifted from larger, more established companies to newer ones. As one trust and safety professional told us:

The trust and safety field and way of doing things has sometimes intentionally, and I think sometimes unintentionally, followed the path that those [earlier, larger] companies and the folks who were part of those companies set up from the very beginning. Part of that has been a result of people who have been with those companies now leaving, either they've decided their time is done there and they want to do something else, or the layoffs that have happened... and the indoctrinated ways of those companies are now influencing or impacting or getting carried off to other companies. And so the ideas are flowing in one way in that direction.

In other words, these professionals carried particular approaches to the work with them from large companies to startups and to vendors.


Mapping the current state

Two years ago, Tim Bernard wrote about the evolving vendor ecosystem for content moderation tools on Tech Policy Press. Today, the services offered by external vendors and consultants have become even more diverse. They fall into three categories: identification, tooling, and expertise. (Lucas Wright describes these in a chapter in the Trust and Safety Foundation’s edited volume, Trust, Safety, and the Internet We Share: Multistakeholder Insights, to be published by Taylor & Francis later this year.) While some vendors specialize in one category and others offer all three, these groupings are useful for pinning down exactly what a particular product or service does.

- Identification refers to human or automated content review. This core offering is decision-making at scale.

- Tooling refers to software that aids in trust and safety workflows. This software acts as infrastructure that shapes and supports a wide range of tasks: case management software, dashboards for managers to monitor content across a platform, no-code, drag-and-drop decision trees for creating new policies, etc.

- Expertise refers to vendors and consultants that offer specialized knowledge to help smaller companies set up their own teams and develop new policies, as well as more established consulting firms that view trust and safety as another vertical for their services. In addition, several vendors leverage knowledge of a particular abuse area (e.g., terrorist content), or set of skills (e.g., intelligence collection and analysis) to identify new trends or analyze platform abuse and misuse patterns.

While vendors are most widely associated with identification, the passage of online safety laws across the world is changing the ecosystem. In Wright’s interviews, founders of tooling-focused vendors explained that Europe’s Digital Services Act (DSA) has not generated as many new customers as many had expected. As Stanford scholar Daphne Keller recently explained, the requirements of the DSA focus on process, not content, which creates compliance functions that she compares to “the laws that govern financial transactions at a bank, or safety inspections at a factory.” Many companies are turning to external vendors to achieve this compliance.

However, the DSA is a young law. Compliance norms are still developing, and the European Commission has yet to issue any substantial fines. In this moment of uncertainty, many vendors market themselves as supporting DSA compliance, but most small and mid-sized platforms have little incentive to overhaul their trust and safety systems with new software. Instead, they are focused on filing the right paperwork correctly and on time, which leads them mostly to independent consultants, policy advice, and audits from traditional accounting firms rather than to expensive new software. That said, even tooling vendors are pivoting toward products that align with new regulatory requirements, such as systems that automatically produce transparency reports from vast amounts of collected data.

Near term implications: better tools, more options

The bevy of third party vendors and consultants offering advice, expertise, and a plethora of tools for everything from automated content moderation to compliance reporting makes it easier than ever for early stage companies to perform critical trust and safety functions, a trend that many participants in the History of Trust and Safety project found encouraging. Before the early 2020s, these platforms normally lacked the resources to build such functions in-house and would often choose not to build tooling at all until they hit certain growth or revenue benchmarks. Now, however, platforms can turn to a range of off-the-shelf tools they can customize to quickly set up critical tasks, from detecting abuse to responding to legal requests.

Mid-size and large companies have to think through the “build it or buy it” question when it comes to trust and safety solutions. In many cases, “buying it” may make sense: contracting with a vendor can be faster, simpler, and more efficient than reallocating existing engineers or onboarding new ones to build classifiers, review tooling, or operational flows. But it can also come at a cost: lack of customization, potential fragility in the system (something always breaks), and possible data security and privacy issues. As one History of T&S project interviewee warned, building on past work or decisions can lock teams into certain ways of doing the work, or path dependencies:

They developed these paths for scaling trust and safety, for outsourcing trust and safety...and it is in some ways tactically difficult or operationally difficult to stray from that kind of path. The decisions that were made at those companies seemed to really just still be influencing how everyone else developed their practice. [For example,] for child safety, P[hoto]DNA came before GIFCT, and so there was a precursor there.

Vendors can also bring improved solutions to long-neglected areas of trust and safety. Tooling, for example, has long been the Achilles heel of content moderation. The tools moderators use to take down, keep up, or take some other action on a piece of content have long suffered from underinvestment, leaving them inadequate, poorly designed, and unstable. These tools often lack dedicated engineering support and were not designed with the benefit of UX design and research. As a result, the tools moderators rely on often have user interfaces that seem to come from a bygone era of computing and fail repeatedly, making it harder to review content and harming moderator wellbeing. Third party vendors now offer refined, user-friendly moderator tools with dedicated support and built-in wellbeing features, improving uptime and moderator productivity.

Long term implications: caution ahead

Vendors are now deeply enmeshed in all aspects of trust and safety at nearly every online platform, from early start-ups to companies with billions of users. Those of us who work in or care about the field must recognize the influence this gives them over the practices, metrics, and goals of the field.

Vendors’ products and services now play a more critical role throughout the T&S stack. When a company buys software to enable user appeals of content takedowns or to automatically generate reports for regulators, those are more than just tools; they form part of the infrastructure for the entire operation and lay the groundwork for all of the other T&S functions that depend on them. While early vendor services primarily provided labor, today’s vast array of consulting and tooling services has the potential to create lock-in effects in which a handful of companies shape T&S work across the industry. Once a platform is built on that infrastructure, switching vendors can be prohibitively costly and risks breaking it. Locating accountability within these increasingly complex systems will only become harder for policymakers, journalists, academics, and T&S teams as decision-making is further dispersed through this network.

The third party vendor ecosystem is also likely to play a growing role in shaping regulation and compliance. Vendors have a vested interest in enhanced content regulation and intermediary liability frameworks. The more online platforms need to do to detect, prevent, mitigate, and respond to illegal and harmful speech, and to demonstrate compliance, the greater the opportunity vendors have to offer their products and services. Such regulation has, of course, significant implications for online speech and conduct.

Trust and safety work now relies on third party vendors not only for content and conduct moderation but also for a range of other functions, including policy development, workflows, and compliance. This trend is both enabling trust and safety work at scale for companies of all sizes, starting from a startup’s inception, and altering the landscape of the field. Civil society, policymakers, journalists, and academics need to better understand these shifts in order to collaborate with trust and safety professionals to protect online speech and safety across the many online spaces, apps, and connected products that trust and safety practitioners work to protect.
