October 2023 U.S. Tech Policy Roundup

Kennedy Patlan, Rachel Lau / Nov 2, 2023

Rachel Lau and Kennedy Patlan work with leading public interest foundations and nonprofits on technology policy issues at Freedman Consulting, LLC. Associate J.J. Tolentino and Hunter Maskin, a Freedman Consulting Phillip Bevington policy & research intern, also contributed to this article.

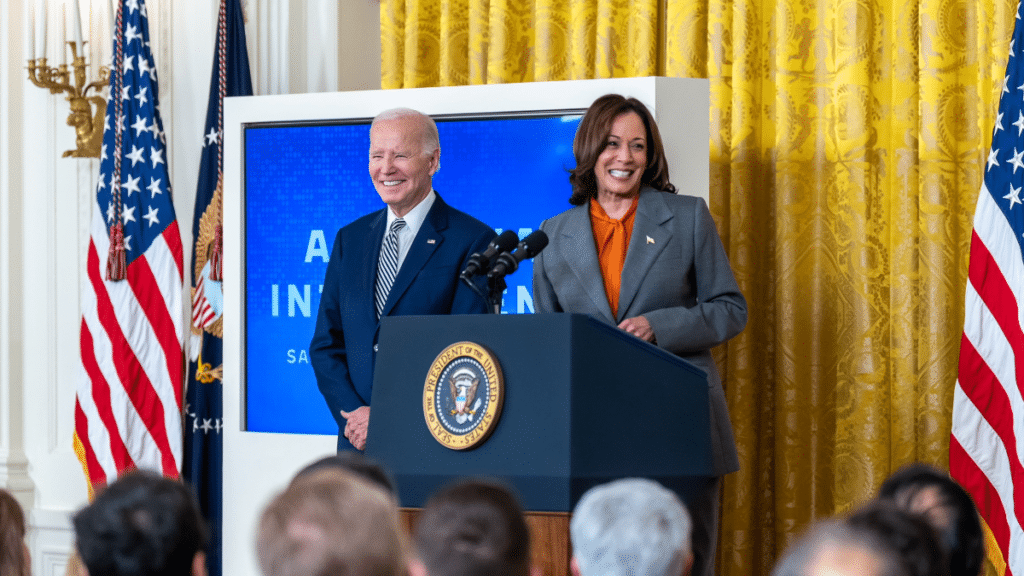

With a government shutdown narrowly averted and a new House speaker finally assuming the role, October saw many significant technology policy developments in the United States. The month marked the one-year anniversary of the White House Blueprint for an AI Bill of Rights, an executive effort to establish five principles to govern the United States’ use of AI, and President Joe Biden issued a landmark executive order on artificial intelligence, with other actions coming shortly after in early November. Continue reading for more details on the executive order, major litigation against Meta, and other domestic tech policy news.

In October, federal agencies engaged on issues ranging from AI and antitrust to net neutrality and semiconductors. As 2024 approaches, the Department of Justice continues to focus on the reauthorization of the controversial Section 702 of the Foreign Intelligence Surveillance Act, which allows the government to collect communications of “non-U.S. persons outside the United States” but has been subject to substantial abuse. The FCC voted 3-2 to move forward with Chair Jessica Rosenworcel’s proposal to reinstate net neutrality. In addition, the agency released tentative rules intended to ensure equitable deployment of and access to broadband services by preventing digital discrimination. Sarah Morris was also announced as NTIA’s acting deputy assistant secretary this month.

In other agency news, the Department of Commerce announced new rules tightening export controls on some semiconductors critical to advanced computing and artificial intelligence. Also, the Securities and Exchange Commission (SEC) sued Elon Musk to force him to testify about last year’s acquisition of X (formerly Twitter). The Federal Trade Commission’s (FTC) antitrust lawsuit against Amazon continued this month, as the agency claims that Amazon used an algorithm to systematically raise prices. Over 30 advocacy groups sent a letter to the FTC applauding the lawsuit and encouraging the agency to “be aggressive in [its] pursuit of these charges as well as the needed structural remedies.”

On the Hill, Rep. Yvette Clarke (D-NY) and Sen. Amy Klobuchar (D-MN) sent a letter to Meta and X asking how they plan to address AI-generated political ads on their platforms as election season approaches. Sens. Marsha Blackburn (R-TN) and Richard Blumenthal (D-CT) also sent a letter to TikTok inquiring about the company’s hiring practices and its relationship to parent company ByteDance.

In corporate news, recently unsealed court transcripts of the federal government’s antitrust suit against Google showed that the company spent over $26 billion in 2021 paying partners to be the default search engine, and that Apple turned down multiple opportunities to buy Bing. Neeva founder and ex-Google executive Sridhar Ramaswamy took the stand during trial, testifying that Google’s dominance made it extremely difficult to compete in the market for search engines, while Google CEO Sundar Pichai claimed that the company faced considerable competition as a contender to be Apple’s default.

Kaiser Permanente, which experienced the largest healthcare strike in U.S. history in October, partnered with healthcare startup Nabla to use AI for administrative work. The Electronic Privacy Information Center (EPIC) filed a complaint with the FTC against the dating app Grindr detailing data privacy concerns. Finally, the Global Encryption Coalition hosted its annual Global Encryption Day 2023, which brought together experts, advocates, and civil society organizations to discuss encryption, online privacy, and digital security.

Internationally, misinformation and disinformation following the Hamas attack on Israel on October 7 and the Israeli government’s ensuing siege and bombardment of Gaza have led to false claims spreading online and fueling public discourse. Unsubstantiated viral claims have been repeated by key political figures, and Meta, X, and TikTok face the prospect of significant fines from the European Union over the spread of disinformation.

Read on to learn more about October developments in AI policy and children’s privacy topics.

The Biden Administration’s Executive Order on AI Arrives

- Summary: At the end of the month, the Biden Administration released its highly anticipated executive order and accompanying fact sheet on “safe, secure, and trustworthy artificial intelligence,” establishing new standards for AI regulation that seek to advance the public interest while bolstering U.S. innovation and global competitiveness in AI development. The executive order builds on the voluntary safety and security commitments made by a number of companies at the forefront of AI innovation and directs dozens of federal agencies to develop guidelines and standards for the responsible development, deployment, and use of AI across sectors, industries, and issues. Key directives include a requirement for the National Institute of Standards and Technology to develop standards to help ensure AI tools are safe before becoming available to the public. The order also mandates that the Department of Commerce issue guidance on how to properly disclose and watermark AI-generated content. The executive order also includes provisions addressing data privacy, consumer protections, and workers’ rights. In early November, the Office of Management and Budget is expected to release detailed guidance to federal agencies on their own testing, purchasing, and deployment of AI tools. Analysis of these actions and more will be included in the November roundup.

- Stakeholder Response:

- In response to the AI executive order, civil society organizations have been largely positive in their reactions but warned that more work remains to be done. Maya Wiley, President and CEO of The Leadership Conference on Civil and Human Rights, acknowledged that the White House’s executive order is “a real step forward,” but also said that Congress still needs to “consider legislation that will regulate AI and ensure that innovation makes us more fair, just, and prosperous, rather than surveilled, silenced, and stereotyped.” Damon Hewitt, President and Executive Director of the Lawyers’ Committee for Civil Rights Under Law, welcomed the executive order as “a critical step to help guard against algorithmic bias and discrimination” but stated that more needs to be done to mitigate the harmful use of AI by law enforcement agencies.

- Additional comments from civil society organizations can be found at the links provided: Accountable Tech, AFL-CIO, American Federation of Teachers, Center for Democracy and Technology, Common Sense Media, Communications Workers of America, Consumer Reports, Fight for the Future, New America’s Open Technology Institute, UnidosUS, Upturn.

- Elected officials also weighed in on the executive order, with sixteen members of Congress sending a letter to President Biden before the EO announcement urging him to create an ethical framework for AI by incorporating the AI Bill of Rights into the document. Following the announcement of the executive order, Sen. Mark Warner (D-VA) expressed support for the provisions while pushing for additional legislation. Rep. Zoe Lofgren (D-CA), ranking member of the House Science, Space, and Technology Committee, expressed support for the AI executive order but also said that Congress “must consider further regulations to protect Americans against demonstrable harms from AI systems.” Sen. Ted Cruz (R-TX) voiced concern over the executive order, stating that it “marks a retreat in U.S. leadership” with regard to AI innovation and international competition.

- Additional comments from Members of Congress, industry leaders, and civil society leaders can be found in this White House collection of responses to the AI executive order.

- What We’re Reading

- The Washington Post wrote about the wide-ranging impact the executive order will have on the federal government. Politico reported on the ambitious enforcement requirements coming out of the executive order. The Federation of American Scientists explained the impacts of the executive order on visa policies and immigration. In Tech Policy Press, Renée DiResta and Dave Willner wrote about how content integrity issues are addressed in the order, and Justin Hendrix summed up the sweeping new portfolio it gives to the Department of Homeland Security. Finally, Mashable compiled a list of the top 10 provisions to look at in the executive order.

Major Lawsuit Against Meta to Protect Children from Social Media Harms

- Summary: In October, Meta was confronted with lawsuits from multiple governing institutions across the country. A bipartisan federal lawsuit was filed by 33 state attorneys general over claims that Meta’s Facebook and Instagram platforms promote harmful features that knowingly “addict children and teens and pose a significant threat to children’s mental and physical health.”

- Meanwhile, nine other jurisdictions (Florida, Massachusetts, Mississippi, New Hampshire, Oklahoma, Tennessee, Utah, Vermont, and Washington, D.C.) have also filed similar lawsuits against Meta; these lawsuits will be reviewed across state courts as separate cases. These lawsuits follow earlier action from nearly 200 school districts across the U.S., which have filed federal suits against Facebook, TikTok, Snapchat, and YouTube for negatively impacting students’ mental health and behavior. The outcome of each of these lawsuits may impact how social media platforms operate and regulate content for minors.

- Stakeholder Response: California Attorney General Rob Bonta, lead AG on the federal lawsuit, commented: “We refuse to allow Meta to trample on our children’s mental and physical health, all to promote its products and increase its profits.” He later went on to say that “the door is wide open” to any settlement negotiations. However, Meta released a statement expressing the company’s disappointment in a lack of collaboration between states and the social media company to develop clear standards for minors using the social media platforms. Facebook whistleblower Frances Haugen was enthusiastic about the legal action, stating that the lawsuit was “historic.”

- What We’re Reading: Tech Policy Press covered components of The State of Arizona, et al. v. Meta case that was filed in California; the article also includes civil society responses to the suit. The Atlantic’s Kaitlyn Tiffany argued these multistate lawsuits aren’t enough. Tim Wu, a professor at Columbia Law School and former special assistant to the president for competition and technology policy, shared his thoughts on the consequences of congressional inaction. Politico outlined how the lawsuits filed against Meta are modeled on earlier GOP-state lawsuits against TikTok and are intended to evade defenses predicated on Section 230.

Other AI Policy News

- Congress:

- On October 31, the Senate Health, Education, Labor, and Pensions Employment and Workplace Safety Subcommittee held a hearing titled “AI and the Future of Work: Moving Forward Together” on AI’s future impact on the workforce.

- On October 24, Senate Majority Leader Schumer hosted the second AI Insight Forum. This forum was largely focused on innovation, and included an array of investor, academic and civic sector voices. To learn more about the day’s events, follow along with Tech Policy Press’s US Senate AI ‘Insight Forum’ Tracker.

- The Innovation, Data, and Commerce Subcommittee of the House Energy and Commerce Committee held a hearing titled “Safeguarding Data and Innovation: Building the Foundation for the Use of Artificial Intelligence” on privacy and AI.

- Federal Agencies:

- At an FCC roundtable, artists, creators, and industry representatives called on the federal government to regulate AI to shield their products from uncompensated and unconsented replication. The comments were timely, given that they followed a lawsuit from record labels against Anthropic, which alleges that the company’s AI service shared copyrighted lyrics without label company consent or licensing.

- The U.S. Copyright Office solicited information and comments due at the end of October to inform its report on the issues generative AI may pose for copyright protections.

- Corporate Action:

- On the corporate side, Anthropic, Google, Microsoft, and OpenAI announced Chris Meserole as the new executive director for the Frontier Model Forum. This month, the companies also introduced a $10 million AI Safety Fund that will “promote research in the field of AI safety.”

- International Governance:

- U.N. Secretary-General António Guterres announced a new AI Advisory Body that will operate through the U.N.’s Office of the Secretary-General. In his speech, Guterres said the 39-member Advisory Body will work independently under U.N. Charter values and will consult with the Secretary-General’s Scientific Advisory Board to develop international governance recommendations for AI ahead of the U.N.’s Summit of the Future in September 2024.

- In late October, the G7 (US, Japan, Germany, United Kingdom, France, Italy, Canada) announced an AI code of conduct for companies developing related technologies. The 11-point framework includes principles to evaluate and mitigate AI risks, encourages public reporting of AI systems’ capabilities, and promotes collaboration and information exchange.

- At a World Trade Organization meeting, U.S. Trade Representative (USTR) Katherine Tai announced U.S. plans to withdraw earlier Trump administration proposals for e-commerce trade agreements. USTR said it made the decision in the hope that Congress could more thoroughly regulate major technology firms as a result.

- Civil Society:

- The Brookings Institution released a report on AI’s impact on the housing market and mortgage lending practices. The report shares concerns around bias, privacy, and transparency, and also provides oversight recommendations for federal agencies.

- Stanford’s Center for Research on Foundation Models released a Foundation Model Transparency Index, which evaluates foundation model developers’ transparency standards. The research found that no major developers have adequate transparency standards. However, open foundation models (e.g., Llama 2 and Stability AI) did receive higher transparency scores than some of their big tech counterparts. Collectively, the research concluded that the field is behind on delivering adequate transparency to consumers.

- Public interest organizations including AccessNow, Common Sense Media, Data & Society, the Electronic Frontier Foundation, NAACP, and Upturn co-signed a letter to Congress expressing concern about the risks of AI. The letter also encouraged Congress to continue including civil society in future Congressional activities, ranging from public hearings to legislation.

- The Brennan Center for Justice posted an article that outlines AI’s potential impact on U.S. elections and democracy and presents recommendations for how to mitigate related harms.

- Data & Society published a policy brief on AI red-teaming, a practice in which security engineers critically analyze and test AI systems to identify potential weaknesses, bias, and other failures. While AI red-teaming is a specific approach to tackling AI risk, the authors argue that it should not be the only solution to ensuring AI accountability.

New Legislation and Policy Updates

- Nurture Originals, Foster Art, and Keep Entertainment Safe (NO FAKES) Act (sponsored by Sens. Chris Coons (D-DE), Marsha Blackburn (R-TN), Amy Klobuchar (D-MN), and Thom Tillis (R-NC)): The NO FAKES Act would protect the voice and visual likeness of all individuals from unauthorized recreations using generative artificial intelligence. The legislation would hold individuals and companies liable for producing unauthorized digital replicas of performers and hold platforms liable for knowingly hosting such replicas without their consent. However, it would exclude certain replications protected under the First Amendment. The draft version of the bill can be found here.

- Artificial Intelligence Advancement Act of 2023 (S. 3050, sponsored by Sens. Mike Rounds (R-SD), Chuck Schumer (D-NY), Todd Young (R-IN), and Martin Heinrich (D-NM)): The Artificial Intelligence Advancement Act would require reports on AI regulation in the financial services industry as well as on data sharing and coordination best practices. It would also establish AI bug bounty programs and mandate a vulnerability analysis for AI-enabled military applications.

- Preventing Deep Fake Scams Act (H.R.5808, sponsored by Reps. Brittany Pettersen (D-CO) and Mike Flood (R-NE)): The Preventing Deep Fake Scams Act would establish a Task Force on Artificial Intelligence in the Financial Services Sector, which would submit a report to Congress within one year of enactment about protection measures against fraud attempts using AI, definitions of ways in which AI is used, risks posed by AI, best practices for consumer protection, and legislative and regulatory recommendations for AI.

- Banning Surveillance Advertising Act (H.R.5534 / S.2833, sponsored by Reps. Anna Eshoo (D-CA) and Jan Schakowsky (D-IL) and Sens. Ron Wyden (D-OR) and Cory Booker (D-NJ)): The Banning Surveillance Advertising Act, first introduced in the 117th Congress, would prohibit targeted advertising by advertisers and advertising facilitators. However, it would exclude advertisements distributed based on content an individual is viewing or searching for. The FTC would be charged with implementing and enforcing the law, but the bill also authorizes state-level enforcement, as well as a private right of action.

- Eyes on the Board Act of 2023 (S. 3074, sponsored by Sens. Ted Cruz (R-TX), Ted Budd (R-NC), and Shelley Moore Capito (R-WV)): The Eyes on the Board Act would require schools receiving federal E-Rate or Emergency Connectivity Fund support for broadband to prohibit children from accessing social media on school networks and devices. The bill would also promote parent-imposed limits on screen time in schools and require implicated districts to enforce screen time policies. The Federal Communications Commission would be charged with creating a database of school internet safety policies.

- AI Labeling Act of 2023 (S. 2961, sponsored by Sens. Brian Schatz (D-HI) and John Kennedy (R-LA)): The AI Labeling Act would require producers of AI content to provide a “clear and conspicuous notice” of use of AI in their work. The bill would also require developers of generative AI tools and other promoters of AI content to work to prevent mass publication and distribution of content without proper disclosures.

- Facial Recognition Act (H.R. 6092, sponsored by Reps. Ted Lieu (D-CA), Yvette Clarke (D-NY), Jimmy Gomez (D-CA), Glenn Ivey (D-MD), Marc Veasey (D-TX), and Sheila Jackson Lee (D-TX)): The Facial Recognition Act would place strong limitations on law enforcement’s use of facial recognition technology. The bill would require that law enforcement agencies obtain a warrant in order to use facial recognition and restrict any potential evidence gleaned from facial recognition technology from being the sole basis for a search or arrest. To enhance transparency, the bill would establish a private right of action for harms caused by facial recognition technology and require law enforcement to notify individuals when facial recognition technology is being used to conduct a search. Finally, the bill would require annual audits for law enforcement agencies' use of facial recognition systems.

- Ensuring Safe and Ethical AI Development Through SAFE AI Research Grants (H.R. 6088, sponsored by Reps. Kevin Kiley (R-CA) and Sylvia Garcia (D-TX)): The Ensuring Safe and Ethical AI Development Through SAFE AI Research Grants bill would require the National Academy of Sciences to create a grant program that encourages responsible AI research and model development.

Public Opinion on AI Topics

The Public Religion Research Institute published results from a poll of 2,252 U.S. adults conducted Aug. 25-30, 2023, attempting to discern how Americans felt about the upcoming election, candidates, and national issues. They found that:

- Only 19 percent of Americans viewed AI as a critical issue right now, the second-lowest share of 20 issues tested.

The Vanderbilt Policy Accelerator for Political Economy and Regulation conducted a poll of 1,006 U.S. adults from Sept. 8-11, 2023, to study attitudes towards AI companies, regulations, and competition in the industry. They found that:

- 62 percent of Americans believed a federal agency should regulate the use of AI.

- 75 percent of Americans agreed that “big tech companies should not have so much power and should be prevented from controlling all aspects of AI.”

- 76 percent of Americans said that big tech companies should be regulated similarly to public utilities.

- 68 percent of Americans supported breaking up the big AI companies.

- 76 percent of Americans supported using public funds to establish tech resources for researchers to focus on AI.

- 73 percent of Americans supported a public alternative to big tech companies in the development of AI technology.

- 81 percent of Americans believed that the government should develop a public cloud computing system for AI researchers.

- 77 percent of Americans supported the creation of a team of government AI experts for improving public services and advising regulators.

- 62 percent of Americans wanted the government to have its own AI experts to prevent reliance on private consultants or big tech companies.

Citizen Data conducted two polls (July 19-21, and Sept. 13-20, 2023) of about 1,000 registered voters nationwide each on issues related to democracy. They reported that:

- 75 percent of Americans wanted Congress to enact legislation that restricts AI.

- 67 percent of Americans believed that the proliferation of AI tools will lead to greater misinformation and impact democracy.

Ipsos conducted a poll of 4,500 adult workers ages 21-65 across Australia, the United Kingdom, and the United States between July 18 and Aug. 1, 2023. They found that:

- 59 percent thought AI could save them time by identifying relevant information across communication apps.

- 46 percent reported using AI at work and thought it would be most helpful in note-taking and transcription, task management, and, to a lesser extent, meeting recaps.

In October, YouGov published a poll conducted in June 2023 with adults in 17 countries on how artificial intelligence will impact personal financial management, with sample sizes ranging from 437 to 2,045 in each country. They found that:

- 25 percent of consumers believed that AI will improve managing their personal finances.

- 43 percent said that AI will improve diagnosing medical diseases/conditions.

- 42 percent said that AI will improve building travel itineraries.

- 27 percent believed AI will worsen customer service and driving a car or other vehicles.

Anthropic and the Collective Intelligence Project polled 1,127 people on their preferences for an AI system constitution. They found that:

- 87 percent of respondents believed AI should not say racist or sexist things.

- 58 percent believed AI should “actively address and rectify historical injustices and systemic biases in its decision-making algorithms.”

- 71 percent believed “AI should never endorse conspiracy theories or views commonly considered to be conspiracy theories.”

- 59 percent believed that “AI should prioritize the interests of the collective or common good over individual preferences or rights.”

Ipsos conducted a poll of 1,119 U.S. adult consumers between Oct. 10-11, 2023 on a variety of topics. They found that:

- 55 percent of American adults wanted to learn how to use AI.

- 32 percent of American adults claimed they possess a baseline knowledge about generative AI.

- 52 percent of American adults claimed they are teaching themselves how to use AI tools, either through social media or tutorials online.

Public Opinion on Privacy Topics

The Pew Research Center published a survey of 5,101 U.S. adults on their views on privacy, personal data, and their online activity conducted May 15-21, 2023. They found that:

- 71 percent of Americans said they were worried about government use of personal data. 77 percent of Republicans were worried about government use of personal data, compared to 65 percent of Democrats.

- 67 percent of Americans said they do not understand much about what companies are doing with their data.

- 77 percent of Americans had little or no trust in leaders of social media companies to publicly admit mistakes and take responsibility for data misuse.

- 71 percent had little to no trust that these tech leaders will be held accountable by the government for data missteps.

- 89 percent were very or somewhat concerned about social media platforms having personal information about children.

- 72 percent of Americans said there should be more regulation than there is now.

- 34 percent of Americans have experienced some form of fraud or hacking attempt in the past year.

Deloitte recently published results from its Q3 2023 poll of 2,018 U.S. consumers on their privacy and security concerns, reporting that:

- About 60 percent of consumers worried that their personal devices are susceptible to security breaches.

- About 60 percent of consumers were concerned that they could be tracked through their devices.

- 79 percent of respondents reported they had taken at least one action to protect their data.

- 41 percent of respondents thought it was easier to protect their data online now compared to last year.

- 34 percent of respondents felt that companies are transparent about their use of collected data.

Morning Consult and EdChoice surveyed 2,258 Americans ages 18 and over and 1,315 parents of children currently in K-12 education from September 12-14, 2023. They found that:

- 58 percent of adults expressed strong concern about the impact of social media use on children.

- 50 percent of parents of children currently in K-12 education said they are extremely or very concerned about the impact of social media on children’s mental health.

- 29 percent of K-12 parents said their children use social media extremely or very often.

Gallup polled 6,643 parents and 1,591 adolescents from June 26-July 27, 2023 in its Familial and Adolescent Health Survey. The survey asked about wellbeing, mental health, parent-child relationships, and more. Some key findings include:

- 51 percent of U.S. teenagers reported spending at least four hours per day on social media apps like YouTube, TikTok, Instagram, Facebook, and X.

- 47 percent of respondents restricted screen time for their children.

- - -

We welcome feedback on how this roundup could be most helpful in your work – please contact Alex Hart and Kennedy Patlan with your thoughts.
