Obama’s Speech on Social Media Signals Need for Two Critical Reforms

Brian Boland / Apr 26, 2022

Brian Boland is a co-founder of The Delta Fund and a former Vice President at Facebook.

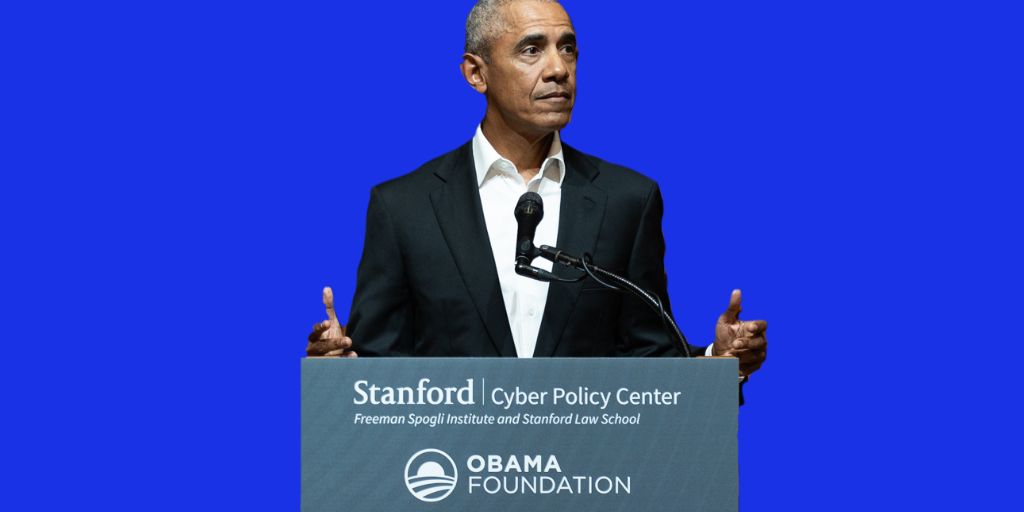

Last Thursday, former President Barack Obama delivered an address at Stanford University that identified problems and potential remedies to disinformation and other issues related to social media.

I listened intently. After working at Facebook for 11 years, I came to the conclusion that the company recklessly underinvests in safety measures and continually underestimates the impact of dangerous and misleading content on the platform. I agree with President Obama that we can no longer wait for tech platforms to do the right thing on their own. We need to pass meaningful legislation to better guide the tech industry while continuing to foster innovation. But there are two critical areas specific to disinformation where I want to emphasize the importance of acting now.

Required Transparency

The former President advocated for greater transparency. While some have advocated for transparency around the algorithms themselves, that focus is misplaced. It isn’t about the technique here as much as it is about the results. The algorithms that promote content on Facebook have become so complex over time that, even within Facebook, nobody fully understands them or can predict what they will produce. A/B testing systems were developed to study the results of changes to the algorithms precisely because they could not be predicted. If the experts at Facebook cannot meaningfully understand what the algorithms will do, how could we expect anyone else outside of the company to understand them?

As with many complex systems, one of the best ways to understand these algorithms is to observe them in action. The National Highway Traffic Safety Administration (NHTSA) protects Americans through crash testing and the data it analyzes on real-world issues as they arise. With this data it can guide the industry on necessary changes to protect people. We can study the impacts of medicine through observation, or workplace safety when we gather the data. For social media we are flying blind.

One of the turning points for me was the summer of 2020. As political and race-related divisions in this country grew, I saw those same divisions reflected in the public data from CrowdTangle, a tool Facebook had purchased to help companies understand how people engaged with their content. CrowdTangle was also utilized by researchers and journalists to find problematic content on Facebook and understand how people engaged with it.

As the public scrutiny intensified, Facebook attempted to delegitimize the CrowdTangle-generated data. It rejected strong internal proposals to take industry-leading actions to increase transparency. And rather than address the serious issues raised by its own research, Facebook leadership chose growing the company over keeping more people safe. While the company has made investments in safety, those investments are routinely abandoned if they will impact company growth.

This is why laws defining and enforcing transparency are critical now. Last year, Nate Persily of Stanford proposed the Platform Transparency and Accountability Act, which would create the legal and regulatory framework to require more transparency and hold companies accountable for what happens on their platforms.

A foundation of transparency enables researchers to study what happens on tech platforms, journalists to uncover problematic content, and human rights organizations to better protect our communities. If the platforms aren’t going to meaningfully invest in this work themselves - and we have every indication that they are not - we need to put the data in the hands of those who will.

Section 230 Reform

There are two modifications we should make to Section 230 of the Communications Decency Act now: creating potential liability for actions algorithms take to promote content and adding a clear duty of care.

We can protect innovation while protecting people through a couple of targeted changes. Studying the impact of algorithms is a start, but we need to create real accountability and incentives to produce a safer platform.

Consider the position that many express - including the former President - that the best counter to bad speech is more good speech. This view stems from a 1927 Supreme Court case on the First Amendment and is referred to as the Counterspeech Doctrine, which holds that bad speech should be addressed by “more speech, not enforced silence.” The issue is less about which content can and cannot stay on the platform and much more about what content the algorithms amplify. In our modern feed environment, all speech is not equal. In ranked feeds the algorithms will select some speech over other speech and will amplify certain content over other content. They may do so even to the point where the counterspeech is almost never seen.

You can think about it like a town square full of many diverse people sharing their ideas. Anyone can say almost anything. At one end of the square, however, is a stage with a microphone and a huge sound system. There is a moderator who selects people from the crowd to get up on stage and speak into the microphone. The moderator seems to choose the people who get the biggest reaction, and so those voices get louder. Differing views aren’t selected and soon the crowd believes that the most common views are the most divisive. Even worse, disinformation can be projected from the stage with little opportunity for the voices in the crowd to counter it if they aren’t selected to be on stage.

This is why we need to modify Section 230 to introduce accountability for the algorithmic amplification of content. Roddy Lindsay, a former Facebook engineer who designed algorithms, put forward an argument in support of this approach. At the time I had hoped that this proposal would receive more attention - it is a strong path and we should pursue it now.

A second Section 230 reform revolves around the duty of care that platforms should exercise to protect the people who use them. On one hand, you can protect the platforms from liability for the rare bad things that people will say on a user generated platform. When there isn’t a pattern, it is hard to hold any business accountable. If someone buys drugs in a hotel bar you can’t really hold the hotel liable. But if the hotel becomes broadly known as the place to go for your drugs, you can hold it liable.

When there are patterns of known harms like human trafficking, or strong indications of issues like child predators, the platforms are responsible for making every reasonable effort to protect people and prevent that content. Danielle Citron, among others, has advocated for Section 230 reforms to specifically require that tech platforms exercise a duty of care more broadly “with regard to known illegality that creates serious harm to others.” This is a second important 230 change that we should enact to better protect people.

Conclusion

There was a lot of initial energy when Frances Haugen released a trove of internal Facebook documents. We saw a flurry of articles and hearings before Congress. Since that initial burst of momentum, we have seen little action in the United States. The good news is that Europe has taken the lead and is bringing forward meaningful regulation in the Digital Services Act.

Considering the fact that these companies are headquartered in the United States, we should take a proactive stance and protect Americans - as well as people around the world - from the harms these platforms create. We could have a future where we enjoy the great benefits of these platforms, while meaningfully reducing the harms.

The time to act is now. We know that the industry isn’t investing on its own; Facebook has prioritized building the metaverse over investing in safety. Facebook touts $13 billion spent on safety during a period in which it spent $50 billion on stock buybacks. Without incentives to significantly invest in safety, I doubt it will do so.

The right regulations won’t stifle innovation. We have seen many examples throughout our history where we better protect people while helping industries to thrive - such as safer buildings, safer cars, food systems we can trust, medicines that save lives, chemicals that we use every day and many more examples.

It is time to create those incentives based on these very good proposals. As President Obama said, we have to act now “not just because we’re afraid of what will happen if we don’t, but because we’re hopeful about what can happen if we do.”
