November 2023 US Tech Policy Roundup

Kennedy Patlan, Rachel Lau / Dec 1, 2023

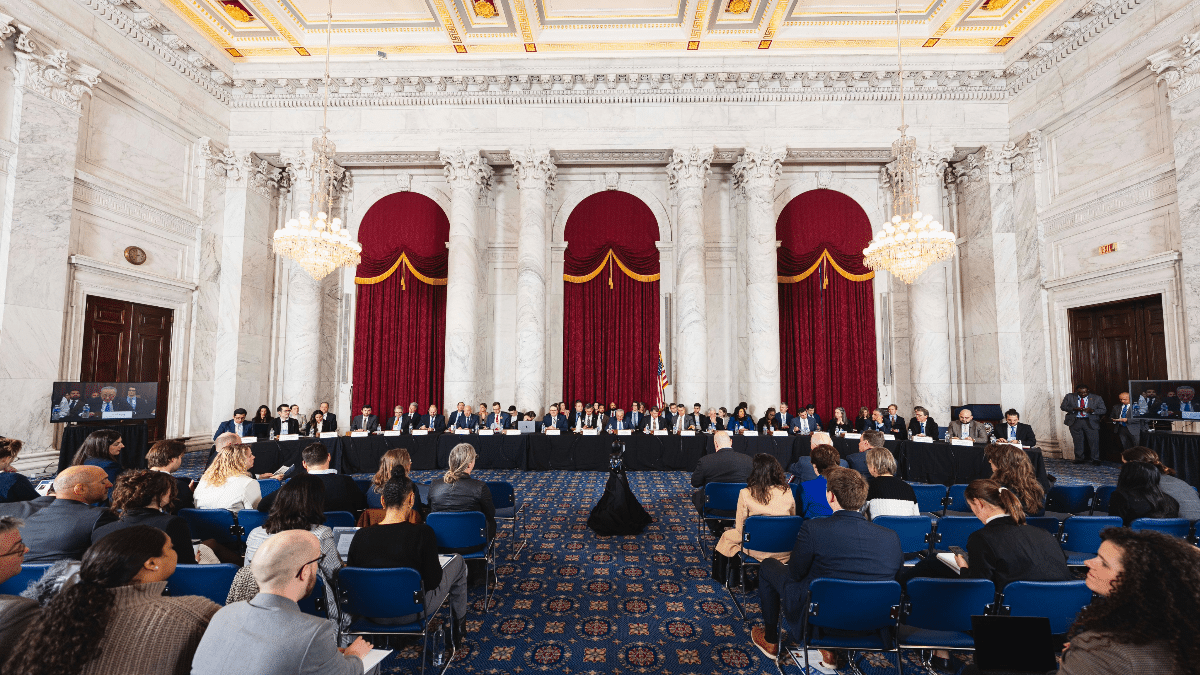

The 7th Senate “AI Insight Forum,” November 29, 2023. Source

November saw another slew of tech policy developments in the US. Artificial intelligence (AI), in particular, dominated the headlines:

- After President Biden signed a historic executive order on AI in late October, the Office of Management and Budget (OMB) started the month by releasing draft guidance to help federal agencies implement new governance structures and advance AI innovation.

- The US co-led the joint release of a non-binding international agreement among 18 countries that aims to keep AI safe throughout development and deployment cycles.

- President Biden met with Chinese President Xi Jinping to discuss numerous issues, including the dangers posed by the use of AI in military operations.

- Following the release of the AI executive order, federal agencies launched hiring initiatives to identify chief AI officers, with a projected 400+ officials needed to fill all posts.

- Senate Majority Leader Chuck Schumer (D-NY) held three additional AI Insight Forums, which covered a range of topics including election, privacy, transparency, and intellectual property issues. (Learn more about each forum using the Tech Policy Press AI ‘Insight Forum’ Tracker.)

- At the state level, the California Privacy Protection Agency released draft regulations for businesses using AI, including a host of consumer protections.

- OpenAI suddenly dismissed CEO Sam Altman, only to reinstate him less than a week later. His reinstatement was accompanied by board changes, prompting wider calls to diversify the new board’s representation. Before agreeing to return, Altman had accepted a leadership role on Microsoft’s advanced AI research team. On the same day Altman was removed, the company disclosed that it had hired its first federal lobbyists.

But AI wasn’t the only subject that saw movement. Another was internet access and connectivity. In agency news, the FCC finalized rules to prevent digital discrimination in access to broadband services. The Biden administration also released a National Spectrum Strategy that includes four pillars to strengthen US innovation, security, and collaboration.

There was also movement in legal battles over social media. A federal judge handed the FTC a win against Meta on children’s online privacy, ruling that the agency can adjust a previous settlement with the company to reduce the earnings it derives from minors on its platforms.

In other social media news, Elon Musk “endorsed an antisemitic post on X” (formerly Twitter), causing companies like Apple, IBM, and Disney to announce they will stop advertising on the platform. Marketing leaders from various companies have also urged X CEO Linda Yaccarino to resign amidst the backlash. The White House also criticized Musk’s actions. However, a New York Times report revealed the federal government’s increasing reliance on the mogul: it has contracted his company SpaceX for more than $1.2 billion to put Pentagon equipment in space.

Heading into December, Congressional lawmakers also face a tight deadline to reconcile House and Senate versions of the National Defense Authorization Act, which currently include provisions related to children’s online safety, AI, and semiconductor manufacturing. Separately, a group of 26 state governors wrote a letter urging Congress to provide continued funding for the Affordable Connectivity Program.

Read on to learn more about November developments in federal guidance on AI, children’s safety, and what’s next for Section 702.

Highly Anticipated AI Executive Order and OMB Guidance Catalyze Federal AI Action

- Summary: Following the Biden Administration’s release of the AI executive order at the end of October, the federal government kicked off a string of AI actions this month. Vice President Kamala Harris delivered a speech at the US embassy in London in which she discussed the executive order and emphasized that current AI-driven discrimination and threats to democracy should also be considered existential threats to society. Additionally, Harris detailed plans for an AI Safety Institute, which will help federal agencies develop standards and guidelines for AI usage. In November, OMB released draft guidance related to “Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence,” which is open for public comment through December 5. The draft policy included three pillars: “strengthening AI governance, advancing responsible AI innovation, and managing risks from the use of AI.” It would “establish AI governance structures in federal agencies, advance responsible AI innovation, increase transparency, protect federal workers, and manage risks from government uses of AI.” The White House pointed to five examples of where AI had already been successfully used within the federal government, including at the Department of Health and Human Services, Department of Energy, Department of Commerce, National Aeronautics and Space Administration, and the Department of Homeland Security.

- Stakeholder Response: The Biden Administration’s AI Executive Order and the draft OMB guidance prompted a wide range of responses from stakeholders. Alondra Nelson, former acting White House Office of Science and Technology Policy chief, supported the EO while emphasizing the need for an effective implementation process. Many other civil society organizations have supported the EO while highlighting the need for increased focus on the current, real-world harms perpetuated by AI models. Tech industry associations like NetChoice, the US Chamber of Commerce and the Software & Information Industry Association expressed concerns about the EO regarding its competition and innovation provisions, and its short timelines for public feedback. For more stakeholder responses to the EO, see last month’s Roundup. Relatedly, Fight for the Future and Algorithmic Justice League launched a new project, Stop AI Harms, for people to submit comments on the OMB guidance.

- Many entities published analyses of the EO and OMB guidance this month. The Congressional Research Service published an overview of the EO’s highlights, and the Brookings Institution published an analysis of both documents. Other analyses focused on specific subsections of the EO, demonstrating the breadth of industries it will impact, including Forbes’s article on the EO’s potential impact on immigration and HealthAffairs’ analysis of how the EO will change healthcare. On the OMB guidance, the International Association of Privacy Professionals published a review of the draft. Politico analyzed the document’s tech talent acquisition sections and the need for additional funding to attract AI expertise. An op-ed in The Hill focused on the potential impact of OMB guidance on cloud computing and government acquisitions. In Tech Policy Press and Just Security, Justin Hendrix focused on the broad mandate the order gives to the Department of Homeland Security (DHS).

- What We’re Reading: Politico did a profile on White House Deputy Chief of Staff Bruce Reed, a major leader in the creation of the EO, and reported on international conversations on AI at the Halifax International Security Forum. The Open Markets Institute and its Center for Journalism and Liberty program published a new report on AI and monopoly risks. Commenting on the report’s findings, Prithvi Iyer warned about the dangers of oligopoly in the AI space in Tech Policy Press. In a similar vein, Politico reported on Consumer Financial Protection Bureau Director Rohit Chopra’s concerns about AI’s effects on oligopolies. Public Citizen released a tracker on state legislation on deepfakes in elections. The Washington Post analyzed Google’s “AI Opportunity Agenda” of recommendations for the federal government. Scott Babwah Brennen and Matt Perault at the Center on Technology Policy at the University of North Carolina at Chapel Hill released a new report on the anticipated effect of AI on political ads. The Brennan Center for Justice published a report on AI, participatory democracy, and democratic governance.

Kids’ Online Safety Returns to the Congressional Spotlight

- Summary: Congressional attention returned to online kids’ safety in November as movement in the states to hold social media companies accountable has created a patchwork of laws. The Senate Judiciary Committee’s Subcommittee on Privacy, Technology, and the Law hosted a hearing on “Social Media and the Teen Mental Health Crisis,” in which Arturo Béjar, a former Meta employee, testified that Meta not only knew that its platforms hurt children, but that it refused to act to remedy the problem and tried to cover up the findings. The company also faces growing challenges on children’s safety at the state level, with California Attorney General Rob Bonta releasing an unredacted version of a federal complaint he and 33 other state attorneys general filed in October. At the congressional level, many kids’ online safety bills have been introduced this session, including the Kids Online Safety Act (KOSA, S.1409) and the Children and Teens’ Online Privacy Protection Act (COPPA 2.0, S.1418). Both KOSA and COPPA 2.0, along with the slate of other kids’ online safety bills, are controversial for many reasons, including concerns around privacy, age verification requirements, duty of care provisions, and possible implications for LGBTQ+ and reproductive health content.

- The Senate Judiciary Committee subpoenaed the CEOs of X, Snap, and Discord to testify at a hearing on child sexual abuse material (CSAM), now scheduled for January 31, 2024. The subpoenas come after the committee reported out two CSAM-related bills in May 2023: the Eliminating Abusive and Rampant Neglect of Interactive Technologies Act of 2023 (EARN IT Act, S.1207) and the Strengthening Transparency and Obligations to Protect Children Suffering from Abuse and Mistreatment Act of 2023 (STOP CSAM Act, S.1199). For more details on KOSA, COPPA 2.0, the EARN IT Act and the STOP CSAM Act, please see the May 2023 U.S. Tech Policy Roundup.

- Stakeholder Response: Stakeholders disagreed on who should be responsible for regulating social media usage among youth and how that regulation should occur. Senators Marsha Blackburn (R-TN) and Richard Blumenthal (D-CT), lead sponsors of KOSA, published a statement in support of Béjar’s testimony and called for federal regulation. In contrast, Meta published a blog post pushing for the burden of responsibility to be placed on app store providers to require parents to approve their teens’ app downloads instead. KOSA, specifically, inspired different responses from a range of stakeholders. The Keep Kids Safe and Connected campaign renewed its efforts to oppose the bill.

- The perspectives of likely 2024 voters on KOSA were also published in November. The Council for Responsible Social Media and Fairplay interviewed 1,200 likely 2024 election voters from October 28-30, 2023 on youth access to social media and KOSA. They found that:

- 87 percent of likely voters believed the President and Congress should take action to “address the harmful impact of social media on children and teens.” 55 percent would strongly support the passage of national legislation to address this.

- 86 percent of respondents supported Congress passing KOSA.

- 74 percent of respondents said they would be more likely to vote for their senator if they supported KOSA.

- 69 percent of respondents believed KOSA will protect LGBTQ+ children, while 31 percent said the legislation will “cut LGBTQ+ kids off from important communities and resources.”

- What We’re Reading: John Perrino and Dr. Jennifer King, writing at Tech Policy Press, did a deep dive into KOSA, the major debates behind the bill, and the future of kids’ online privacy regulation. Michal Luria and Aliya Bhatia, also in Tech Policy Press, called for policymakers to listen to young people when passing legislation. The Washington Post reported on France lobbying the United States to join the international campaign for children’s online safety. Slate explained the legal challenges related to regulating children’s online privacy. TechCrunch analyzed and gave context to Meta’s proposed kids’ safety framework.

Section 702 Action Stirs as Deadline Approaches

- Summary: Section 702 is a provision in the Foreign Intelligence Surveillance Act (FISA) Amendments Act of 2008, which allows the NSA to “collect, analyze, and appropriately share foreign intelligence information about national security threats” connected to non-Americans located abroad. With Section 702 set to expire at the end of the year, Congress will soon decide whether it will be reauthorized. Historically, concerns have been raised about the ways that Section 702 has wrongly targeted Americans. While many lawmakers agree that Section 702 should be renewed and revised, they have begun to propose different approaches to the program’s reform. Next month, the White House and Congress will have to determine which proposals make it into a potential Section 702 renewal. In the meantime, House leadership is expected to include a short-term extension that would run through early 2024. However, over 50 lawmakers sent a letter to House and Senate leadership with a request to exclude any short-term FISA extensions from the NDAA or other “‘must-pass’ legislation,” deeming it unnecessary and not responsive to broader concerns over Section 702’s uses. Read below for more in-depth coverage of ongoing efforts to adjust Section 702.

- Stakeholder Response: In response to concerns over the legislation’s potential privacy violations and lack of transparency and oversight, Congressional lawmakers introduced the bipartisan Government Surveillance Reform Act (H.R.6262, originally sponsored by Sens. Ron Wyden (D-OR), Mike Lee (R-UT); Reps. Warren Davidson (R-OH), Zoe Lofgren (D-CA)). The bill would reauthorize Section 702 of the Foreign Intelligence Surveillance Act for four years. However, the bill also includes new protections against abuses and additional accountability mechanisms for such abuses. Specifically, the bill would require warrants for government purchases of private data from data brokers, as well as location, internet search, AI assistant, and vehicle data. Other reforms would protect Americans from unwarranted spying while abroad with non-U.S. citizens and limit government access to Americans’ information in large datasets. Public interest groups including the Electronic Frontier Foundation, the Center for Democracy and Technology, and the Brennan Center for Justice have expressed support for the bill.

- However, Rep. Mike Turner (R-OH), the Republican chairman of the House Intelligence Committee, announced plans to introduce a separate package of reforms that have emerged from a Republican-majority Congressional working group within the committee. These proposals follow the release of the committee’s November report, FISA Reauthorization: How America’s Most Critical National Security Tool Must Be Reformed To Continue To Save American Lives And Liberty, which highlights the program’s importance, but also outlines the program’s historical misuse by the FBI. The report also contains 45 “improvements” that can be made to Section 702, including enhanced penalties for federal violations of FISA procedures and the implementation of a court-appointed counsel who can “scrutinize” governmental use of FISA to surveil US citizens. These provisions will likely inform the Republican-led package.

- Meanwhile, the Senate Intelligence Committee is now considering a FISA extension that contains modest reforms. Sponsored by Sens. Mark Warner (D-VA) and Marco Rubio (R-FL), the FISA Reform and Reauthorization Act of 2023 would not require FBI officials to secure a warrant before accessing NSA data and information – a key differentiator from other proposed bills, which aim to address concerns of FBI misuse. The Senate move has raised concerns from civil society groups.

- What We’re Reading: WIRED reported on Asian American and Pacific Islander community concerns over Section 702. The New York Times covered the disputes and discussions happening on the Hill related to the program. MIT Technology Review shared civil society critiques of Section 702. Just Security featured a series on Section 702, which includes the most recent news connected to the program and relevant stakeholder discussions.

New AI Legislation

- Artificial Intelligence (AI) Research, Innovation, and Accountability Act of 2023 (S.3312, sponsored by Sens. John Thune (R-SD), Amy Klobuchar (D-MN), Roger Wicker (R-MS), John Hickenlooper (D-CO), Shelley Moore Capito (R-WV), and Ben Ray Luján (D-NM)): The Artificial Intelligence (AI) Research, Innovation, and Accountability Act would require the National Institute of Standards and Technology (NIST) to research the development of AI standards and provide recommendations to agencies for regulations on “high-impact” AI systems. It would require internet platforms to disclose to users when AI is used to generate content on the website. Additionally, it would require companies using “critical-impact” AI to undergo rigorous risk assessments. Lastly, the bill would direct the Department of Commerce to create a working group to develop plans for consumer AI education programs.

- Travelers’ Privacy Protection Act of 2023 (S.3361, sponsored by Sens. Jeff Merkley (D-OR) and John Kennedy (R-LA)): This bill would prohibit the Transportation Security Administration (TSA) from using facial recognition technology in airports without congressional authorization. It would also instruct the Administrator of the TSA to delete any information obtained using facial recognition technologies within 90 days of bill passage.

- Five AIs Act (H.R.6425, sponsored by Reps. Mike Gallagher (R-WI) and Ro Khanna (D-CA)): This bill would direct the Secretary of Defense to establish a working group to develop and coordinate an artificial intelligence initiative among the Five Eyes countries (the US, Canada, Australia, the United Kingdom, and New Zealand).

- Federal Artificial Intelligence Risk Management Act (S.3205, sponsored by Sens. Jerry Moran (R-KS) and Mark Warner (D-VA)): This bill would require federal agencies to use the AI Risk Management Framework developed by the National Institute of Standards and Technology (NIST) when using AI. It would obligate OMB to offer guidance on incorporating the framework to federal agencies and create an AI workforce initiative that would give agencies access to experts in the field. In addition, the bill would require NIST to study test and evaluation capabilities for AI acquisitions.

- AI Labeling Act (H.R.6466, sponsored by Rep. Thomas Kean (R-NJ)): This bill would require the Director of the National Institute of Standards and Technology (NIST) to establish a working group with other agencies to identify AI-generated content and create an AI labeling framework. The bill would also require generative AI developers to provide a visible disclosure on products made using their tools. It would mandate that developers “take reasonable steps to prevent systemic publication” of undisclosed content. Finally, it would create a second working group composed of representatives from government, academia, and platforms to develop best practices for identifying and disclosing AI-generated content.

Other New Legislation

- Protecting Kids on Social Media Act (H.R.6149, sponsored by Reps. John James (R-MI) and Patrick Ryan (D-NY)): This bill would mandate that social media platforms check the age of their users and ban children under the age of 13 from accessing such platforms. The bill would prohibit platforms from using algorithm manipulation systems on users under 18 and require parental consent for their use of social media.

- Online Dating Safety Act of 2023 (H.R.6125, sponsored by Reps. David Valadao (R-CA) and Brittany Pettersen (D-CO)): This bill would require internet dating services to notify their users when they receive a message from a person who has been banned on the platform.

- Tech Safety for Victims of Domestic Violence, Dating Violence, Sexual Assault and Stalking Act (H.R.6173/S.3213 sponsored by Reps. Anna Eshoo (D-CA) and Debbie Lesko (R-AZ) in the House; Sen. Ron Wyden (D-OR) in the Senate): This bill would create a pilot program providing funding for organizations to address domestic violence issues, particularly as they pertain to technology-enabled abuse. The bill would allocate $2 million to the Department of Justice’s Office on Violence Against Women to fund efforts to support victims of abuse perpetrated using technology and establish an additional grant for nonprofits and universities to develop programs for these aims.

- Reform Immigration Through Biometrics Act (H.R. 6138, sponsored by Reps. Byron Donalds (R-FL), Randy Weber, Sr. (R-TX), Troy Nehls (R-TX), Paul Gosar (R-AZ), Clay Higgins (R-LA), Lance Gooden (R-TX), and Russell Fry (R-SC)): This bill would require the Department of Homeland Security to evaluate its efforts to implement a biometric entry and exit system for immigrants on temporary visas.

- US Data on US Soil Act (H.R.6410, sponsored by Reps. Anna Paulina Luna (R-FL), Mary Miller (R-IL), Ralph Norman (R-SC), and George Santos (R-NY)): This bill would prohibit certain social media platforms from storing data on U.S. nationals in data centers located in a country designated as a foreign adversary (China, Cuba, Iran, Russia, and others). It would also bar government officials from foreign adversary countries from accessing the data.

- Promoting Digital Privacy Technologies Act (S.3325, sponsored by Sens. Catherine Cortez Masto (D-NV) and Deb Fischer (R-NE)): This bill would direct the National Science Foundation (NSF) to fund research on technologies to bolster digital privacy, such as de-identification or anonymization, anti-tracking, encryption, and more. The NSF would be directed to work with the National Institute of Standards and Technology, the Office of Science and Technology Policy, and the Federal Trade Commission to hasten the research and development of privacy technologies.

- Digital Accountability and Transparency to Advance (DATA) Privacy Act (S.3337, sponsored by Sen. Catherine Cortez Masto (D-NV)): This bill would require entities that collect and use data on more than 50,000 people or devices to issue privacy notices to service users describing what and how data is collected, as well as how the entity uses it. The bill mandates that data collection and use practices be reasonable, equitable, and forthright. Entities must provide prospective users with an opt-out option for data collection and processing, as well as the option to access and transfer or delete their data.

- Internet Application Integrity and Disclosure (Internet Application I.D.) Act (S.3329, sponsored by Sen. Catherine Cortez Masto (D-NV)): This bill would require websites and apps to disclose if their products or services have been owned by a resident of China or store data in China, regardless of involvement with the Chinese Communist Party.

- Shielding Children’s Retinas from Egregious Exposure on the Net (SCREEN) Act (H.R.6429 / S.3314, sponsored by Reps. Mary Miller (R-IL) and Doug LaMalfa (R-CA) in the House and Sen. Mike Lee (R-UT) in the Senate): This bill would require all pornographic websites to implement age verification technology to bar children from accessing their content. Measures must be more robust than simple age attestation, such as entering a birthdate. Additionally, the bill creates data security and minimization standards for relevant companies.

- Eliminate Network Distribution of Child Exploitation (END) Act (H.R.6246, sponsored by Reps. Lucy McBath (D-GA), Barry Moore (R-AL), Glenn Ivey (D-MD), Jefferson Van Drew (R-NJ), Sylvia Garcia (D-TX), and Ann Wagner (R-MO)): This bill would extend the time technology companies are required to retain information on online depictions of child abuse and exploitation from 90 days to one year, after reporting the material to the National Center for Missing & Exploited Children.

- COOL Online Act (H.R.6299, sponsored by Reps. Carlos Gimenez (R-FL) and Andy Kim (D-NJ)): This bill would require online vendors to disclose products’ countries of origin if the product is required to be marked under 19 U.S.C. 1304.

- Telehealth Privacy Act of 2023 (H.R.6364, sponsored by Reps. Troy Balderson (R-OH), Neal Dunn (R-FL), David Schweikert (R-AZ), Bill Johnson (R-OH), and Mike Carey (R-OH)): This bill would amend the Social Security Act to prohibit the publication of physicians’ home addresses if they elect to deliver telehealth services from their private residences.

- Stopping Terrorists Online Presence and Holding Accountable Tech Entities (STOP HATE) Act (H.R.6463, sponsored by Reps. Josh Gottheimer (D-NJ) and Don Bacon (R-NE)): This bill would require social media companies to publish reports on violations of their terms of service and how they are addressing them. It would also require the Director of National Intelligence to issue a report on how terrorist organizations use social media networks.

Public Opinion on AI Topics

AP-NORC and the University of Chicago Harris School of Public Policy conducted a poll of 1,017 Americans from October 19-23, 2023 on their views of elections and AI. They found that:

- 58 percent of Americans believe AI will further the spread of misinformation during the 2024 election.

- 66 percent of Americans favor banning AI images in political advertisements, including 78 percent of Democrats and 66 percent of Republicans.

- 54 percent of Americans would support banning all AI-generated content in political advertisements.

- 14 percent of Americans are at least somewhat likely to get information about the 2024 election using AI.

- Most Americans think that technology companies (83 percent), social media companies (82 percent), the media (80 percent), and the federal government (80 percent) have some responsibility to “prevent the spread of misinformation by AI.”

The AI Policy Institute polled 1,188 respondents from October 26-27, 2023. They found that:

- 94 percent of respondents are at least somewhat concerned that AI can be used for scams.

- 73 percent of respondents support the government holding companies responsible for scams perpetrated using their AI products.

- 96 percent of respondents believe it is at least somewhat important for the government to regulate AI.

Axios, Generation Lab, and researchers at Syracuse University’s Institute for Democracy, Journalism and Citizenship and the Autonomous Systems Policy Institute surveyed 216 computer science professors from October 25-30, 2023. They found that:

- 68 percent of respondents believe AI is “moderately advanced” at producing audio and video deepfakes.

- 39 percent say “digital authenticity certification” is the best way to combat the spread of AI deepfakes, followed by public education (29 percent), enhanced detection technology (18 percent) and tighter regulation (13 percent).

- 62 percent said that “misinformation will be the biggest challenge to maintaining the authenticity and credibility of news in an era of AI-generated articles.”

- 33 percent believe that transparency about AI-generated news is the most effective way to guarantee trustworthy news reporting, followed by independent fact checking (32 percent), editorial oversight (19 percent), and improved media literacy (16 percent).

- 56 percent believe AI can help journalists “produce and disseminate local news,” while 25 percent believe it could hurt local reporting.

Morning Consult polled 2,203 U.S. adults from October 27-29, 2023 about AI. They found that:

- 27 percent of Americans consider regulating AI a top priority, while 33 percent call it “important, but a lower priority.”

- 44 percent of women and 23 percent of men say it is impossible to regulate AI.

- 53 percent of women and 26 percent of men would not let their kids use AI.

- 78 percent of respondents believe that political ads should be required to disclose use of AI, compared to 64 percent who believe that AI should be disclosed in professional spaces.

- 69 percent of respondents expressed worry about AI in relation to jobs, work, misinformation, and privacy.

In another publication of this poll’s results, Morning Consult reported that:

- 27 percent of U.S. adults believe AI will improve their lives, while 23 percent believe it will have no impact, 25 percent believe it will make their lives worse, and 25 percent say they don’t know.

- About 67 percent of respondents worried that other countries will leverage AI to create a competitive advantage in technology over the U.S.

- 34 percent of employed U.S. adults believe AI will make their jobs easier, while 23 percent believe it will make their jobs harder.

- 41 percent of U.S. adults say AI will “negatively impact trust in the outcome of U.S. elections.”

- 58 percent of U.S. adults believe AI-enabled misinformation will impact the outcome of the 2024 election.

From mid-September to mid-October 2023, Bloomberg Philanthropies and the Centre for Public Impact conducted two surveys to gather city administrators’ perspectives on how they can use generative AI to improve local government. One survey focused on mayors across the world, while the other focused on other municipality staff. The surveys found that:

- 69 percent of surveyed cities are exploring and/or testing how to utilize generative AI, but only 2 percent currently implement the technology.

- 22 percent of the surveyed cities have created an AI lead position.

- 13 percent of surveyed cities have created policies about the use of AI, and 11 percent have administered AI training to staff.

- 34 percent of surveyed cities see AI being most impactful in addressing traffic and transportation, followed by infrastructure (24 percent), public safety (21 percent), environment and climate (21 percent), and education (18 percent).

- 81 percent of surveyed cities claim that security and privacy are their guiding principles for their study and implementation of AI, while 79 percent identified accountability and transparency as their primary concern.

The Institute of Governmental Studies at the University of California, Berkeley polled 6,342 registered California voters from October 24-30, 2023. The study found that:

- 84 percent of California voters are concerned about the impact that disinformation, deepfakes, and AI will have on next year’s elections. 73 percent say that California is responsible for protecting voters from these threats.

- 87 percent agree that “tech companies and social media platforms should be required to … label deepfakes and AI-generated audio, video, and images…”

- 90 percent agree that tech and social media companies should explain how they utilize user data to curate content.

Pew published results from a survey of 1,453 U.S. teens ages 13-17 from September 26-October 23, 2023 on American teens’ use of ChatGPT in schools. They found that:

- 19 percent of American teens have used ChatGPT for school, with 11th and 12th graders most likely to use the technology (24 percent).

- 21 percent of boys ages 13-17 have used ChatGPT for their schoolwork, compared to 17 percent of girls.

- 72 percent of White teens report having “heard at least a little” about ChatGPT, compared to 63 percent of Hispanic teens and 56 percent of Black teens.

- 75 percent of teens in households earning $75,000 or more annually have heard of ChatGPT, compared to teens with household incomes between $30,000 and $74,999 (58 percent) and less than $30,000 (41 percent).

In November, Retool, a software development company focusing on internal tools, published results from its August 2023 survey of 1,578 professionals in technology, operations, data, and other technical roles. The company found that:

- 52 percent of respondents believe AI is “overrated.”

- 94 percent of respondents believe AI will at least somewhat impact their industry or work.

- 51 percent of respondents say their company’s investment in AI is “just right,” compared to 45 percent who report there is “not enough” investment.

- 39 percent of respondents believe cost savings is their companies’ main motivation to invest in AI.

- 80 percent of companies incorporating AI into their daily business use OpenAI (ChatGPT 4, 3.5, or 3).

- The top pain points mentioned by respondents around creating AI apps were model output accuracy (39 percent) and data security (33 percent).

The AI Policy Institute polled 1,268 Americans from November 20-21, 2023. They found that:

- 59 percent of respondents would support the government “requiring AI companies to monitor the use of AI for racist content.”

- 64 percent agree that the government should prosecute people who use AI to create racist images, as well as the AI company that made the tool.

- 58 percent support the U.S. proposing an international agreement on AI regulation during war.

- 59 percent would support the U.S. and China agreeing to ban the use of AI in drone warfare and decisions involving nuclear weaponry.

- 49 percent would support banning political advertisements that use AI-generated depictions of people’s voices or appearances.

- 51 percent would support the creation of a “global watchdog” to regulate AI usage.

Public Opinion on Other Tech Topics

Ipsos conducted a survey of 8,000 participants from 16 countries from August 22-September 25, 2023 about online disinformation and hate speech. They found that:

- 56 percent of internet users often learn about current events using social media.

- 68 percent of internet users believe disinformation is most prevalent on social media, as opposed to online messaging or media apps.

- 85 percent of internet users are concerned about the impact of disinformation on their fellow citizens.

- 87 percent of internet users are concerned about how disinformation will affect their countries’ elections.

- 67 percent of internet users report having encountered hate speech online.

- 88 percent believe it is the responsibility of governments and regulatory bodies to address disinformation and hate speech online.

- 90 percent believe it is the responsibility of social media platforms to address disinformation and hate speech online.

- 48 percent have reported disinformation related to elections at least once.

Ipsos conducted a poll of 1,120 U.S. adults from November 7-8, 2023. They found that:

- 76 percent of Americans “support developing standards, tools, and tests to help ensure that AI systems are safe, secure, and trustworthy.”

- 73 percent support “establishing standards and best practices for detecting AI-generated content and authenticating official content.”

- 73 percent support “passing bipartisan data privacy legislation to protect all Americans, with an emphasis on protecting children.”

- 60 percent of Democrats support increasing federal funding for AI research, compared to only 43 percent of Republicans.

Pew recently published results from a survey of 8,842 U.S. adults from September 25 to October 1, 2023 about how they get their news. They found that:

- 32 percent of U.S. adults under age 30 regularly get news on TikTok, a higher share than among U.S. adults ages 30-49 (15 percent), 50-64 (7 percent), and 65+ (3 percent).

- 43 percent of TikTok users report regularly getting news from the platform, a ten-point increase from those who said the same in 2022.

Google Cloud released results of a 2023 poll by Public Opinion Strategies of 2,504 working American adults, including 411 federal, state, or local government employees. They found that:

- 69 percent of surveyed working adults believe that the federal government will suffer a foreign-based cyberattack in the next few years.

- 81 percent of surveyed working adults believe tech companies “should be held accountable for repeated breaches of software in the federal government.”

- 47 percent of surveyed national government workers believe “Microsoft products and services are vulnerable to cyberattack.”

On behalf of the Progressive Policy Institute, YouGov surveyed 860 working-class registered voters without a four-year college degree from October 17-November 6, 2023. They found that:

- 49 percent of respondents believe that breaking up large tech companies would create more consumer choice.

- 68 percent either somewhat oppose or strongly oppose the FTC suing Amazon over its Prime two-day free delivery due to market power concerns.

- 80 percent would prefer that the government pass a comprehensive privacy and data security bill to protect consumer safety, rather than break up big tech companies.

– – –

We welcome feedback on how this roundup could be most helpful in your work – please contact Alex Hart and Kennedy Patlan with your thoughts.

Authors