Minors Are On the Frontlines of the Sexual Deepfake Epidemic — Here’s Why That’s a Problem

Kaylee Williams / Oct 10, 2024

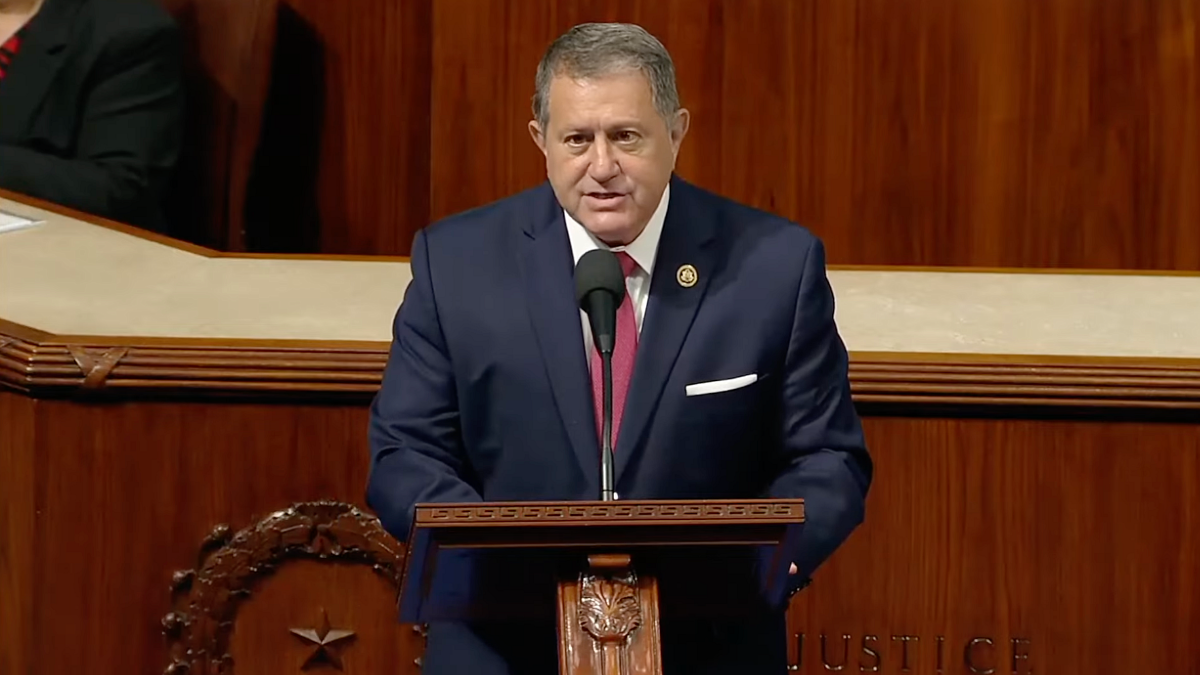

Washington DC, July 25, 2024: Rep. Joe Morelle (D-NY) makes remarks on the floor of the US House of Representatives to draw attention to the issue of sexually explicit digital forgeries and his proposed legislation, the Preventing Deepfakes of Intimate Images Act.

As digital safety experts in the US continue to push for the federal regulation of AI-generated nonconsensual intimate images (NCII), minors are all too frequently the most prominent survivors, and perpetrators, of this emerging form of technology-facilitated sexual abuse, sparking difficult questions about the aims and drawbacks of criminalization.

On Nov. 2, 2023, the Wall Street Journal reported that students at a New Jersey high school had used an AI-powered “nudify” app to generate sexually explicit images of more than 30 girls at the school, before sharing the manipulated images in various group chats.

In an email to parents obtained by CBS News, the school’s principal wrote, “It is critically important to talk with your children about their use of technology and what they are posting, saving and sharing on social media. New technologies have made it possible to falsify images and students need to know the impact and damage those actions can cause to others.”

The New Jersey teens’ story was picked up by the New York Times, CBS, ABC, and a variety of other outlets, effectively making it one of the nation’s most prominent cases of deepfake abuse involving private citizens (or non-celebrities) to date. Public attention to the case only increased after one of the affected students, Francesca Mani, spoke at a January 2024 press conference advocating for Congress to pass the Preventing Deepfakes of Intimate Images Act, a bill sponsored by Rep. Joe Morelle (D-NY).

“No kid, teen or woman should ever have to experience what I went through,” NBC quoted Mani as saying at the press conference. “I felt sad and helpless.”

In the months since, similar cases involving young people creating and sharing sexual deepfakes of their peers have been reported in Beverly Hills, California; Issaquah, Washington; and Aledo, Texas, among other places, raising the question of just how common AI-generated NCII has become in American schools.

A new survey of public high school students conducted by the Center for Democracy & Technology (CDT) attempted to answer exactly that. Published on September 26, the study found that 15 percent of students said they were aware of at least one “Deepfake that depicts an individual associated with their school in a sexually explicit or intimate manner” shared in the last school year. CDT estimates that this proportion of respondents represents 2.3 million out of the 15.3 million total public high school students in the US.

When asked to comment on this finding, Dr. Anna Gjika — an Assistant Professor of Sociology at the State University of New York and author of When Rape Goes Viral: Youth and Sexual Assault in the Digital Age — said via email that the figure is “very much in line with broader patterns of nonconsensual intimate image sharing among youth over the last few years, which research shows is seen as common and acceptable practice by teens and young adults.”

Dr. Gjika posits, in both her comments to Tech Policy Press and her previous research, that for many young men in particular, circulating intimate images (authentic, AI-generated, or otherwise) can serve as a means to “fit in” among friends. “Collecting and sharing sexually explicit images of girls is how young men display and communicate their heterosexuality to other young men, and how they increase their status and popularity among their peer groups,” she writes.

The CDT survey also found that social media platforms such as Snapchat, TikTok, and Instagram were the most commonly used medium for sharing AI-generated NCII, and that girls and LGBTQ+ students were more likely than their peers to be depicted in sexually explicit deepfakes.

Despite the novel technology enabling this phenomenon, Gjika argues that deepfake abuse should be understood within a broad spectrum of sexual violence targeting young people, which exists both online and off.

“The increase in deepfake NCII reflects the availability of new, free, and easily accessible tools to create this content, rather than new abusive behavior by teens,” Gjika explains. “Synthetic deepfakes are the latest iteration of an ongoing pattern of image-based abuse and sexual harm; it’s existing behavior extended onto a new platform, and I think it’s important that we recognize it as such if we are really serious about addressing it.”

At the time of publication, around 20 states have passed bills criminalizing AI-generated NCII in general, and at least 15 have adopted laws that specifically pertain to AI-generated child sexual abuse material (CSAM). California Governor Gavin Newsom signed one such bill as recently as September 30.

And while it is difficult to estimate how many people have been charged or convicted under these laws thus far, local news coverage from within these states suggests that minors are among this phenomenon’s earliest and most vulnerable victims.

For example, on Dec. 1, 2023, a 32-year-old man was arrested in Bossier Parish, Louisiana, on two counts of pornography involving juveniles and two counts of “unlawful deepfakes.” Local coverage of the arrest notes that the man was the first to be charged with violating Louisiana Senate Bill No. 175, which prohibits the creation, distribution, and possession of “unlawful deep fakes involving minors,” and went into effect exactly four months before his arrest.

A local news website quoted the Bossier Parish sheriff’s public information officer describing the new law: “It was enacted this year. A lot of people aren’t familiar with it, even law enforcement; we’re still learning. We’re still trying to apply it to cases when it comes up, if it’s applicable.”

However, other cases — including that of two middle school students arrested in Florida for generating intimate images of their classmates — demonstrate the challenges associated with imposing heavy penalties for violating these new statutes, particularly when the perpetrators are minors themselves.

As a Wired investigation into this case explained, the students — aged 13 and 14 — “were charged with third-degree felonies—the same level of crimes as grand theft auto or false imprisonment—under a state law passed in 2022 which makes it a felony to share ‘any altered sexual depiction’ of a person without their consent.”

According to Dr. Gjika, this punishment is harsher than the one that the Florida students likely would have received had they been found guilty of distributing authentic NCII.

“I think the Florida case should raise some serious questions about how we want to respond to image-based sexual abuse, and whether criminalizing youth is the best way to go,” she said via email.

“Without efforts toward appropriate response and educational prevention, current NCII policies and practices threaten to reinforce the school-to-prison pipeline,” the CDT researchers said in the report that accompanied their survey findings. “Although law enforcement can act as an important form of deterrence, it cannot be the largest or only piece of the puzzle.”

The researchers recommend that schools adopt a number of “proactive efforts” to prevent the proliferation of NCII of all types, including by updating Title IX policies to reflect technological advancements, training teachers to report and respond to allegations of image-based sexual abuse, and directly addressing the issue in the classroom as a part of broader sexual harassment and digital safety curricula.
