May 2025 US Tech Policy Roundup

Rachel Lau, J.J. Tolentino, Ben Lennett / Jun 2, 2025

Rachel Lau and J.J. Tolentino work with leading public interest foundations and nonprofits on technology policy issues at Freedman Consulting, LLC. Ben Lennett is the managing editor of Tech Policy Press.

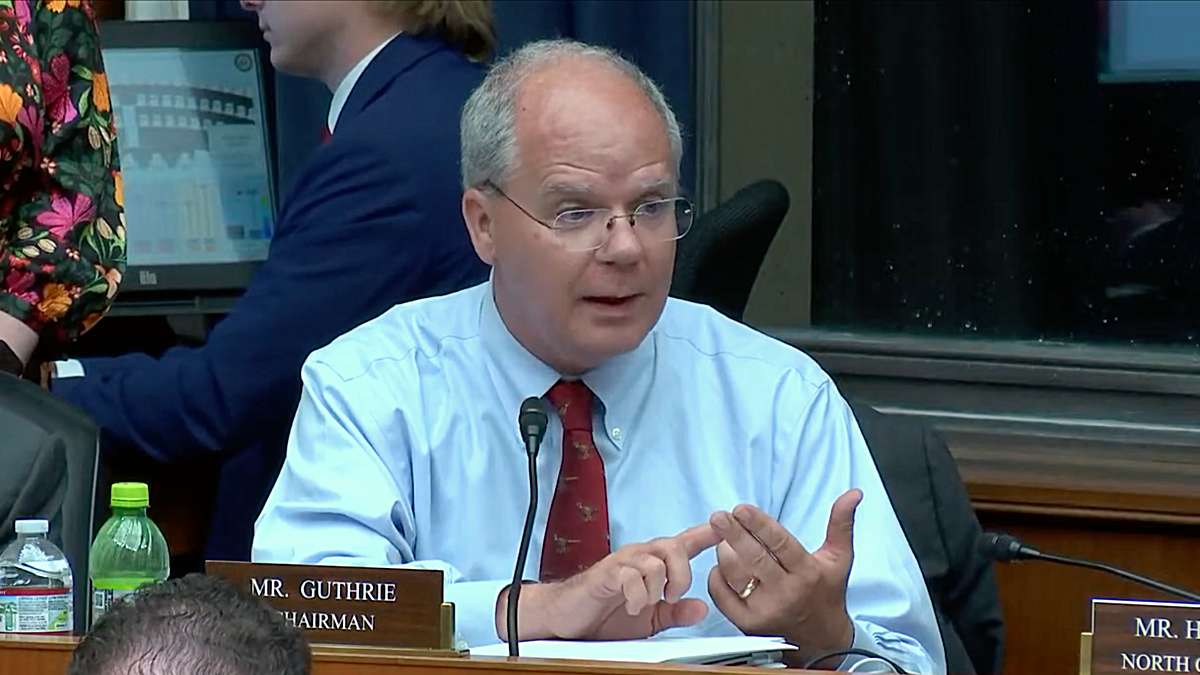

Washington, DC, May 14, 2025 - House Energy and Commerce Committee chairman Rep. Brett Guthrie (R-KY) at a markup hearing on a budget reconciliation bill.

AI policy continues to take center stage in federal tech policy discussion, most notably through a controversial provision tucked into the House budget bill, H.R. 1, known as the "Big Beautiful Bill," that passed the House of Representatives this month. The bill includes a sweeping 10-year moratorium on state and local laws regulating artificial intelligence. Passed by the House in a narrow 215–214 vote, the moratorium would block state and local efforts to oversee AI systems used in interstate commerce, raising alarms among civil rights groups and state officials who fear unchecked algorithmic harms.

At the same time, the Trump Administration’s aggressive AI agenda has drawn further scrutiny following the abrupt firing of US Copyright Office Director Shira Perlmutter, just one day after the Office released a report challenging the legality of using copyrighted works to train AI models under the fair use doctrine. Critics alleged the firing was politically motivated and tied to pressure from major AI firms. Together, the moratorium and the copyright controversy reflect a continued push by the administration to protect industry interests while limiting regulatory oversight.

Additionally, May saw significant tech policy actions across other branches of government. The Department of Commerce reversed major funding decisions under the CHIPS and Science Act, redirecting Tech Hub grants based on new, cost-cutting criteria. The Federal Communications Commission (FCC) announced efforts to streamline operations by closing over 2,000 inactive dockets, while the Consumer Financial Protection Bureau (CFPB) withdrew dozens of guidance documents to reduce regulatory burdens. On Capitol Hill, lawmakers also introduced a flurry of other AI-related bills, from whistleblower protections and national testing standards to support for public AI education campaigns.

Read on to learn more about May developments in US tech policy.

A 10-year Moratorium on Regulation of Artificial Intelligence

Summary

A provision buried deep within the US House budget bill, known as the "Big Beautiful Bill" (H.R. 1), would enact a 10-year moratorium on state and local laws regulating artificial intelligence. The House Energy and Commerce Committee advanced the specific provision, voting along party lines, and it was subsequently passed by the full House in the budget bill by a largely party-line vote of 215 to 214. The bill is now advancing to the Senate. The moratorium seeks to preempt state laws despite Congress having failed to pass meaningful tech or AI legislation for years.

The moratorium broadly states that "no State or political subdivision thereof may enforce... any law or regulation limiting, restricting, or otherwise regulating artificial intelligence models, artificial intelligence systems, or automated decision systems entered into interstate commerce." The scope of the moratorium hinges on the definitions of these terms, particularly "automated decision system," which is defined as any "computational process derived from machine learning, statistical modeling, data analytics, or artificial intelligence that issues a simplified output... to materially influence or replace human decision making."

Some experts expressed concern that this definition is so broad that it could encompass "any meaningful use of a computer." While the provision includes a "rule of construction" intended to exempt generally applicable laws, its problematic structure for listing conditions appears to make it difficult for most state civil laws to qualify for the exemption. A separate exemption was added to carve out state laws with criminal penalties. However, the ambiguity of the language means that the moratorium's full extent is unclear and likely to require years of litigation to resolve.

Supporters of the moratorium, including House Republicans, tech industry groups, and think tanks such as the R Street Institute, argued that it is necessary to prevent a burdensome "patchwork" of conflicting state laws that could stifle AI innovation and US global leadership, comparing it to the Internet Tax Freedom Act. Conversely, numerous opponents, including Democrats, civil society organizations like Demand Progress, Public Citizen, EPIC, CFA, CAIDP, CDT, and the ACLU, along with state lawmakers and attorneys general, argued that the moratorium is overbroad, anti-democratic, and irresponsible, and that it leaves consumers vulnerable to harms like algorithmic discrimination, deepfakes, and lack of accountability.

What We’re Reading

- J.B. Branch, Ilana Beller, “Buried in Congress’s Budget Bill is a Push to Halt AI Oversight,” Tech Policy Press.

- Kara Williams, Ben Winters, “Debunking Myths About AI Laws and the Proposed Moratorium on State AI Regulation,” Tech Policy Press.

- Anna Lenhart, “Do We Need a ‘NIST for the States’? And Other Questions to Ask Before Preempting Decades of State Law,” Tech Policy Press.

- Justin Hendrix, “Expert Perspectives on 10-Year Moratorium on Enforcement of US State AI Laws,” Tech Policy Press.

- Ellen P. Goodman, “House Moratorium on State AI Laws is Over-Broad, Unproductive, and Likely Unconstitutional,” Tech Policy Press.

- Gideon Lichfield, “Why Both Sides Are Right—and Wrong—About A Moratorium on State AI Laws,” Tech Policy Press.

- David Brody, “The Big Beautiful Bill Could Decimate Legal Accountability for Tech and Anything Tech Touches,” Tech Policy Press.

- Gary Marcus, “When it Comes to AI Policy, Congress Shouldn’t Cut States off at the Knees,” Substack.

The US Copyright Office Receives Major Shakeup Following Its Report on AI and Copyright Law

Summary

The US Copyright Office (USCO) released the third installment of its series on copyright and AI, focused on the use of copyrighted works to train generative AI models. The report explored whether AI developers must obtain copyright owners’ consent or provide compensation when using protected works, in addition to enumerating what legal or practical frameworks could support such licensing. The report also disputed the claim often made by AI companies that training processes should be covered by the “Fair Use” exemption of copyright law.

A day after the USCO released its report, USCO Director Shira Perlmutter was dismissed by the Trump administration without explanation – a break from precedent, as the USCO has historically operated under Congressional oversight. Some USCO employees suspected that Perlmutter’s termination could stem from the recent report and its conclusion that using copyrighted material to train AI models could “overstep laws governing fair use.” Pressure for the Trump administration to take action against Perlmutter may have originated from industry sources, as many AI companies, including OpenAI, have advocated for the right to use copyrighted data to train AI models to remain competitive with China. In response to her dismissal, Perlmutter filed a lawsuit against the administration, claiming that her termination was “unlawful and ineffective.” A federal judge rejected Perlmutter’s request to block the Trump administration from firing her.

Many Democrats have been critical of the Trump administration’s decision to fire Perlmutter. In a press release, Rep. Joe Morelle (D-NY) called Perlmutter’s termination a “brazen and unprecedented power grab with no legal basis,” arguing that the firing was connected to the USCO report’s “refus[al] to rubber-stamp Elon Musk’s effort to mine troves of copyrighted works to train AI models.” Sen. Adam Schiff (D-CA) and Senate Minority Leader Chuck Schumer (D-NY) released a joint statement condemning Trump’s firing of Perlmutter as unlawful, stating that the “President lacks the legal authority to terminate the position unilaterally.” Rep. Hank Johnson (D-GA) added in a statement that “it’s no mistake that just hours before she was fired, the Copyright Office issued a report assessing the nature of the fair use doctrine as applied to its use in training large language models.”

Various labor unions have also been vocal, condemning Perlmutter’s termination. The American Federation of Musicians released a statement saying that “[Perlmutter’s] unlawful firing will gravely harm the entire copyright community.” Writers Guild of America East stated that it agrees with the USCO’s report that AI companies that “train their systems through unauthorized access to copyrighted materials ‘goes beyond established fair use boundaries’ and causes harm to copyright owners,” and added that they demand Perlmutter’s “immediate reinstatement.”

What We’re Reading

- Tor Constantino, MBA, “U.S. Copyright Office Shocks Big Tech With AI Fair Use Rebuke,” Forbes.

- Tori Noble, Mitch Stoltz, and Corynne McSherry, “The U.S. Copyright Office’s Draft Report on AI Training Errs on Fair Use,” Electronic Frontier Foundation.

Tech TidBits & Bytes

Tech TidBits & Bytes aims to provide short updates on tech policy happenings across the executive branch and agencies, Congress, civil society, industry, and courts.

In the executive branch and agencies:

- The Trump Administration paused the scheduling of new interviews for student visa applications and weighed the possibility of requiring all foreign students applying for a student visa to have their social media accounts vetted, sparking backlash from universities, higher education groups, and advocates concerned about the US’s ability to compete economically without top international talent pipelines.

- The FCC announced plans to close over 2,000 dormant dockets. The agency is seeking public comment and stated that the move will help free up resources and improve regulatory clarity. The FCC argued that clearing out inactive proceedings will support future infrastructure investments and allow staff to focus on the agency’s core mission.

- The CFPB announced its withdrawal of dozens of guidance documents issued since 2011. The agency said that many of the documents created a regulatory burden without going through proper rulemaking and will no longer be enforced. The Bureau said future guidance will only be issued when necessary and will be required to reduce compliance costs. Officials argued that the move aligns with legal standards and supports deregulation efforts.

- The Department of Commerce announced a clawback of funds for the Tech Hub program, originally established under the 2022 CHIPS and Science Act to support regional innovation and workforce development. The program seeks to award over $541 million in grants to 31 Tech Hubs, with $504 million in grants already awarded to 12 out of 31 designated hubs through a competitive, two-phase process. However, Commerce Secretary Howard Lutnick ordered a reversal of previously announced Phase 2 implementation awards and directed the Economic Development Administration to relaunch the competition for $220 million in loan funding. All 31 Tech Hubs must reapply, including six that had been told they would receive awards, under revised criteria that emphasize how projects will be a “bargain to taxpayers” and strip references to the previous administration’s policy goals related to diversity, equity, inclusion, labor, and energy.

- Despite reporting earlier in May that the Cybersecurity and Infrastructure Security Agency (CISA) might pause layoffs due to large cuts in personnel through deferred resignations and early retirements since the beginning of the Trump Administration, CISA’s workforce continued to shrink significantly. By the end of May, almost all leadership had left the agency, with five out of six operational divisions and six out of ten regional offices missing top leaders.

- The Commerce Department published guidance warning that using certain Chinese-made chips, like Huawei’s Ascend models, may violate US export rules. Companies without proper authorization risk enforcement action and significant penalties.

- The Government Accountability Office (GAO) released a report on “smart city” technologies used in transportation and law enforcement. While cities benefit from tools like traffic sensors and gunshot detectors, the report raised concerns about privacy, transparency, and data use.

- Elon Musk helped amplify a Russian disinformation campaign when he reposted a fake AI-generated video about USAID. The video falsely claimed that the agency paid celebrities to boost Ukrainian President Zelensky’s image.

In Congress:

- Sen. Ted Cruz (R-TX) held a Senate hearing to explore how cutting regulations on the AI supply chain could boost US innovation. Speakers included top tech leaders from OpenAI, AMD, CoreWeave, and Microsoft.

- Rep. Brett Guthrie (R-KY) and Rep. Gus Bilirakis (R-FL) held a hearing on AI regulation and US global leadership, emphasizing the need to avoid Europe’s restrictive policies and promote innovation-friendly rules.

In civil society:

- The Gay & Lesbian Alliance Against Defamation's 2025 Social Media Safety Index highlighted alarming setbacks in platform accountability, especially Meta’s decision to scale back protections against anti-LGBTQ hate speech. In response, GLAAD is expanding its strategies by advocating policy reforms and supporting LGBTQ users directly to confront rising online threats with data, education, and organized action.

- A coalition of 23 AI research labs, universities, and public interest groups urged Congress to fully fund NIST’s $47.7 million request to support AI safety research. They warned that underfunding NIST would hamper efforts to set technical standards and safeguard the public as AI rapidly advances.

- Engine, an advocacy nonprofit that represents tech start-ups, released a report urging policymakers to adopt AI policies that support startup innovation. The group warned that overly broad regulations, duplicative legal frameworks, and limited access to data and computers could sideline smaller firms. Engine emphasized the importance of open-source models, clear national standards, and startup access to foundational AI tools. It called on Congress to reject state-by-state patchworks and prioritize policies that sustain a competitive AI ecosystem.

In industry:

- OpenAI amended its plans to convert its for-profit work into a public benefit corporation after significant legal and public challenges. Although previous announcements planned for OpenAI’s nonprofit parent company to cede control over its for-profit operations, CEO Sam Altman announced that OpenAI’s nonprofit parent would now retain control after the restructuring.

- Apple announced plans to add AI-powered search options like OpenAI and Perplexity to Safari, directly challenging Google's $20 billion-a-year deal to remain the browser’s default search engine. In antitrust testimony, Apple Executive Eddy Cue said that Safari searches declined for the first time last month as users increasingly turned to AI tools.

In courts:

- Government plaintiffs, including the Department of Justice and state attorneys general, filed a list of proposed remedies to address Google's monopolistic practices in digital advertising, which included the divestiture of the company's AdX and DFP platforms.

- The Ninth Circuit affirmed a lower court’s decision to deny the Federal Trade Commission’s application for a preliminary injunction to block the acquisition of Activision Blizzard, Inc. by Microsoft Corp. In response, the Commission dismissed the complaint against Microsoft.

- Google agreed in principle to a $1.375 billion settlement with the state of Texas for “unlawfully tracking and collecting users’ private data regarding geolocation, incognito searches, and biometric data.”

- A District Court allowed a lawsuit against Character.AI to proceed. The suit was brought on behalf of a parent whose 14-year-old took his life after interacting with and becoming dependent on role-playing AI "characters" offered by the company.

Legislation Updates

The following bills made progress across the House and Senate in May:

- TAKE IT DOWN Act – S.146. Introduced by Sen. Ted Cruz (R-TX), the bill was signed into law, becoming Public Law No. 119-12.

- Guiding and Establishing National Innovation for US Stablecoins Act (GENIUS Act) – S.1582. Introduced by Sen. Bill Hagerty (R-TN), the bill passed the Senate.

The following bills were introduced across the House and Senate in May:

- App Store Accountability Act – S.1586. Introduced by Sen. Mike Lee (R-UT), the bill would “safeguard children by providing parents with clear and accurate information about the apps downloaded and used by their children and to ensure proper parental consent is achieved, and for other purposes.” A companion bill in the House, H.R.3149, was introduced by Rep. John James (R-MI).

- Kids Online Safety Act – S.1748. Reintroduced by Sen. Marsha Blackburn (R-TN), the bill would “protect the safety of children on the internet.”

- AI Whistleblower Protection Act – H.R.3460. Introduced by Rep. Jay Obernolte (R-CA), the bill would “prohibit employment discrimination against whistleblowers reporting AI security vulnerabilities or AI violations, and for other purposes.”

- Protecting AI and Cloud Competition in Defense Act of 2025 – H.R.3434. Introduced by Rep. Pat Fallon (R-TX), the bill would “provide for certain requirements relating to cloud, data infrastructure, and foundation model procurement.”

- Websites and Software Applications Accessibility Act of 2025 – H.R.3417. Introduced by Rep. Pete Sessions (R-TX), the bill would “establish uniform accessibility standards for websites and applications of employers, employment agencies, labor organizations, joint labor-management committees, public entities, public accommodations, testing entities, and commercial providers, and for other purposes.”

- American Privacy Restoration Act – H.R.3245. Introduced by Rep. Anna Paulina Luna (R-FL), the bill would “repeal the USA PATRIOT Act.”

- Reproductive Data Privacy and Protection Act – H.R.3218. Introduced by Rep. Ted Lieu (D-CA), the bill would “amend title 18, United States Code, to ensure requests for data on individuals do not pertain to reproductive services.”

- Protection Against Foreign Adversarial Artificial Intelligence Act of 2025 – S.1638. Introduced by Sen. Bill Cassidy (R-LA), the bill aims “to protect the United States from artificial intelligence applications based in or affiliated with countries of concern, and for other purposes.”

- Testing and Evaluation Systems for Trusted Artificial Intelligence Act of 2025 (TEST AI Act) – S.1633. Introduced by Sen. Ben Ray Luján (D-NM), the bill would “require the Director of the National Institute of Standards and Technology to establish a pilot program that uses testbeds to develop measurement standards for the evaluation of artificial intelligence systems, and for other purposes.”

- Artificial Intelligence Public Awareness and Education Campaign Act – S.1699. Introduced by Sen. Todd Young (R-IN), the bill would “require the Secretary of Commerce to conduct a public awareness and education campaign to provide information regarding the benefits of, risks relating to, and the prevalence of artificial intelligence in the daily lives of individuals in the United States, and for other purposes.”

- Traveler Privacy Protection Act of 2025 – S.1691. Introduced by Sen. Jeff Merkley (D-OR), the bill would “limit the use of facial recognition technology in airports, and for other purposes.”

- ACCESS Act of 2025 – S.1634. Introduced by Sen. Mark R. Warner (D-VA), the bill would “promote competition and reduce consumer switching costs in the provision of online communications services.”

- CHIPS Act Repeal and Federal Mandate Limitation Bill – S.1745. Introduced by Sen. Tom Cotton (R-AR), the bill would “repeal certain provisions of the CHIPS Act of 2022 and the Research and Development, Competition, and Innovation Act, to limit Federal mandates imposed on entities seeking Federal funds, and for other purposes.”

- Chip Security Act – S.1705. Introduced by Sen. Tom Cotton (R-AR), the bill would “require the Secretary of Commerce to issue standards with respect to chip security mechanisms for integrated circuit products, and for other purposes.” A companion House bill, H.R.3447, was introduced by Rep. Bill Huizenga (R-MI).

- Quantum LEAP Act of 2025 – S.1746. Introduced by Sen. Marsha Blackburn (R-TN), the bill would “establish the Commission on American Quantum Information Science Dominance, and for other purposes.”

- Post Quantum Cybersecurity Standards Act – H.R.3259. Introduced by Rep. Haley M. Stevens (D-MI), the bill would “amend the National Quantum Initiative Act and the Cyber Security Research and Development Act to advance the rapid deployment of post quantum cybersecurity standards across the United States economy, support United States cryptography research, and for other purposes.”

- Quantum Sandbox for Near-Term Applications Act of 2025 – H.R.3220. Introduced by Rep. Jay Obernolte (R-CA), the bill would “amend the National Quantum Initiative Act to establish a public-private partnership for near-term quantum application development and acceleration, and for other purposes.”

We welcome feedback on how this roundup could be most helpful in your work – please contact contributions@techpolicy.press with your thoughts.
