May 2023 U.S. Tech Policy Roundup

Kennedy Patlan, Rachel Lau, Carly Cramer / Jun 1, 2023

Kennedy Patlan, Rachel Lau, and Carly Cramer work with leading public interest foundations and nonprofits on technology policy issues at Freedman Consulting, LLC. Alondra Solis, a Freedman Consulting Phillip Bevington policy & research intern, also contributed to this article.

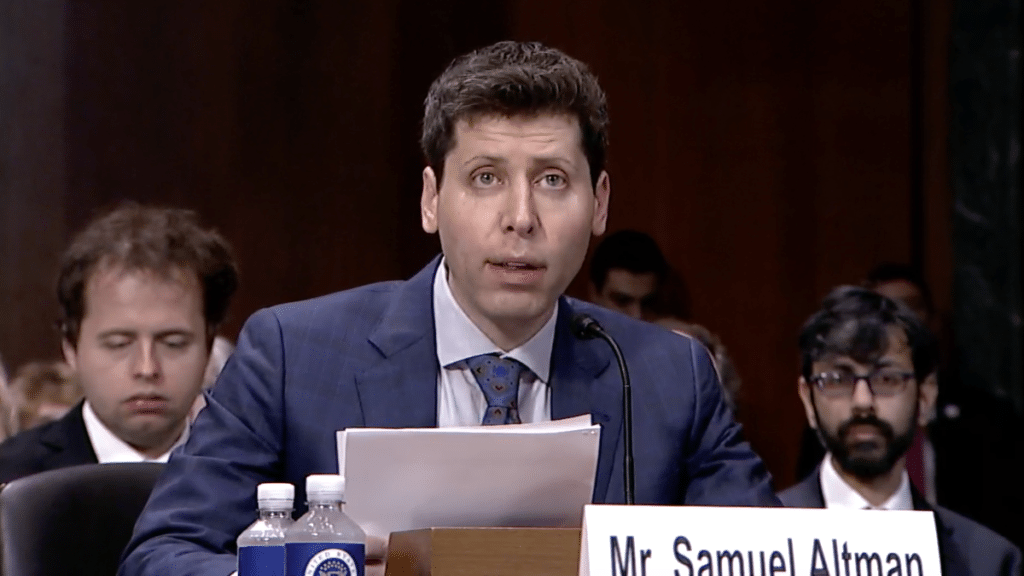

In May, ongoing debates about the ethical use of AI came to a head when OpenAI CEO Sam Altman testified before the Senate Judiciary Committee Subcommittee on Privacy, Technology and the Law. Altman encouraged policymakers to create a new agency dedicated to regulating emerging technologies. He was also among a group of influential tech CEOs and AI researchers who signed a statement encouraging society to mitigate the “risk of extinction” due to the rise of artificial intelligence.

At the agency level, the Federal Trade Commission (FTC) is coordinating an investigation into the pharmaceutical digital advertising market through its inquiry into a sector-shifting merger between IQVIA and PropelMedia. The agency also banned Easy Healthcare (creators of fertility tracking app Premom) from sharing consumer data with advertisers, and it released a policy statement on biometric information and its potential to pose harm to consumer privacy and data security, as well as its susceptibility to promoting bias and discrimination. In addition, the FTC released a proposed rule to amend the agency’s Health Breach Notification Rule to “better explain” the rule’s application for digital health apps and platforms that do not fall under HIPAA.

News reports revealed that the TSA is using facial recognition across more than two dozen U.S. airports, agreeing to a $128 million contract with software company Idemia last month despite objections from lawmakers across the aisle earlier this year and increasing security and privacy concerns. In addition, a bipartisan group of 10 lawmakers wrote a letter addressed to 20 data brokers, including Equifax, Oracle, and others, with a request for information regarding their collection and use of consumer data.

In corporate news, Facebook owner Meta must face external investigations into potential Cambridge Analytica-related privacy violations following a Delaware judge’s ruling that the company’s internal investigations displayed conflicts of interest. The Irish Data Protection Commission fined Meta $1.3 billion and ordered the company to suspend the transfer of EU user data within six months. TikTok was also under renewed scrutiny this month after it was revealed that the company has monitored journalists and users “who watch gay content.”

In May, Twitter was criticized for sharing violent images from the Allen, Texas mall shooting. The company also appointed a new CEO, Linda Yaccarino, who was previously an executive at NBCUniversal. Twitter announced that it will remove accounts with limited activity, raising questions about archiving platform content in the digital age. And lastly in Twitter news, former Fox News personality Tucker Carlson announced that he will be launching a show on the platform, after he was fired from Fox News.

Meanwhile, a Washington Post investigation revealed that Google is still storing users’ sensitive location data, including trips to abortion clinics, after previously announcing plans to delete such information.

The below analysis is based on techpolicytracker.org, where we maintain a comprehensive database of legislation and other public policy proposals related to platforms, artificial intelligence, and relevant tech policy issues.

Read on to learn more about May U.S. tech policy highlights regarding attempts across the federal government to rein in AI, continued pushes for children’s online privacy, and developments related to Section 230.

Federal Agencies Respond to AI Concerns

- Summary: Federal entities are accelerating efforts to issue guidance and seek information on how best to regulate AI. On May 23, the White House announced new efforts dedicated to enhancing responsible development and use of AI systems. The announcement included a strategic roadmap for federal investments in research and development, a request for information from the Office of Science and Technology Policy that seeks input on the development of a national strategy on AI and aims to inform future policy responses, and a report from the Department of Education that analyzes how AI will impact the future of learning.

- The Equal Employment Opportunity Commission released a new technical assistance document that explains how employers can use artificial intelligence systems to screen applicants and manage employees without infringing on civil rights. The new document, which is part of the EEOC’s Artificial Intelligence and Algorithmic Fairness Initiative, detailed how Title VII of the Civil Rights Act of 1964 applies to automated systems and reiterated that such technologies may be illegal without proper safeguards. This complements an interagency joint statement last month that emphasized the existing legal authorities that apply to the use of AI. Furthermore, the White House and the National Institute of Standards and Technology have also recently released guidance related to the safe and ethical use of automated systems.

- Stakeholder Response: The Lawyers’ Committee for Civil Rights Under Law, Upturn, and Public Citizen authored a letter signed by 14 leading civil rights organizations warning against provisions in the proposed Indo-Pacific Economic Framework that could make it more difficult for legislators to appropriately regulate AI. Human Rights Watch warned that AI has the potential to complicate human rights investigations through the proliferation of misinformation. The American Civil Liberties Union delivered its first congressional testimony on AI to the Senate Committee on Homeland Security and Governmental Affairs, reiterating the need for greater constitutional and regulatory protections.

- What We’re Reading: In a New York Times op-ed, Federal Trade Commission Chair Lina Khan argued that rigorous federal monitoring is critical in preventing automated discrimination. Annie Lowrey of The Atlantic articulated the need for strong workforce protections as artificial intelligence threatens to make some jobs obsolete. After Sam Altman, the CEO of OpenAI, testified before the Senate Judiciary Subcommittee on Privacy, Technology, and the Law, the Brookings Institution’s Darrell West argued that the hearing demonstrated the need for stricter AI regulation. The Washington Post Editorial Board argued that AI regulations should mandate transparency and prevent discriminatory harms to civil rights and liberties. In Tech Policy Press, AI Now Institute Managing Director Sarah Myers West reviewed the U.S. national AI R&D strategy and suggested how it could be improved.

Kids Online Privacy Bills on the Move in Congress

- Summary: Kids online privacy bills continued to move through Congress in May. Sens. Marsha Blackburn (R-TN) and Richard Blumenthal (D-CT) reintroduced the Kids Online Safety Act (KOSA), which was first introduced in February 2022 and passed the Commerce Committee unanimously in the 117th Congress. KOSA’s reintroduction was co-sponsored by over 30 senators, including both Democrats and Republicans. KOSA would “require social media platforms to provide minors with options to protect their information, disable addictive product features, and opt-out of algorithmic recommendations—and require platforms to enable the strongest settings by default.” It also would mandate that social media platforms create tools for parents to report harmful behaviors; establish a duty for social media platforms to assess, prevent, and mitigate harms to minors; and share datasets with academic researchers to research the impact of social media on minors. Slight modifications were made from the 2022 version of the bill, including changes around age verification, covered ages, and an explicit list of harms that platforms must take reasonable steps to mitigate, including preventing access to posts promoting suicide, eating disorders, and substance abuse.

- Additionally, the Senate Judiciary Committee marked up and advanced the Eliminating Abusive and Rampant Neglect of Interactive Technologies (EARN IT) Act (S. 1207) with bipartisan support. The Senate Judiciary Committee also unanimously advanced the Strengthening Transparency and Obligations to Protect Children Suffering from Abuse and Mistreatment (STOP CSAM) Act of 2023 (S. 1199). The introductions and contents of the EARN IT Act and STOP CSAM Act were covered in the April 2023 Tech Policy Roundup.

- Sens. Ed Markey (D-MA) and Bill Cassidy (R-LA) also reintroduced the Children and Teens’ Online Privacy Protection Act (COPPA 2.0, S. 1418) this month. COPPA 2.0 would amend the Children's Online Privacy Protection Act of 1998 to strengthen online protections for users under the age of 17 by requiring platforms to obtain opt-in consent before collecting any personal information from minors. It would also ban online marketing targeted toward minors, require platforms to let kids and their parents delete personal information, and establish a Youth Privacy and Marketing Division at the Federal Trade Commission to enforce these provisions. COPPA 2.0 was also introduced in the 117th Congress, but was not voted out of the Committee on Commerce, Science, and Transportation.

- Other federal bodies also weighed in on kids’ online safety in May. The White House announced a slate of actions addressing online safety for minors, which included the establishment of an interagency Task Force on Kids Online Health & Safety with the Department of Health and Human Services and the Department of Commerce. The U.S. Surgeon General published a report titled “Social Media and Youth Mental Health,” which acknowledged the potential benefits of social media among youth, but also highlighted the potential negative mental health impacts and risk of exposure to harmful content.

- Stakeholder Response: The reintroduction of KOSA has led to an avalanche of responses from civil society and industry groups. The Electronic Frontier Foundation (EFF) published an article opposing KOSA, arguing that the bill “requires filtering and blocking of legal speech,” gives too much power to attorneys general to decide what content is dangerous, and creates issues related to age verification on platforms. NetChoice made a statement criticizing the bill, raising the dangers posed by age verification mechanisms and increased data collection. In contrast, ParentsTogether Action, Dove Self-Esteem Project, and Common Sense Media partnered to run a campaign supporting the passage of KOSA. Common Sense supported the reintroduction and Children and Screens: Institute of Digital Media and Child Development’s Executive Director also expressed support for the bill. Finally, the Eating Disorders Coalition for Research, Policy & Action (EDC) published a letter in support of KOSA.

- COPPA 2.0 sparked responses from civil society groups: over 50 organizations endorsed the legislation at the bill’s reintroduction. James P. Steyer, founder and CEO of Common Sense Media, published a statement in support of COPPA 2.0. The bill has also run into some opposition: the American Action Forum argued that COPPA 2.0 could “harm competition and innovation in digital markets, user privacy, and online speech,” pushing for a federal data privacy standard instead. Additionally, the Federal Trade Commission filed an amicus brief this month supporting a federal appeals court’s ruling in Jones v. Google, a case reviewing COPPA’s preemption of state privacy laws that could potentially impact the purview of COPPA 2.0.

- The EARN IT Act continued to be contentious, with cybersecurity advocates reporting concerns about the potential chilling effect on the development and roll-out of end-to-end encryption services. Additionally, the Center for Democracy & Technology published a letter to the Senate Judiciary Committee in partnership with over 100 other organizations opposing the EARN IT Act, arguing that it undermines free expression, makes communication and privacy less secure, and threatens child abuse prosecutions. Sen. Ron Wyden (D-OR) opposed both the EARN IT Act and STOP CSAM Act, arguing that the bills would make children less safe by weakening encryption. However, a group of over 200 organizations also published a letter to Congress in support of the EARN IT Act, arguing that it “allows victims the possibility of restoring their privacy, recognizes CSAM as a serious crime, and protects the rights of children online.”

- What We’re Reading: Mark MacCarthy at the Brookings Institution put KOSA in context of how the U.S. has historically addressed children’s privacy. Vox’s Sarah Morrison reported on how other children’s online safety legislation at the state level can help us understand the risks of KOSA. The American Psychological Association published a report on the scientific evidence to date on the impact of social media on adolescents, with recommendations on how social media’s positive impacts could be maintained while negative impacts are mitigated. At Tech Policy Press, Tim Bernard published a compendium and analysis of 144 child online safety bills at the state level, while Amanda Beckham argued for the merits of national data privacy legislation.

Supreme Court Resolves Section 230 Cases Without Weighing in on Section 230

- Summary: The Supreme Court rulings on Twitter v. Taamneh and Gonzalez v. Google proved anticlimactic this month as the justices rejected the cases without a wider pronouncement on Section 230 of the Communications Decency Act. The rulings were narrowly decided, finding that the platforms were not liable for content promoting terrorism on their sites due to the specifics of the cases, but leaving the door open for future litigation about Section 230. Experts say that the Supreme Court is likely to next take on cases considering laws in Texas and Florida restricting online content moderation. According to many stakeholders, the Supreme Court’s decision to not weigh in on Section 230 put the onus back on Congress to pass legislation relating to the law. A plethora of Section 230 bills are on the table in the 118th Congress, including the EARN IT Act (discussed above) and the Curtailing Online Limitations that Lead Unconstitutionally to Democracy’s Erosion (COLLUDE) Act (S.1525), introduced by Sen. Eric Schmitt (R-MO), which would eliminate Section 230 immunities for tech companies if they “collude with the government to censor free speech.”

- Stakeholder Response: Public Knowledge applauded the Supreme Court decisions, arguing that it is Congress’s role, not the Courts’, to address the goals of free expression and competition online. House Energy and Commerce Committee Chair Rep. Cathy McMorris Rodgers (R-WA) issued a statement arguing for reforms to Section 230. Sen. Ron Wyden (D-OR) supported Section 230 in response to the ruling, saying that the law has been “unfairly maligned by political and corporate interests” and that it is “vitally important to allow users to speak online.” On the opposite side, Sen. Lindsey Graham (R-SC) pushed for the repeal of Section 230 following the ruling, promoting the EARN IT Act (which would create a carveout in the law) and threatening to introduce legislation to fully repeal Section 230 if the EARN IT Act fails.

- What We’re Reading: Ben Lennett at Tech Policy Press wrote about the consequences of the decisions. Four legal experts weighed in on the cases’ impact on free expression at Freedom House. Bloomberg provided an overview on Section 230 and other laws moderating speech online in the United States. Bina Venkataraman’s op-ed at The Washington Post argued for “a nuanced, legislative cure for social media’s ills” following the Supreme Court decision. AP News published an explainer on the impact of the decisions on technology companies. And Georgetown Law professor Anupam Chander joined Justin Hendrix on the Tech Policy Press podcast for a final word on the cases.

Federal Agencies Seek Input on AI

Multiple federal agencies are soliciting public input on AI policy across key issue areas, and public interest groups are facilitating efforts to collect comments:

- The National Telecommunications and Information Administration issued an RFI as it works to develop policies in support of more effective AI audits.

- Deadline: June 10th, 2023

- Comments can be submitted here.

- The Consumer Financial Protection Bureau is seeking information on data brokers in relation to the Fair Credit Reporting Act.

- The White House Office of Science and Technology Policy issued an RFI that aims to understand the role of automated technologies in employment.

- Deadline: June 15th, 2023

- Comments can be submitted here.

- The National Science Foundation is requesting public input on the development of its new Directorate for Technology, Information, and Partnerships.

- Deadline: July 27th, 2023

- Comments can be submitted here.

Other New Legislation and Policy Updates

- Require the Exposure of AI–Led (REAL) Political Advertisements Act (H.R. 3044, sponsored by Rep. Yvette Clarke (D-NY)): This bill would expand current disclosure requirements for political advertising to include notice if generative AI was used to create any videos or images in the ad. The Senate companion bill was also introduced this month by Sens. Amy Klobuchar (D-MN), Cory Booker (D-NJ), and Michael Bennet (D-CO).

- You Own the Data Act (H.R. 3045, sponsored by Rep. Michael Cloud (R-TX)): This bill “establish[es] a standard that users own their data, and that only with a user’s consent can a company collect, share, or retain an individual’s information.” It would require companies to obtain user consent before collecting their data and create a private right of action for users to sue tech companies.

- Protecting Young Minds Online Act (H.R.3164, sponsored by Rep. Bryan Steil (R-WI)): This bill would mandate that the Center for Mental Health Services create a strategy that addresses the impacts of new technologies such as social media on children's mental health.

- AI Leadership Training Act (S.1564, sponsored by Sen. Gary C. Peters (D-MI)): This bill would require the Director of the Office of Personnel Management to create an AI training program for federal management officials and supervisors. The program would be updated every two years and include information on how AI works, different types of AI, benefits and risks of AI, mitigating AI risks, refinement of AI technology, the role of data in AI, AI failures, and the norms and practices when using AI.

- Immersive Technology for the American Workforce Act of 2023 (H.R.3211, sponsored by Rep. Lisa Blunt Rochester (D-DE)): This bill would allow the Secretary of Labor to establish a grant for community colleges and career and technical education centers to create immersive technology education and workforce training programs that facilitate entry into high-demand industry sectors and occupations. Immersive technology includes extended reality, virtual reality, augmented reality, and mixed augmented reality. The Secretary of Labor and Secretary of Education would be responsible for establishing and publishing best practices for using immersive technology in training programs.

- Know Your App Act (sponsored by Sen. Tim Scott (R-SC)): This bill would mandate that application stores disclose the country from which an application originates and provide a disclaimer to users if an application originates from a “country of concern,” as determined by the Secretary of the Treasury and the Secretary of Commerce.

Public Opinion Spotlight

A Pew Research Center study conducted among 10,701 U.S. adults from March 13-19, 2023 regarding Twitter users’ views about the platform found that:

- 32 percent of all users say the platform is mostly good for democracy, while 28 percent say the platform is mostly bad

- 54 percent of all users believe inaccurate or misleading information is a major problem on Twitter

- 68 percent of Democrats believe inaccurate or misleading information is a major problem on Twitter, while only 37 percent of Republicans believe it is a problem

- 48 percent of users say harassment and abuse from other users is a major problem, with 65 percent of Democrats and 29 percent of Republicans agreeing

- 30 percent of users believe Twitter banning users from the platform is a major problem, with 43 percent of Democrats and 19 percent of Republicans agreeing

A poll conducted by Morning Consult from March 17-21 with 1,991 registered voters on tech regulation found that:

- 56 percent of voters believe the federal government is more responsible for passing internet and technology regulations, while 44 percent believe states are more responsible

- 80 percent of voters support state laws that protect the privacy of children online

- 54 percent of voters support state laws that require IDs to access adult websites

- 52 percent of voters support state laws that limit social media platforms' ability to perform content moderation

A national poll conducted by Morning Consult and EdChoice between March 24 and April 5, 2023 among 1,000 teens regarding their experiences in school found that:

- 42 percent of teens have heard a lot or some about ChatGPT

- 60 percent of teens have not used ChatGPT either in school or in their free time, while 15 percent of teens have

- 72 percent of teens rely on social media to get information about current events

A national Change Research poll conducted between April 28-May 2, 2023 asked 1,208 registered voters about their views of AI and found that:

- 41 percent of respondents believe Congress should be the driving force behind AI policies and regulations, while 20 percent believe the driving force should be tech companies

- 54 percent of respondents believe Congress should take action to regulate AI in a way that promotes privacy, fairness, and safety

- 15 percent of respondents believe regulating AI will stifle innovation and hinder the development of AI tools

Impact Research ran a poll for Common Sense Media from March 28–April 9, 2023 and surveyed 1) 1,181 parents who have children ages 5–18 enrolled in grades K–12 and 2) 300 students ages 12–18 enrolled in grades K–12 on AI attitudes. The poll found that:

- 38 percent of students say they have used ChatGPT for a school assignment without their teacher's permission

- 68 percent of parents and 85 percent of students believe that AI programs will have a positive impact on education

- 89 percent of parents and 85 percent of students support schools putting rules in place for ChatGPT before it is allowed to be used for school assignments

- 77 percent of parents and 75 percent of students support schools setting an age limit on the use of ChatGPT

A poll conducted by Ipsos from May 9-10, 2023 among 1,117 U.S. adults on issues in the news found that:

- 45 percent of U.S. adults are somewhat comfortable with AI analyzing data to help companies make decisions

- 35 percent of U.S. adults are somewhat comfortable with AI screening job applicants while 34 percent are not very comfortable

- 35 percent of U.S. adults are not very comfortable with AI creating video or audio in the likeness of actual actors from the past or present

A Quinnipiac University survey of 1,819 U.S. adults, conducted from May 18 to May 23, 2023, found that:

- 54 percent of U.S. adults believe that artificial intelligence poses a danger to humanity, while 31 percent think that it will benefit humanity.

- Among employed respondents, 24 percent reported that they were very concerned (8 percent) or somewhat concerned (16 percent) that artificial intelligence may make their jobs obsolete. 77 percent said that they were not so concerned (22 percent) or not concerned at all (55 percent).

- - -

We welcome feedback on how this roundup and the underlying tracker could be most helpful in your work – please contact Alex Hart and Kennedy Patlan with your thoughts.
