Leak Reveals Facebook Data Management At Odds with GDPR

David Carroll / Apr 27, 2022

Facebook tracks its users, which is legal, but it doesn’t track how it uses its users’ data, which likely isn’t legal, says David Carroll.

An internal document from Meta, the company that operates Facebook, Instagram and WhatsApp, surfaced in a leak to Motherboard/Vice and appears to show that the company is presently unable to comply with some of the most basic requirements of the European Union’s data protection regulation. Experts reviewing the document, which describes the company’s “preparedness, investments and technology plans with respect to inbound regulations,” swiftly highlighted instances of Facebook documenting its own non-compliance internally, especially concerning the purpose limitations and subject access rights that undergird the General Data Protection Regulation (GDPR), the sweeping law that enshrined the data protection rights guaranteed in the European Union’s Charter. This may create substantial liability for the company.

In a way, the European regulations mandate a kind of building code for designing the factories of industrial data processing. To comply with GDPR, personal data flows must be traced through the supply chain and across the metaphorical conveyor belts combining and assembling the automated attention auctioneering that powers Facebook’s advertising business model. But this document appears to confirm that Facebook never bothered to build a traceable, accountable data inventory control system. Without data flow controls and accounting, it cannot adequately respond to people exercising their data rights or forensically verify its compliance for regulators. Will it be forced to do anything about this now?

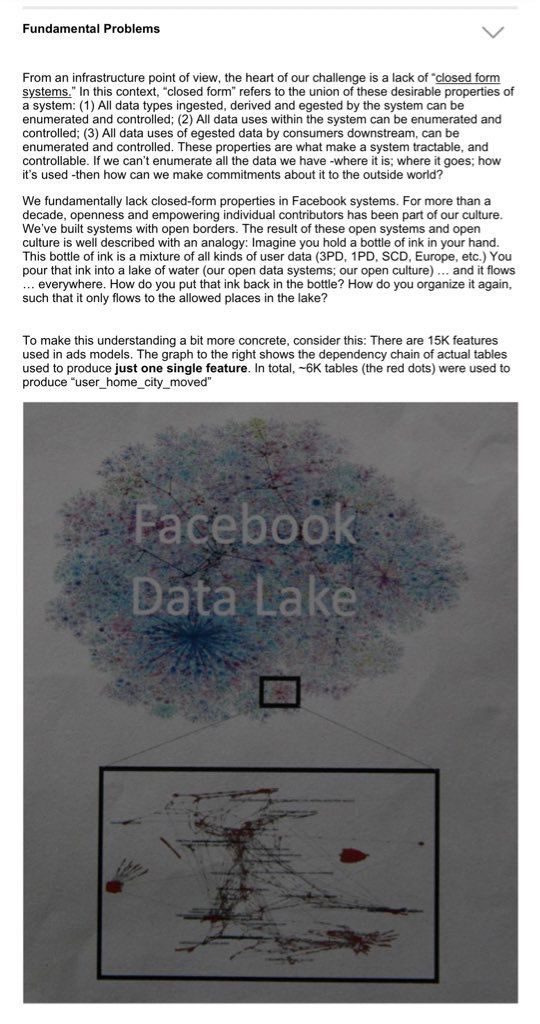

Seemingly ignorant of established European data protection jurisprudence, Mark Zuckerberg’s original ideology of an “open-and-connected” ads business, with his network forming the center of everyone’s social life, guided his engineers to structure the company’s data management systems as highly entangled data lakes that pool data flows freely and fluidly. While exceptional at deducing, with profitable accuracy, when a user moves from their hometown out of fifteen thousand data points, this approach is fundamentally unable to provide for many of the GDPR’s basic requirements: limiting the use of data to specific consented purposes and enabling people’s data rights, which include access and erasure. These basic legal frameworks specify design requirements for closed and strictly controlled data flow mechanisms that carefully curate, isolate, and audit data manipulations. The engineers and product managers who must have authored the leaked internal report make a compelling case to upper management for rethinking everything about how the company collects data to deliver ads. Simply put, Facebook was never built to protect Europe’s data rights, and it needs to be radically reimagined and rebuilt to accommodate even the basics.

Fundamental Problems: A diagram from the leaked report illustrates how Facebook deliberately intermingles data in data lakes so that algorithms more fluidly recognize user behaviors useful for vectors of ads targeting - Motherboard/Vice

In another section of the report, the engineers give some indication of the nuances of what it means to “opt-out” within Facebook. Users probably understand this choice as a simple on-or-off expression, but one reason re-structuring data management is a daunting task is that certain opt-out data “is used in training but not in ranking or targeting.” Even if a more GDPR-compliant system that lawfully curates data for ads is developed moving forward, it would still require an efficient means to “filter opted-out users” that will take “multiple years” to build. The report goes on to describe how the company had plans to launch a special version of ads for Europeans with more extensive opt-outs than the rest of the world, a further indication that Facebook remains uncomfortably incompatible with the European values expressed in the EU’s dignity-defending data protection policies. Offering users clear and lawful choices about how their data gets re-purposed at Meta is apparently not so easy.
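To make the idea of purpose limitation concrete, here is a minimal sketch of what a per-purpose opt-out filter could look like in principle. It is purely illustrative: the names `Purpose`, `Record` and `PurposeGate` are hypothetical and do not come from the leaked report, and nothing here describes how Meta's systems actually work. It only shows that a record tagged with its permitted purposes can be admitted for training while being excluded from targeting, with every decision logged for audit.

```python
from dataclasses import dataclass, field
from enum import Enum


class Purpose(Enum):
    # Hypothetical purposes, echoing the report's "training", "ranking", "targeting".
    TRAINING = "training"
    RANKING = "ranking"
    TARGETING = "targeting"


@dataclass(frozen=True)
class Record:
    user_id: str
    value: str
    allowed_purposes: frozenset  # purposes this user has not opted out of


@dataclass
class PurposeGate:
    # Each entry is (user_id, purpose, allowed) so decisions can be audited later.
    audit_log: list = field(default_factory=list)

    def filter(self, records, purpose):
        """Return only the records permitted for `purpose`, logging every decision."""
        permitted = []
        for record in records:
            allowed = purpose in record.allowed_purposes
            self.audit_log.append((record.user_id, purpose.value, allowed))
            if allowed:
                permitted.append(record)
        return permitted


records = [
    Record("alice", "moved_city", frozenset(Purpose)),             # no opt-outs
    Record("bob", "moved_city", frozenset({Purpose.TRAINING})),    # opted out of ranking and targeting
    Record("carol", "moved_city", frozenset()),                    # opted out of everything
]

gate = PurposeGate()
training_set = gate.filter(records, Purpose.TRAINING)
targeting_set = gate.filter(records, Purpose.TARGETING)
```

The sketch is trivial precisely because each record carries its own purpose tags from the start. The difficulty the report describes is that data pooled in entangled data lakes carries no such tags, so there is nothing to filter on.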

"We do not have an adequate level of control … over how our systems use data, and thus we can't confidently make … commitments such as 'we will not use X data for Y purpose'"

From the summary of the internal doc "written to advise leadership" in 2021: https://t.co/j8TxtZOMWs pic.twitter.com/QaitPHAgcB

— Wolfie Christl (@WolfieChristl) April 26, 2022

This latest document leak plainly contradicts public claims by the company that it is presently complying with the GDPR, including the regulation’s limitations on the purposes of data collection and its requirement to fulfill user requests to locate all of their personal data and potentially claw it back for erasure. Even worse, the document details how expensive it would be to radically re-architect and re-code systems to even begin to provide for compliance. Adding insult to injury, the leak also demonstrates the inadequacy of the regulatory enforcement and auditing regime that is tasked with somehow ensuring that tech titans are not being deceptive in their claims of compliance. (Apparently, they are.)

Wicked problems of designing for tech transparency are starkly illustrated in this leak, once the reader gets beyond the company’s internal jargon and acronyms. It becomes abundantly clear that Facebook was never designed to protect and manage data responsibly, and that only now are the company’s workers realizing more concretely what the law expects. Their unpreparedness to comply with the European data protection regime reflects the extent to which privacy remains an afterthought at Meta to this day. The company is nowhere close to achieving GDPR compliance, even as it will soon be expected to comply with the Digital Services Act (DSA) and Digital Markets Act (DMA) in addition to the “tsunami” of new regulations building up around the world, much of it meticulously documented in the leaked report.

In hindsight, Facebook instigated this regulatory backlash. These new rules were arguably catalyzed in large part by the Cambridge Analytica scandal that first dominated headlines in the spring of 2018. Now, in the spring of 2022, Menlo Park’s public relations machine is probably delighted that people are too preoccupied with the fate of another important social networking platform, purchased by another unaccountable billionaire, to worry much about the intrinsic intractability of Facebook. As with Cambridge Analytica, if it weren’t for whistleblowers leaking to journalists, we would still be in the dark about all of it.
