Lawmakers are talking the talk on Big Tech accountability. But will they walk the walk?

Nathalie Maréchal / Mar 2, 2022

Nathalie Maréchal, Ph.D., is the Senior Policy and Partnerships Manager at Ranking Digital Rights.

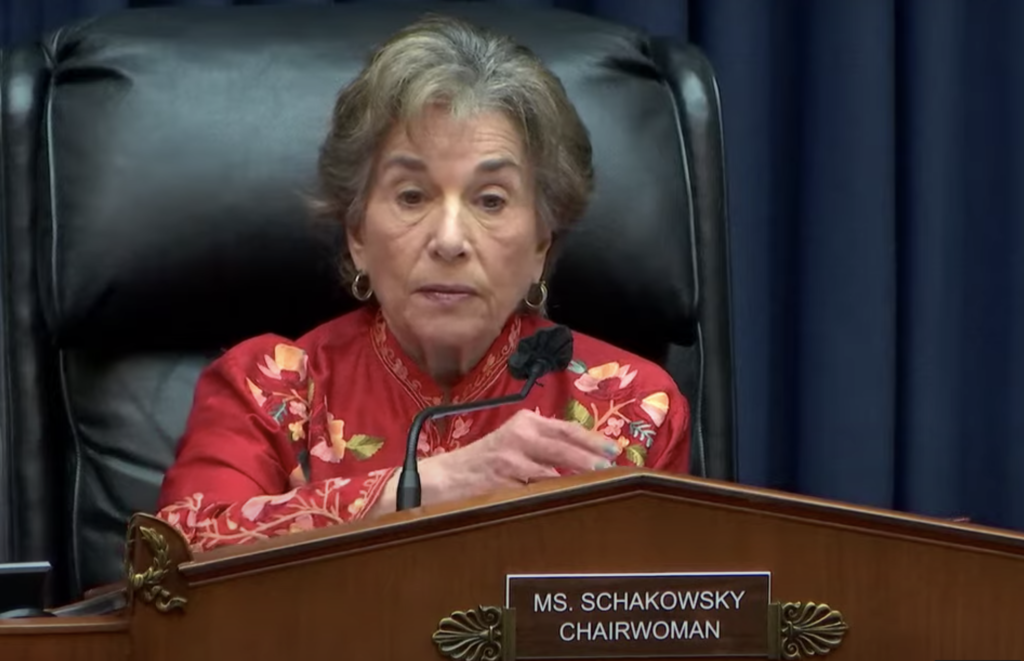

On Tuesday, the House Energy & Commerce Committee held its latest hearing on “Holding Big Tech Accountable,” focusing on recently introduced legislation to protect users online. As many observers have noted, the dialogue at Congressional tech hearings keeps getting more sophisticated, and this one was no exception. Members and witnesses alike demonstrated their grasp of Big Tech’s social harms and commitment to addressing them.

Of course, there was no escaping the obligatory dog-whistles about encryption, gratuitous bemoaning of Section 230, and requisite reminders that various legislators are indeed parents or grandparents, but at least we avoided dubious complaints about anti-conservative bias on social media platforms. The good news is that the bills under consideration all pursue legitimate policy goals in a thoughtful way—a high bar for a Congressional tech hearing. Below, I evaluate the dialogue on the bills proposed by House Republicans and Democrats, and discuss the path forward.

The proposed Republican bills

The two Republican-sponsored bills discussed are narrowly focused on the role of law enforcement in protecting people from online illegal activity. The CAPTURE Act requires the Comptroller General, who heads the Government Accountability Office (GAO), to study the relationship between Big Tech platforms and law enforcement, and to make recommendations for new legislation to prevent, identify and prosecute online criminal activity like child exploitation and illegal drug sales. The Increasing Consumers’ Education on Law Enforcement Resources Act directs the Federal Trade Commission (FTC), the Attorney General and other relevant federal agencies to conduct an education campaign to help Americans understand where to get help when their safety and security have been violated online.

The E&C Republicans directed most of their questions to their sole witness, a Florida law enforcement officer named Mike Duffey whose answers underscored the need for something like the CAPTURE Act to empirically document how online platforms do (and don’t) cooperate with law enforcement. A rigorous GAO study would be a boon for evidence-based policy-making. These are fine bills that would almost certainly help people who are harmed by online illegal activity, but they would do little to make Big Tech companies themselves more accountable.

The proposed Democrat bills

The three Democrat-sponsored bills are much broader, aiming to finally bring some oversight and accountability to a tech sector (broadly defined) that has evaded it for far too long.

The Algorithmic Accountability Act (AAA) is a comprehensive, forward-looking bill that requires all private companies—not just social media platforms—to assess the impacts of automated systems that they use or develop that make "critical decisions" about people's lives, and share these impact assessments with the FTC under certain conditions. The precise scope of “critical decisions,” as well as other implementation details, will be determined by the FTC through rule-making, but the bill’s authors have made clear that it would not include systems for moderating social media content. The AAA’s main drawback—and it’s a big one—is that it doesn’t apply to government agencies or non-profits, as these actors don’t fall under the FTC’s jurisdiction. That being said, many (perhaps even most) of the algorithmic tools used by the public and nonprofit sectors are developed by private companies, so the tools themselves would be covered by the bill.

The Banning Surveillance Advertising Act does what it says on the label: it prohibits “advertising facilitators” from targeting ads, with the exception of broad location targeting to a recognized place (e.g., a municipality), and prohibits advertisers from targeting ads based on protected class information and user data purchased from a third party. Contextual advertising is explicitly allowed. In other words, its ambition is to abolish targeted, behavioral and programmatic advertising and reshape the internet economy around contextual advertising, subscription models and new business models that haven’t been invented yet.

The current version of the bill goes further than the EU’s proposed Digital Services Act by prohibiting targeting based on personal data that users have opted to share. This departure from the broken “notice and consent” model is welcome, but one can nonetheless imagine models where users would opt in to predefined audience interest categories in exchange for site access, as long as the opt-in is truly voluntary and the categories are carefully vetted for discrimination risks. Such a provision could also go a long way toward assuaging legitimate concerns from civil rights organizations, other public interest groups and political candidates challenging well-resourced incumbents. It would further undermine Alphabet and Meta’s self-serving talking point about the importance of surveillance advertising for small businesses.

Finally, the Digital Services Oversight and Safety Act (DSOSA) creates a new FTC Bureau of Digital Services Oversight and Safety to study “systemic risks” of online platforms, including the dissemination of illegal content or goods, discrimination based on protected class status, and malfunctioning or intentional manipulation of a hosting service. This could include “the amplification of illegal content, and of content that is in breach of the community standards of the provider of the service and has an actual or foreseeable negative effect on the protection of public health, minors, civic discourse, electoral processes, public security, or the safety of vulnerable and marginalized communities.”

The complex bill would further require platforms to be transparent about their content moderation processes and the aggregate outcomes of their policies, and to offer appeal mechanisms for users whose content is moderated. It also bears noting that DSOSA is sensitive to platform size and scope, a necessary policy design that acknowledges the pitfalls of one-size-fits-all approaches to tech regulation. The largest platforms (defined as services whose user base amounts to roughly 20% of the US population) face the heaviest compliance burdens and would have to report on systemic risks like illegal content, user discrimination, and platform manipulation. Platforms would also have to submit to independent audits and be transparent about how user data is used in algorithmic recommendation systems. Ultimately, the FTC would develop and share guidance on systemic risk mitigation and facilitate independent researcher access to platform data.

How to move forward

The AAA, Banning Surveillance Advertising Act, the DSOSA, and the conversation surrounding these bills should finally put to rest the tired notion that Capitol Hill doesn’t understand the technology sector well enough to regulate it. They may fall short of adding up to a comprehensive privacy bill, as several Republicans noted, but they represent three big steps in the right direction.

If enacted, these bills would force AI companies to think about how their products could go wrong before unleashing them on the world, and gut the Alphabet/Meta duopoly’s existing surveillance advertising revenue model while shifting the incentive structures for entire industries: social media platforms, data brokers, ad tech providers, and the myriad companies that make money directly or indirectly from third-party data sales. They would also give the FTC the authority and capacity it needs to truly protect people from unfair and deceptive practices in the 21st century. Such achievements would be a huge win, and I urge my fellow public interest advocates to work with the relevant lawmakers to strengthen these bills and move them along the legislative process.

This week’s hearing, which builds on two hearings in late 2021 (I testified at the second), leaves one question wide open: how serious the leaders of both parties really are about passing Big Tech accountability legislation in this Congress. At each hearing, representatives from both parties lobbed barely veiled accusations of partisanship while claiming to only be waiting for their counterparts to engage in good faith.

Republican attempts to portray the Democrats as uninterested in comprehensive privacy legislation are especially unpersuasive, given their caucus’ inability to produce a bill that would actually extend consumer privacy rights. Rep. Cathy McMorris Rodgers’ (R-WA) Big Tech Accountability Platform memo centers the same kind of transparency that civil society groups have been calling for. Yet transparency is completely missing from the Republican bills discussed in the recent E&C hearings. If Rep. McMorris Rodgers is serious about transparency, she needs to introduce a bill laying out her Big Tech transparency agenda.

More positively, many House E&C Democrats and several Republicans seem convinced that the various harms we’re all concerned about are related to business models driven by surveillance advertising. Rep. Greg Pence (R-IN) gave an especially cogent summary of that argument, which is one I’ve been making since at least 2018. Let’s hope they act on it by supporting the Banning Surveillance Advertising Act. (Actually, don’t hope. Lobby them. You know Meta and Alphabet are.)

On the Democrat side, even if these and other promising bills make it out of the E&C Committee (and I think at least some of them will), they’ll still need to pass the full House and the Senate on their way to the Resolute Desk, and the odds will be better if they have leadership support. But where do Speaker Nancy Pelosi (D-CA), Majority Leader Chuck Schumer (D-NY) and other top Democrats stand?

As for President Biden, his understanding of tech policy issues has historically been shaky. He appeared to signal something by inviting Frances Haugen to join Dr. Jill Biden at the State of the Union, and used the speech to call for banning surveillance advertising for children, framing it as part of a holistic push for youth mental health. Dr. Alondra Nelson’s elevation as Director of the White House’s Office of Science and Technology Policy is another positive sign, but it will nonetheless take sustained civil society pressure to prevent the tech accountability agenda from being deprioritized.

My fellow Big Tech accountability advocates, we have our work cut out for us.
