Judging Platform Responses to Election Mis- and Disinformation

Justin Hendrix / Aug 28, 2022

Audio of this conversation is available via your favorite podcast service.

In last Sunday’s podcast, I promised an occasional series of discussions on the relationship between social media, messaging apps, and election mis- and disinformation. In today’s show, I’m joined by two guests who just did a deep dive into the issue, producing a Scorecard for New America’s Open Technology Institute that compares the policies and performance of the tech companies on multiple dimensions:

- Spandana (Spandi) Singh, a policy analyst at New America's Open Technology Institute, and

- Quinn Anex-Ries, PhD candidate in American studies at USC and an intern with the Open Technology Institute this summer.

Their findings are summarized in a report, Misleading Information and the Midterms: How Platforms are Addressing Misinformation and Disinformation Ahead of the 2022 U.S. Elections.

What follows is a lightly edited transcript of our discussion.

Justin Hendrix:

So before we get started looking at this specific report that you all published at the end of last month on misinformation and misleading information in the midterms, tell me a little bit about your research generally.

Spandi Singh:

Sure. So OTI has a pretty long-standing history of working on platform accountability issues. Over the last few years, my work has really focused on examining how internet platforms are moderating content on their services, how their policies and processes are structured, and how they're using AI and machine learning to curate the content we see, through newsfeed ranking, ads, recommender systems, and traditional moderation. Overall, our work is really trying to identify ways that we can push platforms to be more transparent and accountable in this regard, and to develop policies and practices that are equitable, fair, and really aligned with the broader spectrum of digital rights in this space.

Justin Hendrix:

Quinn, what's your part in this work?

Quinn Anex-Ries:

In the past, OTI published an initial report about how 11 different internet platforms were addressing the spread of election mis- and disinformation. And so as a follow-up to that, we worked on publishing the Scorecard this summer. And so I was looped in to help do the background research and writing of the follow-up Scorecard.

Justin Hendrix:

I suppose you could say the US 2020 election was a disaster when it comes to mis- and disinformation. Part of that is because, of course, so many Republican elites essentially supported the Big Lie that Donald Trump had in fact won the election. Do you think that based on your research for this update at the midterms, that the platforms seemed to have learned some lessons from the 2020 cycle?

Spandi Singh:

So I'm happy to talk about some of the specifics, and Quinn can definitely talk about the positive areas, but just as some context, I think 2020 was a really interesting moment from a process and policy standpoint. Although we had January 6th and many examples of how platform efforts to address misinformation failed, I think it's important to recognize that the efforts we saw in 2020 were actually a vast improvement over what we saw in 2016. Pre-COVID, when platforms were trying to tackle misinformation and disinformation, their efforts were frankly very limited, but when COVID started and we began seeing the very real offline consequences of COVID misinformation and disinformation, platforms really expanded the repertoire of interventions they were using.

And then the closer we got to the 2020 elections, I think there were major concerns, rightfully so, around voter suppression in communities of color and the spread of conspiracy theories. So platforms carried many of those COVID interventions over to the election cycle. Now, again, obviously not all of them were effective; we still had January 6th, and we still saw Stop the Steal conspiracy theories continue to spread afterwards. But I just want to emphasize that there has been a fundamental shift, and of course, more is needed. I think that context is important. And Quinn can talk about some of the positive changes that we've seen.

Quinn Anex-Ries:

So based on our research, we found that between 2020 and now, there have actually been a few positive developments in how platforms are addressing election mis- and disinformation. For instance, all of the platforms that we evaluated have instituted policies to address the spread of election mis- and disinformation in organic content, and have instituted measures to remove, reduce, or label this kind of content in order to limit its circulation. In addition, a majority of the platforms that we looked at offer dedicated reporting features that allow users to flag mis- and disinformation when it appears on their services, and many platforms have either continued or expanded their fact-checking partnerships. These really do mark major improvements and are positive steps toward instituting comprehensive and clear mechanisms for detecting misinformation, and for enforcing platform policies against misleading content and the accounts that spread it.

Justin Hendrix:

One of the things that seems apparent is that most of the platforms manage their elections and civic integrity efforts in a cyclical or episodic way. At this point, they still think of elections as events that occur on a calendar. And of course they are, but even in some of their public statements, they seem to tip their hand that they're not following up on their civic integrity or election policies as closely in the off-season. Do you think that is still an appropriate response, given what we've seen about the durability of some election misinformation?

Spandi Singh:

I'm definitely concerned about it because, like you said, we've seen many of these harmful narratives continue to spread. One of the major gaps we found in the Scorecard that relates to this is that there has been almost no transparency from platforms around which of their interventions worked and why. And if they didn't work, what subsequent changes have they made? Without this kind of information, it's really difficult for us to hold platforms accountable and push them to implement interventions that are actually effective, but it's also difficult to answer questions about which interventions are okay to roll back and which have to be permanent. So without a more engaged dialogue around data and impact, it's really difficult to make these kinds of determinations.

And I think this issue around data transparency and impact is one that we see in the content moderation space more broadly. I think it's really important for researchers, civil rights groups, platforms, and many others to come together to understand what data points we actually want platforms to disclose, and in what format. Who's the audience for this? Is it just vetted researchers? Is it through a transparency report? Is it through an ad library? That kind of multi-stakeholder dialogue is really critical to moving some of these conversations forward, rather than things staying the way they are right now, where platforms say, "Here's what we're doing," and everyone else just has to say, "Okay, great. Let us know how it goes."

Justin Hendrix:

You've looked at as many as ten platforms here, including some of the messaging apps, such as WhatsApp and Snap. When you step back from each of the measures, are there platforms that you think are slightly ahead, at least from a policy perspective, and ones that you feel are at the rear of the pack?

Spandi Singh:

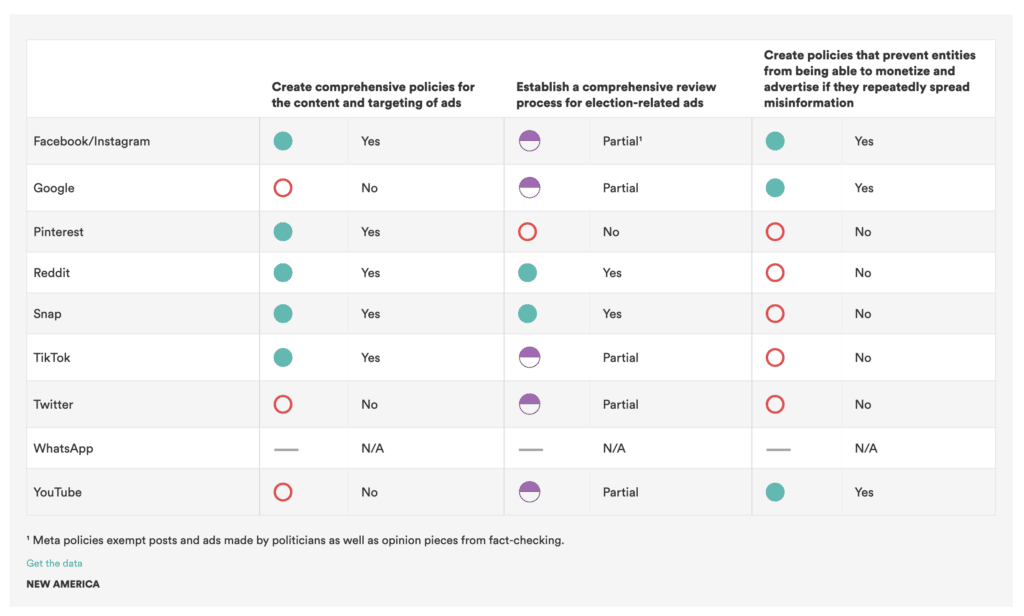

I'm honestly hesitant to say that one is ahead of another. I don't know; Quinn, feel free to jump in here as well. Like Quinn mentioned, we've seen a lot of progress in the area of basic policies and principles for moderating content, fact-checking, and so on. But aside from transparency, we've also seen almost no progress in the advertising space. Many platforms lack comprehensive policies that ban election misinformation in their advertising, and many lack strong review processes for taking down this kind of advertising. We've also seen very few platforms with policies that prevent super spreaders from spreading misinformation and disinformation. So while there is progress in some areas, there's no progress in others. It's really hard to say that one is ahead of another, because they're all really lacking movement in some key areas.

Quinn Anex-Ries:

Just a quick thing to add about the messaging platforms. When I was looking into WhatsApp, I thought about the point that Spandi raised earlier about gaps in transparency and data. WhatsApp is exploring some innovative potential solutions for addressing the spread of misleading election information on its platform. On the one hand, the encrypted nature of the service makes it hard for them to do a full analysis of the effectiveness of those measures, but without any research or access to data, it's hard to know whether some of those solutions are actually making an impact.

Justin Hendrix:

One of the categories where your Scorecard gives most of the platforms you looked at a green, indicating that they do in fact have policies in this regard, is moderating and curating misleading information. I found myself reading this alongside a report from Advance Democracy, which found platforms still rife with claims calling into question the outcome of the 2020 election. And I wondered: isn't there just an enormous distance between having the right policies in place and actually being able to do anything about them?

Spandi Singh:

This has definitely been a huge concern of ours as well. It's been really great to see platforms develop these policies, but again, there's been almost no transparency around the actual enforcement of these policies. In 2020, for example, we saw platforms continuously expanding or changing their policies in the run-up to the election in response to advocacy and the changing landscape. But one of my biggest questions was, "Okay, but are you able to train your automated systems and your human reviewers to adapt to these changes just as quickly? Are they able to enforce them the day that you announce them?"

And I think we see the same thing with ads as well. It's important to recognize that moderation is never going to be 100% perfect. There will always be errors, but again, it's important for us to understand when those errors are happening and what impact they're having, so that we can try to plug those gaps. Otherwise, we're just at the mercy of platforms who'll say, "Our automated systems are very effective. Don't worry." Right? But it's our job to worry. It's our job to hold the platforms accountable.

Justin Hendrix:

I want to ask you specifically about the video sharing sites: TikTok, which is slightly newer to the scene and obviously having to play a bit of catch-up, but also YouTube, which on your Scorecard consistently appears, at least, to be slightly behind on having pieces in place to meet the standard you've set out in this report for dealing with election information?

Spandi Singh:

I will say that with TikTok, I've seen some really interesting approaches to tackling misinformation and disinformation. Again, I can't promise that they're effective because of the lack of data, but I am really intrigued by how those interventions are developed and what kind of user research or other research they've done, because I think they're distinct in some ways from what we see on the other platforms. In terms of YouTube, yes, I think YouTube has received its fair share of criticism, both in our Scorecard and generally in the research community, especially because of its use of recommender algorithms and how that can drive polarization and push people down so-called rabbit holes of misleading information. But again, I think the biggest gaps that I see with YouTube, similar to many of the other platforms, are in transparency and around monetization and advertising.

Justin Hendrix:

And I want to come to you and ask you a little bit about an offshoot of this report. It didn't quite make it onto the Scorecard, but you looked at the universe of gaming platforms, including both the games themselves and live streaming and messaging platforms like Twitch and Discord. What did you learn about the role of election misinformation on these platforms? What's the threat dimension there?

Quinn Anex-Ries:

Absolutely. Well, I was interested in writing the piece because online gaming platforms have been relatively under-examined within the bigger discussion and research about the spread of election mis- and disinformation on online platforms. At the same time, there are pretty significant indicators that online gaming platforms may be equally susceptible to false and misleading election information. So I just want to flag two things to think about here. The first is that recent research by civil society organizations has shown that hate, harassment, extremism, and radicalization are growing issues across online multiplayer games. Extremists can exploit gaming platforms to radicalize users and spread extremist ideologies, which can include conspiracy theories such as QAnon. And at the same time, the spread of extremist content can contribute to and amplify hate and harassment specifically targeted at women, people of color, and LGBTQ folks.

And this really highlights the potential vulnerabilities that already exist within gaming platforms. Much like on the more traditional social media platforms that the Scorecard looked at, bad actors can exploit these vulnerabilities to spread misleading election information. The second thing to quickly add here is that there are also some pretty unique aspects to the configuration of the gaming ecosystem that present challenges to addressing misleading election information. The gaming ecosystem is made up of a couple of general types of platforms. The first category would be distribution services like Steam, along with interconnected devices such as Xbox or PlayStation consoles, which allow users to purchase and download games but also often include built-in messaging or social networking functionalities that allow people to interact with one another.

The other category is gaming-adjacent social messaging and live streaming platforms like Discord or Twitch, which provide different kinds of real-time interaction for gamers. The problem, though, is that this array of platforms within the gaming ecosystem means that any given user's in-game conduct could actually be subject to three or four different platform policies. This ends up creating a really complicated environment for both users and platforms to navigate.

Justin Hendrix:

My children often game, message, and stream all at the same time, so I have some sense of this. When you think about the scale of the problem, though, and just for any listener who may not be familiar with these services, what are we talking about here? Do you think of it as commensurate with a platform like Twitter?

Quinn Anex-Ries:

Absolutely. The number of adult online multiplayer gamers in the United States alone is almost 100 million people, which is a little shy of a third of the overall US population. That sits in between the total US-based users of Twitter and Facebook, right? So right in the middle of those two. That points to the potential scale of impact. The other thing to note is that this is a growing and lucrative industry that researchers predict will be worth over $219 billion in revenue in the next two years. So I think that helps put into perspective the scale of what the potential problem might look like here.

Justin Hendrix:

Spandi, since you published your report, I suppose there have been multiple announcements by the platforms about more specific actions they're taking ahead of the midterm elections. And I noticed in some cases, they're crowing about their investments in this area. I saw Meta's Nick Clegg for instance, throwing out a number, $5 billion invested in integrity-related efforts around the world. Do we have the baseline to know whether that's an impressive number or whether it's enough?

Spandi Singh:

No, I don't think we do, and I don't think we have the details to understand where that money is going and whether it's actually effective. I will say that the announcements that have come out since then haven't really changed our perception of where companies need to invest more resources. The announcements have been great, and it's great to see that platforms are thinking about the midterms, but most of them have solidified our understanding that there's been progress in content moderation policy and in sharing authoritative information about the elections, while they have not addressed any of the concerns we have around advertising, monetization, and transparency. So I think they're generally investing resources in these important areas, but largely ignoring other ones that also have fundamental consequences and are closely related to platform business models and to researchers' ability to hold these platforms accountable.

Justin Hendrix:

You mentioned at the start that, of course, these platforms have made a lot of progress since 2016, and I think that's certainly true. There's a lot more effort, but there's also been a lot more pain and accountability for them in the meantime, including many trips to Capitol Hill. Do you think it's possible we're approaching a kind of, I don't know, point of diminishing returns when it comes to continued effort here? I mean, unless political leaders and influencers stop trying to spread so many misleading ideas about elections, will it be hard for the platforms to make a lot more progress?

Spandi Singh:

I don't know if I would agree with that. I mean, I definitely hear the concerns around people at the top spreading misleading information, but it's not just that, right? There is also a vast population of everyday users who are increasingly susceptible to these kinds of conspiracy theories and information, and who then help amplify it. I think there's an interesting conversation to have around whether platforms are the only ones responsible for intervening. Should we be having media literacy classes in schools? Are there other community-based interventions we can look at? But to Quinn's point around gaming, I don't think this is an issue that's going away. When Quinn was doing his research, I was really thinking about how platforms are investing more in AR, VR, and the metaverse, and really doubling down on "this is the next phase of the internet."

And so many of these content issues will carry over into that space. And as Quinn was mentioning, in this sort of environment where multiple platforms' policies are operating and governing how a user interacts all at once, these issues will continue to be things that we have to think about. So again, I think it's really important for us to know right now what's been working well, so that once we theoretically reach this new phase of the internet, whenever it arrives, we are prepared with a good body of lessons on what worked, what didn't, and how we can make the future of this space more secure for users and for our democracy.

Justin Hendrix:

Is this ongoing work for you or is it episodic? Do you expect that OTI will continue to produce this Scorecard and have an update going into the 2024 cycle?

Spandi Singh:

That seems so far away. I think generally, we've done a lot of research around what best practices in this space look like. So I don't know if there'll be another Scorecard in the run up to the 2024 election, but I think we will generally continue working on pushing platforms to adopt our recommendations and generally take a stronger stance at tackling misinformation and disinformation.

I mean, I would just add that I think there's a couple of other areas of misinformation, disinformation, that could be significantly influenced by what we're seeing right now, like climate change and other kinds of categories, and other forms of health misinformation. So I'd be interested to see if there are any learnings that are taken from this current era and carried on into those spaces.

Justin Hendrix:

I think this baseline problem is a real problem. I found myself thinking about that yesterday, reading the Twitter document: what is the baseline? How many employees do we expect them to have? What is the right level of investment?

Spandi Singh:

Right. Or is it actually the number of employees or is it what they're working on, and how effectively they can work on it?

Justin Hendrix:

And what are the measures, and that sort of thing? I don't know. I mean, I'm split-brained about this; even in my question to you, I was split-brained. On one hand, I do think it is natural that we would at some point reach a point of diminishing returns in terms of what these platforms can do in the face of perpetual influencer- and political elite-driven lies about how our election system operates. If 30% of Americans want to believe that the moon's made of chocolate, we're not going to be able to strike that off every user-generated platform. But on the other hand, I don't think $5 billion's an impressive number, Facebook.

Spandi Singh:

Right. I mean, I think that's why community interventions are, to me, a really interesting space. I know that some of the big tech platforms funded community-level trainings, for example, in countries like India, where their platforms were really having a big impact on the spread of misleading information, but those trainings weren't happening at a scalable level. And obviously, there are a lot of political sensitivities around teaching media literacy in classrooms in different countries, but I do increasingly think that this is not just a tech platform problem. Platforms didn't come up with the concept of misinformation and disinformation. That's been around for ages.

Justin Hendrix:

Well, and in fact, if there is a next round of this research, I hope you'll come back and tell me about it. So Spandi, Quinn, thank you so much for speaking to me today.

Spandi Singh:

Thank you.

Quinn Anex-Ries:

Thanks so much.
